Debian - Slow boot waiting for network

Solution 1

Did you check if systemd-networkd-wait-online.service is disabled?

"""systemd-networkd-wait-online is a oneshot system service (see systemd.service(5)), that waits for the network to be configured. By default, it will wait for all links it is aware of and which are managed by systemd-networkd.service(8) to be fully configured or failed, and for at least one link to gain a carrier.""" https://manpages.debian.org/testing/systemd/systemd-networkd-wait-online.service.8.en.html

Also make sure your network is free of rogue servers first. A broadcasting DHCP server with invalid leases maybe?

Solution 2

I had exactly the same problem as you (I even referenced your serverfault question in this posting to the Debian User Forums)

My problem turned out to be another instance of "Boottime Entropy Starvation" (I'm guessing that wpa-supplicant needed some random data for key exchange, and that the kernel blocked while waiting for the random number generator to provide the random data). It turns out that it's a surprisingly common problem - unfortunately almost all the "so-called solutions" that I've seen simply suggest reducing the time-out. :-(

The fix, for me, was to install the havege daemon (this is another random data generator that starts early during the boot process, so there's plenty of entropy available when wpa-supplicant requests it), thus:

sudo apt install haveged

Enjoy!! :-)

More info:

https://www.debian.org/releases/stable/amd64/release-notes/ch-information.en.html#entropy-starvation

Solution 3

Installing ifupdown2 reduced the network time to ~6 seconds.

5.974s networking.service

1.904s smb.mount

773ms nftables.service

552ms systemd-logind.service

208ms systemd-journald.service

205ms dev-nvme0n1p2.device

96ms systemd-udev-trigger.service

90ms lvm2-monitor.service

79ms smbd.service

62ms chrony.service

53ms nmbd.service

50ms libvirtd.service

[Di Jun 25 05:21:06 2019] [drm] Initialized amdgpu 3.27.0 20150101 for 0000:42:00.0 on minor 0

[Di Jun 25 05:21:09 2019] igb 0000:05:00.0 enp5s0: igb: enp5s0 NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX

[Di Jun 25 05:21:09 2019] IPv6: ADDRCONF(NETDEV_CHANGE): enp5s0: link becomes ready

[Di Jun 25 05:21:11 2019] r8169 0000:08:00.0: firmware: direct-loading firmware rtl_nic/rtl8168e-3.fw

[Di Jun 25 05:21:11 2019] RTL8211E Gigabit Ethernet r8169-800:00: attached PHY driver [RTL8211E Gigabit Ethernet] (mii_bus:phy_addr=r8169-800:00, irq=IGNORE)

[Di Jun 25 05:21:11 2019] r8169 0000:08:00.0 enp8s0: No native access to PCI extended config space, falling back to CSI

[Di Jun 25 05:21:11 2019] IPv6: ADDRCONF(NETDEV_UP): enp8s0: link is not ready

[Di Jun 25 05:21:11 2019] bridge: filtering via arp/ip/ip6tables is no longer available by default. Update your scripts to load br_netfilter if you need this.

[Di Jun 25 05:21:11 2019] br0: port 1(enp9s0) entered blocking state

[Di Jun 25 05:21:11 2019] br0: port 1(enp9s0) entered disabled state

[Di Jun 25 05:21:11 2019] device enp9s0 entered promiscuous mode

[Di Jun 25 05:21:11 2019] RTL8211E Gigabit Ethernet r8169-900:00: attached PHY driver [RTL8211E Gigabit Ethernet] (mii_bus:phy_addr=r8169-900:00, irq=IGNORE)

[Di Jun 25 05:21:11 2019] br0: port 1(enp9s0) entered blocking state

[Di Jun 25 05:21:11 2019] br0: port 1(enp9s0) entered forwarding state

[Di Jun 25 05:21:11 2019] wireguard: loading out-of-tree module taints kernel.

[Di Jun 25 05:21:11 2019] wireguard: module verification failed: signature and/or required key missing - tainting kernel

[Di Jun 25 05:21:11 2019] wireguard: WireGuard 0.0.20190406 loaded. See www.wireguard.com for information.

[Di Jun 25 05:21:11 2019] wireguard: Copyright (C) 2015-2019 Jason A. Donenfeld <[email protected]>. All Rights Reserved.

[Di Jun 25 05:21:12 2019] br0: port 1(enp9s0) entered disabled state

[Di Jun 25 05:21:14 2019] r8169 0000:08:00.0 enp8s0: Link is Up - 1Gbps/Full - flow control off

[Di Jun 25 05:21:14 2019] IPv6: ADDRCONF(NETDEV_CHANGE): enp8s0: link becomes ready

[Di Jun 25 05:21:14 2019] r8169 0000:09:00.0 enp9s0: Link is Up - 1Gbps/Full - flow control off

[Di Jun 25 05:21:14 2019] br0: port 1(enp9s0) entered blocking state

[Di Jun 25 05:21:14 2019] br0: port 1(enp9s0) entered forwarding state

Maybe some weird timeout issues with the normal ifupdown? This definitely seems like a bug.

EDIT:

Alright, so it seems this didn't fix anything, since the interfaces were not actually fully "up". I noticed this because dnsmasq didn't work until a manual restart. This was "fixed" by using the following override for networking.service:

[Unit]

Before=network.target shutdown.target network-online.target

But alas, this returned me to square one:

51.036s networking.service

1.114s smb.mount

837ms nftables.service

557ms systemd-logind.service

211ms systemd-journald.service

199ms dev-nvme0n1p2.device

100ms lvm2-monitor.service

91ms systemd-udev-trigger.service

77ms smbd.service

62ms nmbd.service

58ms chrony.service

56ms libvirtd.service

55ms [email protected]

Related videos on Youtube

Kona98

Updated on September 18, 2022Comments

-

Kona98 almost 2 years

I have a Debian 10 Server that I think could/should boot faster, but I don't know what the problem is.

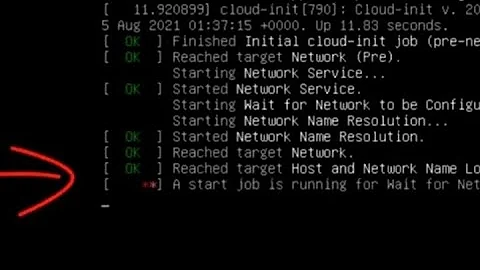

I see

A start job is running for Raise network interfaceswhen booting, and looking atsystemd-analyze blameit seems a lot of time is spend innetworking.service(I left out the services below 50ms).50.846s networking.service 1.872s smb.mount 717ms nftables.service 560ms ifupdown-pre.service 544ms systemd-logind.service 205ms systemd-journald.service 194ms dev-nvme0n1p2.device 88ms systemd-udev-trigger.service 83ms smbd.service 66ms libvirtd.service 61ms lvm2-monitor.service 60ms chrony.service 57ms [email protected] 50ms nmbd.serviceDoing a

systemctl stop networking.serviceandsystemctl start networking.servicetakes less than 2 seconds though.This is my /etc/network/interfaces for reference (br0 is used for VMs):

# This file describes the network interfaces available on your system # and how to activate them. For more information, see interfaces(5). source /etc/network/interfaces.d/* # The loopback network interface auto lo iface lo inet loopback auto enp5s0 iface enp5s0 inet static address 192.168.1.190 netmask 255.255.255.0 gateway 192.168.1.1 auto enp9s0 iface enp9s0 inet manual auto enp8s0 iface enp8s0 inet static address 10.36.14.242 netmask 255.255.255.0 post-up ip route add 10.0.0.0/8 via 10.36.14.1 dev enp8s0 pre-down ip route del 10.0.0.0/8 via 10.36.14.1 dev enp8s0 auto br0 iface br0 inet static address 10.36.15.11 netmask 255.255.255.0 bridge_ports enp9s0 bridge_stp off bridge_fd 0 auto wg-p2p iface wg-p2p inet static address 10.88.88.1 netmask 255.255.255.0 pre-up ip link add $IFACE type wireguard pre-up wg setconf $IFACE /etc/wireguard/$IFACE.conf post-down ip link del $IFACEI did see some posts about changing

autotoallow-hotplug, but the explanations make it sound like it just starts the interfaces without blocking the boot i.e. the time to reach the network would be the same. Changing the enp* interfaces to that did however improve at least the time to start routing. Pinging another network from another device after starting the server takes about 1 minute withautoand ~32 seconds withallow-hotplug(probably because the interfaces are started in parallel?), and I get a console immediately without theA start job is runningmessage. Changing br0 to it made that interface not start automatically though, so I left it.Also only when auto is active on the enp* interfaces, I get

A stop job is running for Raise network interfaceson shutdown, which takes about 50 seconds to complete.So I want to know if it's OK to leave the interfaces on

allow-hotplug(maybe there can be interface binding issues for some services?), and if there are any other problems I can fix to improve boot times.Edit:

Relevant output of

dmesg -Tis:[Mo Jun 24 13:16:48 2019] IPv6: ADDRCONF(NETDEV_UP): enp5s0: link is not ready [Mo Jun 24 13:16:51 2019] igb 0000:05:00.0 enp5s0: igb: enp5s0 NIC Link is Up 1000 Mbps Full Duplex, Flow Control: RX [Mo Jun 24 13:16:51 2019] IPv6: ADDRCONF(NETDEV_CHANGE): enp5s0: link becomes ready [Mo Jun 24 13:16:58 2019] r8169 0000:09:00.0: firmware: direct-loading firmware rtl_nic/rtl8168e-3.fw [Mo Jun 24 13:16:58 2019] RTL8211E Gigabit Ethernet r8169-900:00: attached PHY driver [RTL8211E Gigabit Ethernet] (mii_bus:phy_addr=r8169-900:00, irq=IGNORE) [Mo Jun 24 13:16:58 2019] r8169 0000:09:00.0 enp9s0: No native access to PCI extended config space, falling back to CSI [Mo Jun 24 13:16:58 2019] IPv6: ADDRCONF(NETDEV_UP): enp9s0: link is not ready [Mo Jun 24 13:17:01 2019] r8169 0000:09:00.0 enp9s0: Link is Up - 1Gbps/Full - flow control off [Mo Jun 24 13:17:01 2019] IPv6: ADDRCONF(NETDEV_CHANGE): enp9s0: link becomes ready [Mo Jun 24 13:17:08 2019] RTL8211E Gigabit Ethernet r8169-800:00: attached PHY driver [RTL8211E Gigabit Ethernet] (mii_bus:phy_addr=r8169-800:00, irq=IGNORE) [Mo Jun 24 13:17:08 2019] IPv6: ADDRCONF(NETDEV_UP): enp8s0: link is not ready [Mo Jun 24 13:17:11 2019] r8169 0000:08:00.0 enp8s0: Link is Up - 1Gbps/Full - flow control off [Mo Jun 24 13:17:11 2019] IPv6: ADDRCONF(NETDEV_CHANGE): enp8s0: link becomes ready [Mo Jun 24 13:17:18 2019] bridge: filtering via arp/ip/ip6tables is no longer available by default. Update your scripts to load br_netfilter if you need this. [Mo Jun 24 13:17:18 2019] br0: port 1(enp9s0) entered blocking state [Mo Jun 24 13:17:18 2019] br0: port 1(enp9s0) entered disabled state [Mo Jun 24 13:17:18 2019] device enp9s0 entered promiscuous mode [Mo Jun 24 13:17:18 2019] br0: port 1(enp9s0) entered blocking state [Mo Jun 24 13:17:18 2019] br0: port 1(enp9s0) entered forwarding state [Mo Jun 24 13:17:18 2019] IPv6: ADDRCONF(NETDEV_UP): br0: link is not ready [Mo Jun 24 13:17:19 2019] IPv6: ADDRCONF(NETDEV_CHANGE): br0: link becomes ready [Mo Jun 24 13:17:28 2019] wireguard: loading out-of-tree module taints kernel. [Mo Jun 24 13:17:28 2019] wireguard: module verification failed: signature and/or required key missing - tainting kernel [Mo Jun 24 13:17:28 2019] wireguard: WireGuard 0.0.20190406 loaded. See www.wireguard.com for information. [Mo Jun 24 13:17:28 2019] wireguard: Copyright (C) 2015-2019 Jason A. Donenfeld <[email protected]>. All Rights Reserved.Disabling any interface seems to gain me ~10 seconds per interface

-

fmyhr almost 5 yearsA fix may be coming in a future Linux kernel: Fixing getrandom() (article behind paywall for another week or so)

-

Chris_F almost 4 yearsInstalling haveged made no difference for me. It still takes over 4 minutes to initialize the four interfaces on my hp nc365t when they are set to auto.

Chris_F almost 4 yearsInstalling haveged made no difference for me. It still takes over 4 minutes to initialize the four interfaces on my hp nc365t when they are set to auto.