Decrease of transfer rate when copying a large amount of data

Unfortunately, this is normal and expected for your big-file use case. Your setup of two hard disks and a 50GB+ file rules out a lot of misleading talk about "slow devices", "slow buses", and "slow filesystems", and leaves you with the unexplained problem of a slow copy. You must have quite a bit of memory to get the performance you have for 30GB files. The system buffers are used and filled up, and after your copy command finishes they eventually get flushed to the target. This makes real timings and rates somewhat difficult to measure (even the `time` command will finish long before the buffers are finally flushed).
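To see the buffering effect described above for yourself, the Python sketch below writes a file and records two timestamps: when all data had been handed to the kernel, and when the file was closed after `os.fsync()` confirmed the data reached the device. Python is used only for illustration, and `timed_write` is a hypothetical helper, not part of any tool mentioned here. With `sync=False`, the second value only covers closing the file, so most of the data may still be sitting in the page cache.

```python
from __future__ import annotations

import os
import time


def timed_write(path: str, total_bytes: int, sync: bool,
                chunk_size: int = 1024 * 1024) -> tuple[float, float]:
    """Write total_bytes of zeros to path (hypothetical helper).

    Returns (seconds until all data was handed to the kernel,
             seconds until the file was closed, including fsync if sync=True).
    """
    chunk = b"\0" * chunk_size
    start = time.monotonic()
    with open(path, "wb") as f:
        remaining = total_bytes
        while remaining > 0:
            n = min(chunk_size, remaining)
            f.write(chunk[:n])
            remaining -= n
        f.flush()  # hand Python's own buffer to the kernel (page cache)
        after_writes = time.monotonic() - start
        if sync:
            os.fsync(f.fileno())  # wait until the kernel has written the data to the device
    after_close = time.monotonic() - start
    return after_writes, after_close
```

For example, `timed_write("/mnt/target/test.bin", 4 * 1024**3, sync=True)` (the path is a placeholder) should show a gap between the two values whenever a large share of the file was still buffered in memory; how big that gap is depends on your RAM and on the vm.dirty_* settings.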

The only "workaround" I have found is to use a copy command that lets you set up explicit buffers yourself, as tar or cpio can. Setting a 2MB buffer on tar let me speed up a 10MB/sec copy of a 50G file to about 35MB/sec, which is still much slower than the nominal 100MB/sec I get on smaller files (or in Windows).
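The idea behind the tar/cpio workaround, reading and writing in fixed, explicitly chosen chunks, can be sketched in a few lines. This is a minimal sketch, assuming a 2 MiB buffer to match the figure above; `copy_with_buffer` is a hypothetical name and this is not how tar itself is implemented. Whether a larger buffer helps on a given system has to be measured rather than assumed.

```python
import os

BUF_SIZE = 2 * 1024 * 1024  # 2 MiB, matching the tar buffer mentioned above


def copy_with_buffer(src: str, dst: str, bufsize: int = BUF_SIZE) -> int:
    """Copy src to dst using reads and writes of at most bufsize bytes.

    Returns the number of bytes copied.
    """
    copied = 0
    # buffering=0 gives raw file objects, so every read/write goes straight
    # to the kernel with exactly the buffer size chosen here.
    with open(src, "rb", buffering=0) as fin, open(dst, "wb", buffering=0) as fout:
        buf = bytearray(bufsize)
        view = memoryview(buf)
        while True:
            n = fin.readinto(buf)
            if not n:  # 0 bytes read means end of file
                break
            # A raw write may be partial, so loop until the chunk is written.
            written = 0
            while written < n:
                written += fout.write(view[written:n])
            copied += n
    return copied
```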

Another workaround, which may be a better solution, is to install the nocache package and use `nocache cp file target` to keep the copy from filling the system buffers and dragging the system to a crawl. A 43G file copy to /dev/null ran at 53MB/sec, better than tar with the large buffer.
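nocache is documented as intercepting a program's file calls and issuing `posix_fadvise(..., POSIX_FADV_DONTNEED)` so the kernel drops the cached pages. The sketch below applies the same hint directly inside a copy loop instead. It assumes Linux (`os.posix_fadvise` is not available everywhere), and `copy_without_caching` and the 64 MiB flush interval are hypothetical choices. The output is flushed before each hint because the kernel cannot discard dirty pages that have not been written yet; flushing regularly also keeps the amount of dirty memory bounded.

```python
import os

CHUNK = 1024 * 1024              # size of each read/write
FLUSH_EVERY = 64 * 1024 * 1024   # flush and drop cache after this many bytes (assumed value)


def _drop_cache(fd: int, offset: int, length: int) -> None:
    """Advise the kernel that a byte range is no longer needed (length 0 = to EOF)."""
    if not hasattr(os, "posix_fadvise"):  # e.g. not available on macOS
        return
    try:
        os.posix_fadvise(fd, offset, length, os.POSIX_FADV_DONTNEED)
    except OSError:
        pass  # the hint is advisory only; ignore filesystems that reject it


def copy_without_caching(src: str, dst: str,
                         chunk: int = CHUNK, flush_every: int = FLUSH_EVERY) -> int:
    """Copy src to dst, periodically flushing and dropping copied pages from the cache."""
    fin = os.open(src, os.O_RDONLY)
    try:
        fout = os.open(dst, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
        try:
            copied = 0   # total bytes copied so far
            flushed = 0  # bytes already flushed and dropped from the cache
            while True:
                data = os.read(fin, chunk)
                if not data:
                    break
                view = memoryview(data)
                while view:  # os.write may write less than requested
                    view = view[os.write(fout, view):]
                copied += len(data)
                if copied - flushed >= flush_every:
                    os.fsync(fout)  # dirty pages must reach the disk before they can be dropped
                    _drop_cache(fout, flushed, copied - flushed)
                    _drop_cache(fin, flushed, copied - flushed)
                    flushed = copied
            os.fsync(fout)
            _drop_cache(fout, flushed, 0)
            _drop_cache(fin, flushed, 0)
            return copied
        finally:
            os.close(fout)
    finally:
        os.close(fin)
```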

Some disks that use Shingled Magnetic Recording (SMR) become really slow for large writes. If your copy speed is really low but you still have lots of free memory buffers, this may be the cause.

Specific situations may be helped by tuning `vm.swappiness`, `vm.dirty_bytes`/`vm.dirty_ratio`, renice, ionice, nocache, pre-allocating file space, scheduling, and so on, but the basic problem remains that a system cannot work well without enough free memory.
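The vm.dirty_* settings decide how much written-but-unflushed data may pile up in memory before the kernel starts background writeback and, later, throttles the writing process. The arithmetic can be sketched as follows. This is a simplified model: the kernel applies the percentages to the memory it considers dirtyable (roughly free plus reclaimable pages), not to total RAM, and the function names are hypothetical.

```python
from __future__ import annotations

from pathlib import Path


def read_vm_setting(name: str) -> int:
    """Read an integer from /proc/sys/vm/<name>, e.g. "dirty_ratio" (Linux only)."""
    return int(Path("/proc/sys/vm", name).read_text().strip())


def dirty_thresholds(dirtyable_bytes: int,
                     dirty_background_ratio: int, dirty_ratio: int,
                     dirty_background_bytes: int = 0,
                     dirty_bytes: int = 0) -> tuple[int, int]:
    """Return approximate (background_threshold, throttle_threshold) in bytes.

    A non-zero *_bytes setting takes precedence over the matching *_ratio,
    which is a percentage of dirtyable memory.
    """
    thresh = dirty_bytes or dirtyable_bytes * dirty_ratio // 100
    bg = dirty_background_bytes or dirtyable_bytes * dirty_background_ratio // 100
    if bg >= thresh:  # keep the background limit below the throttling limit
        bg = thresh // 2
    return bg, thresh
```

With the common defaults of dirty_background_ratio = 10 and dirty_ratio = 20 and about 16 GiB of dirtyable memory, this gives roughly 1.6 GiB and 3.2 GiB of dirty data before background writeback and throttling start. Setting a small non-zero `vm.dirty_bytes` (for example 256 MiB) instead is one way to keep a large copy from flooding memory.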

See Launchpad bug 1208993 and add yourself to the "Does this affect me?" list.

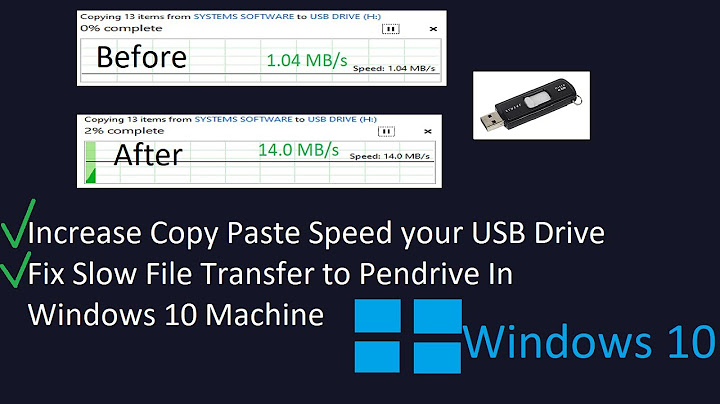

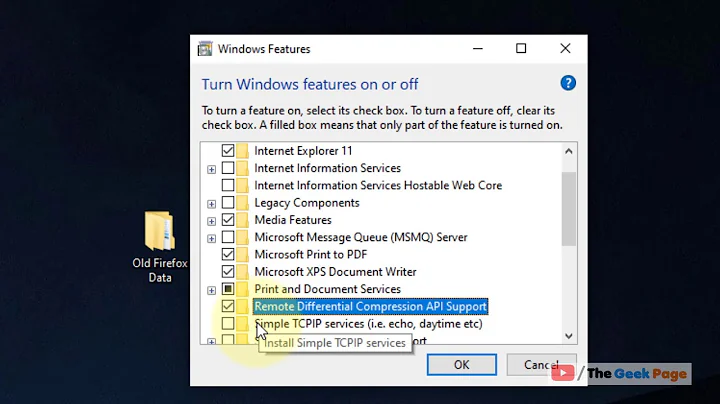


user3074126

Updated on September 18, 2022

-

user3074126 over 1 year

I am using an Ubuntu 16.04.3 LTS system (kernel 4.10.0-40-generic) with two HDDs and several partitions on each disk. When I copy data (<5GB) between the two disks, I get a transfer rate of around 70 MB/s. However, when I try to copy a large amount of data (>30GB) from one disk to the other, I notice several performance issues.

My question is whether this behaviour is normal and to be expected on Linux systems.

Can anyone explain this to me and advise me how to avoid this performance decrease?

Below I describe my observations. In the example I copied a 54GB disk image file from sda8 (325 GB partition) to sdb8 (1.6TB partition).

1) Transfer rate decreases and iowait increases

When I copy more than 50 GB, I notice that the transfer rate gradually decreases. I am monitoring the performance using glances, atop, iotop and iostat. At 30GB of progress the transfer rate has dropped to 58 MB/s, at 46 GB to 36MB/s, and at 52GB to 12 MB/s. After that the transfer rate really starts to fluctuate and drops below 1MB/s. At the same time I see iowait increasing from 0% initially up to 62% at the end. During copying, disk sda has a 'busy' percentage between 40% and 60%, while disk sdb is 100% busy all the time. Not only does the transfer rate drop, my system also becomes less responsive. I expect the iowait to be the cause of that.

Is this normal behaviour? How can the decrease in performance be avoided?

2) IOwait stays high after copying

When copying has ended, I notice that iowait is still high and only gradually drops to normal values. This takes a couple of minutes. I think that during that time data is still being written to sdb at a rate of around 1 or 2 MB/s. Using iotop, it looks like the process "jbd2/sdb4-8" is causing this disk write. While iowait is decreasing, my system still suffers from bad responsiveness. I also see that disk sda is not busy anymore, but disk sdb is still 100% busy.
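One way to check whether data is still being written after the copy command returns is to watch the `Dirty` and `Writeback` fields of /proc/meminfo, which report (in kB) data waiting to be written to disk and data currently being written. A minimal sketch, assuming Linux; the helper names are hypothetical:

```python
from __future__ import annotations

import time
from pathlib import Path


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo content into {field: numeric value} (mostly kB)."""
    result = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            result[key.strip()] = int(parts[0])
    return result


def pending_writes_kb(meminfo: dict[str, int]) -> int:
    """Data not yet on disk: dirty pages plus pages currently under writeback."""
    return meminfo.get("Dirty", 0) + meminfo.get("Writeback", 0)


def watch(interval: float = 5.0, samples: int = 12) -> None:
    """Print pending write-back every `interval` seconds (Linux only)."""
    for _ in range(samples):
        info = parse_meminfo(Path("/proc/meminfo").read_text())
        print(f"Dirty {info.get('Dirty', 0)} kB, "
              f"Writeback {info.get('Writeback', 0)} kB, "
              f"pending {pending_writes_kb(info)} kB")
        time.sleep(interval)
```

If the pending figure is still large when the copy returns and shrinks slowly while sdb stays 100% busy, the lingering iowait is the kernel flushing buffered data.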

What causes my system to have bad responsiveness for a couple of minutes after the copying action?

Can this be avoided?

3) Copying from a network drive increases the effects

When I copy from my Synology NAS to my local disk (sdb8), the effects are even worse. First the network drive is mounted on my system and then copying is started. Initially a transfer rate of 70MB/s is also reached, but the transfer rate drops much faster. After a couple of GB the transfer rate has dropped far below 1 MB/s. I tried copying with drag and drop in Nautilus, the `cp` command, the `rsync` command and the FreeFileSync application, but all showed poor performance.

What could cause the performance decrease to be worse when using network drives?

Additional information

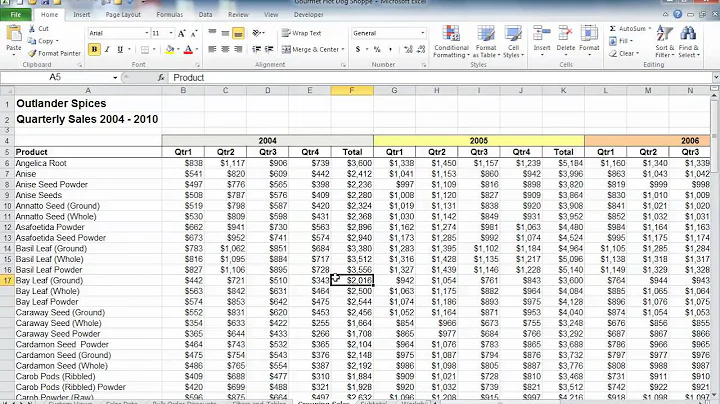

During copying, `iostat -dx 5` was used to monitor the disk performance. At around 5 GB of copying progress, it shows:

| Device | rrqm/s | wrqm/s | r/s | w/s | rkB/s | wkB/s | avgrq-sz | avgqu-sz | await | r_await | w_await | svctm | %util |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| sda | 0,00 | 0,00 | 530,40 | 0,00 | 68064,80 | 0,00 | 256,65 | 1,62 | 3,06 | 3,06 | 0,00 | 1,63 | 86,72 |
| sdb | 0,00 | 18767,20 | 0,20 | 112,40 | 23,20 | 73169,60 | 1300,05 | 144,32 | 1345,39 | 308,00 | 1347,23 | 8,88 | 100,00 |

When copying has progressed to around 52 GB, it shows:

| Device | rrqm/s | wrqm/s | r/s | w/s | rkB/s | wkB/s | avgrq-sz | avgqu-sz | await | r_await | w_await | svctm | %util |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| sda | 0,00 | 0,00 | 64,60 | 0,00 | 8268,80 | 0,00 | 256,00 | 0,22 | 3,41 | 3,41 | 0,00 | 1,76 | 11,36 |
| sdb | 0,00 | 1054,40 | 0,20 | 10,60 | 6,40 | 6681,60 | 1238,52 | 148,56 | 9458,00 | 0,00 | 9636,45 | 92,59 | 100,00 |

| Device | rrqm/s | wrqm/s | r/s | w/s | rkB/s | wkB/s | avgrq-sz | avgqu-sz | await | r_await | w_await | svctm | %util |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| sda | 0,00 | 0,00 | 50,20 | 0,00 | 6425,60 | 0,00 | 256,00 | 0,16 | 3,09 | 3,09 | 0,00 | 1,64 | 8,24 |
| sdb | 0,00 | 2905,80 | 0,40 | 17,00 | 8,80 | 10289,60 | 1183,72 | 141,86 | 10199,77 | 652,00 | 10424,42 | 57,47 | 100,00 |

I realize that these are multiple questions, but I suspect they all have the same cause and hope that someone can clarify this for me.
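The iostat samples above can be cross-checked with a little arithmetic. This sketch assumes sysstat's column meanings (throughput in kB/s, `avgrq-sz` in 512-byte sectors) and the decimal-comma locale of the output above; `parse_row`, `avg_request_kb` and `write_mib_per_s` are hypothetical helpers.

```python
from __future__ import annotations

# Column order of the `iostat -dx` output quoted above (after the device name).
FIELDS = ["rrqm/s", "wrqm/s", "r/s", "w/s", "rkB/s", "wkB/s",
          "avgrq-sz", "avgqu-sz", "await", "r_await", "w_await", "svctm", "%util"]


def parse_row(line: str) -> tuple[str, dict[str, float]]:
    """Parse one `iostat -dx` device row printed with decimal commas."""
    device, *values = line.split()
    nums = [float(v.replace(",", ".")) for v in values]
    if len(nums) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} values, got {len(nums)}")
    return device, dict(zip(FIELDS, nums))


def avg_request_kb(row: dict[str, float]) -> float:
    """Average request size in kB, from throughput divided by request rate."""
    requests = row["r/s"] + row["w/s"]
    return (row["rkB/s"] + row["wkB/s"]) / requests if requests else 0.0


def write_mib_per_s(row: dict[str, float]) -> float:
    """Write throughput in MiB/s."""
    return row["wkB/s"] / 1024
```

For the sdb row at 5 GB this gives about 650 kB per request (matching avgrq-sz / 2) and a write rate of about 71 MiB/s; for the first sdb row at 52 GB it gives about 6.5 MiB/s, while %util stays at 100 and w_await grows from about 1.3 s to about 9.6 s.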

-

thomasrutter over 6 years

I was going to mention that HDD transfer rates are higher on the outer tracks, where data starts, and lower on the inner tracks, where data ends, but that would not explain why it would slow down so drastically to ~1MB/s.

-

jdwolf over 6 years

Could be related to ext4's delayed allocation. However, on its own that shouldn't cause such a regression.

-

user3074126 over 6 years

Thanks for your reply. In the meantime I have tried some additional tests, and what I observe is in line with your answer.