Difference between logistic regression and softmax regression

Solution 1

There are only minor differences between using multiple logistic regression models and using a single softmax output.

Essentially, you can either map an input of size d to a single output k times, or map an input of size d to k outputs a single time. However, multiple logistic regression models are more confusing to manage and tend to perform worse in practice. This is because most libraries (TensorFlow, Caffe, Theano) are implemented in low-level compiled languages and are highly optimized. Managing multiple logistic regression models would likely be handled at a higher level, outside that optimized code, so it should be avoided.
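The two mappings can be sketched in plain Python. This is only an illustration: the weights `W`, biases `b`, and input `x` are made-up values, and the helper names are hypothetical. It feeds the same linear scores through both routes, to show that renormalized independent sigmoids and a joint softmax generally give different probabilities even when they agree on the top class.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def linear_scores(W, b, x):
    """One score per class: z_j = w_j . x + b_j."""
    return [sum(w_i * x_i for w_i, x_i in zip(w, x)) + b_j
            for w, b_j in zip(W, b)]

# Hypothetical parameters: d = 2 input features, k = 3 classes.
W = [[1.0, -0.5], [0.2, 0.8], [-1.0, 0.3]]
b = [0.0, 0.1, -0.2]
x = [0.5, 1.5]

scores = linear_scores(W, b, x)

# k separate logistic models: each score is squashed on its own,
# then the k outputs are renormalized to sum to one.
ovr_raw = [sigmoid(z) for z in scores]
ovr_probs = [p / sum(ovr_raw) for p in ovr_raw]

# One softmax model: all k scores are normalized jointly.
softmax_probs = softmax(scores)

print(ovr_probs)
print(softmax_probs)
```

Both lists sum to one and pick the same class here, but the probability values differ, so the two approaches are not interchangeable in general.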

Solution 2

Echoing what others have already conveyed.

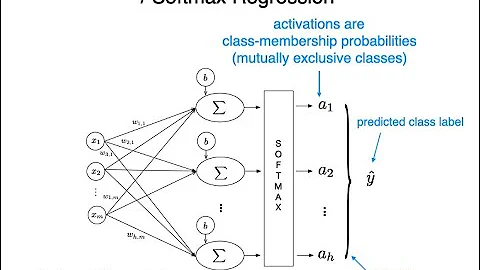

- Softmax Regression is a generalization of Logistic Regression that summarizes a 'k' dimensional vector of arbitrary values to a 'k' dimensional vector of values bounded in the range (0, 1).

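- In Logistic Regression we assume that the labels are binary (0 or 1). However, Softmax Regression allows one to handle 'k' classes.

- Hypothesis function:

  - LR: $h_\theta(x) = \dfrac{1}{1 + \exp(-\theta^\top x)}$

  - Softmax Regression: $h_\theta(x)_j = P(y = j \mid x; \theta) = \dfrac{\exp(\theta_j^\top x)}{\sum_{l=1}^{k} \exp(\theta_l^\top x)}$ for $j = 1, \dots, k$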

Reference: http://ufldl.stanford.edu/tutorial/supervised/SoftmaxRegression/
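As a rough sketch, the two hypothesis functions could be written as follows in plain Python. The function names and the parameter values are illustrative assumptions, not code from the referenced tutorial; bias terms are omitted for brevity.

```python
import math

def logistic_hypothesis(theta, x):
    """P(y = 1 | x) for binary labels, with one parameter vector."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return 1.0 / (1.0 + math.exp(-z))

def softmax_hypothesis(thetas, x):
    """[P(y = 1 | x), ..., P(y = k | x)], with one parameter vector per class."""
    scores = [sum(t * xi for t, xi in zip(theta, x)) for theta in thetas]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

x = [1.0, 2.0]  # made-up input

# theta . x = 0.5 - 0.5 = 0, so this is exactly 0.5
p_binary = logistic_hypothesis([0.5, -0.25], x)

# Three hypothetical classes, so three probabilities in (0, 1) that sum to one
p_multi = softmax_hypothesis([[0.5, -0.25], [0.1, 0.2], [-0.3, 0.4]], x)

print(p_binary, p_multi)
```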

Solution 3

You can think of logistic regression as a binary classifier, and softmax regression as one way (there are other ways) to implement a multi-class classifier. The number of output units in softmax regression is equal to the number of classes you want to predict.

Example: In digit recognition, you have 10 classes to predict [0-9], so your model outputs 10 probabilities, one for each class, and in practice we choose the class with the highest probability as the predicted class.

From the example above, it can be seen that the number of outputs of a softmax function is equal to the number of classes. These outputs are the probabilities of each class, so they sum to one. For the algebraic explanation, please take a look at the Stanford University website, which has a good and short explanation of this topic.
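The digit example can be sketched like this. The ten raw scores are invented for illustration; in a real model they would come from the learned weights.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for the digits 0 through 9 (index = digit).
scores = [0.1, -1.2, 0.3, 2.5, 0.0, -0.7, 1.1, 0.4, -0.3, 0.2]

probs = softmax(scores)  # 10 probabilities, one per class

# Predicted class = index of the highest probability.
predicted_digit = max(range(len(probs)), key=lambda i: probs[i])

print(predicted_digit)  # 3, since index 3 has the largest score
```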

Link: http://ufldl.stanford.edu/tutorial/supervised/SoftmaxRegression/

Difference: The link above describes in detail that a softmax with only 2 classes is the same as logistic regression. Therefore, it can be said that the major difference is only the naming convention: we call it logistic regression when dealing with a 2-class problem, and softmax when dealing with a multinomial (more than 2 classes) problem.

Note: It is worth remembering that softmax regression can also be used in other models, such as neural networks.

Hope this helps.

Solution 4

You can relate logistic regression to binary softmax regression by transforming the pair of latent model outputs (z1, z2) into z = z1 - z2 and applying the logistic function:

softmax(z1, z2) = exp(z1)/(exp(z1) + exp(z2)) = exp(z1 - z2)/(exp(z1-z2) + exp(0)) = exp(z)/(exp(z) + 1)
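The identity above can be checked numerically with a short sketch. The helper names and the test pairs are arbitrary choices made for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax2_first(z1, z2):
    """First component of a two-class softmax."""
    return math.exp(z1) / (math.exp(z1) + math.exp(z2))

# Arbitrary (z1, z2) pairs chosen for the check.
pairs = [(0.0, 0.0), (1.5, -0.5), (-2.0, 3.0), (4.0, 4.5)]

# Largest absolute gap between softmax(z1, z2) and sigmoid(z1 - z2).
max_gap = max(abs(softmax2_first(z1, z2) - sigmoid(z1 - z2))
              for z1, z2 in pairs)

print(max_gap)  # zero up to floating-point rounding
```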


Xuan Wang

Updated on July 09, 2022

Comments

- Xuan Wang (almost 2 years): I know that logistic regression is for binary classification and softmax regression is for multi-class problems. Would there be any difference if I trained several logistic regression models on the same data and normalized their results to get a multi-class classifier, instead of using one softmax model? I assume the result would be the same. Can I say: "every multi-class classifier is the cascaded result of binary classifiers" (except neural networks)?

- mcdowella (about 8 years): Be aware that there is a standard way of adapting logistic regression to multi-class problems, which is explained e.g. in en.wikipedia.org/wiki/Multinomial_logistic_regression with multiple interpretations, one of which mentions softmax.

- Xuan Wang (about 8 years): Thank you very much, it is really helpful.

- Lerner Zhang (over 7 years): I have written an answer about that on stats.stackexchange.com. I hope it helps.

- Att Righ (over 2 years): Presumably this applies in the case where you are fitting a linear model as the argument of the softmax. This transformation can be achieved through the weights instead, and you can write a formula to translate one set of weights into another such that the two methods of fitting are "equivalent" for binary classifiers.
