How to apply machine learning to fuzzy matching

The main advantage of using machine learning is the time savings.

It is very likely that, given enough time, you could hand-tune weights and come up with matching rules that are very good for your particular dataset. A machine learning approach could have a hard time outperforming a hand-made system customized for that dataset.

However, building a good matching system by hand will probably take days. If you use an existing ML-for-matching tool like Dedupe, good weights and rules can be learned in an hour (including setup time).
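To make the "learned weights" idea concrete, here is a minimal sketch of one common approach. It assumes you already compute per-attribute similarity scores and have a handful of candidate pairs that a person has labeled as duplicate or not, and it fits a logistic regression whose coefficients play the role of the hand-tuned weights. This is not Dedupe's API or its internal implementation; the training pairs, the three attributes (name, address, phone), and the names `train_weights` and `match_probability` are hypothetical.

```python
import math

# Hypothetical labeled pairs: per-attribute similarities in [0, 1]
# (name, address, phone) and a human label: 1 = duplicate, 0 = distinct.
TRAINING_PAIRS = [
    ([0.95, 0.90, 1.0], 1),
    ([0.90, 0.80, 1.0], 1),
    ([0.92, 0.70, 0.0], 1),
    ([0.20, 0.10, 0.0], 0),
    ([0.40, 0.30, 0.0], 0),
    ([0.15, 0.85, 0.0], 0),
]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def match_probability(features, weights, bias):
    """Weighted sum of similarities squashed into a match probability."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, features)))


def train_weights(pairs, epochs=2000, learning_rate=0.5):
    """Fit logistic-regression weights and bias with batch gradient descent."""
    n_features = len(pairs[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in pairs:
            # Gradient of the log-loss is (prediction - label) * feature.
            error = match_probability(features, weights, bias) - label
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        weights = [w - learning_rate * g / len(pairs) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(pairs)
    return weights, bias


if __name__ == "__main__":
    weights, bias = train_weights(TRAINING_PAIRS)
    print("learned weights:", [round(w, 2) for w in weights], "bias:", round(bias, 2))
    print("P(match):", round(match_probability([0.9, 0.8, 1.0], weights, bias), 3))
```

Because the model outputs a match probability instead of an arbitrary score, picking a cut-off becomes a question of how many false merges versus missed duplicates you can tolerate.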

So, if you have already built a matching system that is performing well on your data, it may not be worth investigating ML. But, if this is a new data project, then it almost certainly will be.

Updated on June 30, 2022

blackgreen almost 2 years

Let's say that I have an MDM (Master Data Management) system whose primary purpose is to detect and prevent duplicate records.

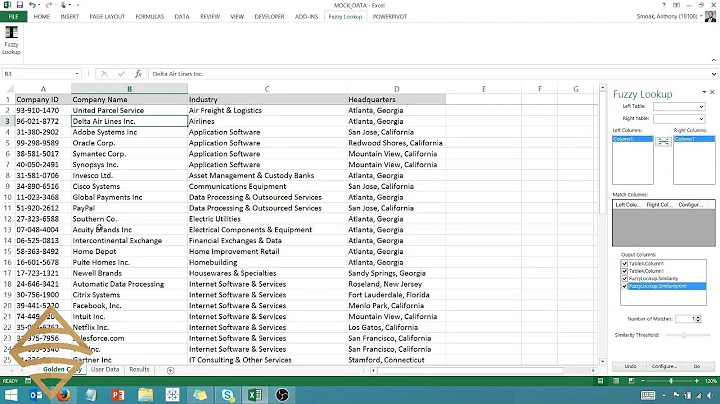

Every time a sales rep enters a new customer into the system, my MDM platform checks the existing records: it computes the Levenshtein, Jaccard, or some other distance between pairs of words, phrases, or attributes, applies weights and coefficients, and outputs a similarity score.

Your typical fuzzy matching scenario.
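As a rough illustration of that weighted pipeline, here is a minimal sketch that assumes three attributes: a normalized Levenshtein similarity for the name, token-level Jaccard similarity for the address, and an exact match for the phone. The weights in `FIELD_RULES`, the `MATCH_THRESHOLD` of 0.8, and the sample records are hypothetical values chosen only for illustration, not taken from any MDM product.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance with unit-cost insertions, deletions and substitutions."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # delete ca
                current[j - 1] + 1,            # insert cb
                previous[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        previous = current
    return previous[-1]


def levenshtein_similarity(a: str, b: str) -> float:
    """Edit distance rescaled to [0, 1], where 1.0 means identical strings."""
    if not a and not b:
        return 1.0
    return 1.0 - levenshtein(a, b) / max(len(a), len(b))


def jaccard_similarity(a: str, b: str) -> float:
    """Overlap of the two whitespace-separated token sets."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def exact_match(a: str, b: str) -> float:
    return 1.0 if a == b else 0.0


# Hypothetical hand-tuned configuration: (comparator, weight) per attribute.
FIELD_RULES = {
    "name": (levenshtein_similarity, 0.5),
    "address": (jaccard_similarity, 0.3),
    "phone": (exact_match, 0.2),
}
MATCH_THRESHOLD = 0.8  # hypothetical cut-off for flagging a duplicate


def similarity_score(rec_a: dict, rec_b: dict) -> float:
    """Weighted average of per-attribute similarities on normalized values."""
    total_weight = sum(weight for _, weight in FIELD_RULES.values())
    score = sum(
        weight * compare(rec_a[field].strip().lower(), rec_b[field].strip().lower())
        for field, (compare, weight) in FIELD_RULES.items()
    )
    return score / total_weight


EXISTING = {"name": "Acme Corporation", "address": "12 Main Street Springfield", "phone": "555-0100"}
INCOMING = {"name": "Acme Corp.", "address": "12 Main St Springfield", "phone": "555-0100"}

if __name__ == "__main__":
    score = similarity_score(EXISTING, INCOMING)
    print(f"score={score:.3f}, duplicate={score >= MATCH_THRESHOLD}")
```

With these made-up weights the example pair falls below the threshold even though a person would likely call it a duplicate, which is the kind of near-miss that sends you back to tuning weights by hand.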

I would like to know if it makes sense at all to apply machine learning techniques to optimize the matching output, i.e. find duplicates with maximum accuracy.

And where exactly does it make the most sense?

- optimizing the weights of the attributes?

- increasing the algorithm's confidence by predicting the outcome of the match?

- learning the matching rules that I would otherwise configure into the algorithm?

- something else?

There's also this excellent answer on the topic, but I couldn't tell whether the author actually used ML or not.

Also, my understanding is that weighted fuzzy matching is already a good enough solution, probably even from a financial perspective, since whenever you deploy such an MDM system you have to do some analysis and preprocessing anyway, whether that means manually encoding the matching rules or training an ML algorithm.

So I'm not sure that adding ML would represent a significant value proposition.

Any thoughts are appreciated.

ImDarrenG about 7 years

My intuition is that the incremental gain you would achieve would not justify the effort. What would be interesting is to use natural language processing/understanding to provide additional context when searching for possible duplicates, but it would be no small project!

ImDarrenG about 7 years

If you do pursue this project, one thing to watch out for is the essentially binary outcome of your task (match vs. no match), combined with a potentially imbalanced dataset (more non-matches than matches). You could end up with a model that looks very accurate but is actually just telling you what you already know.
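The accuracy trap described in that comment is easy to reproduce. The sketch below assumes a hypothetical evaluation set of 1,000 candidate pairs containing only 10 true duplicates and scores a "model" that always answers "no match"; the counts and the `evaluate` helper are made up for illustration.

```python
def evaluate(y_true, y_pred):
    """Accuracy, precision and recall for binary labels (1 = match, 0 = no match)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    accuracy = (tp + tn) / len(y_true)
    # Precision/recall are defined as 0.0 when their denominators are empty.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


# Hypothetical evaluation set: 10 duplicates among 1,000 candidate pairs.
y_true = [1] * 10 + [0] * 990
always_no_match = [0] * len(y_true)

if __name__ == "__main__":
    print(evaluate(y_true, always_no_match))
    # {'accuracy': 0.99, 'precision': 0.0, 'recall': 0.0}
```

Reporting precision and recall on the match class (or looking at the confusion matrix directly) exposes what the 99% accuracy hides: this "model" found none of the duplicates.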
