Difference between numpy dot() and Python 3.5+ matrix multiplication @

Solution 1

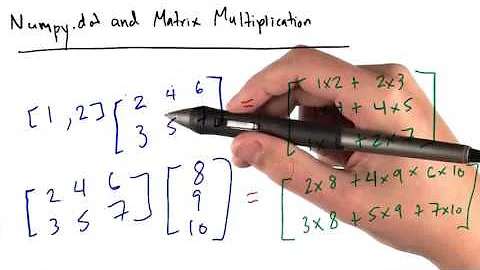

The @ operator calls the array's __matmul__ method, not dot. This method is also present in the API as the function np.matmul.

>>> a = np.random.rand(8,13,13)

>>> b = np.random.rand(8,13,13)

>>> np.matmul(a, b).shape

(8, 13, 13)

From the documentation:

matmuldiffers fromdotin two important ways.

- Multiplication by scalars is not allowed.

- Stacks of matrices are broadcast together as if the matrices were elements.

The last point makes it clear that dot and matmul methods behave differently when passed 3D (or higher dimensional) arrays. Quoting from the documentation some more:

For matmul:

If either argument is N-D, N > 2, it is treated as a stack of matrices residing in the last two indexes and broadcast accordingly.

For np.dot:

For 2-D arrays it is equivalent to matrix multiplication, and for 1-D arrays to inner product of vectors (without complex conjugation). For N dimensions it is a sum product over the last axis of a and the second-to-last of b

Solution 2

Just FYI, @ and its numpy equivalents dot and matmul are all equally fast. (Plot created with perfplot, a project of mine.)

Code to reproduce the plot:

import perfplot

import numpy

def setup(n):

A = numpy.random.rand(n, n)

x = numpy.random.rand(n)

return A, x

def at(data):

A, x = data

return A @ x

def numpy_dot(data):

A, x = data

return numpy.dot(A, x)

def numpy_matmul(data):

A, x = data

return numpy.matmul(A, x)

perfplot.show(

setup=setup,

kernels=[at, numpy_dot, numpy_matmul],

n_range=[2 ** k for k in range(15)],

)

Solution 3

The answer by @ajcr explains how the dot and matmul (invoked by the @ symbol) differ. By looking at a simple example, one clearly sees how the two behave differently when operating on 'stacks of matricies' or tensors.

To clarify the differences take a 4x4 array and return the dot product and matmul product with a 3x4x2 'stack of matricies' or tensor.

import numpy as np

fourbyfour = np.array([

[1,2,3,4],

[3,2,1,4],

[5,4,6,7],

[11,12,13,14]

])

threebyfourbytwo = np.array([

[[2,3],[11,9],[32,21],[28,17]],

[[2,3],[1,9],[3,21],[28,7]],

[[2,3],[1,9],[3,21],[28,7]],

])

print('4x4*3x4x2 dot:\n {}\n'.format(np.dot(fourbyfour,threebyfourbytwo)))

print('4x4*3x4x2 matmul:\n {}\n'.format(np.matmul(fourbyfour,threebyfourbytwo)))

The products of each operation appear below. Notice how the dot product is,

...a sum product over the last axis of a and the second-to-last of b

and how the matrix product is formed by broadcasting the matrix together.

4x4*3x4x2 dot:

[[[232 152]

[125 112]

[125 112]]

[[172 116]

[123 76]

[123 76]]

[[442 296]

[228 226]

[228 226]]

[[962 652]

[465 512]

[465 512]]]

4x4*3x4x2 matmul:

[[[232 152]

[172 116]

[442 296]

[962 652]]

[[125 112]

[123 76]

[228 226]

[465 512]]

[[125 112]

[123 76]

[228 226]

[465 512]]]

Solution 4

In mathematics, I think the dot in numpy makes more sense

dot(a,b)_{i,j,k,a,b,c} =

since it gives the dot product when a and b are vectors, or the matrix multiplication when a and b are matrices

As for matmul operation in numpy, it consists of parts of dot result, and it can be defined as

>matmul(a,b)_{i,j,k,c} =

So, you can see that matmul(a,b) returns an array with a small shape, which has smaller memory consumption and make more sense in applications. In particular, combining with broadcasting, you can get

matmul(a,b)_{i,j,k,l} =

for example.

From the above two definitions, you can see the requirements to use those two operations. Assume a.shape=(s1,s2,s3,s4) and b.shape=(t1,t2,t3,t4)

-

To use dot(a,b) you need

- t3=s4;

-

To use matmul(a,b) you need

- t3=s4

- t2=s2, or one of t2 and s2 is 1

- t1=s1, or one of t1 and s1 is 1

Use the following piece of code to convince yourself.

Code sample

import numpy as np

for it in xrange(10000):

a = np.random.rand(5,6,2,4)

b = np.random.rand(6,4,3)

c = np.matmul(a,b)

d = np.dot(a,b)

#print 'c shape: ', c.shape,'d shape:', d.shape

for i in range(5):

for j in range(6):

for k in range(2):

for l in range(3):

if not c[i,j,k,l] == d[i,j,k,j,l]:

print it,i,j,k,l,c[i,j,k,l]==d[i,j,k,j,l] #you will not see them

Solution 5

Here is a comparison with np.einsum to show how the indices are projected

np.allclose(np.einsum('ijk,ijk->ijk', a,b), a*b) # True

np.allclose(np.einsum('ijk,ikl->ijl', a,b), a@b) # True

np.allclose(np.einsum('ijk,lkm->ijlm',a,b), a.dot(b)) # True

Related videos on Youtube

blaz

Updated on July 08, 2022Comments

-

blaz almost 2 years

I recently moved to Python 3.5 and noticed the new matrix multiplication operator (@) sometimes behaves differently from the numpy dot operator. In example, for 3d arrays:

import numpy as np a = np.random.rand(8,13,13) b = np.random.rand(8,13,13) c = a @ b # Python 3.5+ d = np.dot(a, b)The

@operator returns an array of shape:c.shape (8, 13, 13)while the

np.dot()function returns:d.shape (8, 13, 8, 13)How can I reproduce the same result with numpy dot? Are there any other significant differences?

-

user2357112 over 8 yearsYou can't get that result out of dot. I think people generally agreed that dot's handling of high-dimension inputs was the wrong design decision.

-

hpaulj over 8 yearsWhy didn't they implement the

matmulfunction years ago?@as an infix operator is new, but the function works just as well without it.

-

-

Alex K over 8 yearsThe confusion here is probably because of the release notes, which directly equate the "@" symbol to the dot() function of numpy in the example code.

Alex K over 8 yearsThe confusion here is probably because of the release notes, which directly equate the "@" symbol to the dot() function of numpy in the example code. -

Ronak Agrawal over 6 yearsdot(a, b) [i,j,k,m] = sum(a[i,j,:] * b[k,:,m]) ------- like documentation says: it is a sum product over the last axis of a and the second-to-last axis of b:

-

Subhaneil Lahiri over 4 years

Subhaneil Lahiri over 4 yearsnp.matmulalso gives the dot product on vectors and the matrix product on matrices. -

Nathan almost 4 yearsGood catch however, its a 3x4x2. Another way to build the matrix would be

Nathan almost 4 yearsGood catch however, its a 3x4x2. Another way to build the matrix would bea = np.arange(24).reshape(3, 4, 2)which would create an array with the dimensions 3x4x2. -

Grzegorz Krug about 3 yearsAnswer above is suggesting that these methods are not the same

Grzegorz Krug about 3 yearsAnswer above is suggesting that these methods are not the same -

hpaulj almost 2 years

np.dotis converting the dataframe to array, and returning an array, (200,3) witth (3,)=.(200,). The error withmatmulis produced by pandas, which is trying make a 3 column frame from that array. I suspect a full traceback will show that.

![matrix multiplication python using numpy [using @ operator, matmul and dot, etc.]](https://i.ytimg.com/vi/zFg3OssYANU/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLADYgQ1NaxmKmtf_YYvJnNjGZYVrQ)