Does Google ignore robots.txt?

Solution 1

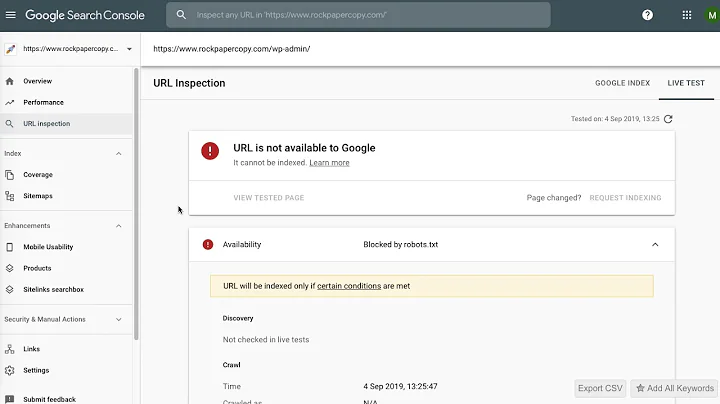

Google can still discover sites blocked by robots.txt, and may even list them in search results.

This is especially the case when entire domains or subdomains are blocked. Google will list links to these along with the text "A description for this result is not available because of this site's robots.txt – learn more", with a link to https://support.google.com/webmasters/answer/156449.

They tell us that while they won't crawl or index the content of pages blocked by robots.txt, they may still index the URLs if they find links to them elsewhere. They also give this helpful advice:

To entirely prevent a page's contents from being listed in the Google web index even if other sites link to it, use a noindex meta tag or x-robots-tag. As long as Googlebot fetches the page, it will see the noindex meta tag and prevent that page from showing up in the web index. The x-robots-tag HTTP header is particularly useful if you wish to limit indexing of non-HTML files like graphics or other kinds of documents.

So if you really don't want your pages indexed, make sure to use a META tag or an HTTP header. I've found <meta name="robots" content="noindex, nofollow"> particularly helpful for back-end admin areas and control panels when I don't trust Disallow: /admin to be good enough.
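For the HTTP-header route, here is a minimal Python sketch, assuming the site is served by a WSGI application. The names add_robots_header, is_protected, demo_app and the /admin prefix are hypothetical, chosen only for illustration. Responses under the prefix get X-Robots-Tag: noindex, nofollow, which also works for non-HTML files, and the demo page carries the equivalent meta tag as well.

```python
from typing import Callable, Iterable, List, Tuple

# Hypothetical path prefix for the back-end area; adjust to your own layout.
PROTECTED_PREFIXES = ("/admin",)
ROBOTS_HEADER = ("X-Robots-Tag", "noindex, nofollow")


def is_protected(path: str) -> bool:
    """True for /admin itself and anything under /admin/ (but not /administrator)."""
    return any(path == p or path.startswith(p + "/") for p in PROTECTED_PREFIXES)


def add_robots_header(app: Callable) -> Callable:
    """Wrap a WSGI app so protected responses carry an X-Robots-Tag header."""

    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        protected = is_protected(environ.get("PATH_INFO", ""))

        def patched_start_response(status: str, headers: List[Tuple[str, str]], exc_info=None):
            # Append the header only for protected paths; other responses pass through unchanged.
            if protected:
                headers = list(headers) + [ROBOTS_HEADER]
            return start_response(status, headers, exc_info)

        return app(environ, patched_start_response)

    return wrapped


def demo_app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Stand-in admin page that also carries the equivalent meta tag."""
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [b'<html><head><meta name="robots" content="noindex, nofollow"></head>'
            b"<body>Admin</body></html>"]


app = add_robots_header(demo_app)
```

Only /admin and paths under /admin/ are matched, so a page such as /administrator is left alone. As the quoted advice says, Googlebot only sees the header or meta tag if it is allowed to fetch the URL, so this works alongside robots.txt rather than behind a Disallow rule for the same path.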

Solution 2

Google does not ignore robots.txt. If you were to find Googlebot crawling a page blocked by robots.txt, you should report it to Google in their "crawling, indexing, and ranking" product forum.

There are some cases in which it may look like Googlebot disobeys robots.txt:

- The robots.txt file was recently updated: Googlebot may only fetch it once a day.
- A robot claims to be Googlebot but is not actually run by Google: see "How to verify Googlebot".
- There is an error in your robots.txt file: test it in Google Webmaster Tools.
- A page is listed in search results even though it is blocked: Google may list pages that are blocked by robots.txt when there are several external links to them. When this happens, Googlebot does not crawl the page, but instead uses third-party information (such as link anchor text) to determine what the page is about.
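Google's documented way to check whether a visitor really is Googlebot is a reverse DNS lookup on the requesting IP, a check that the host name ends in googlebot.com or google.com, and then a forward DNS lookup that must resolve back to the same IP. Below is a minimal Python sketch of that check, assuming IPv4 addresses (socket.gethostbyname_ex does not return IPv6 addresses). verify_googlebot and is_google_hostname are hypothetical helpers, and the domain list should be checked against Google's current verification page.

```python
import socket
from typing import Callable, List, Tuple

Lookup = Callable[[str], Tuple[str, List[str], List[str]]]

# Domains Google documents for Googlebot; this list may change, so re-check Google's docs.
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_google_hostname(host: str) -> bool:
    """True if the (possibly dot-terminated) host name sits under a Google crawler domain."""
    return host.rstrip(".").lower().endswith(GOOGLE_CRAWLER_DOMAINS)


def verify_googlebot(
    ip: str,
    reverse_lookup: Lookup = socket.gethostbyaddr,
    forward_lookup: Lookup = socket.gethostbyname_ex,
) -> bool:
    """Return True only if ip reverse-resolves to a Google host that resolves back to ip."""
    try:
        host, _aliases, _addrs = reverse_lookup(ip)
    except OSError:  # socket.herror / socket.gaierror: no usable PTR record
        return False
    if not is_google_hostname(host):
        return False  # a crawler merely claiming to be Googlebot usually fails here
    try:
        _name, _aliases, forward_ips = forward_lookup(host)
    except OSError:
        return False
    # The forward check defeats a spoofed PTR record pointing at a googlebot.com name.
    return ip in forward_ips
```

The lookup functions are parameters so the logic can be exercised with stub resolvers and no network access. In production you would call verify_googlebot(ip) with the defaults and would likely want to cache results, since a DNS round trip on every request is slow.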

While Google is good at following robots.txt, not all web crawlers are as friendly. It is not uncommon to see other, less well-mannered robots crawling blocked pages.
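A well-mannered crawler consults robots.txt before each fetch, but nothing forces it to. Python's standard urllib.robotparser module can perform that check. A minimal sketch, assuming an example robots.txt that disallows /admin for every crawler; make_parser is a hypothetical helper name:

```python
from urllib import robotparser

# Example robots.txt (an assumption for illustration): every crawler is asked to skip /admin.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin
"""


def make_parser(robots_txt: str) -> robotparser.RobotFileParser:
    """Build a parser from robots.txt text that is already in memory."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = make_parser(ROBOTS_TXT)
for url in ("https://example.com/admin/login", "https://example.com/blog/post"):
    # A polite crawler skips the URL when can_fetch() returns False;
    # an impolite one simply never asks.
    print(url, parser.can_fetch("Googlebot", url))
```

Against a live site you would call set_url() with the site's robots.txt URL and then read() instead of parse(). Disallow rules are prefix matches, so Disallow: /admin also blocks /administrator.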

Solution 3

Google may index the URL but not the contents of a page if it is restricted by robots.txt or a robots meta directive. This holds provided that nowhere else on the web links to the same destination without a nofollow link relationship.
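A crawler can only act on a robots meta tag or X-Robots-Tag header on a page it actually fetches. Below is a minimal Python sketch, using the standard html.parser module, of how a crawler might collect those directives. robots_directives and RobotsMetaParser are hypothetical names, and the header handling is deliberately simplified: it does not handle the optional crawler-name prefix that X-Robots-Tag values may carry.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Set, Tuple


def _split_directives(value: str) -> Set[str]:
    """Turn "noindex, nofollow" into {"noindex", "nofollow"}."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        # HTMLParser already lower-cases tag and attribute names, but not attribute values.
        if tag != "meta":
            return
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            self.directives |= _split_directives(values.get("content", ""))


def robots_directives(html: str, headers: Dict[str, str]) -> Set[str]:
    """Combine meta-tag directives with an X-Robots-Tag response header."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    directives = set(parser.directives)
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":  # header names are case-insensitive
            directives |= _split_directives(value)
    return directives
```

Self-closing tags such as <meta ... /> are handled too, because HTMLParser routes them through handle_starttag by default.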

You can read more on how Google listens to robots here.


eternicode

Updated on September 18, 2022

Comments

-

eternicode over 1 year

I've been developing a Django site (irrelevant) under Python 2.5 up until now, when I wanted to switch to Python 2.6 to make sure things worked there. However, when I was setting up my virtualenv for 2.6, pip threw an error: "ImportError: No module named _md5".

Background:

- I'm running on Ubuntu Maverick 10.10.

- My Python 2.5 comes from fkrull's deadsnakes repo, and has been working without issues.

- I create virtualenvs with

virtualenv <path> --no-site-packages --python=python2.[56]

If I try to import hashlib from outside a virtualenv, it works fine:

$ python2.6
Python 2.6.6 (r266:84292, Sep 15 2010, 15:52:39)
[GCC 4.4.5] on linux2
Type "help", "copyright", "credits" or "license" for more information.
>>> import hashlib
>>>

But inside it throws the same ImportError.

I've tried reinstalling python2.6, libpython2.6, and python2.6-minimal and recreating my virtualenv, but I get the same error.

None of the potential duplicates helped, as they either use different Linux distros or simply say "recompile Python".

Ideas?

-

user237419 about 13 years

I got tricked by the module name. What is _md5 anyway? I am not aware of any module named _md5 in the default Python install.

eternicode about 13 years

From what I understand, _md5 is a wrapper around a C library, or maybe a C module. Running import _md5 at the Python shell fails with an ImportError, too, so I wonder if hashlib is doing some magic in there somewhere.

user237419 about 13 years

Yes, it's a C module used internally by hashlib (probably named with an _ to ease the deprecation of the md5 module). Your problem has to do with your upgrade and the virtualenv running a different Python/dependency version; I suppose your fix (wiping out and recreating the venv from scratch) is the only fix :)

-

Stéphane over 12 years

I have the same error, but while creating the virtualenv... any idea?

-

Alexis Wilke over 10 years

Andrew, note that anonymous users should not have access to an /admin folder unless your login page is under that folder and needs to be reached by them.

-

Andrew Lott over 10 years

I was thinking specifically about admin login pages when implementing that, but good call pointing that out.
