Does np.dot automatically transpose vectors?

Solution 1

The semantics of np.dot are not great

As Dominique Paul points out, np.dot has very heterogeneous behavior depending on the shapes of the inputs. Adding to the confusion, as the OP points out in his question, given that weights is a 1D array, np.array_equal(weights, weights.T) is True (array_equal tests for equality of both value and shape).

Recommendation: use np.matmul or the equivalent @ instead

If you are just starting out with NumPy, my advice would be to ditch np.dot completely. Don't use it in your code at all. Instead, use np.matmul, or the equivalent operator @. The behavior of @ is more predictable than that of np.dot, while still being convenient to use. For example, you would get the same dot product for the two 1D arrays you have in your code like so:

returns = expected_returns_annual @ weights

You can prove to yourself that this gives the same answer as np.dot with this assert:

assert expected_returns_annual @ weights == expected_returns_annual.dot(weights)

Conceptually, @ handles this case by promoting the two 1D arrays to appropriate 2D arrays (though the implementation doesn't necessarily do this). For example, if you have x with shape (N,) and y with shape (M,), if you do x @ y the shapes will be promoted such that:

x.shape == (1, N)

y.shape == (M, 1)
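As a minimal sketch of the promotion described above (the vectors x and y here are made-up examples, and N must equal M for the product to be defined), the 1D result can be compared against the same product written with explicit 2D shapes:

```python
import numpy as np

# Hypothetical example vectors; any two 1D arrays of equal length work.
x = np.array([1.0, 2.0, 3.0])
y = np.array([4.0, 5.0, 6.0])

# 1D @ 1D: the inner product, returned as a scalar.
inner = x @ y

# The same product with the promotion written out by hand:
# x becomes a (1, N) row and y becomes an (N, 1) column.
row = x[np.newaxis, :]
col = y[:, np.newaxis]
explicit = row @ col  # 2D result of shape (1, 1)

print(inner)           # 32.0
print(explicit.shape)  # (1, 1)
print(explicit[0, 0] == inner)  # True
```

The only difference is that the explicit version keeps the two length-1 axes, whereas @ on 1D inputs drops them for you.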

Complete behavior of matmul/@

Here's what the docs have to say about matmul/@ and the shapes of inputs/outputs:

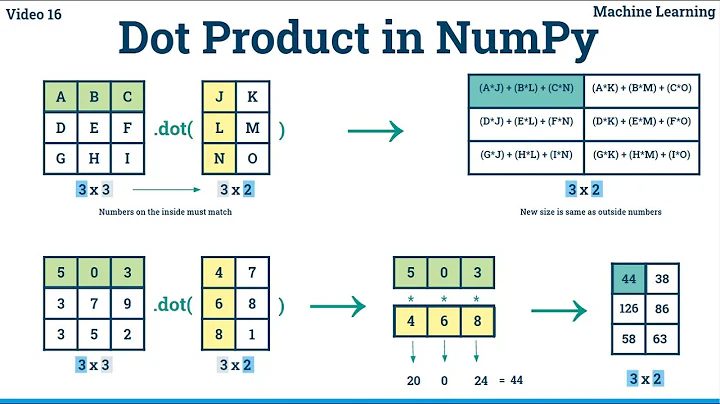

- If both arguments are 2-D they are multiplied like conventional matrices.

- If either argument is N-D, N > 2, it is treated as a stack of matrices residing in the last two indexes and broadcast accordingly.

- If the first argument is 1-D, it is promoted to a matrix by prepending a 1 to its dimensions. After matrix multiplication the prepended 1 is removed.

- If the second argument is 1-D, it is promoted to a matrix by appending a 1 to its dimensions. After matrix multiplication the appended 1 is removed.
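The four rules can be checked by looking only at result shapes. This is a small sketch with placeholder arrays of ones (the names A, B, v and stack are illustrative, not from the question):

```python
import numpy as np

A = np.ones((2, 3))
B = np.ones((3, 4))
v = np.ones(3)
stack = np.ones((5, 2, 3))  # five 2x3 matrices stacked along axis 0

# Rule 1: 2-D @ 2-D is ordinary matrix multiplication.
shape_2d_2d = (A @ B).shape      # (2, 4)

# Rule 2: an N-D argument is a stack of matrices; B is broadcast over the stack.
shape_nd_2d = (stack @ B).shape  # (5, 2, 4)

# Rule 3: 1-D first argument: (3,) -> (1, 3), multiply, then drop the 1.
shape_1d_2d = (v @ B).shape      # (4,)

# Rule 4: 1-D second argument: (3,) -> (3, 1), multiply, then drop the 1.
shape_2d_1d = (A @ v).shape      # (2,)

print(shape_2d_2d, shape_nd_2d, shape_1d_2d, shape_2d_1d)
```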

Notes: the arguments for using @ over dot

As hpaulj points out in the comments, np.array_equal(x.dot(y), x @ y) for all x and y that are 1D or 2D arrays. So why do I (and why should you) prefer @? I think the best argument for using @ is that it helps to improve your code in small but significant ways:

- @ is explicitly a matrix multiplication operator. x @ y will raise an error if y is a scalar, whereas dot will make the assumption that you actually just wanted elementwise multiplication. This can potentially result in a hard-to-localize bug in which dot silently returns a garbage result (I've personally run into that one). Thus, @ allows you to be explicit about your own intent for the behavior of a line of code.

- Because @ is an operator, it has some nice short syntax for coercing various sequence types into arrays, without having to explicitly cast them. For example, [0,1,2] @ np.arange(3) is valid syntax.

  - To be fair, while [0,1,2].dot(arr) is obviously not valid, np.dot([0,1,2], arr) is valid (though more verbose than using @).

- When you do need to extend your code to deal with many matrix multiplications instead of just one, the ND cases for @ are a conceptually straightforward generalization/vectorization of the lower-D cases.
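The first two points can be seen in a few lines. This is only a sketch with a made-up array x; the exact error message depends on the NumPy version, so only the exception type is checked:

```python
import numpy as np

x = np.arange(3)

# dot accepts a scalar and quietly falls back to elementwise multiplication.
dot_with_scalar = np.dot(x, 2)
print(dot_with_scalar)  # [0 2 4]

# @ refuses a scalar operand instead of guessing what was meant.
try:
    x @ 2
    matmul_raised = False
except ValueError:
    matmul_raised = True
print(matmul_raised)  # True

# A plain list on the left is coerced to an array by the operator.
list_result = [0, 1, 2] @ np.arange(3)
print(list_result)  # 5
```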

Solution 2

I had the same question some time ago. It seems that when one of your arrays is one-dimensional, NumPy figures out automatically what you are trying to do.

The documentation for the dot function has a more specific explanation of the logic applied:

If both a and b are 1-D arrays, it is inner product of vectors (without complex conjugation).

If both a and b are 2-D arrays, it is matrix multiplication, but using matmul or a @ b is preferred.

If either a or b is 0-D (scalar), it is equivalent to multiply and using numpy.multiply(a, b) or a * b is preferred.

If a is an N-D array and b is a 1-D array, it is a sum product over the last axis of a and b.

If a is an N-D array and b is an M-D array (where M>=2), it is a sum product over the last axis of a and the second-to-last axis of b:

dot(a, b)[i,j,k,m] = sum(a[i,j,:] * b[k,:,m])
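The last case is where dot and matmul part ways. The sketch below uses random 3-D arrays (the shapes are arbitrary choices for illustration) to show that dot pairs every matrix in a with every matrix in b, while @ pairs them up along the leading axis:

```python
import numpy as np

rng = np.random.default_rng(0)
a = rng.random((2, 3, 4))
b = rng.random((2, 4, 6))

d = np.dot(a, b)  # sum over last axis of a and second-to-last axis of b
m = a @ b         # stack of matrix products: m[i] = a[i] @ b[i]

print(d.shape)  # (2, 3, 2, 6)
print(m.shape)  # (2, 3, 6)

# Check one element of the dot result against the sum-product definition.
i, j, k, n = 1, 2, 0, 5
manual = np.sum(a[i, j, :] * b[k, :, n])
element_matches = np.isclose(d[i, j, k, n], manual)

# The matmul result is the "matching index" slice of the dot result.
slices_match = all(np.allclose(m[i], d[i, :, i, :]) for i in range(2))
print(element_matches, slices_match)
```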

Solution 3

In NumPy, a transpose .T reverses the order of dimensions, which means that it doesn't do anything to your one-dimensional array weights.

This is a common source of confusion for people coming from Matlab, in which one-dimensional arrays do not exist. See Transposing a NumPy Array for some earlier discussion of this.
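A short sketch of the point about .T, using a made-up weights vector: transposing a 1D array changes nothing, and a row or column only exists once a second axis is added explicitly.

```python
import numpy as np

weights = np.array([0.2, 0.3, 0.5])

# Reversing the order of a single axis leaves the array unchanged.
print(weights.shape, weights.T.shape)       # (3,) (3,)
print(np.array_equal(weights, weights.T))   # True

# Rows and columns need two axes.
row = weights.reshape(1, -1)   # shape (1, 3)
col = weights.reshape(-1, 1)   # shape (3, 1)
print(row.shape, col.shape, row.T.shape)    # (1, 3) (3, 1) (3, 1)
```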

np.dot(x,y) has complicated behavior on higher-dimensional arrays, but its behavior when it's fed two one-dimensional arrays is very simple: it takes the inner product. If we wanted to get the equivalent result as a matrix product of a row and column instead, we'd have to write something like

(x @ y[:, np.newaxis]).item()

adding a trailing dimension to y to turn it into a "column", multiplying, and then converting our one-element array back into a scalar. But np.dot(x,y) is much faster and more efficient, so we just use that.

Edit: actually, this was dumb on my part. You can, of course, just write matrix multiplication x @ y to get equivalent behavior to np.dot for one-dimensional arrays, as tel's excellent answer points out.

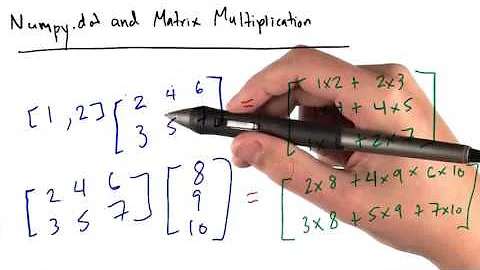

Related videos on Youtube

Tom

Updated on September 16, 2022

Comments

- Tom over 1 year

I am trying to calculate the first and second order moments for a portfolio of stocks (i.e. expected return and standard deviation).

expected_returns_annual
Out[54]:
           ticker
adj_close  CNP       0.091859
           F        -0.007358
           GE        0.095399
           TSLA      0.204873
           WMT      -0.000943
dtype: float64

type(expected_returns_annual)
Out[55]: pandas.core.series.Series

weights = np.random.random(num_assets)
weights /= np.sum(weights)
returns = np.dot(expected_returns_annual, weights)

So normally the expected return is calculated by

(x1,...,xn' * (R1,...,Rn)

where x1,...,xn are weights, subject to the constraint that all the weights sum to 1, and ' means that the vector is transposed.

Now I am wondering a bit about the numpy dot function, because

returns = np.dot(expected_returns_annual, weights)

and

returns = np.dot(expected_returns_annual, weights.T)

give the same results.

I tested also the shape of weights.T and weights.

weights.shape
Out[58]: (5,)

weights.T.shape
Out[59]: (5,)

The shape of weights.T should be (,5) and not (5,), but numpy displays them as equal (I also tried np.transpose, but there is the same result)

Does anybody know why numpy behaves this way? In my opinion np.dot automatically shapes the vector the right way so that the vector product works. Is that correct?

Best regards Tom

- hpaulj over 5 years

(,5) is not a valid python expression. (5,) is single element tuple. As a shape it means a 5 element 1d array, not a row or column vector in the matlab sense. Transpose switches the order of the axes. With only one axis, the transpose of a 1d is itself.

- tel over 5 years

There's some good information in this answer, but you're making the use of @ seem too complicated. If you just do x @ y you'll get your expected inner product (given that x and y are both 1D). matmul does the shape promotion automagically.

- macroeconomist over 5 years

yes, I just realized this and did an edit. forgot about this behavior of x @ y - thanks!

- hpaulj over 5 years

I don't see any real difference (or advantage) between dot and matmul when dealing with 1d and 2d arrays. 1d@2d produces the same thing as dot(1d,2d), etc. Maybe it's because I've used dot long enough, but the matmul talk of prepending or postpending dimensions sounds unnecessarily detailed.

- tel over 5 years

I thought so too at first, but after chewing on it for a bit I think the shape promotion concept does help to make the behavior of all of the 1D x ND and ND x 1D cases more concrete/easy to derive in your head.

- tel over 5 years

Still, you're right (I think?) that there's no case in which dot vs matmul makes a difference for any combination of 1D and 2D arrays. Thus, in following the Zen of Python, in any given codebase you should pick one or the other and stick with it. My preference is for matmul, since @ is just plain nifty, and when you do need to extend to ND arrays, I personally have never found a use case for the ND behavior of dot.

- Tom over 5 years

Many thanks for all the answers on this post. They are shedding some light on the use of np.dot for me. I just have an economics background and therefore I assumed that the shape attribute should display the dimensions of 1d arrays differently (as in mathematics).

- hpaulj over 5 years

Yes the ND case for dot is confusing, and rarely useful. That's a big reason why matmul was added. But I also like the greater control (and clarity) of the einsum notation.

- Tom over 5 years

Of course I mean (x1,...,xn)' * (R1,...,Rn) and not (x1,...,xn' * (R1,...,Rn). And you are correct with your assumptions that this is a matrix product, and therefore the outcome is just a scalar (dim(1,1)).