Easy way to store JSON under Node.js

Solution 1

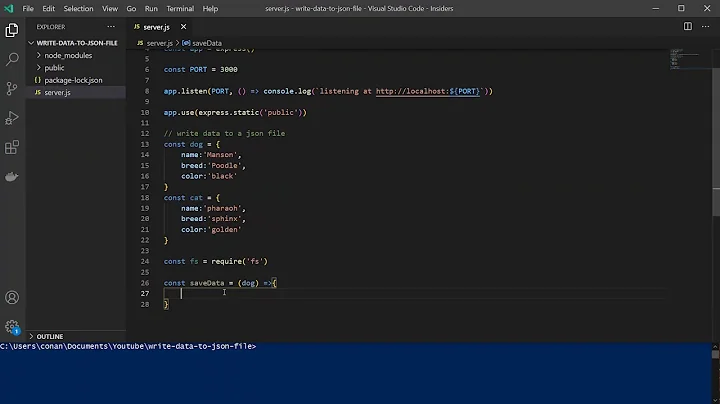

Why not write to a file?

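```js
// Writing...
const fs = require("fs");
const path = require("path");

let myJson = {
  key: "myvalue"
};

// writeFile() resolves relative paths against the working directory, require()
// against this module's directory, so use one absolute path for both
const file = path.join(__dirname, "filename.json");

fs.writeFile(file, JSON.stringify(myJson), "utf8", (err) => {
  if (err) throw err;

  // And then, to read it (e.g. after the next server restart)...
  myJson = require(file);
});
```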

And remember that if you need to write-and-read repeatedly, you will have to clear Node's cache for each file you're repeatedly reading, or Node will not reload your file and instead yield the content that it saw during the first require call. Clearing the cache for a specific require is thankfully very easy:

```js
delete require.cache[require.resolve('./filename.json')];
```
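
If you reload the same file often, that cache-clearing line can be wrapped in a small helper. This is only a sketch: `requireFresh` is a hypothetical name, not part of Node's API.

```javascript
// Hypothetical helper: require() a JSON file after evicting any cached copy,
// so the contents currently on disk are returned.
function requireFresh(file) {
  // Resolve to the exact key Node uses in require.cache
  const resolved = require.resolve(file);
  delete require.cache[resolved];
  return require(resolved);
}
```

Used as `myJson = requireFresh("./filename.json");` after each write.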

Also note that if you're using ES modules rather than CommonJS (possible as of Node 12), the import statement has no corresponding cache invalidation. Instead, use the dynamic import(): because ES module specifiers are URLs, you can append a query string to the import string as a form of cache busting.
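
A minimal sketch of that query-string cache busting, assuming Node 14 or later. `importFresh` is a hypothetical helper name, and the data is assumed to live in a `.mjs` file with a default export rather than in a bare `.json` file, because importing JSON through `import()` needs import attributes whose syntax has changed between Node versions.

```javascript
const { pathToFileURL } = require("url");

// Hypothetical helper: load an ES module's default export while bypassing the
// ES module cache. The cache is keyed by URL, so a unique query string makes
// Node treat each call as a brand-new module and read the file again.
// Caveat: old entries are never evicted, so every reload leaks a little memory.
async function importFresh(filePath) {
  const url = pathToFileURL(filePath);
  url.searchParams.set("v", `${Date.now()}-${Math.random()}`);
  const mod = await import(url.href);
  return mod.default;
}
```

Such a data file can be produced with `"export default " + JSON.stringify(myJson) + ";"`. Dynamic `import()` works from CommonJS too; inside an ES module only the first line changes, to `import { pathToFileURL } from "url";`.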

Alternatively, to avoid any built-in caching, use fs.readFile()/fs.readFileSync() instead of require() (use fs/promises instead of fs if you're writing promise-based code, either explicitly or implicitly via async/await).
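
A minimal promise-based sketch of that approach, assuming Node 14 or later for the `fs/promises` module path (older versions expose the same functions as `require("fs").promises`). `saveJson` and `loadJson` are hypothetical names, and the write-to-a-temporary-file-then-rename step is an optional safeguard, not something the answer above requires.

```javascript
const fs = require("fs/promises");

// Hypothetical helpers: persist a value as JSON and read it back from disk.
// Nothing is cached, so every loadJson() call sees the file's current contents.
async function saveJson(file, value) {
  // Write a temporary file first, then rename it over the target, so a crash
  // mid-write does not leave a truncated JSON file behind.
  const tmp = `${file}.${process.pid}.tmp`;
  await fs.writeFile(tmp, JSON.stringify(value, null, 2), "utf8");
  await fs.rename(tmp, file);
}

async function loadJson(file, fallback) {
  try {
    return JSON.parse(await fs.readFile(file, "utf8"));
  } catch (err) {
    // A missing file (e.g. on the very first start) yields the fallback, if one
    // was given; anything else, including malformed JSON, is rethrown.
    if (err.code === "ENOENT" && fallback !== undefined) return fallback;
    throw err;
  }
}
```

At startup, `const items = await loadJson("data.json", []);` returns whatever was saved last time, or an empty array on the very first run.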

Solution 2

Try NeDB: https://github.com/louischatriot/nedb

"Embedded persistent database for Node.js, written in Javascript, with no dependency (except npm modules of course). You can think of it as a SQLite for Node.js projects, which can be used with a simple require statement. The API is a subset of MongoDB's. You can use it as a persistent or an in-memory only datastore."

Solution 3

I found a library named json-fs-store that serializes a JavaScript object to JSON in a file and retrieves it later.

When retrieving a file via the store.load method (not described in the docs at the moment), it is parsed with JSON.parse, which is better than using require() as in the other answer:

- you get proper error handling when the contents are malformed

- if someone has managed to sneak JavaScript code into the file, it will lead to a parse error instead of the JS being executed.
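
Both points come down to calling `JSON.parse` yourself inside a `try`/`catch`. The sketch below shows that pattern with plain `fs`; `readJsonFile` is a hypothetical helper, not json-fs-store's API.

```javascript
const fs = require("fs");

// Hypothetical helper: read a file and parse it with JSON.parse, reporting
// malformed content instead of crashing. (A sketch, not json-fs-store's code.)
function readJsonFile(file) {
  const text = fs.readFileSync(file, "utf8");
  try {
    return { ok: true, value: JSON.parse(text) };
  } catch (err) {
    // Malformed content, including smuggled-in JavaScript, surfaces here as a
    // SyntaxError; JSON.parse never executes anything it reads.
    return { ok: false, error: err };
  }
}
```

A missing or unreadable file still throws from `readFileSync`; only parse failures are turned into a result object.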

Solution 4

If you are looking for performance, consider LokiJS:

https://www.npmjs.com/package/lokijs

Solution 5

If you need simple data storage with no external processes and very high, stable performance, you might like Rocket-store. It's very simple to use: just require the module and you can start inserting records right away.

It has three major methods: post, get, and delete (plus some special methods and option settings).

Example:

```js
const rs = require("rocket-store");

(async () => {
  // collection/table = "cars", key = "Mercedes"
  let result = await rs.post("cars", "Mercedes", { owner: "Lisa Simpson", reg: "N3RD" });

  // Get all records from "cars" collection
  result = await rs.get("cars", "*");

  // Delete records in collection "cars" where keys match "*cede*"
  result = await rs.delete("cars", "*cede*");
})();
```

It relies on the underlying filesystem cache to optimize for speed and can operate with millions of records.

You get simple sequences, auto-increment, and other minor conveniences, but that's about it. Nothing fancy. I like it a lot whenever I need a simple storage solution, but I'm also very biased, as the author ;)

johnny

Updated on July 09, 2022

Comments

johnny almost 2 years

I'm looking for a super-simple way to store one JSON array in a persistent way under Node.js. It doesn't need to have any special features. I just want to put a JSON object in there and be able to read it at the next server restart.

(Solutions like MongoDB and CouchDB both seem like overkill for this purpose.)

Mörre, about 11 years ago: There is no such thing as a "JSON object". Either you have an object (here: a JavaScript object), or you have JSON, which is a string. You can save this string in the normal way: files, databases...

Mörre, about 11 years ago: What would that accomplish? The contents of the file would be lost, since they won't be assigned to any variable. See the other answer...

Mörre, about 11 years ago: Only one thing to add: if you "require" the file, you must TRUST its contents. JSON as a data exchange format is generally not trusted; if you get JSON from an external source, always use the JSON.parse method. I'm only saying this to keep this (read) pattern from being remembered as common for ALL JSON content.

gustavohenke, about 11 years ago: Yup, but I think that validation must be done before writing, not when reading.

Mörre, about 11 years ago: Actually, the whole Rails stack does the exact opposite: NEVER trust anything in the database. Regardless of what you may think about Rails, they have adopted that policy for a reason.

gustavohenke, about 11 years ago: I've never used Ruby, so I don't think anything about it :D Also, the security of the data is something the question author did not specify.

Mörre, about 11 years ago: No, I did not claim that. Questions here IMHO always have two audiences: the person asking them AND all the people who later find them when they search for related questions. Also, the Rails policy I mention has NOTHING WHATSOEVER to do with the language Rails is implemented in. It is a general web app design principle. Over and OUT. As I said, I just added the comment for future generations; I did not criticize your answer in this context, so let's just leave it at that, no reason to become defensive.

Soroush Hakami, about 11 years ago: I meant that you'd write the file first (but I didn't specify how), then read it at server restart. So basically what your answer was. I just wrote mine in a terribly worse way :)

leonheess, over 4 years ago: What would be the advantage over, say, NeDB?

Simon Rigét, over 4 years ago: There are a few things, mainly simplicity and speed. You won't find an easier API than Rocket-store anywhere. You don't even need to initialize anything to start posting to it. It uses the operating system's file caching very effectively. The only bottleneck is lag in the underlying fs interface in libuv, but that is about to change with Node v13. There is a data-compatible version for PHP as well.

Mike 'Pomax' Kamermans, almost 3 years ago: Note for future visitors: the mentioned library has not kept up with Node since Node v0.4 and cannot (and should not) be used with current versions of Node.

Mike 'Pomax' Kamermans, almost 3 years ago: Note for future visitors: the mentioned library was last updated in 2015 and has since been officially deprecated.

Mike 'Pomax' Kamermans, almost 3 years ago: This does need a little note on how to invalidate Node's cache, because if you write to a file, then require it, then write to it again and re-require it, you're just going to get the content that Node cached on the first require, not the updated content.
