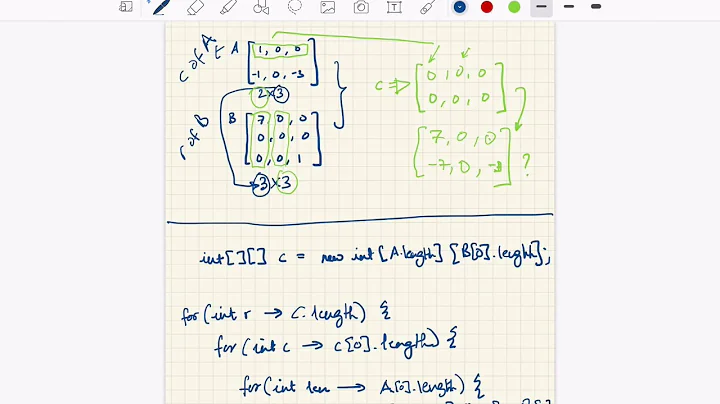

Fast sparse matrix multiplication

The most common choices are CSC or CSR storage. These are both efficient for matrix-vector multiplication. It's also very easy to code those multiplication routines, if you have to do it yourself.

That said, Yale storage also yields very efficient matrix-vector multiply. If you are performing matrix element lookup, then you have misunderstood how to use the format. I suggest you study some of the standard sparse libraries to learn how matrix-vector multiplication is implemented.

Even with your current storage you can perform matrix multiplication in O(n) complexity. All sparse matrix-vector multiplication algorithms that I have ever seen boil down to the same steps. For example consider y = Ax.

- Zeroise the result vector, y.

- Initialise an iterator for the non-zero elements of the matrix, A.

- Get the next non-zero element of the matrix, A[i,j] say. Note that the pattern of i,j doesn't follow a regular pattern. It simply reflects the order in which the non-zero elements of A are stored.

- y[i] += A[i,j]*x[j]

- If there are more elements of A, goto 3.

I suspect you are writing the classic double for loop dense multiplication code:

for (i=0; i<N; i++)

for (j=0; j<N; j++)

y[i] += A[i,j]*x[j]

and that's what is leading you to perform lookups.

But I'm not suggesting that you stick with your std::map storage. That's not going to be super efficient. I'd recommend CSC mainly because it is the most widely used.

Related videos on Youtube

lezebulon

Updated on February 26, 2020Comments

-

lezebulon about 4 years

lezebulon about 4 yearsfor class I have to write my own linear equation solver for sparse matrices. I am free to use any type of data structure for sparse matrices and I have to implement several solves, including conjuguate gradient.

I was wondering if there is a famous way to store sparse matrices such that multiplication with a vector is relatively fast.

Right now my sparse matrices are basically implemented a wrapped

std::map< std::pair<int, int>, double>which stores the data, if any. This transforms the multiplication of a matrix with from vector to a O(n²) complexity to a O(n²log(n)) as I have to perform look-up for each matrix elements. I've looked into the Yale Sparse matrix format and it seems that retrieval of an element is also in O(log(n)) so I'm not sure if it would be much faster.For reference I have a 800x800 matrix that is populated with 5000 entries. It takes roughly to 450 seconds solve such a system with the conjugate gradient method.

Do you think it's possible to do it much quicker with another data structure?

thanks!

-

Daniel Williams about 11 yearsRead wikipedia first. en.wikipedia.org/wiki/Sparse_matrix it has a good list of the common storage methods that will give you efficient operations.

-

lezebulon about 11 years@Song Wang : the purpose of the class is basically to roll our own finite element method solver

lezebulon about 11 years@Song Wang : the purpose of the class is basically to roll our own finite element method solver

-

-

lezebulon about 11 years" If you are performing matrix element lookup, then you have misunderstood how to use the format" yeah you're exactly right, that was my original problem. For the CSR method for instance I'll have the same complexity of look-up but indeed I don't need to do lookup to do a matrix-vector multiplication, just to travers the array once

lezebulon about 11 years" If you are performing matrix element lookup, then you have misunderstood how to use the format" yeah you're exactly right, that was my original problem. For the CSR method for instance I'll have the same complexity of look-up but indeed I don't need to do lookup to do a matrix-vector multiplication, just to travers the array once -

lezebulon about 11 yearsregarding your edit : you're right this will work as well, but only because my points are already sorted by row first right?

lezebulon about 11 yearsregarding your edit : you're right this will work as well, but only because my points are already sorted by row first right? -

David Heffernan about 11 years"Just to traverse the array once". That is exactly it. You've got it now! Takes a bit of a shift of mind, but once you think that way, you are on the home straight!

David Heffernan about 11 years"Just to traverse the array once". That is exactly it. You've got it now! Takes a bit of a shift of mind, but once you think that way, you are on the home straight! -

David Heffernan about 11 yearsFWIW 800x800 with nz=5000 should be almost instant. You should do around 1000 linear solves for such a matrix in a second.

David Heffernan about 11 yearsFWIW 800x800 with nz=5000 should be almost instant. You should do around 1000 linear solves for such a matrix in a second. -

lezebulon about 11 yearsthanks for the info! I quickly implemented the multiplication in O(n) you mentionned and the total time to solve is now ~3seconds, with doing 3000 matrix-vector multiplications. So I think the next step is to improve my solver, but that's for another question !

lezebulon about 11 yearsthanks for the info! I quickly implemented the multiplication in O(n) you mentionned and the total time to solve is now ~3seconds, with doing 3000 matrix-vector multiplications. So I think the next step is to improve my solver, but that's for another question ! -

David Heffernan about 11 yearsIt does depend on the form of your matrices. My FE code works with long beams and I think the problems are really easy for a solver. I'm personally using Tim Davis's

David Heffernan about 11 yearsIt does depend on the form of your matrices. My FE code works with long beams and I think the problems are really easy for a solver. I'm personally using Tim Davis'sCSparsewhich is excellent. His companion book is also superb.