Fastest way of reading relatively huge byte-files in Java

Solution 1

I would use a memory-mapped file, which is fast enough to do in the same thread.

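import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Paths;
import java.nio.file.StandardOpenOption;

// try-with-resources (Java 7+) closes the channel when finished
try (FileChannel channel = FileChannel.open(Paths.get(fileName), StandardOpenOption.READ)) {
    MappedByteBuffer buffer = channel.map(FileChannel.MapMode.READ_ONLY, 0, channel.size());
    // read from buffer here
}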

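This assumes the file is smaller than 2 GB (a single map() call cannot cover more than Integer.MAX_VALUE bytes). The map() call itself should take 10 milliseconds or less, because the operating system only loads pages from disk when they are first accessed.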
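The snippet above only creates the mapping. The following is a minimal sketch of actually consuming it, assuming Java 11+ and a file under 2 GB; the class name MappedRead and the method sumBytes are hypothetical, and summing the bytes merely stands in for whatever processing you really need. Reading straight from the MappedByteBuffer avoids copying the whole file into a byte[] first.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

public class MappedRead {
    // Hypothetical helper: adds up every byte of a file (as unsigned values)
    // through one read-only mapping. The sum is only a stand-in for real work.
    static long sumBytes(Path path) throws IOException {
        try (FileChannel channel = FileChannel.open(path, StandardOpenOption.READ)) {
            long size = channel.size();
            if (size > Integer.MAX_VALUE) {
                throw new IOException("Too large for a single mapping: " + size + " bytes");
            }
            MappedByteBuffer buffer = channel.map(FileChannel.MapMode.READ_ONLY, 0, size);
            long sum = 0;
            // Each relative get() advances the buffer position; the OS pages data in on demand.
            while (buffer.hasRemaining()) {
                sum += buffer.get() & 0xFF;
            }
            return sum;
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println(sumBytes(Path.of(args[0])));
    }
}
```

If you do need a byte[] afterwards, a bulk buffer.get(array) copies the mapped region in one call, but that gives up part of the benefit of mapping.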

Solution 2

Don't use available(): it's not reliable. And don't ignore the result of the read() method: it tells you how many bytes were actually read. And if you want to read everything in memory, use a ByteArrayOutputStream rather than using a List<byte[]>:

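import java.io.ByteArrayOutputStream;

// reader is an InputStream, e.g. the BufferedInputStream from the question
byte[] buffer = new byte[32 * 1024];
ByteArrayOutputStream baos = new ByteArrayOutputStream();
int read;
// read() returns the number of bytes actually read, or -1 at end of stream
while ((read = reader.read(buffer)) >= 0) {
    baos.write(buffer, 0, read);
}
byte[] everything = baos.toByteArray();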

I think 1024 bytes is a bit small as a buffer size. I would use a larger buffer (something like 16 KB or 32 KB).

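Note that Apache Commons IO and Guava have utility methods that do this for you (for example, IOUtils.toByteArray(InputStream) in Commons IO and ByteStreams.toByteArray(InputStream) in Guava), and they have already been optimized.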
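The JDK itself has had comparable helpers since Java 7 (Files.readAllBytes) and Java 9 (InputStream.readAllBytes). A minimal sketch, assuming Java 9 or later; the wrapper class JdkReadAll and its method names are hypothetical, only the two JDK calls are real API.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;

public class JdkReadAll {
    // Reads a whole file into one array (Java 7+).
    // Java arrays are limited to roughly 2 GB, so this fails for larger files.
    static byte[] readFile(Path path) throws IOException {
        return Files.readAllBytes(path);
    }

    // Reads an arbitrary stream until end of stream (Java 9+).
    // The caller still owns the stream and is responsible for closing it.
    static byte[] readStream(InputStream in) throws IOException {
        return in.readAllBytes();
    }
}
```

Both calls handle the read() return value and buffer growth internally, which is exactly what the hand-written loop above has to get right.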

Solution 3

Have a look at the Java NIO (New I/O) API, which provides channels, buffers, memory-mapped files, and non-blocking I/O. Also, this question might prove useful.

I don't have much experience with I/O, but I've heard that NIO is a much more efficient way of handling large sets of data.
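For plain file reading, the relevant NIO pieces are FileChannel and ByteBuffer (a FileChannel itself is blocking). A minimal sketch, assuming Java 11+, of streaming a file of any size through one reusable direct buffer; the class ChannelRead, the 64 KB CHUNK_SIZE, and counting newline bytes are hypothetical choices made only for illustration.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

public class ChannelRead {
    // Hypothetical chunk size; measure before settling on a value.
    static final int CHUNK_SIZE = 64 * 1024;

    // Streams a file through one reusable direct buffer and counts '\n' bytes.
    static long countNewlines(Path path) throws IOException {
        ByteBuffer buffer = ByteBuffer.allocateDirect(CHUNK_SIZE);
        long newlines = 0;
        try (FileChannel channel = FileChannel.open(path, StandardOpenOption.READ)) {
            // read() returns -1 at end of file and may fill only part of the buffer.
            while (channel.read(buffer) != -1) {
                buffer.flip();   // switch from filling to draining
                while (buffer.hasRemaining()) {
                    if (buffer.get() == '\n') {
                        newlines++;
                    }
                }
                buffer.clear();  // ready for the next read
            }
        }
        return newlines;
    }
}
```

Unlike a single mapping, this approach has no 2 GB limit, because only one chunk is held in memory at a time.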


chollinger

Updated on July 09, 2022

Comments

-

chollinger almost 2 years

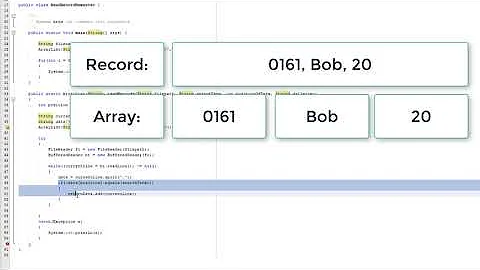

chollinger almost 2 years: What's probably the fastest way of reading relatively huge files with Java's I/O methods? My current solution uses a BufferedInputStream, saving to a byte array with 1024 bytes allocated to it. Each buffer is then saved in an ArrayList for later use. The whole process is called via a separate thread (Callable interface). Not very fast, though.

ArrayList<byte[]> outputArr = new ArrayList<byte[]>();
try {
    BufferedInputStream reader = new BufferedInputStream(new FileInputStream(dir + filename));
    byte[] buffer = new byte[LIMIT]; // == 1024
    int i = 0;
    while (reader.available() != 0) {
        reader.read(buffer);
        i++;
        if (i <= LIMIT) {
            outputArr.add(buffer);
            i = 0;
            buffer = null;
            buffer = new byte[LIMIT];
        }
        else continue;
    }
    System.out.println("FileReader-Elements: " + outputArr.size() + " w. " + buffer.length + " byte each.");

therobyouknow over 12 years: Have a look at the Apache Commons libraries for more options. And for determining the speed, have a look at the Java Performance Tuning book by O'Reilly.


Jon Skeet over 12 years: Currently you're ignoring the value returned by your read() call. Don't do that.


chollinger over 12 years: Bloody hell! Why the heck is that thing so extremely fast? Thanks anyway, works perfectly. (Edit: the Java docs just told me it maps the file into memory. Clever.)


Vishy over 12 years: If you need to access more than 2 GB, you need to use more than one mapping.
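One way to follow that advice is to map the file as a list of consecutive read-only regions, each at most Integer.MAX_VALUE bytes. A minimal sketch, assuming Java 11+; the class MultiMap, its methods, and the regionSize parameter are hypothetical (a small regionSize is allowed only so the logic can be tried on tiny files).

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.ArrayList;
import java.util.List;

public class MultiMap {
    // Largest size a single map() call accepts.
    static final long MAX_REGION = Integer.MAX_VALUE;

    // Maps the whole file as consecutive read-only regions of at most regionSize bytes.
    static List<MappedByteBuffer> mapAll(Path path, long regionSize) throws IOException {
        if (regionSize <= 0 || regionSize > MAX_REGION) {
            throw new IllegalArgumentException("regionSize must be in 1.." + MAX_REGION);
        }
        List<MappedByteBuffer> regions = new ArrayList<>();
        try (FileChannel channel = FileChannel.open(path, StandardOpenOption.READ)) {
            long size = channel.size();
            for (long offset = 0; offset < size; offset += regionSize) {
                long length = Math.min(regionSize, size - offset);
                regions.add(channel.map(FileChannel.MapMode.READ_ONLY, offset, length));
            }
        }
        // Mappings stay valid after the channel that created them is closed.
        return regions;
    }

    // Reads the byte at an absolute file position using absolute get(int).
    static byte get(List<MappedByteBuffer> regions, long regionSize, long position) {
        int index = (int) (position / regionSize);
        int offsetInRegion = (int) (position % regionSize);
        return regions.get(index).get(offsetInRegion);
    }
}
```

For a real multi-gigabyte file you would call mapAll(path, MultiMap.MAX_REGION). Multi-byte values that straddle a region boundary need extra handling, for example overlapping regions.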


Arnav Sengupta almost 4 years: @PeterLawrey Along the same lines, is there an efficient way to convert a large input stream into a byte array? For instance, reading an input stream from a ContainerRequestContext?
