FastText: using pre-trained word vectors for text classification

Solution 1

FastText's native classification mode depends on you training the word-vectors yourself, using texts with known classes. The word-vectors thus become optimized to be useful for the specific classifications observed during training. So that mode typically wouldn't be used with pre-trained vectors.

If you use pre-trained word-vectors, you'd then have to compose them into a text-vector yourself (for example, by averaging the vectors of all the words in a text), then train a separate classifier (such as one of the many options from scikit-learn) using those features.
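A minimal sketch of that approach in plain Python, assuming the pre-trained vectors are in fastText's text `.vec` format (a `<count> <dim>` header line, then one `word v1 ... vdim` line per word). To keep it self-contained, it swaps in a simple nearest-centroid classifier instead of a scikit-learn model; in practice you would feed the averaged vectors to something like `LogisticRegression`. The helper names (`load_vec`, `text_vector`, `fit_centroids`, `predict`) and the toy 2-d vectors are hypothetical, for illustration only.

```python
import math
from typing import Dict, Iterable, List

Vector = List[float]


def load_vec(lines: Iterable[str]) -> Dict[str, Vector]:
    """Parse fastText .vec text: a "<count> <dim>" header, then "word v1 ... vdim"."""
    it = iter(lines)
    dim = int(next(it).split()[1])
    vectors: Dict[str, Vector] = {}
    for line in it:
        parts = line.rstrip().split(" ")
        if len(parts) == dim + 1:  # silently skip malformed lines
            vectors[parts[0]] = [float(x) for x in parts[1:]]
    return vectors


def text_vector(text: str, vectors: Dict[str, Vector], dim: int) -> Vector:
    """Average the vectors of all in-vocabulary words; all zeros if none are known.

    Lowercasing + whitespace splitting is an assumption: match whatever
    tokenization was used to train the pre-trained vectors.
    """
    found = [vectors[w] for w in text.lower().split() if w in vectors]
    if not found:
        return [0.0] * dim
    return [sum(col) / len(found) for col in zip(*found)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def fit_centroids(texts, labels, vectors, dim) -> Dict[str, Vector]:
    """Nearest-centroid 'training': the mean text vector of each label."""
    sums: Dict[str, Vector] = {}
    counts: Dict[str, int] = {}
    for text, label in zip(texts, labels):
        acc = sums.setdefault(label, [0.0] * dim)
        for i, x in enumerate(text_vector(text, vectors, dim)):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {lab: [x / counts[lab] for x in acc] for lab, acc in sums.items()}


def predict(text, centroids, vectors, dim) -> str:
    """Return the label whose centroid is most cosine-similar to the text vector."""
    v = text_vector(text, vectors, dim)
    return max(centroids, key=lambda lab: cosine(v, centroids[lab]))


# Toy 2-d "pre-trained" vectors (hypothetical data).
vec_lines = ["4 2", "goal 1.0 0.0", "match 0.9 0.1", "vote 0.0 1.0", "election 0.1 0.9"]
vectors = load_vec(vec_lines)
centroids = fit_centroids(["goal match", "vote election"], ["sports", "politics"], vectors, 2)
print(predict("a late goal", centroids, vectors, 2))       # sports
print(predict("Election results", centroids, vectors, 2))  # politics
```

Unknown words ("a", "late", "results") are simply ignored by the averaging, which is also why this approach loses word order entirely.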

Solution 2

FastText's supervised training has a -pretrainedVectors argument, which can be used like this:

$ ./fasttext supervised -input train.txt -output model -epoch 25 \
    -wordNgrams 2 -dim 300 -loss hs -thread 7 -minCount 1 \
    -lr 1.0 -verbose 2 -pretrainedVectors wiki.ru.vec

A few things to consider:

- The chosen embedding dimension must match the one used in the pretrained vectors. E.g. for the Wiki word vectors it must be 300. It is set by the -dim 300 argument.

- As of mid-February 2018, the Python API (v0.8.22) doesn't support training with pretrained vectors (the corresponding parameter is ignored), so you must use the CLI (command line interface) version for training. However, a model trained by the CLI with pretrained vectors can be loaded by the Python API and used for predictions.

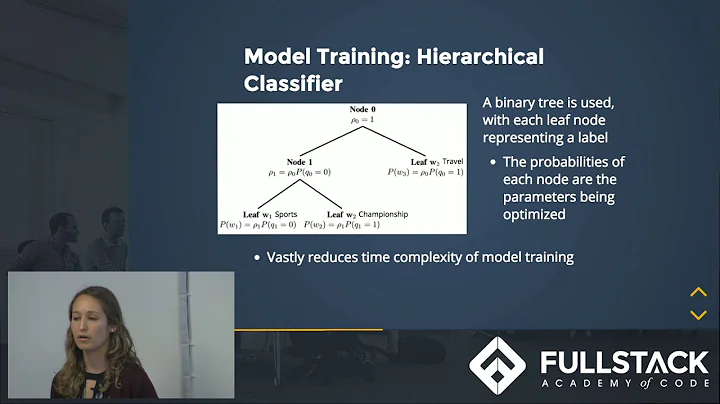

- For a large number of classes (in my case there were 340 of them), even the CLI may break with an exception, so you will need to use the hierarchical softmax loss function (-loss hs).

- Hierarchical softmax gives worse results than normal softmax, so it can cancel out all the gain you got from the pretrained embeddings.

- The model trained with pretrained vectors can be several times larger than one trained without them.

- In my observation, the model trained with pretrained vectors overfits faster than one trained without them.
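Later comments on this page report that, as of fasttext 0.9.1, the Python API does accept pretrainedVectors. A minimal sketch, assuming fasttext >= 0.9.1 is installed, that mirrors the CLI command from Solution 2 through `fasttext.train_supervised` and reads `dim` from the `.vec` header so it cannot disagree with the pretrained vectors. `parse_vec_header`, `vec_dim` and `train_with_pretrained` are hypothetical helper names; `train.txt` and `wiki.ru.vec` are the file names from the CLI example.

```python
def parse_vec_header(header_line: str) -> int:
    """Return the dimension from a fastText .vec header line ("<word count> <dim>")."""
    fields = header_line.split()
    if len(fields) != 2:
        raise ValueError(f"unexpected .vec header: {header_line!r}")
    return int(fields[1])


def vec_dim(vec_path: str) -> int:
    """Read only the first line of the .vec file, which can be very large."""
    with open(vec_path, encoding="utf-8") as f:
        return parse_vec_header(f.readline())


def train_with_pretrained(train_path: str, vec_path: str, model_path: str = "model.bin"):
    """Python-API equivalent of the CLI call (assumes fasttext >= 0.9.1)."""
    import fasttext  # imported lazily so the header helpers work without fasttext

    model = fasttext.train_supervised(
        input=train_path,
        epoch=25,
        wordNgrams=2,
        dim=vec_dim(vec_path),  # must equal the pretrained vectors' dimension
        loss="hs",              # hierarchical softmax, as in the CLI example
        thread=7,
        minCount=1,
        lr=1.0,
        verbose=2,
        pretrainedVectors=vec_path,
    )
    model.save_model(model_path)
    return model
```

Usage would then be `model = train_with_pretrained("train.txt", "wiki.ru.vec")` followed by `model.predict("some text")`.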


JarvisIA

Updated on August 03, 2020


JarvisIA almost 4 years

I am working on a text classification problem: given some text, I need to assign certain given labels to it.

I have tried using the fastText library by Facebook, which has two utilities of interest to me:

A) Word Vectors with pre-trained models

B) Text Classification utilities

However, these seem to be completely independent tools, as I have been unable to find any tutorials that combine the two.

What I want is to be able to classify some text, by taking advantage of the pre-trained models of the Word-Vectors. Is there any way to do this?


Navaneethan Santhanam almost 6 years
Re: Python version doesn't support pretrainedVectors, how did you figure this out? Any idea why this option has been dropped?


prrao over 4 years
Update: As of fasttext==0.9.1 (Python API), pretrainedVectors is accessible via Python. You can include it as an additional hyperparameter during training to load the pretrained vector (.vec) file and then perform training. For whatever reason, though, I'm unable to significantly improve on the model's F1 scores using pretrained vectors. Somehow, FastText doesn't seem to transfer knowledge from the pretrained vectors as well as modern deep learning approaches do. If anybody has had better luck with pretrained vectors for classification, I'd love to know!

Ozgur Ozturk about 4 years
@prrao My scores also appeared to not change. Did you have any luck? Thanks...


prrao about 4 years
@OzgurOzturk I don't think FastText is designed to be used as a transfer learning classifier. See the accepted answer above. I was able to significantly improve on my F1 scores using the automatic hyperparameter optimization tool provided by Facebook. Note that it also allows you to optimize for quantization simultaneously with the classifier by rapidly sweeping the entire parameter space, so it results in a massively reduced model size while also improving F1 scores. So it's a win-win to use that method.

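The tool prrao mentions is fastText's automatic hyperparameter optimization ("autotune"). A minimal sketch through the Python API, assuming a fasttext version that supports autotune and a held-out validation file (`valid.txt` below is a hypothetical name). Passing `autotuneValidationFile` turns the search on, `autotuneDuration` caps it in seconds, and `autotuneModelSize` (e.g. `"2M"`) asks autotune to find a model, including quantization settings, that fits that size. `autotune_kwargs` and `train_autotuned` are hypothetical helper names.

```python
from typing import Dict, Optional, Union


def autotune_kwargs(train_path: str, valid_path: str, duration_s: int = 600,
                    model_size: Optional[str] = None) -> Dict[str, Union[str, int]]:
    """Build keyword arguments for fasttext.train_supervised with autotune enabled."""
    kwargs: Dict[str, Union[str, int]] = {
        "input": train_path,
        "autotuneValidationFile": valid_path,  # enables the hyperparameter search
        "autotuneDuration": duration_s,        # search time budget, in seconds
    }
    if model_size is not None:
        # Size constraint such as "2M"; autotune then also searches quantization settings.
        kwargs["autotuneModelSize"] = model_size
    return kwargs


def train_autotuned(train_path: str, valid_path: str, duration_s: int = 600,
                    model_size: Optional[str] = None):
    """Run autotune and return the best model found (requires fasttext installed)."""
    import fasttext  # imported lazily so autotune_kwargs() works without fasttext

    return fasttext.train_supervised(
        **autotune_kwargs(train_path, valid_path, duration_s, model_size))
```

Usage would be `model = train_autotuned("train.txt", "valid.txt", model_size="2M")` followed by `model.save_model("model.ftz")`. Keeping the argument construction in a separate function makes it easy to log or compare search settings between runs.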