Google Webmaster Tools tells me that robots is blocking access to the sitemap

Solution 1

It would seem that Google has probably not yet updated its cache of your robots.txt file. Your current robots.txt file (above) does not look as if it should be blocking your sitemap URL.

"I guess Google just hasn't updated its cache."

There is no need to guess. In Google Webmaster Tools (GWT) under "Health" > "Blocked URLs", you can see when your robots.txt was last downloaded and whether it was successful. It will also inform you of how many URLs have been blocked by the robots.txt file.

As mentioned in my comments, GWT has a robots.txt checker tool ("Health" > "Blocked URLs"), so you can immediately test changes to your robots.txt without changing your actual file. Paste your robots.txt rules into the upper text area and the URLs you would like to test into the lower text area, and it will tell you whether each URL would be blocked.
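If you want to run a similar check outside Webmaster Tools, Python's standard-library `urllib.robotparser` can parse robots.txt text and answer "would this URL be blocked?". The sketch below feeds it the robots.txt from the question. The "Googlebot" user-agent string, the /wp-admin/options.php test URL and the `is_blocked` helper are illustrative choices, and robotparser implements plain prefix matching rather than Google's own matcher (it does not understand Google's `*` and `$` path wildcards), so treat its answer as an approximation of what GWT would report.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt from the question.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Sitemap: http://www.example.org/sitemap.xml.gz
"""

def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if `url` would be disallowed for `user_agent` by `robots_txt`."""
    parser = RobotFileParser()
    # Parse the text directly instead of fetching it over the network.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)

if __name__ == "__main__":
    for url in [
        "http://www.example.org/sitemap.xml.gz",
        "http://www.example.org/wp-admin/options.php",
    ]:
        print(url, "blocked" if is_blocked(ROBOTS_TXT, url) else "allowed")
```

Run as a script, it reports the sitemap URL as allowed and the /wp-admin/ URL as blocked, which matches the reading that the current file should not block the sitemap.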

Caching of robots.txt

A robots.txt request is generally cached for up to one day, but may be cached longer in situations where refreshing the cached version is not possible (for example, due to timeouts or 5xx errors). The cached response may be shared by different crawlers. Google may increase or decrease the cache lifetime based on max-age Cache-Control HTTP headers.

Source: Google Developers - Robots.txt Specifications
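The caching rule quoted above boils down to "use max-age from Cache-Control if present, otherwise assume roughly one day". The hypothetical helper `robots_cache_ttl` below sketches that reading. It is a simplification of the quoted text, not Google's actual implementation, and it ignores the longer caching the specification mentions for timeouts and 5xx errors.

```python
import re
from typing import Optional

# The quoted specification: "generally cached for up to one day".
DEFAULT_TTL_SECONDS = 24 * 60 * 60

def robots_cache_ttl(cache_control: Optional[str]) -> int:
    """Hypothetical helper: seconds a fetched robots.txt might stay cached.

    Uses a Cache-Control max-age directive when present; otherwise falls
    back to the one-day default. Error handling (timeouts, 5xx) is not modelled.
    """
    if cache_control:
        match = re.search(r"(?:^|,)\s*max-age\s*=\s*(\d+)", cache_control, re.IGNORECASE)
        if match:
            return int(match.group(1))
    return DEFAULT_TTL_SECONDS

if __name__ == "__main__":
    print(robots_cache_ttl(None))                    # 86400
    print(robots_cache_ttl("public, max-age=3600"))  # 3600
```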

Solution 2

I had the same problem with my site because, while installing WordPress, I chose not to let search engines index the site (or a similar option).

To resolve this problem:

- Go to Webmaster Tools > Crawl > Remove URLs and submit your www.example.com/robots.txt with the option to remove it from the cache because its content has changed (or ...).
- Wait a minute.
- Resubmit your sitemap URL.
- Finish.

Gaia

Updated on September 18, 2022

Comments

Gaia over 1 year

This is my robots.txt:

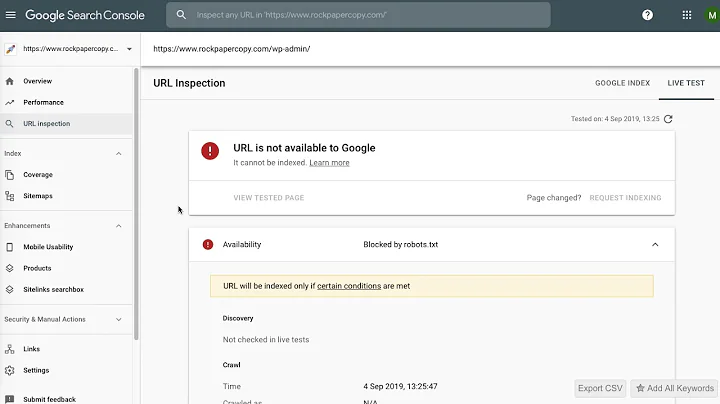

    User-agent: *
    Disallow: /wp-admin/
    Disallow: /wp-includes/
    Sitemap: http://www.example.org/sitemap.xml.gz

But Google Webmaster Tools tells me that robots is blocking access to the sitemap:

We encountered an error while trying to access your Sitemap. Please ensure your Sitemap follows our guidelines and can be accessed at the location you provided and then resubmit: URL restricted by robots.txt.

I read that Google Webmaster Tools caches robots.txt, but the file was updated more than 36 hours ago.

Update:

Hitting TEST sitemap does not cause Google to fetch a new sitemap; only SUBMIT sitemap was able to do that. (By the way, I don't see the point of TEST sitemap unless you paste your current sitemap in there: it doesn't fetch a fresh copy of the sitemap from the address it asks you to enter before the test. But that's a question for another day.)

After submitting (instead of testing) a new sitemap, the situation changed. I now get "URL blocked by robots.txt. The sitemap contains URLs which are blocked by robots.txt." for 44 URLs. There are exactly 44 URLs in the sitemap. This means that Google is using the new sitemap but is still going by the old robots.txt rule (which kept everything off-limits). None of the 44 URLs are in /wp-admin/ or /wp-includes/ (which would be practically impossible anyway, since robots.txt is built on the fly by the same plugin that creates the sitemap).

Update 2:
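The symptom in this update (every one of the 44 sitemap URLs reported as blocked) is what you would expect if the sitemap were checked against a robots.txt that disallows everything. The sketch below extracts the `<loc>` entries from a sitemap and checks each one against two rule sets with `urllib.robotparser`. The old `Disallow: /` file, the two sample URLs and the helper names are assumptions for illustration, since the question does not show the old robots.txt; a real sitemap.xml.gz would also need to be decompressed (for example with `gzip.decompress`) before parsing.

```python
import xml.etree.ElementTree as ET
from typing import List
from urllib.robotparser import RobotFileParser

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(sitemap_xml: str) -> List[str]:
    """Return every <loc> value from a <urlset> sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]

def blocked_urls(robots_txt: str, urls: List[str], user_agent: str = "Googlebot") -> List[str]:
    """Return the subset of `urls` that `robots_txt` disallows for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

# Assumed old rule set that "kept everything off-limits" (not shown in the question).
OLD_ROBOTS = "User-agent: *\nDisallow: /\n"
# Current rules from the question.
NEW_ROBOTS = "User-agent: *\nDisallow: /wp-admin/\nDisallow: /wp-includes/\n"

# Two made-up sitemap entries standing in for the real 44.
SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>http://www.example.org/</loc></url>
  <url><loc>http://www.example.org/about/</loc></url>
</urlset>"""

if __name__ == "__main__":
    urls = sitemap_urls(SITEMAP)
    print("old:", len(blocked_urls(OLD_ROBOTS, urls)), "of", len(urls), "blocked")
    print("new:", len(blocked_urls(NEW_ROBOTS, urls)), "of", len(urls), "blocked")
```

Under these assumptions the old rules block every sitemap URL and the new rules block none, which is the pattern you would see if Google were still applying a cached copy of the old file.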

It gets worse: on a Google Search results page, the description for the homepage reads: "A description for this result is not available because of this site's robots.txt – learn more". All other pages have normal descriptions. There is no robots.txt rule OR robots meta tag blocking indexing of the homepage.

I'm stuck.

Admin over 11 years

In Google Webmaster Tools > Health > Blocked URLs, you can immediately test whether your robots.txt would block your sitemap URL (or any other URL you would like to test). It doesn't look as if your current robots.txt should block your sitemap, but you say this has been updated. Did a previous version of your robots.txt file block this?

Admin over 11 years

Yes, the previous version did block. I guess Google just hasn't updated its cache...

Admin about 11 years

I have exactly THE SAME problem. My robots.txt cache is from 23rd April this year, today is 25th April, and the cache is still old. I don't have time to wait; I need Googlebot to index my site now (it's a business site), but it seems I can do nothing except wait without knowing how long. It's so frustrating!

Gaia over 11 years

Could that still be the case 24 hours later?

MrWhite over 11 years

What is the "Downloaded" date as reported in Webmaster Tools? That will tell you whether it is still the case. As shown in the above screenshot (from one of my sites), the robots.txt file was last downloaded on "Sep 3, 2012" (3 days ago). But in my case there is no need to download the file again, since nothing has changed (the Last-Modified header should be the same). How often Google fetches your robots.txt file will depend on the Expires and Last-Modified headers set by your server.
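For the Expires-based reasoning in this comment, a generic HTTP cache computes a freshness lifetime as the Expires value minus the Date value (RFC 9111, section 4.2.1) and compares it with the time since the response was fetched. The helper `remaining_freshness` and the header values below are hypothetical, chosen to match the numbers in the comment that follows (Expires 24 hours after Date, downloaded 22 hours ago). Google's specification quoted earlier only mentions Cache-Control max-age, so this illustrates the general HTTP mechanism, not how Googlebot is known to behave.

```python
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime

def remaining_freshness(date_header: str, expires_header: str,
                        downloaded_at: datetime, now: datetime) -> timedelta:
    """Hypothetical helper: how long a cached response stays fresh.

    Freshness lifetime = Expires - Date (RFC 9111, 4.2.1); the age is
    simplified here to the time elapsed since the download.
    A negative result means the cached copy is already stale.
    """
    lifetime = parsedate_to_datetime(expires_header) - parsedate_to_datetime(date_header)
    age = now - downloaded_at
    return lifetime - age

# Illustrative values: Expires is 24 hours after Date, fetched 22 hours ago.
DATE = "Mon, 03 Sep 2012 10:00:00 GMT"
EXPIRES = "Tue, 04 Sep 2012 10:00:00 GMT"
downloaded = datetime(2012, 9, 3, 10, 0, tzinfo=timezone.utc)
now = downloaded + timedelta(hours=22)

if __name__ == "__main__":
    print(remaining_freshness(DATE, EXPIRES, downloaded, now))  # 2:00:00
```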

Gaia over 11 years

Downloaded 22 hours ago, and the Expires header says +24 hours. I will try again in a couple of hours; it should be solved by then!

Gaia over 11 years

That didn't do it. Google is using the new sitemap but is still going by the old robots.txt rule (which kept everything off-limits).

MrWhite over 11 years

"That didn't do it": has Google not yet updated its cache of your robots.txt file? Even though you say you changed the file 36+ hours ago and it was reported as downloaded 22 hours ago?! What do you see when you click the link to your robots.txt file?

Gaia over 11 years

I see the proper, permissive robots.txt. But google.com/webmasters/tools/… still shows the old, restrictive robots.txt.
