Hadoop HDFS: set file block size from commandline?


Solution 1

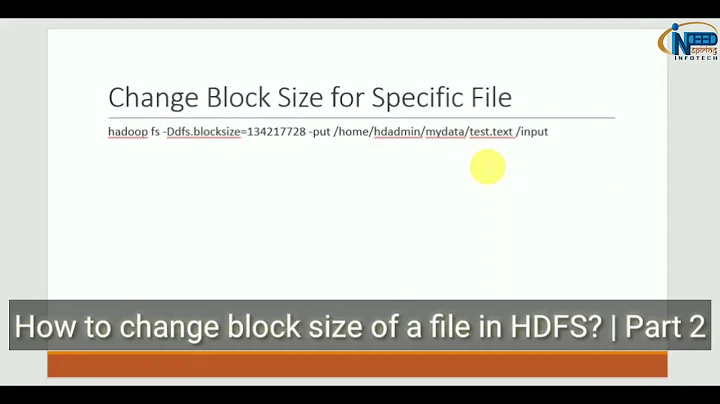

You can do this by passing -Ddfs.block.size=<size in bytes> to your hadoop fs command. For example:

hadoop fs -Ddfs.block.size=1048576 -put ganglia-3.2.0-1.src.rpm /home/hcoyote

As the fsck output below shows, the block size changes to whatever you set on the command line (in my case the default is 64 MB, and I'm lowering it to 1 MB):

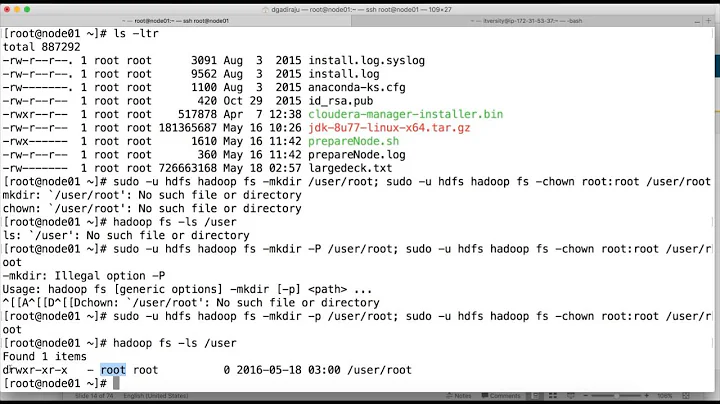

:; hadoop fsck -blocks -files -locations /home/hcoyote/ganglia-3.2.0-1.src.rpm

FSCK started by hcoyote from /10.1.1.111 for path /home/hcoyote/ganglia-3.2.0-1.src.rpm at Mon Aug 15 14:34:14 CDT 2011

/home/hcoyote/ganglia-3.2.0-1.src.rpm 1376561 bytes, 2 block(s): OK

0. blk_5365260307246279706_901858 len=1048576 repl=3 [10.1.1.115:50010, 10.1.1.105:50010, 10.1.1.119:50010]

1. blk_-6347324528974215118_901858 len=327985 repl=3 [10.1.1.106:50010, 10.1.1.105:50010, 10.1.1.104:50010]

Status: HEALTHY

Total size: 1376561 B

Total dirs: 0

Total files: 1

Total blocks (validated): 2 (avg. block size 688280 B)

Minimally replicated blocks: 2 (100.0 %)

Over-replicated blocks: 0 (0.0 %)

Under-replicated blocks: 0 (0.0 %)

Mis-replicated blocks: 0 (0.0 %)

Default replication factor: 3

Average block replication: 3.0

Corrupt blocks: 0

Missing replicas: 0 (0.0 %)

Number of data-nodes: 12

Number of racks: 1

FSCK ended at Mon Aug 15 14:34:14 CDT 2011 in 0 milliseconds

The filesystem under path '/home/hcoyote/ganglia-3.2.0-1.src.rpm' is HEALTHY
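The block layout in the fsck output can be checked with a little integer arithmetic. This is a minimal sketch for a POSIX shell; block_count and last_block_len are hypothetical helper names made up for illustration, not Hadoop commands.

```shell
# Hypothetical helpers (not part of Hadoop): reproduce the block layout fsck reports.

# Number of blocks needed for a file: ceil(file_size / block_size).
block_count() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

# Length of the final block: whatever is left after the full blocks.
last_block_len() {
    rem=$(( $1 % $2 ))
    if [ "$rem" -eq 0 ] && [ "$1" -gt 0 ]; then
        echo "$2"    # file size is an exact multiple, so the last block is full
    else
        echo "$rem"
    fi
}

file_size=1376561     # bytes, from the fsck output
block_size=1048576    # bytes, the value passed via -Ddfs.block.size

echo "blocks: $(block_count "$file_size" "$block_size")"
echo "last block: $(last_block_len "$file_size" "$block_size") bytes"
```

For the file above this gives 2 blocks with a final block of 327985 bytes, which matches the len= values fsck printed.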

Solution 2

NOTE FOR HADOOP 0.21: There's an issue in 0.21 where you have to use -D dfs.blocksize instead of -D dfs.block.size.
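A small wrapper can hide the naming difference. This is only a sketch: block_size_property and put_command are hypothetical names, the version check is a plain prefix match, and the command is printed rather than executed. It assumes 0.20.x takes dfs.block.size and that every other version takes dfs.blocksize; the second half of that is an assumption that this thread only confirms for 0.21, so check it against your own release.

```shell
# Hypothetical helper: pick the block-size property name for a Hadoop version.
# Assumption: 0.20.x uses dfs.block.size; everything else uses dfs.blocksize.
block_size_property() {
    case "$1" in
        0.20|0.20.*) echo "dfs.block.size" ;;
        *)           echo "dfs.blocksize" ;;
    esac
}

# Hypothetical helper: build (but do not run) the put command.
# Arguments: version, block size in bytes, local source, HDFS destination.
put_command() {
    version=$1; bytes=$2; src=$3; dest=$4
    echo "hadoop fs -D $(block_size_property "$version")=$bytes -put $src $dest"
}

# Dry run for the example file from Solution 1 on a 0.21 install.
put_command 0.21.0 1048576 ganglia-3.2.0-1.src.rpm /home/hcoyote
```

Printing the command first makes it easy to confirm which property name is being used before anything is written to HDFS.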


Author: BigChief

Updated on September 18, 2022

Comments

-

BigChief over 1 year

I need to set the block size of a file when I load it into HDFS to a value lower than the cluster's block size. For example, if HDFS is using 64 MB blocks, I may want a large file to be copied in with 32 MB blocks.

I've done this before within a Hadoop workload using the org.apache.hadoop.fs.FileSystem.create() function, but is there a way to do it from the command line?
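The accepted answer passes the size in bytes (1048576 for 1 MB), so the 32 MB case described here works out as follows. This is a sketch only: mb_to_bytes is a hypothetical helper, bigfile.dat and /user/example are placeholder names, and the command is printed rather than run.

```shell
# Hypothetical helper: convert a size in MB (1 MB = 1024 * 1024 bytes) to bytes.
mb_to_bytes() {
    echo $(( $1 * 1024 * 1024 ))
}

block_bytes=$(mb_to_bytes 32)
echo "32 MB = $block_bytes bytes"

# Placeholder file and destination; shown as a dry run.
echo "hadoop fs -Ddfs.block.size=$block_bytes -put bigfile.dat /user/example"
```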

-

BigChief over 12 years: I just tried this and can't get it to work, either with -put or -copyFromLocal. In both cases, I get (avg. block size 67022309 B), with the default block size at 64 MB. This is with Hadoop 0.21 from Apache (not CDH). Any other ideas?

-

BigChief over 12 years: Yep, passing options on the command line in 0.21 is broken, due to some deprecation issues. However, your solution would work for other versions (trunk, 0.20.x), so I'll accept. Thanks!

-

BigChief over 12 years: I will give that a shot and check back. I know -D dfs.block.size definitely does not work.

-

BigChief over 12 years: Yep, this works!