Hive error when creating an external table (state=08S01,code=1)


The issue was that I was pointing the external table at a file in HDFS instead of a directory. The cryptic Hive error message really threw me off.

The solution is to create a directory and put the data file in it. For the example in the question, you'd create the directory /tmp/foobar, move hive_test_1375711405.45852.txt into it, and then create the table like so:

```sql
create external table foobar (a STRING, b STRING)
row format delimited fields terminated by "\t"
stored as textfile
location "/tmp/foobar";
```
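The same fix can be sketched in Python, assuming a Hadoop 2.x HDFS shell (`hdfs dfs`, with `-mkdir -p` support) on the machine where the printed commands are run. The helper name `stage_as_external_table` and its parameters are hypothetical. It only builds strings: the shell commands that move the data file into a directory of its own, and the DDL whose LOCATION points at that directory rather than at the file.

```python
import posixpath
from typing import List, Tuple


def stage_as_external_table(
    data_file: str,
    table: str,
    table_dir: str,
    columns: str = "a STRING, b STRING",
) -> Tuple[List[str], str]:
    """Hypothetical helper: return (HDFS shell commands, HiveQL DDL).

    The DDL's LOCATION names `table_dir`, never the data file itself.
    """
    # Path the data file will have once it sits inside the table directory.
    target = posixpath.join(table_dir, posixpath.basename(data_file))
    commands = [
        # Create the table directory (-p: no error if it already exists).
        f"hdfs dfs -mkdir -p {table_dir}",
        # Move the data file into that directory.
        f"hdfs dfs -mv {data_file} {target}",
    ]
    # '\\t' in this Python literal is the two characters backslash and t,
    # i.e. the same "\t" delimiter spelling used in the original DDL.
    ddl = (
        f"create external table {table} ({columns}) "
        'row format delimited fields terminated by "\\t" '
        f'stored as textfile location "{table_dir}";'
    )
    return commands, ddl


if __name__ == "__main__":
    cmds, ddl = stage_as_external_table(
        "/tmp/hive_test_1375711405.45852.txt", "foobar", "/tmp/foobar"
    )
    for cmd in cmds:
        print(cmd)
    print(ddl)
```

A dedicated directory matters because Hive reads every file under LOCATION; pointing at an existing shared directory such as /tmp would pull unrelated files into the table.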

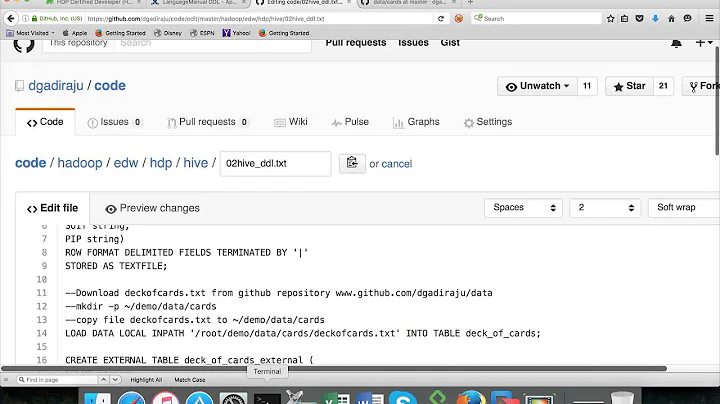

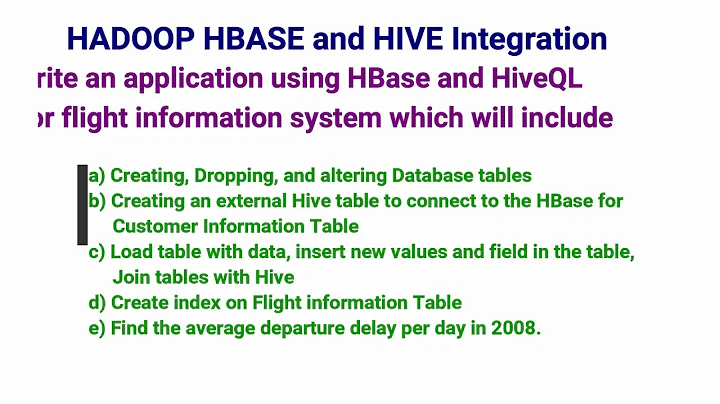

Related videos on Youtube

Author: yoni, Data Scientist at Nest Labs

Updated on September 15, 2022


I'm trying to create an external table in Hive, but keep getting the following error:

```sql
create external table foobar (a STRING, b STRING) row format delimited fields terminated by "\t" stored as textfile location "/tmp/hive_test_1375711405.45852.txt";
```

```
Error: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask (state=08S01,code=1)
Error: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask (state=08S01,code=1)
Aborting command set because "force" is false and command failed: "create external table foobar (a STRING, b STRING) row format delimited fields terminated by "\t" stored as textfile location "/tmp/hive_test_1375711405.45852.txt";"
```

The contents of /tmp/hive_test_1375711405.45852.txt are:

```
abc\tdef
```

I'm connecting via the `beeline` command line interface, which uses Thrift HiveServer2.

System:

- Hadoop 2.0.0-cdh4.3.0

- Hive 0.10.0-cdh4.3.0

- Beeline 0.10.0-cdh4.3.0

- Client OS - Red Hat Enterprise Linux Server release 6.4 (Santiago)
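Because the DDLTask error above does not say what is wrong, and the cause turned out to be a LOCATION that names a file, one option is to check the path before sending the DDL. This is a sketch assuming `hdfs dfs -test -d PATH` is available (it exits with status 0 only for a directory). The function names and the injectable `run` parameter are hypothetical; `run` exists only so the check can be exercised without a cluster.

```python
import subprocess
from typing import Callable, List


def is_hdfs_directory(
    path: str,
    run: Callable[[List[str]], subprocess.CompletedProcess] = subprocess.run,
) -> bool:
    """Return True if `path` exists in HDFS and is a directory.

    `hdfs dfs -test -d PATH` exits with status 0 only for a directory, so a
    plain file such as the one in the failing DDL yields False.
    With the default runner, a missing `hdfs` binary raises FileNotFoundError.
    """
    result = run(["hdfs", "dfs", "-test", "-d", path])
    return result.returncode == 0


def check_table_location(
    path: str,
    run: Callable[[List[str]], subprocess.CompletedProcess] = subprocess.run,
) -> None:
    """Raise before the DDL is sent if LOCATION would name a non-directory."""
    if not is_hdfs_directory(path, run):
        raise ValueError(
            f"LOCATION {path!r} is not an HDFS directory; "
            "move the data file into a directory and point LOCATION there"
        )
```

On a machine with the HDFS client installed, `check_table_location("/tmp/hive_test_1375711405.45852.txt")` would raise, while `check_table_location("/tmp/foobar")` would pass once that directory exists.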