How do I get Windows to go as fast as Linux for compiling C++?

Solution 1

Unless a hardcore Windows systems hacker comes along, you're not going to get more than partisan comments (which I won't do) and speculation (which is what I'm going to try).

1. File system. You should try the same operations (including the dir) on the same filesystem. I came across this, which benchmarks a few filesystems for various parameters.

2. Caching. I once tried to run a compilation on Linux on a RAM disk and found that it was slower than running it on disk, thanks to the way the kernel takes care of caching. This is a solid selling point for Linux and might be the reason why the performance is so different.

3. Bad dependency specifications on Windows. Maybe the Chromium dependency specifications for Windows are not as correct as for Linux. This might result in unnecessary compilations when you make a small change. You might be able to validate this using the same compiler toolchain on Windows.

Solution 2

A few ideas:

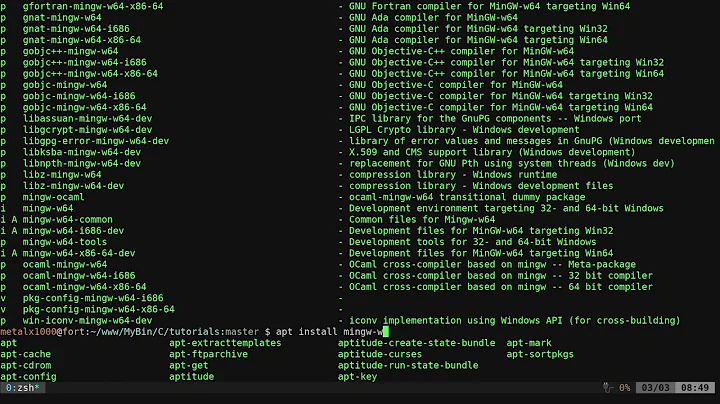

- Disable 8.3 names. This can be a big factor on drives with a large number of files and a relatively small number of folders:

fsutil behavior set disable8dot3 1

- Use more folders. In my experience, NTFS starts to slow down with more than about 1000 files per folder.

- Enable parallel builds with MSBuild; just add the "/m" switch, and it will automatically start one copy of MSBuild per CPU core.

- Put your files on an SSD -- helps hugely for random I/O.

- If your average file size is much greater than 4KB, consider rebuilding the filesystem with a larger cluster size that corresponds roughly to your average file size.

- Make sure the files have been defragmented. Fragmented files cause lots of disk seeks, which can cost you a factor of 40+ in throughput. Use the "contig" utility from sysinternals, or the built-in Windows defragmenter.

- If your average file size is small, and the partition you're on is relatively full, it's possible that you are running with a fragmented MFT, which is bad for performance. Also, files smaller than 1K are stored directly in the MFT. The "contig" utility mentioned above can help, or you may need to increase the MFT size. The following command will double it, to 25% of the volume:

fsutil behavior set mftzone 2

Change the last number to 3 or 4 to increase the size by additional 12.5% increments. After running the command, reboot and then create the filesystem.

- Disable last access time:

fsutil behavior set disablelastaccess 1

- Disable the indexing service.

- Disable your anti-virus and anti-spyware software, or at least set the relevant folders to be ignored.

- Put your files on a different physical drive from the OS and the paging file. Using a separate physical drive allows Windows to use parallel I/Os to both drives.

- Have a look at your compiler flags. The Windows C++ compiler has a ton of options; make sure you're only using the ones you really need.

- Try increasing the amount of memory the OS uses for paged-pool buffers (make sure you have enough RAM first):

fsutil behavior set memoryusage 2

- Check the Windows error log to make sure you aren't experiencing occasional disk errors.

- Have a look at Physical Disk related performance counters to see how busy your disks are. High queue lengths or long times per transfer are bad signs.

- The first 30% of disk partitions is much faster than the rest of the disk in terms of raw transfer time. Narrower partitions also help minimize seek times.

- Are you using RAID? If so, you may need to optimize your choice of RAID type (RAID-5 is bad for write-heavy operations like compiling)

- Disable any services that you don't need

- Defragment folders: copy all files to another drive (just the files), delete the original files, copy all folders to another drive (just the empty folders), then delete the original folders, defragment the original drive, copy the folder structure back first, then copy the files. When Windows builds large folders one file at a time, the folders end up being fragmented and slow. ("contig" should help here, too)

- If you are I/O bound and have CPU cycles to spare, try turning disk compression ON. It can provide some significant speedups for highly compressible files (like source code), with some cost in CPU.
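Two of the rules of thumb in this list (about 1000 files per folder, and the average file size relative to the 4KB cluster size) can be checked before changing any settings. The following is a minimal Python sketch of such a read-only survey. The function name `summarize_tree` and its `max_files_per_dir` parameter are invented for this example, and the thresholds are the heuristics from the list above, not documented NTFS limits.

```python
import os


def summarize_tree(root, max_files_per_dir=1000):
    """Walk `root` and report crowded folders plus the mean file size.

    The 1000-file default mirrors the rule of thumb in the answer;
    it is a heuristic, not a documented NTFS limit.
    """
    crowded = {}      # folder path -> file count, for folders over the limit
    total_files = 0
    total_bytes = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        if len(filenames) > max_files_per_dir:
            crowded[dirpath] = len(filenames)
        for name in filenames:
            try:
                total_bytes += os.path.getsize(os.path.join(dirpath, name))
                total_files += 1
            except OSError:
                # The file vanished or is unreadable; leave it out of the totals.
                continue
    mean_size = total_bytes / total_files if total_files else 0.0
    return {"files": total_files, "mean_size": mean_size, "crowded": crowded}
```

Calling `summarize_tree(r"C:\src\myproject")` (a hypothetical path) returns a dictionary. A `mean_size` well above 4096 bytes suggests the larger-cluster idea may be worth testing, and any entries in `crowded` are candidates for splitting into more folders.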

Solution 3

NTFS saves the file access time on every access. You can try disabling it: "fsutil behavior set disablelastaccess 1" (restart)

Solution 4

The issue with Visual C++ is, as far as I can tell, that it is not a priority for the compiler team to optimize this scenario. Their solution is that you use their precompiled header feature. This is what Windows-specific projects have done. It is not portable, but it works.

Furthermore, on Windows you typically have virus scanners, as well as System Restore and search tools, that can ruin your build times completely if they monitor your build folder. The Windows 7 Resource Monitor can help you spot it. I have a reply here with some further tips for optimizing VC++ build times if you're really interested.

Solution 5

The difficulty in doing that comes from the fact that C++ tends to spread itself and the compilation process over many small, individual files. That's something Linux is good at and Windows is not. If you want to make a really fast C++ compiler for Windows, try to keep everything in RAM and touch the filesystem as little as possible.

That's also how you'll make a faster Linux C++ compile chain, but it is less important in Linux because the file system is already doing a lot of that tuning for you.

The reason for this is Unix culture: historically, file system performance has been a much higher priority in the Unix world than in Windows. Not to say that it hasn't been a priority in Windows, just that in Unix it has been a higher priority.

- Access to source code.

You can't change what you can't control. Lack of access to Windows NTFS source code means that most efforts to improve performance have been through hardware improvements. That is, if performance is slow, you work around the problem by improving the hardware: the bus, the storage medium, and so on. You can only do so much if you have to work around the problem, not fix it.

Access to Unix source code (even before open source) was more widespread. Therefore, if you wanted to improve performance you would address it in software first (cheaper and easier) and hardware second.

As a result, there are many people in the world that got their PhDs by studying the Unix file system and finding novel ways to improve performance.

- Unix tends towards many small files; Windows tends towards a few (or a single) big file.

Unix applications tend to deal with many small files. Think of a software development environment: many small source files, each with its own purpose. The final stage (linking) does create one big file, but that is a small percentage.

As a result, Unix has highly optimized system calls for opening and closing files, scanning directories, and so on. The history of Unix research papers spans decades of file system optimizations that put a lot of thought into improving directory access (lookups and full-directory scans), initial file opening, and so on.

Windows applications tend to open one big file, hold it open for a long time, close it when done. Think of MS-Word. msword.exe (or whatever) opens the file once and appends for hours, updates internal blocks, and so on. The value of optimizing the opening of the file would be wasted time.

The history of Windows benchmarking and optimization has been on how fast one can read or write long files. That's what gets optimized.

Sadly software development has trended towards the first situation. Heck, the best word processing system for Unix (TeX/LaTeX) encourages you to put each chapter in a different file and #include them all together.

- Unix is focused on high performance; Windows is focused on user experience.

Unix started in the server room: no user interface. The only thing users see is speed. Therefore, speed is a priority.

Windows started on the desktop: Users only care about what they see, and they see the UI. Therefore, more energy is spent on improving the UI than performance.

- The Windows ecosystem depends on planned obsolescence. Why optimize software when new hardware is just a year or two away?

I don't believe in conspiracy theories, but if I did, I would point out that in the Windows culture there are fewer incentives to improve performance. The Windows business model depends on people buying new machines like clockwork. (That's why the stock price of thousands of companies is affected if MS ships an operating system late or if Intel misses a chip release date.) This means that there is an incentive to solve performance problems by telling people to buy new hardware, not by fixing the real problem: slow operating systems. Unix comes from academia, where the budget is tight and you can get your PhD by inventing a new way to make file systems faster; rarely does someone in academia get points for solving a problem by issuing a purchase order. In Windows there is no conspiracy to keep software slow, but the entire ecosystem depends on planned obsolescence.

Also, as Unix is open source (even when it wasn't, everyone had access to the source), any bored PhD student can read the code and become famous by making it better. That doesn't happen in Windows (MS does have a program that gives academics access to Windows source code, but it is rarely taken advantage of). Look at this selection of Unix-related performance papers: http://www.eecs.harvard.edu/margo/papers/ or look up the history of papers by Ousterhout, Henry Spencer, or others. Heck, one of the biggest (and most enjoyable to watch) debates in Unix history was the back and forth between Ousterhout and Seltzer: http://www.eecs.harvard.edu/margo/papers/usenix95-lfs/supplement/rebuttal.html You don't see that kind of thing happening in the Windows world. You might see vendors one-upping each other, but that seems to be much rarer lately, since the innovation seems to all be at the standards body level.

That's how I see it.

Update: If you look at the new compiler chains that are coming out of Microsoft, you'll be very optimistic, because much of what they are doing makes it easier to keep the entire toolchain in RAM and repeat less work. Very impressive stuff.


Updated on October 26, 2020

Comments

-

gman over 3 years

I know this is not so much a programming question but it is relevant.

I work on a fairly large cross platform project. On Windows I use VC++ 2008. On Linux I use gcc. There are around 40k files in the project. Windows is 10x to 40x slower than Linux at compiling and linking the same project. How can I fix that?

A single-change incremental build takes 20 seconds on Linux and > 3 mins on Windows. Why? I can even install the 'gold' linker in Linux and get that time down to 7 seconds.

Similarly git is 10x to 40x faster on Linux than Windows.

In the git case it's possible git is not using Windows in the optimal way but VC++? You'd think Microsoft would want to make their own developers as productive as possible and faster compilation would go a long way toward that. Maybe they are trying to encourage developers into C#?

As a simple test, find a folder with lots of subfolders and do a simple

dir /s > c:\list.txt

on Windows. Do it twice and time the second run so it runs from the cache. Copy the files to Linux and do the equivalent 2 runs and time the second run.

ls -R > /tmp/list.txt

I have 2 workstations with the exact same specs: HP Z600s with 12 GB of RAM and 8 cores at 3.0 GHz. On a folder with ~400k files Windows takes 40 seconds, Linux takes < 1 second.
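For a version of this test that runs unchanged on both systems, here is a rough Python sketch. It walks the tree several times and times only the last walk, so the earlier runs serve to warm the OS cache. It is not identical to dir /s or ls -R: it writes no listing, and it adds interpreter overhead on both sides. The function names are invented for this example.

```python
import os
import time


def count_entries(root):
    """Recursively count files and folders under `root`."""
    total = 0
    for _dirpath, dirnames, filenames in os.walk(root):
        total += len(dirnames) + len(filenames)
    return total


def time_warm_walk(root, runs=2):
    """Walk `root` `runs` times and return (entry_count, seconds_for_last_run).

    Earlier runs only warm the OS file cache, matching the
    "do it twice and time the second run" procedure.
    """
    count = 0
    elapsed = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        count = count_entries(root)
        elapsed = time.perf_counter() - start
    return count, elapsed
```

Running `time_warm_walk` on the same tree on each machine gives one number per OS that reflects directory enumeration alone, without console or redirect costs mixed in.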

Is there a registry setting I can set to speed up Windows? What gives?

A few slightly relevant links, relevant to compile times, not necessarily i/o.

Apparently there's an issue in Windows 10 (not in Windows 7) that closing a process holds a global lock. When compiling with multiple cores and therefore multiple processes this issue hits.

The /analyse option can adversely affect perf because it loads a web browser. (Not relevant here but good to know)

-

Spudd86 almost 13 years

I don't know the why, but this is a known difference in the performance characteristics of Windows and Linux. Linux is WAY better than Windows at dealing with loads of files in a single directory; possibly it's just NTFS vs ext4/whatever? Could also be that the Windows equivalent of Linux's dentry cache just isn't as good.

-

ninjalj almost 13 years

1) What build system are you using in each OS? 2) git is known to have performance problems on Windows, due to assumptions about use of dentry, etc... If you want comparisons, try hg. 3) Linux's dentry cache is a big win, and I don't think it has an equivalent on other OSes.

-

Nils over 12 years

Why was this closed? "Not being constructive"??! I find it quite relevant for developers.

-

Halil Özgür over 12 years

This question does include facts and can be backed by any number of facts, references, anything. Just thinking that a title seems controversial shouldn't prevent us discussing a long-standing but not-enough-talked-about issue. Being a long-time Windows user myself, I'd like to ask this question and hopefully get some productive answers any time. Please reopen the question unless you can provide actual evidence that the question is inherently argumentative and not backed by facts. Otherwise you are just being a moderatorobot.

-

Halil Özgür over 12 years

Plus, the title is very specific and doesn't ask something like "Why is Windows so slow?" (which it is, unfortunately...)

-

BoltClock over 12 years

@HalilÖzgür: OK, your comment prompted me to look at the revision history - the original question title was asking something like that. That may very well have been the reason (I didn't vote to close), because there was a post by someone who was clearly offended by the original title and started raging, which was then deleted, leading to this question's closure. The title has been edited since, so I think we're good to go. Reopened. Bear in mind that you should still try not to discuss the question... since the OP is looking for answers, provide answers, nothing else.

-

Benjamin Podszun over 12 years

It would be awesome to see someone like @raymond-chen chime in with some insights - if the question stays technical and offers clear enough data/facts to reproduce the issue.

-

Rich over 12 years

One thing that springs to mind is that NTFS sorts the files returned by querying directories by name. This requires that they are all fetched before returning anything. This in turn hinders efficient streaming of the work, as most work in the FS is done up-front without even the option of doing client work on the files in parallel already (e.g. compiling). This is a detail that won't change for compatibility reasons, though.

-

reconbot over 12 years

Someone could look at some benchmarks of NTFS vs ext3. They could also do an strace and see what GCC is doing that's so "slow". Most answers so far are anecdotal evidence.

-

b7kich over 12 years

This is all about disk I/O. Windows is testing 60x slower for me.

Preparation executed separately both under win/linux:

    > git clone github.com/chromium/chromium.git
    > cd chromium/
    > git checkout remotes/origin/trunk

Windows 7 Home Premium SP1, 8GB RAM:

    PS > dir -Recurse > ../list.txt
    PS > (measure-command { dir -Recurse > ../list.txt }).TotalSeconds
    36.3024971

Ubuntu 11.04, 2GB RAM, running under VMware on my Windows 7 workstation above (with non-preallocated .vmdk disk image):

    $ ls -lR > ../list.txt
    $ time ls -lR > ../list.txt
    real 0m0.595s
    user 0m0.244s
    sys 0m0.348s

-

Tom Kerr over 12 years

One minor thing on git performance: MSYS git is faster than the cygwin version.

-

Halil Özgür over 12 years

@BoltClock OK, sorry. And I take my word about being a robot back. But without that background information on the title, it looked like it was closed just because it is something about Windows vs Linux :)

-

Tim Robinson over 12 years

@Joey NTFS returns file listing sorted alphabetically because that's how directories are stored on disk - they're kept in a tree structure for efficient lookups.

-

user541686 over 12 years

Also: Are you doing Whole Program Optimization on Linux? How about Windows?

-

ravi over 12 years

Though this suggestion is not OS related, try using an SSD.

-

fmorency over 12 years

Could you give the compilation command line generated/used for both OSes?

-

RickNZ over 12 years

After making some of the changes I suggested in my answer, the second run of "ls -R" for the chromium tree takes 4.3 seconds for me. "dir /s" takes about a second. Switching to an SSD didn't help for enumeration alone, but I suspect it will help for compiles.

-

tauran almost 12 years

Don't know if it has been mentioned: What happens if you use sourceforge.net/projects/ext2fsd + ext3 or ext4 partition under Windows?

-

nowox about 8 years

-

v.oddou over 6 years

You saw the famous blog.zorinaq.com/… I hope. If not, run to read it.

-

Brian Campbell over 12 years

Saying that the reason is "cultural, not technical" doesn't really answer the question. Obviously, there are one or more underlying technical reasons why certain operations are slower on Windows than on Linux. Now, the cultural issues can explain why people made the technical decisions that they made; but this is a technical Q&A site. Answers should cover the technical reasons why one system is slower than the other (and what can be done to improve the situation), not unprovable conjectures about culture.

-

Martin Booka Weser over 12 years

Seriously? You mean I should try using a VM as my dev machine? Sounds odd... what VM do you use?

-

b7kich over 12 years

I tested the scenario above with an Ubuntu 11.04 VM running inside my Windows 7 workstation. 0.6 sec for the Linux VM, 36 sec for my Windows workstation.

-

surfasb over 12 years

This doesn't seem to have a lot of technical information. Mostly circumstantial. I think the only way we'll get real technical info is by looking at the differences between the two compilers, build systems, etc.

-

Noufal Ibrahim over 12 years

"Windows applications tend to open one big file, hold it open for a long time" - Plenty of UNIX apps do this. Servers, my Emacs etc.

-

b7kich over 12 years

Testing that shaved 4 seconds off the previous 36. Still abominable compared to 0.6 seconds on my Linux VM.

-

user541686 over 12 years

Could you elaborate a bit on #2? It's quite surprising -- is it because the kernel doesn't cache data on the RAM disk or something?

-

Noufal Ibrahim over 12 years

If you allocate a piece of memory as a ramdisk, it's not available to the kernel for caching or for anything else. In effect, you're tying its hands and forcing it to use less memory for its own algorithms. My knowledge is empirical. I lost performance when I used a RAM disk for compilations.

-

b7kich over 12 years

Even if you did all these things, you wouldn't come close to the Linux performance. Give the test below a try and post your timing if you disagree.

-

RickNZ over 12 years

We need a better benchmark. Measuring the time it takes to enumerate a folder is not very useful, IMO. NTFS is optimized for single-file lookup times, with a btree structure. In Linux (last I looked), an app can read an entire folder with a single system call, and iterate through the resulting structure entirely in user code; Windows requires a separate sys call for each file. Either way, compilers shouldn't need to read the entire folder....

-

b7kich over 12 years

Then what you're describing is precisely the problem. Picking a different benchmark doesn't solve the issue - you're just looking away.

-

RickNZ over 12 years

The question was about optimizing compile times. Folder enumeration times do not dominate compilation times on Windows, even with tens of thousands of files in a folder.

-

b7kich over 12 years

The author has tied the bad compilation performance to the number of files. There is no reason to deny that.

-

RickNZ over 12 years

A downvote for that? I'm not denying that the number of files is an issue at all. My point is that there's a difference between the time to enumerate a folder with tens of thousands of files and the time it takes to open a file in that folder. Enumeration in that case can be slow on Windows compared to Linux; opening a file is not.
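The distinction drawn in this comment, enumerating a large folder versus opening one file in it by name, can be measured separately. The following is a rough Python sketch; the helper names are invented for illustration, and it makes no claim about which result a given system will produce.

```python
import os
import time


def make_test_folder(folder, file_count):
    """Fill `folder` with `file_count` tiny files named f00000.txt, f00001.txt, ..."""
    os.makedirs(folder, exist_ok=True)
    for index in range(file_count):
        with open(os.path.join(folder, f"f{index:05d}.txt"), "wb") as handle:
            handle.write(b"x")


def time_enumerate(folder):
    """Return (entry_count, seconds) for listing every name in `folder`."""
    start = time.perf_counter()
    names = os.listdir(folder)
    return len(names), time.perf_counter() - start


def time_open_one(folder, name):
    """Return the seconds taken to open `folder/name` and read its first byte."""
    start = time.perf_counter()
    with open(os.path.join(folder, name), "rb") as handle:
        handle.read(1)
    return time.perf_counter() - start
```

With a scratch folder of tens of thousands of files created by `make_test_folder`, comparing `time_enumerate` against `time_open_one` for a single name shows whether the slowdown sits in enumeration, in lookup, or in both.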

-

RickNZ over 12 years

After applying a few of the tuning steps I describe in my answer for Windows, running the "ls -lR" test above on the chromium tree took 19.4 seconds. If I use "ls -UR" instead (which doesn't get file stats), the time drops to 4.3 seconds. Moving the tree to an SSD didn't speed anything up, since the file data gets cached by the OS after the first run.
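The gap between the two timings in this comment comes down to whether each file's metadata is fetched in addition to its name. A small Python sketch of the same comparison follows, with invented function names. Note the assumption that `os.walk` may itself query file types on some filesystems, so the names-only walk is "fewer stat calls", not necessarily zero.

```python
import os
import time


def walk_names_only(root):
    """Count files under `root` using directory listings alone."""
    count = 0
    for _dirpath, _dirnames, filenames in os.walk(root):
        count += len(filenames)
    return count


def walk_with_stat(root):
    """Count files under `root` and sum their sizes, calling os.stat on each."""
    count = 0
    total_bytes = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            # One explicit metadata query per file, as a long listing would need.
            total_bytes += os.stat(os.path.join(dirpath, name)).st_size
            count += 1
    return count, total_bytes


def timed(func, *args):
    """Run `func(*args)` and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - start
```

Timing `timed(walk_names_only, root)` against `timed(walk_with_stat, root)` on a warm cache gives a rough idea of how much of a recursive listing is spent on per-file metadata.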

-

b7kich over 12 years

Thanks for sharing! Despite a solid factor 10 improvement compared to the Windows 7 'out-of-the-box' scenario, that's still a factor 10 worse than Linux/ext4.

-

RickNZ over 12 years

I thought the point of the OP was to improve Windows performance, right? Also, as I posted above, "dir /s" runs in about a second.

-

Dolph over 11 years

"Unless [an expert on a specific topic] comes along, you're not going to get more than partisan comments ... and speculation": how is that different than any other question?

-

Noufal Ibrahim over 11 years

This one, thanks to the Win vs. Lin subject, is more of a fanboy magnet. Also, the question is rather nuanced, unlike direct ones which just ask for commands or methods of usage.

-

RickNZ over 11 years

After making some of the changes suggested above, the second run of "ls -R" for the chromium tree takes 4.3 seconds for me (vs 40 seconds in the OP). "dir /s" takes about a second. Switching to an SSD didn't help for enumeration alone, but I suspect it will help for compiles.

-

orlp over 11 years

If you use VirtualBox and set up a shared drive you can essentially speed up your compilations for free.

-

user541686 almost 10 years

@RickNZ: That's not true regarding Windows, nor is it the explanation for its slowness. You can enumerate multiple files with NtQueryDirectoryFile; it's indeed faster, but I don't think to the extent that addresses this question.

-

TomOnTime over 9 years

I don't think emacs holds files open for a long time, whether they are large or small. It certainly doesn't write to the middle of the file, updating it like a database would.

-

underscore_d over 8 years

The wording here is very confusing, but I assume it means a Windows-hosted VM running Linux, not a Windows-running VM hosted on Linux... which is interesting, but my first - literal - reading of this suggested that running Windows in a VM hosted on Linux to compile led to faster speeds than running Windows natively - and that would've really been something.

-

spectras about 7 years

…and servers don't do that either. Their features on *nix systems are usually split in lots of small modules with the server core essentially being an empty shell.

-

alkasm over 5 years

The link in #1 is no longer active.

-

hanshenrik about 5 years

"The history of Windows benchmarking and optimization has been on how fast one can read or write long files. That's what gets optimized." - does that mean Windows is faster than Linux at making big files?

-

Prof. Falken over 4 years

@underscore_d, I've seen something like that, where Windows in a VM ran much faster than on real hardware. Probably because Linux told Windows that it was operating on a real disk, while Linux in fact did aggressive caching behind the scenes. Installing Windows in the virtual machine also went blazingly fast, for instance. This was back in the XP days, but I would be surprised if there was much difference today.