Why is Linux 30x faster than Windows 10 at copying files?

Solution 1

The basics of it break down to a few key components of the total system: the UI element (the graphical part), the kernel itself (what talks to the hardware), and the format in which the data is stored (i.e. the file system).

Going backwards, NTFS has been the de facto file system for Windows for some time, while the de facto file system for the major Linux variants is ext. The NTFS file system itself hasn't changed since Windows XP (2001); a lot of the features that exist (like partition shrinking/healing, transactional NTFS, etc.) are features of the OS (Windows Vista/7/8/10) and not of NTFS itself. The ext file system had its last major stable release (ext4) in 2008. Since the file system itself is what governs how and where files are accessed, if you're using ext4 there's a good chance you'll notice a speed improvement over NTFS; note, however, that if you used ext2 you might find it comparable in speed.

It could also be that one partition is formatted in smaller chunks (clusters) than the other. The default for most systems is a 4096-byte cluster size, but if you formatted your ext4 partition to something like 16k, then each read on the ext4 system would get 4x the data vs. the NTFS system (which could mean 4x the files, depending on what's stored where and how, how big the files are, etc.). Fragmentation of the files can also play a role in speed. NTFS handles file fragmentation very differently than the ext file system, and with 100k+ files, there's a good chance there's some fragmentation.

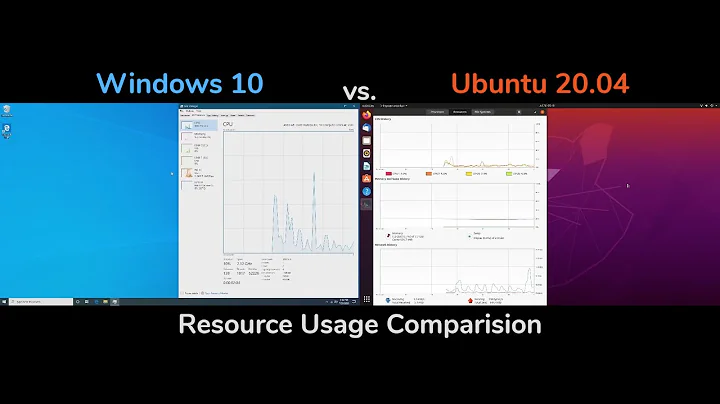
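To put rough numbers on the cluster-size point above, here is a minimal sketch that counts how many clusters a set of files would occupy at 4096 bytes vs. 16k. The function names and the sample file sizes are made up for illustration, and it ignores real-world details such as very small files being stored inline in file system metadata.

```python
def clusters_needed(file_size: int, cluster_size: int) -> int:
    """Clusters occupied by a file of file_size bytes (ceiling division)."""
    if file_size == 0:
        return 0
    return -(-file_size // cluster_size)


def total_clusters(file_sizes, cluster_size: int) -> int:
    """Sum of clusters over all files; a rough proxy for read operations."""
    return sum(clusters_needed(size, cluster_size) for size in file_sizes)


if __name__ == "__main__":
    # Hypothetical file sizes in bytes, chosen only for illustration.
    sizes = [500, 4096, 10_000, 70_000]
    for cluster in (4096, 16384):
        print(cluster, total_clusters(sizes, cluster))
```

Fewer clusters means fewer individual reads for the same data, at the cost of more wasted (slack) space in the last cluster of each file.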

The next component is the kernel itself (not the UI, but the code that actually talks to the hardware, the true OS). Here, there honestly isn't much difference. Both kernels can be configured to do certain things, like disk caching/buffering, to speed up reads and perceived writes, but these configurations usually have the same trade-offs regardless of OS; e.g. caching might seem to massively increase the speed of copying/saving, but if you lose power during the cache write (or pull the USB drive out), then you will lose all data not actually written to disk and possibly even corrupt data already written to disk.

As an example, copy a lot of files to a FAT formatted USB drive in Windows and Linux. On Windows it might take 10 minutes while on Linux it will take 10 seconds; immediately after you've copied the files, safely remove the drive by ejecting it. On Windows it would be immediately ejected from the system and thus you could remove the drive from the USB port, while on Linux it might take 10 minutes before you could actually remove the drive; this is because of the caching (i.e. Linux wrote the files to RAM then wrote them to the disk in the background, while the cache-less Windows wrote the files immediately to disk).
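The same effect can be seen in a small way from user code. The sketch below (function names are my own, and the timings will vary wildly by OS, drive and mount options) writes the same data twice: once letting the OS cache it, and once forcing it to the device with `os.fsync` before the timer stops.

```python
import os
import tempfile
import time


def timed_write(path: str, data: bytes, sync: bool) -> float:
    """Write data to path and return elapsed seconds.

    With sync=True the call only returns after the OS has been asked to
    push the data to the device; with sync=False the data may still be
    sitting in the OS cache when the timer stops.
    """
    start = time.perf_counter()
    with open(path, "wb") as f:
        f.write(data)
        if sync:
            f.flush()             # flush Python's own buffer to the OS
            os.fsync(f.fileno())  # ask the OS to flush its cache to disk
    return time.perf_counter() - start


def compare(size: int = 8 * 1024 * 1024):
    """Return (cached_seconds, synced_seconds) for a write of size bytes."""
    data = os.urandom(size)
    with tempfile.TemporaryDirectory() as tmp:
        cached = timed_write(os.path.join(tmp, "cached.bin"), data, sync=False)
        synced = timed_write(os.path.join(tmp, "synced.bin"), data, sync=True)
    return cached, synced


if __name__ == "__main__":
    cached, synced = compare()
    print(f"cached: {cached:.4f}s  synced: {synced:.4f}s")
```

On a slow USB stick the synced write would typically take noticeably longer, which is the "10 seconds now, 10 minutes at eject" behaviour described above, just on a smaller scale.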

Last is the UI (the graphical part the user interacts with). The UI might be a pretty window with some cool graphs and nice bars that give me a general idea of how many files are being copied and how big it all is and how long it might take; the UI might also be a console that doesn't print any information except when it's done. If the UI has to first go through each folder and file to determine how many files there are, plus how big they are and give a rough estimate before it can actually start copying, then the copy process can take longer due to the UI needing to do this. Again, this is true regardless of OS.
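As a rough sketch of that extra work (this is not how Windows Explorer or any particular file manager is actually implemented, and the function names are hypothetical), compare a copy that first walks the whole tree to count files and bytes with one that just starts copying:

```python
import os
import shutil


def scan_tree(src: str):
    """Walk the tree once and return (file_count, total_bytes).

    This is the extra pass a progress UI needs before it can show
    "N items, X GB remaining".
    """
    count = 0
    total = 0
    for root, _dirs, files in os.walk(src):
        for name in files:
            count += 1
            total += os.path.getsize(os.path.join(root, name))
    return count, total


def copy_with_estimate(src: str, dst: str):
    """Pre-scan for an estimate, then copy: every file is touched twice."""
    count, total = scan_tree(src)
    shutil.copytree(src, dst)  # dst must not exist yet
    return count, total


def copy_direct(src: str, dst: str) -> None:
    """Copy without any pre-scan, like a quiet console tool."""
    shutil.copytree(src, dst)  # dst must not exist yet
```

With 100k+ small files, the pre-scan means an extra metadata lookup for every file before a single byte is copied.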

You can configure some things to be equal (like disk caching or cluster size), but realistically speaking it simply comes down to how all the parts tie together to make the system work and more specifically how often those pieces of code actually get updated. The Windows OS has come a long way since Windows XP, but the disk sub-system is an area that hasn't seen much TLC in the OS across all versions for many years (compared to the Linux ecosystem that seems to see some new FS or improvement rather frequently).

Hope that adds some clarity.

Solution 2

Windows performs worse because they don't care about HDDs. Windows' write cache is normally disabled on external devices. You can enable it, but you can't disable Windows' timed flushing of the buffer. Linux itself has better write caching. My external HDD on Ubuntu: 100 MB/s, dropping to 40 MB/s. On Windows: a bit faster at first, then 19-20 MB/s. You might use SSDs; those are much faster on Windows. Generally, Windows isn't doing much about performance anymore. Linux has better algorithms. It is already faster than Windows, with better process scheduling etc. It doesn't really make a difference whether you have NTFS or ext. I copied twice on the same partition and same drive. I think there is no way to speed up Windows' write performance, because you don't even have access to the whole system and it isn't open source.

Just use Linux to copy large files :D

Jones G

Updated on September 18, 2022

Comments

-

Jones G almost 2 years

I got 20.3 Gig of files and folders totaling 100k+ items. I duplicated all those files in one directory from Windows 10, and it took me an excruciating 3hrs of copying. Done.

The other day, I booted in Linux Fedora 24, recopied the same folder and bam! It took me just 5 mins to duplicate it on the same place but different directory.

Why is Linux so Fast? And Windows is painstakingly slow?

There is a similar question here

Is (Ubuntu) Linux file copying algorithm better than Windows 7?

But the accepted answer is quite lacking.

-

Matt over 5 years

Horrible answer in my opinion, and downvoted. You are introducing differences where there are none. Nobody asked how differently partitioned drives perform. Of course the question centers on the "all else being equal" precept. I can choose any fs I want for an 8-drive NVMe RAID 0 with native read speeds of over 16 gigabytes per second, and yet a Windows file copy maxes out at 1.4-1.5 gigabytes per second, any time, all the time. It has nothing to do with caching, fs, or partitions, but rather with Windows OS limitations.

-

txtechhelp over 5 years

@Matt what file system are you formatting said RAID array in? If it's NTFS, that could explain the slowdown, but if you have more information to provide, you're free to add a relevant answer, especially if you have any source code (and not an assembly dump) from the core Windows OS that explains directly why said slowdown occurs (I for one would especially be interested in that!).

-

Matt over 5 years

I use NTFS; what better option is there as an fs on a Windows server?

-

Matt over 5 years

I contacted MSFT, had many, many discussions, and tried many things over the years, and never got it to exceed 1.5 GB/second, despite having 100Gb NICs on each machine and Mellanox profiling tools showing, for all other traffic, that the connections work perfectly fine at 94-95 Gb/sec throughput. No slowdowns between Linux machines, but as soon as a Windows machine is involved I see those bottlenecks.

-

Matt over 5 years

I am talking about single file transfers, all single-threaded. There is no hardware bottleneck whatsoever; it's purely OS-based.

-

Mikey about 5 years

I learned something, but I really don't understand why Windows Explorer takes forever to open a folder; I mean, you see the files but it keeps adding to them. Like my downloads folder or my screenshots folder, which has a lot of PNGs. Sure, if there were only 10 files in the folder you'd barely notice it, but I have an M.2 SSD, so it's kinda ridiculous. Also, searching for files sucks in Windows. I have to use a 3rd party tool which maintains an index, but even 3rd party tools that don't maintain one seem faster than searching with Windows, which does maintain an index?

-

hanshenrik over 4 years

@MatthiasWolf consider this: Linux is a system optimized by Linus Torvalds. Windows is a system not optimized by Linus Torvalds. Linus is a speed junkie, and I assume Windows devs just want things to work, and a paycheck.

-

txtechhelp over 3 years

@MatthiasWolf I'm not sure why you mention a thread of execution and assume it to mean a kernel thread, and more specifically what that has to do with file access on the actual file system or the drivers for the PCIe drive. When it comes to the FS, the OS doesn't do anything other than call other APIs. So I'd like to know what MSFT had to say about your issue. Also, have you tried exFAT, or WSL with ext4? I'd be curious whether your assertions are correct, as I've specifically opened up the NTFS DLLs in assembly so I could re-implement some of their APIs in the Linux kernel (more efficiently).

-

txtechhelp over 3 years

@Mikey ultimately it breaks down to Software Engineering and a simple `for..loop` iterating over each file in your current view and trying to "view" them all at once. It's the human equivalent of trying to have each eye look through a telescope pointed in a different direction; you can see it, discern it, and understand it, but it gives you a headache and you didn't really grasp the totality of it all until much later. The software could be re-written, but that's a different subject altogether.

-

Richard Muvirimi about 3 years

This is the most truthful answer I have come across. Most times, Linux fans oversell it, forgetting that it all comes down to the hardware, and when one actually tries it out they get different results from what they were made to believe.