How much space to leave free on HDD or SSD?

Solution 1

Has there been any research, preferably published in a peer-reviewed journal […]?

One has to go back a lot further than 20 years, of system administration or otherwise, for this. This was a hot topic, at least in the world of personal computer and workstation operating systems, over 30 years ago, when the BSD people were developing the Berkeley Fast File System and Microsoft and IBM were developing the High Performance File System.

The literature on both, written by their creators, discusses how these filesystems were organized so that the block allocation policy yielded better performance by trying to make consecutive file blocks contiguous. You can find discussions of this, and of the fact that the amount and location of free space left to allocate blocks affects block placement and thus performance, in the contemporary articles on the subject.

It should be fairly obvious from the description of the Berkeley FFS block allocation algorithm, for example, that if there is no free space in the current and secondary cylinder groups, and the algorithm thus reaches the fourth-level fallback ("apply an exhaustive search to all cylinder groups"), the performance of block allocation will suffer, and the file will also become more fragmented (and hence slower to read).
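The fallback order can be illustrated with a deliberately simplified Python model. It is a sketch under stated assumptions, not FFS code: each cylinder group is reduced to a count of free blocks, the paper's first two levels (a rotationally close block on the same cylinder, then any block in the same cylinder group) are collapsed into one probe of the preferred group, and the "quadratic" rehash is modelled as steps that double in size. The function `allocate_block` is hypothetical; it reports how many groups it probed, which is the cost that grows as the volume fills.

```python
from typing import List, Optional, Tuple


def allocate_block(free_per_group: List[int], preferred: int) -> Tuple[Optional[int], int]:
    """Allocate one block; return (chosen cylinder group or None, groups probed).

    Hypothetical, simplified model of the FFS fallback order, not real FFS code:
    each cylinder group is just a count of free blocks.
    """
    ngroups = len(free_per_group)
    probes = 0

    def take(group: int) -> bool:
        # Probe one cylinder group and claim a block from it if any are free.
        nonlocal probes
        probes += 1
        if free_per_group[group] > 0:
            free_per_group[group] -= 1
            return True
        return False

    # 1. The preferred cylinder group (e.g. the one holding the file's inode).
    if take(preferred):
        return preferred, probes

    # 2. Rehash to other cylinder groups with doubling step sizes.
    group, step = preferred, 1
    while step < ngroups:
        group = (group + step) % ngroups
        if take(group):
            return group, probes
        step *= 2

    # 3. Last resort: exhaustive search over every cylinder group.
    for offset in range(ngroups):
        group = (preferred + offset) % ngroups
        if take(group):
            return group, probes
    return None, probes
```

In this toy model, a volume with plenty of free space satisfies almost every request on the first probe, whereas one whose groups are nearly all full falls through to the exhaustive search: `allocate_block([0, 0, 5, 0], 0)` needs six probes to find a single block.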

It is these and similar analyses (these being far from the only filesystem designs that aimed to improve on the layout policies of the filesystem designs of the time) that the received wisdom of the past 30 years has built upon.

For example: The dictum in the original paper that FFS volumes be kept less than 90% full, lest performance suffer, which was based upon experiments made by the creators, can be found uncritically repeated even in books on Unix filesystems published this century (e.g., Pate2003 p. 216). Few people question this, although Amir H. Majidimehr actually did the century before, saying that xe has in practice not observed a noticeable effect; not least because of the customary Unix mechanism that reserves that final 10% for superuser use, meaning that a 90% full disc is effectively 100% full for non-superusers anyway (Majidimehr1996 p. 68). So did Bill Calkins, who suggests that in practice one can fill up to 99%, with 21st century disc sizes, before observing the performance effects of low free space because even 1% of modern size discs is enough to have lots of unfragmented free space still to play with (Calkins2002 p. 450).
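The superuser reserve that Majidimehr mentions can be observed on a POSIX system. A minimal Python sketch, assuming a Unix-like OS where `os.statvfs` is available: `f_bfree` counts all free blocks, while `f_bavail` counts only those available to non-superusers, so the two fill levels diverge by the size of the reserve. The helper names are hypothetical, and the 10% default merely mirrors the figure in the text; actual reserve sizes vary by filesystem and by how the volume was created.

```python
import os


def user_visible_fill(used_fraction: float, reserve_fraction: float = 0.10) -> float:
    """Fill level an ordinary user sees when reserve_fraction is held back for root."""
    usable = 1.0 - reserve_fraction
    return min(used_fraction / usable, 1.0)


def statvfs_fill(path: str = "/") -> tuple:
    """Return (fill relative to all blocks, fill as seen by non-superusers) for path."""
    st = os.statvfs(path)  # POSIX only; not available on Windows
    used = st.f_blocks - st.f_bfree
    if st.f_blocks == 0 or used + st.f_bavail == 0:
        return 0.0, 0.0  # e.g. pseudo filesystems that report no blocks
    whole_volume = used / st.f_blocks
    # Dividing by used + f_bavail roughly mirrors how GNU df derives Use%,
    # so blocks in the superuser reserve count as unavailable.
    non_superuser = used / (used + st.f_bavail)
    return whole_volume, non_superuser


if __name__ == "__main__":
    print(f"{user_visible_fill(0.90):.0%}")  # a 90% full volume with a 10% reserve
    whole, user = statvfs_fill("/")
    print(f"/ is {whole:.1%} full overall, {user:.1%} full for non-root users")
```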

This latter is an example of how received wisdom can become wrong. There are other examples of this. Just as the SCSI and ATA worlds of logical block addressing and zoned bit recording rather threw out of the window all of the careful calculations of rotational latency in the BSD filesystem design, so the physical mechanics of SSDs rather throw out of the window the free space received wisdom that applies to Winchester discs.

With SSDs, the amount of free space on the device as a whole, i.e., across all volumes on the disc and in between them, has an effect both upon performance and upon lifetime. And the very basis for the idea that a file needs to be stored in blocks with contiguous logical block addresses is undercut by the fact that SSDs do not have platters to rotate and heads to seek. The rules change again.

With SSDs, the recommended minimum amount of free space is actually more than the traditional 10% that comes from experiments with Winchester discs and Berkeley FFS 33 years ago. Anand Lal Shimpi gives 25%, for example. This difference is compounded by the fact that this has to be free space across the entire device, whereas the 10% figure is within each single FFS volume, and thus is affected by whether one's partitioning program knows to TRIM all of the space that is not allocated to a valid disc volume by the partition table.
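Because on an SSD it is free space across the whole device that matters, the arithmetic has to combine unpartitioned space with free space inside each volume. A minimal sketch under explicit assumptions: space only counts as free to the SSD if it has been TRIMmed (two flags cover unpartitioned space and filesystem free space), and the manufacturer's hidden spare area is ignored because the host cannot see it. The function name and flags are hypothetical.

```python
from typing import Iterable, Tuple


def device_free_fraction(
    device_bytes: int,
    partitions: Iterable[Tuple[int, int]],
    unpartitioned_trimmed: bool = True,
    fs_free_trimmed: bool = True,
) -> float:
    """Fraction of an SSD's logical capacity that the controller knows is unused.

    partitions holds (partition_size, bytes_used) pairs. Space that was never
    TRIMmed is treated as still holding data. Hidden factory spare area is
    deliberately not included.
    """
    if device_bytes <= 0:
        raise ValueError("device size must be positive")
    parts = list(partitions)
    if any(used > size for size, used in parts):
        raise ValueError("a partition reports more used bytes than its size")
    partitioned = sum(size for size, _ in parts)
    used_total = sum(used for _, used in parts)
    if partitioned > device_bytes:
        raise ValueError("partitions exceed the device size")

    free = 0
    if unpartitioned_trimmed:
        free += device_bytes - partitioned
    if fs_free_trimmed:
        free += partitioned - used_total
    return free / device_bytes
```

For example, the policy in Solution 2 (25% of the device left free at the partition level, and the partition kept below 80% full) gives `device_free_fraction(100, [(75, 60)])` = 0.40, i.e. 40% of the logical space is free from the SSD's point of view, above the 25% guideline. If the filesystem did not TRIM its free space, the same layout would drop to 0.25.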

It is also compounded by complexities such as TRIM-aware filesystem drivers that can TRIM free space within disc volumes, and the fact that SSD manufacturers themselves also already allocate varying degrees of reserved space that is not even visible outwith the device (i.e., to the host) for various uses such as garbage collection and wear levelling.

Bibliography

- Marshall K. McKusick, William N. Joy, Samuel J. Leffler, and Robert S. Fabry (1984-08). A Fast File System for UNIX. ACM Transactions on Computer Systems. Volume 2 issue 3. pp. 181–197. Archived at cornell.edu.

- Ray Duncan (1989-09). Design goals and implementation of the new High Performance File System. Microsoft Systems Journal. Volume 4 issue 5. pp. 1–13. Archived at wisc.edu.

- Marshall Kirk McKusick, Keith Bostic, Michael J. Karels, and John S. Quarterman (1996-04-30). "The Berkeley Fast Filesystem". The Design and Implementation of the 4.4 BSD Operating System. Addison-Wesley Professional. ISBN 0201549794.

- Dan Bridges (1996-05). Inside the High Performance File System — Part 4: Fragmentation, Diskspace Bitmaps and Code Pages. Significant Bits. Archived at Electronic Developer Magazine for OS/2.

- Keith A. Smith and Margo Seltzer (1996). A Comparison of FFS Disk Allocation Policies. Proceedings of the USENIX Annual Technical Conference.

- Steve D. Pate (2003). "Performance analysis of the FFS". UNIX Filesystems: Evolution, Design, and Implementation. John Wiley & Sons. ISBN 9780471456759.

- Amir H. Majidimehr (1996). Optimizing UNIX for Performance. Prentice Hall. ISBN 9780131115514.

- Bill Calkins (2002). "Managing File Systems". Inside Solaris 9. Que Publishing. ISBN 9780735711013.

- Anand Lal Shimpi (2012-10-04). Exploring the Relationship Between Spare Area and Performance Consistency in Modern SSDs. AnandTech.

- Henry Cook, Jonathan Ellithorpe, Laura Keys, and Andrew Waterman (2010). IotaFS: Exploring File System Optimizations for SSDs. IEEE Transactions on Consumer Electronics. Archived at stanford.edu.

- https://superuser.com/a/1081730/38062

- Accela Zhao (2017-04-10). A Summary on SSD & FTL. github.io.

- Does Windows trim unpartitioned (unformatted) space on an SSD?

Solution 2

Though I can't talk about "research" published in "peer reviewed journals" - and I wouldn't want to have to rely on those for day-to-day work - I can talk about the realities of hundreds of production servers under a variety of OSes over many years:

There are three reasons why a full disk reduces performance:

- Free space starvation: think of temp files, updates, etc.

- File system degradation: Most file systems suffer in their ability to optimally lay out files if not enough room is present

- Hardware level degradation: SSDs and SMR disks without enough free space will show decreased throughput and - even worse - increased latency (sometimes by many orders of magnitude)

The first point is trivial, especially since no sane production system would ever use swap space in dynamically expanding and shrinking files.

The second point varies greatly between file systems and workloads. For a Windows system with a mixed workload, a 70% threshold turns out to be quite usable. For a Linux ext4 file system with few but big files (e.g. video broadcast systems), this might go up to 90+%.

The third point is hardware and firmware dependent, but SSDs with a Sandforce controller in particular can fall behind on free-block erasure under high-write workloads, leading to write latencies going up by thousands of percent. We usually leave 25% of the device unpartitioned, then keep the fill rate of the partition below 80%.

Recommendations

I realize that I haven't mentioned how to make sure a maximum fill rate is enforced. Here are some random thoughts, none of them "peer reviewed" (paid, faked or real) but all of them from production systems.

- Use filesystem boundaries: /var doesn't belong in the root file system.

- Monitoring, monitoring, monitoring. Use a ready-made solution if it fits you, else parse the output of df -h and let alarm bells go off when needed. This can save you from 30 kernels piling up on a root fs with automatic upgrades installed and running without the autoremove option.

- Weigh the potential disruption of a fs overflow against the cost of making it bigger in the first place: if you are not on an embedded device, you might just double those 4G for root.
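The "parse df and ring the alarm" idea can be sketched in a few lines of Python. This is a minimal illustration, not a replacement for a ready-made monitoring system: it uses `shutil.disk_usage` instead of parsing df output, computes the fill level the way df's Use% column does (used / (used + free), so the superuser reserve counts as full), and the mount list and the 80%/90% thresholds are assumptions borrowed from the rules of thumb quoted in the question.

```python
import shutil
from typing import Iterable, List, Tuple

# Hypothetical thresholds, taken from the 80%/90% rules of thumb in the question.
WARN_AT = 0.80
CRIT_AT = 0.90


def classify(fill: float, warn: float = WARN_AT, crit: float = CRIT_AT) -> str:
    """Map a fill fraction to an alarm level."""
    if fill >= crit:
        return "CRITICAL"
    if fill >= warn:
        return "WARNING"
    return "OK"


def check_mounts(mounts: Iterable[str]) -> List[Tuple[str, float, str]]:
    """Return (mount point, fill fraction, alarm level) for each mount point."""
    results = []
    for mount in mounts:
        usage = shutil.disk_usage(mount)
        # Like df's Use%, divide by used + free so that blocks reserved for
        # the superuser count as unavailable.
        denom = usage.used + usage.free
        fill = usage.used / denom if denom else 0.0
        results.append((mount, fill, classify(fill)))
    return results


if __name__ == "__main__":
    # Hypothetical mount list; add /var, /var/log etc. where they are separate filesystems.
    for mount, fill, level in check_mounts(["/"]):
        print(f"{level:8} {mount} {fill:.0%}")
```

Run from cron or a systemd timer, anything other than OK would be forwarded to whatever alerting channel you already use.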

Solution 3

Has there been any research...into either the percentage or absolute amount of free space required by specific combinations of operating systems, filesystem, and storage technology...?

In 20 years of system administration, I've never encountered research detailing the free space requirements of various configurations. I suspect this is because computers are so variously configured that such research would be impractical, given the sheer number of possible system configurations.

To determine how much free space a system requires, one must account for two variables:

- The minimum space required to prevent unwanted behavior, which itself may have a fluid definition.

  Note that it's unhelpful to define required free space by this definition alone, as that's the equivalent of saying it's safe to drive 80 mph toward a brick wall until the very point at which you collide with it.

- The rate at which storage is consumed, which dictates that an additional, variable amount of space be reserved, lest the system degrade before the admin has time to react.
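The two variables combine into a simple alerting rule: raise the alarm while there is still enough headroom above the minimum to absorb the consumption rate for as long as the admin needs to react. A minimal sketch, assuming a roughly constant consumption rate; the function names and the numbers in the usage note are hypothetical.

```python
import math


def alert_threshold(min_free: float, consumption_per_day: float, reaction_days: float) -> float:
    """Free space at which to alert so the minimum is not breached before action is taken."""
    return min_free + consumption_per_day * reaction_days


def days_until_minimum(free: float, min_free: float, consumption_per_day: float) -> float:
    """Days left before free space drops to min_free, assuming a constant rate."""
    if consumption_per_day <= 0:
        return math.inf  # not growing: never reaches the minimum at this rate
    return max(free - min_free, 0.0) / consumption_per_day
```

For example, with a 20 GB minimum, 5 GB consumed per day and a 3-day lead time to add storage, `alert_threshold(20, 5, 3)` gives 35 GB; a volume with 60 GB free would reach the minimum in `days_until_minimum(60, 20, 5)` = 8 days.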

The specific combination of OS, filesystems, underlying storage architecture, along with application behavior, virtual memory configuration, etc. creates quite the challenge to one wishing to provide definitive free space requirements.

That's why there are so many "nuggets" of advice out there. You'll notice that many of them make a recommendation around a specific configuration. For example, "If you have an SSD that's subject to performance issues when nearing capacity, stay above 20% free space."

Because there is no simple answer to this question, the correct approach to identify your system's minimum free space requirement is to consider the various generic recommendations in light of your system's specific configuration, then set a threshold, monitor it, and be willing to adjust it as necessary.

Or you could just keep at least 20% free space. Unless of course you have a 42 TB RAID 6 volume backed by a combination of SSDs and traditional hard disks and a pre-allocated swap file... (that's a joke for the serious folks.)

Solution 4

Of course, a drive itself (HDD or SSD alike) couldn't care less about what percentage of it is in use, apart from SSDs being able to erase their free space for you beforehand. Read performance will be exactly the same, and write performance may be somewhat worse on an SSD. Anyway, write performance is not that important on a nearly full drive, since there's no space left to write anything.

Your OS, filesystem and applications, on the other hand, will expect you to have free space available at all times. 20 years ago it was typical for an application to check how much space you had on the drive before trying to save your files there. Today, applications create temporary files without asking your permission, and typically crash or behave erratically when they fail to do so.

Filesystems have a similar expectation. For example, NTFS reserves a big chunk of your disk for the MFT, but still shows you this space as free. When you fill your NTFS disk above 80% of its capacity, you get MFT fragmentation, which has a very real impact on performance.

Furthermore, having free space does indeed help against fragmentation of regular files. Filesystems tend to avoid file fragmentation by finding the right place for each file depending on its size. On a nearly full disk they have fewer options, so they have to make poorer choices.

On Windows, you're also expected to have enough disk space for the swap file, which can grow when necessary. If it can't, you should expect your apps to be forcibly closed. Having very little swap space can indeed worsen performance.

Even if your swap has a fixed size, running completely out of system disk space can crash your system and/or make it unbootable (Windows and Linux alike), because the OS will expect to be able to write to disk during booting. So yes, hitting 90% disk usage should make you consider your pants to be on fire. More than once I have seen computers that failed to boot properly until recent downloads were removed to give the OS a little disk space.

Solution 5

For SSDs, some space should be left free, because otherwise the rewrite rate increases and hurts the disk's write performance. 80% full is probably a safe value for all SSDs; some of the latest models may work fine even with 90-95% of their capacity occupied.

https://www.howtogeek.com/165542/why-solid-state-drives-slow-down-as-you-fill-them-up/

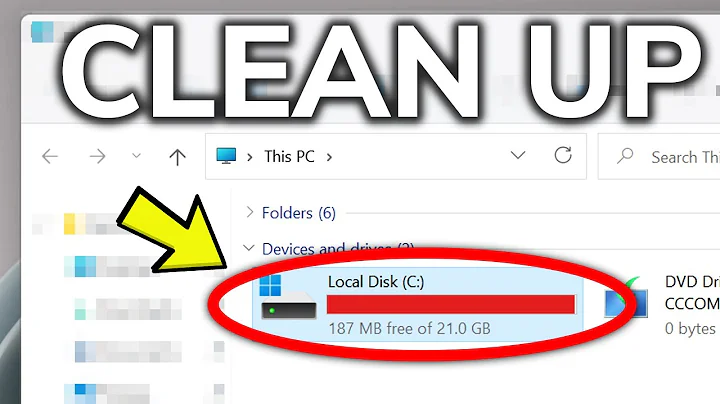

Related videos on Youtube

Giacomo1968

Updated on September 18, 2022

Comments

Giacomo1968 (over 1 year ago): In the informal (i.e. journalistic) technology press, and in online technology blogs and discussion forums, one commonly encounters anecdotal advice to leave some amount of space free on hard disk drives or solid state drives. Various reasons for this are given, or sometimes no reason at all. As such, these claims, while perhaps reasonable in practice, have a mythical air about them. For instance:

- “Once your disk(s) are 80% full, you should consider them full, and you should immediately be either deleting things or upgrading. If they hit 90% full, you should consider your own personal pants to be on actual fire, and react with an appropriate amount of immediacy to remedy that.” (Source.)

- “To keep the garbage collection going at peak efficiency, traditional advice is aim to keep 20 to 30 percent of your drive empty.” (Source.)

- “I've been told I should leave about 20% free on a HD for better performance, that a HD really slows down when it's close to full.” (Source.)

- “You should leave room for the swap files and temporary files. I currently leave 33% percent free and and vow to not get below 10GB free HDD space.” (Source.)

- “I would say typically 15%, however with how large hard drives are now adays, as long as you have enough for your temp files and swap file, technically you are safe.” (Source.)

- “I would recommend 10% plus on Windows because defrag won't run if there is not about that much free on the drive when you run it.” (Source.)

- “You generally want to leave about 10% free to avoid fragmentation.” (Source.)

- “If your drive is consistently more than 75 or 80 percent full, upgrading to a larger SSD is worth considering.” (Source.)

Has there been any research, preferably published in a peer-reviewed journal, into either the percentage or absolute amount of free space required by specific combinations of operating systems, filesystem, and storage technology (e.g. magnetic platter vs. solid state)? (Ideally, such research would also explain the reason to not exceed the specific amount of used space, e.g. in order to prevent the system running out of swap space, or to avoid performance loss.)

If you know of any such research, I would be grateful if you could answer with a link to it plus a short summary of the findings. Thanks!

Ramhound (over 6 years ago): Free space doesn’t have a performance hit if you're using mechanical drives, and, honestly, the firmware on SSDs today is so efficient that it isn’t true for them anymore either.

Abdul (over 6 years ago): What I don't get is the swap argument. Why would I leave a percentage of the HDD's capacity, and not just 100% of the RAM's capacity?

AFH (over 6 years ago): I don't know about research findings, but I know about my own findings. There will be no performance penalty (apart from marginally slower directory accesses) on a nearly full drive if all its files are defragmented. The problem is that many defragmenters optimise file fragmentation, but in the process leave the free space even more fragmented, so that new files become immediately fragmented. Free space fragmentation becomes much worse as discs become full.

AFH (over 6 years ago): @Abdul - A lot of the advice on swap file size is misleading. The key requirement is to have enough memory (real and virtual) for all the programs you want to have active at a time, so the less RAM you have, the more swap you need. Thus taking a proportion of the RAM size (double is often suggested) is wrong, other than as an arbitrary initial size until you find out how much you really need. Find out how much memory is used when your system is busiest, then double it and subtract the RAM size: you never want to run out of swap space.
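AFH's rule of thumb translates directly into arithmetic. A minimal sketch; the function name is hypothetical, and "peak memory used" is taken to mean total memory in use (RAM plus swap) when the system is busiest, in the same unit as the RAM size.

```python
def suggested_swap(peak_memory_used: float, ram: float) -> float:
    """Swap size per the rule above: double the peak memory use, minus RAM.

    Clamped at zero, since a machine whose doubled peak fits in RAM needs no
    swap by this rule (hibernation, raised in another comment, may still
    require swap at least as large as RAM).
    """
    return max(2 * peak_memory_used - ram, 0)
```

For instance, a machine with 8 GB of RAM that peaks at 12 GB in use would get `suggested_swap(12, 8)` = 16 GB of swap.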

enkryptor (over 6 years ago): Could you please add which filesystem/OS you are asking about?

Baldrickk (over 6 years ago): On swap: it should be at least as large as your RAM if you are going to hibernate the machine. This is because swap is where your memory contents are saved. Ideally, you want it bigger, so you can hibernate while using swap, if needed.

phuclv (over 6 years ago): @Ramhound I doubt that. The less space left on the disk, the higher the chance that new files will be fragmented, which reduces performance.

PCARR (over 6 years ago): 10% HDD, 25% SSD.

The Onin (over 6 years ago): empties his trash can

Eugen Rieck (over 6 years ago): washingtonpost.com/news/morning-mix/wp/2015/03/27/… and friends. I simply don't believe them.

David (over 6 years ago): I think this really depends on what you are using the drive for. If you need to add and remove tons of data from the hard drive in large segments, I would leave a decent amount of free space based on the size of the files you need to move around. 10-20% seems a reasonable general suggestion, but I have nothing to support that apart from personal experience.

Ramhound (over 6 years ago): @LưuVĩnhPhúc - I don't agree there is a huge hit on performance from file fragmentation on SSDs

Admin (over 6 years ago): @EugenRieck, see Jon Turney's "End of the peer show" (New Scientist, 22 Sept 1990). Peer review is known to be imperfect, but there are few better options. Even a mediocre & erroneous paper should be more falsifiable than a vague, passing claim in a blog or forum post, making it a better starting point for understanding.

Eugen Rieck (over 6 years ago): @sampablokuper Please don't get me wrong - I don't want to declare a superiority of Stack Overflow over peer-review Money makers. If you want to write a paper for one of these journals, go with sources from these journals. But if you want to keep production systems alive and well, go with Stack Overflow. These two worlds share no overlap.

phuclv (over 6 years ago): @Ramhound I mean on HDD, as you were saying "Free space doesn’t have a performance hit if you're using mechanical drives". Anyway, fragmentation will introduce a very small overhead on SSDs, because instead of reading the whole giant extent on ext4 and NTFS, the driver now has to determine which block to read next.

Dima (over 6 years ago): Important thing: if it is the drive where software constantly performs operations, e.g. C: with the OS installed, you should care about free space. If it is a storage drive, e.g. a drive with photos and videos, you can safely fill it up to 100%.

Admin (over 6 years ago): @EugenRieck: "peer-review Money makers"; some publishers are more ethical than others. (In case you are wondering, yes I know the tragedy of U.S. v. Aaron Swartz.) "These two worlds share no overlap." Happily, they do. At universities & elsewhere, I see sysadmins & academics alike benefit from both SE & PR. Please let's stay on-topic from now on, thanks :)

barbecue (over 6 years ago): It's important to draw a distinction between the performance impact of a data storage disk being full and the impact of an operating system boot volume being full, especially for Windows OSes. The answers are completely different for the two scenarios.

Admin (over 6 years ago): This is helpful: it is more detailed, and has greater explanatory power, than the typical anecdote. I upvoted it accordingly. But I really want more solid evidence than just, "someone on the internet says that this is their experience".

Admin (over 6 years ago): Thanks for the answer :) I'd like to take up one of your points: "It's not necessary to justify advice to leave some free space since the consequence of a storage depleted machine is self-evident." No, it isn't self-evident. It surprises people more than you might expect. And different combinations of operating system, filesystem, etc, are likely to respond to this situation in different ways: some might warn; some might fail without warning; who knows? So, it would be great to shine more light on this. Hence my question :)

I say Reinstate Monica (over 6 years ago): When I assert it's self-evident there are consequences of a storage depleted machine, I'm not describing those consequences, but rather asserting that storage depleted machines always experience a consequence. As I attempt to prove in my answer, the nature of those consequences, and the "best" free space amount to avoid them, is highly configuration-specific. I suppose one could try to catalog them all, but I think that would be more confusing than helpful.

I say Reinstate Monica (over 6 years ago): Also, if you do mean to ask how specific configurations are likely to react to low disk space (e.g. with warnings, performance issues, failures, etc.) please edit your question accordingly.

Pysis (over 6 years ago): While reading this answer, I think an important note is that there is no 'end-all' answer, and that you may find more of the details you were looking for by thinking about each use case. I definitely understood how to solve the problem better here when Eugen listed what significant processes might use that last available space.

chrylis -cautiouslyoptimistic- (over 6 years ago): The first point isn't trivial now that the systemd cancer has eaten most distros. /var fills up and your server falls over.

Eugen Rieck (over 6 years ago): @chrylis While I agree with you on the core of the problem, I tend to use a separate file system for /var and another for /var/log to even out the playing field. In addition to that, df monitoring is important - I ought to edit that into my answer.

UTF-8 (over 6 years ago): You should point out that how much of your storage space you can use doesn't depend on the OS. One could currently interpret it like that. If you were to use NTFS on Linux for some odd reason, you probably shouldn't use 90% of your storage space, even though using 90% on an ext4 FS isn't a problem at all (with HDDs).

Eugen Rieck (over 6 years ago): @UTF-8 I understand your point - the only reason I can think of to use NTFS on Linux, though, is Windows compatibility, and I wrote about Windows and a 70% rule of thumb earlier on.

Brad (over 6 years ago): Mod up - SSDs are very different from HDDs. Though the exact mechanism differs between drives, SSDs write [even identically placed] data to different [free] places on the disk and use subsequent garbage collection to prevent excessive wear on one spot (this is called "wear leveling"). The fuller the disk, the less effectively it can do this.

Ott Toomet (over 6 years ago): Eugen Rieck--I hate to say it, but your answer is about a) what you do; and b) why it is useful. I don't see any pointers toward relevant research, e.g. what happens if you fill more than 70% on a Windows system. Note that the original question was about actual (not necessarily peer-reviewed) research.

Dmitry Grigoryev (over 6 years ago): This is only true if no files are ever removed from the drive. In real life, by the time you reach 90% capacity, you'll have a bunch of free spots scattered all over the drive.

Stuart Brock (over 6 years ago): Also worth noting that the reason some "newer" disks work fine is that they already provision a decent amount of empty space that the user doesn't have access to (especially true of "enterprise" SSDs). This means they always have "free blocks" to write data to without the "read-erase-rewrite" cycle that slows down "full" SSDs.

Wes Toleman (over 6 years ago): I don't mean to say that the hard disk controller will refrain from filling gaps, but that, as a drive fills, more inner sectors will be used. A disk at 90% capacity will use more inner sectors than one at only 55%. Seek time has a great impact on performance, so this is mainly a benefit to large contiguous files. Greater available space does, however, mean that there is more opportunity to store large files contiguously.

MSalters (over 6 years ago): Keeping 25% unpartitioned and another 20% free on an SSD is completely pointless, even counterproductive. The underlying reason is that wear levelling on an SSD decouples physical and logical block numbers. The 25% unpartitioned space and 20% free inside the partition are both unused logical numbers, adding up to 40% unused logical numbers (25% plus 20% of the remaining 75%). It would be much better to partition 100% and leave 40% free. This gives the file system (dealing in logical numbers) more space to play with, while the SSD doesn't care at all.

MSalters (over 6 years ago): Note that all SSDs already do this to a degree, and hide it from you. This is done as part of wear leveling. Leaving more free space gives more room for wear leveling. This can be beneficial for a disk that's often written to, especially if it's a cheap TLC model of SSD. Then again, you lose some of the benefits of a cheap disk if you have to leave 20% free. Finally, new disks certainly aren't better. The first generation of SSDs were SLC disks, and had 100,000 erasure cycles. Current TLC can be as low as 5,000 - that's 20 times worse.

Eugen Rieck (over 6 years ago): @MSalters It is not completely pointless - you just missed the point. Keeping 25% free on the partition level makes 100% sure that, even if the partitioned space runs full (for whatever reason), there is enough unused space for the GC in the firmware to provide free blocks in a timely manner under high-write workloads. So while we reduce FS headroom, we get a 100% assured percentage of currently unused blocks for the firmware to play with. Since current firmware and controllers are much worse than file systems, this makes good sense.

can-ned_food (over 6 years ago): @chrylis Monitoring your /var/log for capacity was necessary even with Slackware 14, which did not use SystemD.

chrylis -cautiouslyoptimistic- (over 6 years ago): @can-ned_food But you could boot into single-user mode, and your syslog usually didn't try to persist the kernel log at DEBUG level.

Thorbjørn Ravn Andersen (over 6 years ago): @WesToleman The hard disk controller is not responsible for deciding where things go; it just maps sector numbers to physical locations. The operating system is, notably the file system.

camelccc (over 6 years ago): You have clearly never taken a ZFS pool to 100%, which is not something that should be done intentionally. It's a pain: you can't delete anything, and you will have to truncate some files to get back write access at all, even to be able to delete anything.

iheggie (over 6 years ago): Interestingly enough, no one has mentioned that the bandwidth at the end cylinder is around half that of the start cylinder, so deliberately keeping your usage low would be of speed benefit. See en.wikipedia.org/wiki/… for references.

iheggie (over 6 years ago): I would add three extra questions: 3. What are the most likely and worst-case changes to disk consumption based on future growth predictions of your business? 4. What is the cost to the business if you run out of disk space? 5. How much lead time do you need to significantly increase your disk capacity? One of my clients has 250TB of ZFS RAID onsite; in their case, they would need to know a few weeks ahead of significant changes, as it takes around a day to add each larger disk into the RAID array and retire the smaller one.

Eugen Rieck (over 6 years ago): @theggie Short-stroking has become rather obsolete - capacity (per $) is the single biggest advantage of HDDs over SSDs, so sacrificing some of that has become unusual. Bandwidth is also much less of a problem than latency: a simple 10-disk RAID6 of spinning rust can easily hit 1GByte/s even on the innermost cylinders.

jpmc26 (over 6 years ago): @sampablokuper Solid piece of advice for you: academic priorities are vastly different from day to day operations priorities. That's why your college degree didn't actually prepare you for this issue. Academics rarely care this much about the day to day practical problems of these systems. Always check your info for sanity, but aside from that, trust the people who actually successfully run these systems over some pie in the sky paper. You also have the benefit of having crowd sourced your info, which greatly reduces the likelihood that you're getting garbage info.

ivan_pozdeev (over 6 years ago): "Bibliography" is a tad useless without in-text references.