How to capture all visited URLs on the command line?

Network traffic capturing is possible but messy. There are many applications running on my computer that communicate over HTTP, and they would fill a log with their automated API calls, which wouldn't reflect what I was visiting.

And as you correctly say, it won't show you HTTPS URLs, because the request line that carries the URL is encrypted.

I would target the browser directly. Browsers keep a decent enough history in an SQLite3 database, which makes querying it pretty simple once you have the sqlite3 package installed (sudo apt-get install sqlite3). You can simply run:

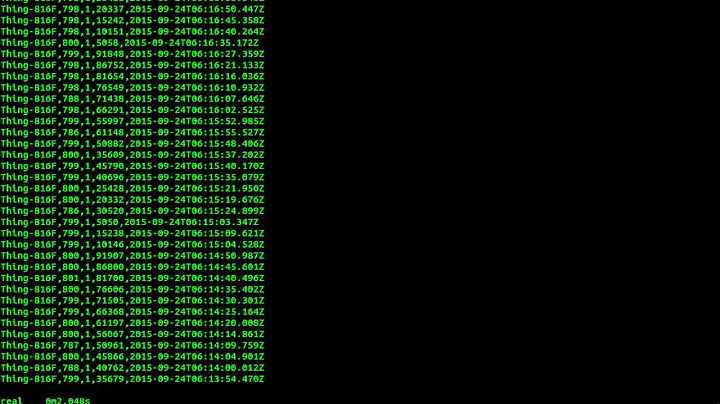

sqlite3 ~/.mozilla/firefox/*.default/places.sqlite "select url from moz_places order by last_visit_date desc limit 10;"

And that will output the last 10 URLs you visited.
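If you want the same query from a script rather than the sqlite3 CLI, here is a minimal Python sketch using the standard-library sqlite3 module. It opens places.sqlite read-only through an SQLite URI, skips pages with no recorded visit, and converts last_visit_date, which Firefox stores as microseconds since the Unix epoch. The names firefox_recent_urls and prtime_to_datetime are hypothetical, and the path is whatever the profile glob above resolves to on your machine.

```python
import sqlite3
from datetime import datetime, timezone
from pathlib import Path


def firefox_recent_urls(places_path, limit=10):
    """Return up to `limit` (url, last_visit_date) rows, newest visit first."""
    # Open read-only through an SQLite URI so the script cannot modify the profile.
    uri = Path(places_path).resolve().as_uri() + "?mode=ro"
    con = sqlite3.connect(uri, uri=True)
    try:
        return con.execute(
            "SELECT url, last_visit_date FROM moz_places "
            "WHERE last_visit_date IS NOT NULL "  # skip pages with no recorded visit
            "ORDER BY last_visit_date DESC LIMIT ?",
            (limit,),
        ).fetchall()
    finally:
        con.close()


def prtime_to_datetime(value):
    """Firefox stores last_visit_date as microseconds since the Unix epoch."""
    return datetime.fromtimestamp(value / 1_000_000, tz=timezone.utc)
```

Looping over firefox_recent_urls(path) and printing only the url of each row gives the one-URL-per-line format asked for in the question. If the open fails with "database is locked", the copy approach described below for Chrome should apply here too.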

Chrome has a similar setup and can be queried equally simply:

sqlite3 ~/.config/google-chrome/Default/History "select url from urls order by last_visit_time desc limit 10;"
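Chrome's timestamps use a different epoch: last_visit_time counts microseconds since 1601-01-01 UTC rather than since 1970. A minimal Python sketch under that assumption, returning a readable time alongside each URL (chrome_recent_visits and chrome_time_to_datetime are hypothetical names). It reads the live file, so while Chrome is running it may hit the locking problem described below.

```python
import sqlite3
from datetime import datetime, timedelta, timezone

# Chrome counts last_visit_time in microseconds since 1601-01-01 00:00 UTC
# (the Windows FILETIME epoch), not since the Unix epoch.
CHROME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def chrome_time_to_datetime(value):
    """Convert a Chrome History timestamp to a timezone-aware UTC datetime."""
    return CHROME_EPOCH + timedelta(microseconds=value)


def chrome_recent_visits(history_path, limit=10):
    """Return (url, visit datetime) pairs from Chrome's History DB, newest first."""
    con = sqlite3.connect(history_path)
    try:
        rows = con.execute(
            "SELECT url, last_visit_time FROM urls "
            "ORDER BY last_visit_time DESC LIMIT ?",
            (limit,),
        ).fetchall()
    finally:
        con.close()
    return [(url, chrome_time_to_datetime(ts)) for url, ts in rows]
```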

This works but I had some database locking issues with Chrome. It seems much more reliable in Firefox. The only way around the database lock I found was to make a copy of the database. This works even when the main DB is locked up and shouldn't cause issues:

cp ~/.config/google-chrome/Default/History history.tmp

sqlite3 history.tmp "select url from urls order by last_visit_time desc limit 10;"

rm history.tmp
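The same copy, query, delete sequence can be done from Python so the temporary file is always cleaned up, even if the query fails. This is a sketch: query_copy is a hypothetical helper, and copying the "-wal" file alongside the database is an assumption for browsers that use SQLite's write-ahead log (Firefox's places.sqlite normally does), where the most recent visits may not have reached the main file yet.

```python
import os
import shutil
import sqlite3
import tempfile


def query_copy(db_path, sql, params=()):
    """Run `sql` against a temporary copy of `db_path`, so the browser's lock
    on the live file does not block the read. The copy is always deleted."""
    with tempfile.TemporaryDirectory() as tmp:
        copy_path = os.path.join(tmp, "history.tmp")
        shutil.copyfile(db_path, copy_path)
        # Assumption: in WAL mode recent writes may still sit in the "-wal"
        # file, so copy it too; SQLite replays it when the copy is opened.
        wal = db_path + "-wal"
        if os.path.exists(wal):
            shutil.copyfile(wal, copy_path + "-wal")
        con = sqlite3.connect(copy_path)
        try:
            return con.execute(sql, params).fetchall()
        finally:
            con.close()
```

For Chrome you would pass os.path.expanduser("~/.config/google-chrome/Default/History") and the same SELECT as above, with the limit passed as a parameter.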

This approach might be advisable for Firefox too. Firefox doesn't appear to lock the file (or takes shorter locks), but I'm not sure what would happen if you caught it mid-write.

To turn this into a live display, you would either need to poll the database (it's not a very involved SQL query, so that might be fine) or use something like inotifywait to monitor the database file for changes.
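A polling version might look like the following minimal sketch. It assumes Chrome's schema (urls.url, urls.last_visit_time); for Firefox you would swap in moz_places and last_visit_date. The names fetch_new and follow are hypothetical. It remembers the newest timestamp seen, prints only rows newer than that, one URL per line, and flushes after each poll so a downstream pipe sees them promptly. Because a revisit updates last_visit_time on the existing row, revisited pages are printed again. If Chrome's lock gets in the way, point it at a fresh copy of the file on each poll, as described above.

```python
import sqlite3
import sys
import time

# Assumes Chrome's History schema; for Firefox use moz_places / last_visit_date.
NEW_VISITS_SQL = (
    "SELECT url, last_visit_time FROM urls "
    "WHERE last_visit_time > ? ORDER BY last_visit_time"
)
LATEST_SQL = "SELECT COALESCE(MAX(last_visit_time), 0) FROM urls"


def _query(db_path, sql, params=()):
    con = sqlite3.connect(db_path)
    try:
        return con.execute(sql, params).fetchall()
    finally:
        con.close()


def fetch_new(db_path, since):
    """Return (url, timestamp) rows visited strictly after `since`, oldest first."""
    return _query(db_path, NEW_VISITS_SQL, (since,))


def follow(db_path, interval=2.0, out=sys.stdout, max_polls=None):
    """Write each newly visited URL on its own line, polling every `interval` s.

    max_polls=None polls forever; a number stops after that many polls.
    """
    # Start at the newest existing visit so old history is not replayed.
    since = _query(db_path, LATEST_SQL)[0][0]
    polls = 0
    while max_polls is None or polls < max_polls:
        for url, ts in fetch_new(db_path, since):
            out.write(url + "\n")
            since = max(since, ts)
        out.flush()  # push output through a pipe right away
        polls += 1
        time.sleep(interval)
```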


redanimalwar

Updated on September 18, 2022

Comments

-

redanimalwar over 1 year

I'd like to capture all the URLs I visit in the browser live on the command line, so I can pipe them to another program. How could I do this?

The format should be just one URL per line, nothing else: no tabs, no spaces, etc.

This is what I have so far, but the tab removal is not working. I saw a comment (at the very bottom) telling someone who had problems piping it to grep that they should escape it. I don't really understand this.

sudo httpry -F -i eth0 -f host,request-uri | tr -d '\t'

Also, even if this works, it won't capture HTTPS, right? Is there another way to do this that includes HTTPS? I am willing to use browser plugins for Firefox and Chromium if they exist. I just want to send all visited URLs to a script.
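Rather than only deleting the tab, a small filter could turn each httpry record into a full URL. This is a minimal Python sketch, assuming httpry prints one record per line with the fields from -f host,request-uri separated by a tab; lines that don't split into exactly two fields (such as startup banners) are skipped. The names to_url and main are hypothetical, and like httpry itself it only sees plain HTTP.

```python
import sys


def to_url(line):
    """Turn a 'host<TAB>request-uri' line into 'http://host/path', or None."""
    fields = line.rstrip("\n").split("\t")
    if len(fields) != 2:
        return None  # not a two-field record, e.g. a banner or blank line
    host, uri = fields
    if uri.startswith("http://"):
        return uri  # proxy-style requests already carry an absolute URI
    return "http://" + host + uri


def main(stream=sys.stdin, out=sys.stdout):
    """Copy one URL per line from `stream` to `out`, flushing each line."""
    for line in stream:
        url = to_url(line)
        if url:
            out.write(url + "\n")
            out.flush()
```

Saved as, say, httpry_urls.py (a hypothetical file name), it could sit in the pipeline as: sudo httpry -F -i eth0 -f host,request-uri | python3 -c 'import httpry_urls; httpry_urls.main()'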

Maybe I could parse the browsers' histories live and pipe that?

-

redanimalwar about 10 years

Thanks, but I'd still like to know how to avoid these issues with Chrome. Otherwise this doesn't feel complete enough for me to accept.

-

Oli about 10 years

@redanimalwar You can copy the database file out for Chrome to circumvent the lock. I've tested this and it works.

-

redanimalwar about 10 years

OK, I guess since I have to watch the file for changes anyway to rerun the command, I might as well copy it after it changes.
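That watch-then-copy loop can be sketched with the standard library alone by polling os.stat instead of using inotifywait. The helpers signature and wait_for_change are hypothetical: they treat the database as changed when the modification time or size of the file, or of its "-wal" file (an assumption for WAL-mode databases), differs from the last snapshot.

```python
import os
import time


def signature(db_path):
    """Snapshot (mtime_ns, size) of the database and of its -wal file, if any."""
    sig = []
    for path in (db_path, db_path + "-wal"):
        try:
            st = os.stat(path)
            sig.append((st.st_mtime_ns, st.st_size))
        except FileNotFoundError:
            sig.append(None)  # file absent, e.g. no WAL in use right now
    return tuple(sig)


def wait_for_change(db_path, last_sig, interval=1.0, timeout=None):
    """Block until signature(db_path) differs from last_sig and return it;
    return None if `timeout` seconds pass without a change."""
    deadline = None if timeout is None else time.monotonic() + timeout
    while True:
        sig = signature(db_path)
        if sig != last_sig:
            return sig
        if deadline is not None and time.monotonic() >= deadline:
            return None
        time.sleep(interval)
```

A caller would keep the last snapshot, call wait_for_change in a loop, and copy and query the database each time it returns a new one.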