How to convert an emoticon specified by a U+xxxxx code to UTF-8?

Solution 1

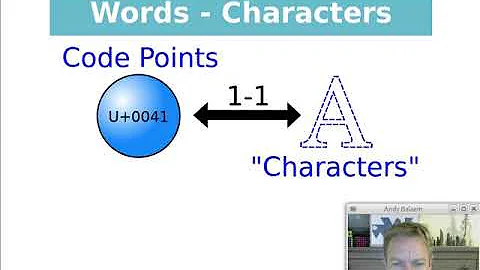

UTF-8 is a variable-length encoding of Unicode. It is designed to be a superset of ASCII. See Wikipedia for details of the encoding. \x00 \x01 \xF6 \x15 would be the UCS-4BE or UTF-32BE encoding.
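To make the relationship concrete: UTF-8 spreads the code point's bits over one to four bytes, using the patterns 0xxxxxxx, 110xxxxx 10xxxxxx, 1110xxxx 10xxxxxx 10xxxxxx and 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx. Here is a minimal POSIX sh sketch of that bit packing. The name utf8_hex is hypothetical, and the function assumes a valid code point given as bare hex digits, with no range or surrogate checks:

```shell
# utf8_hex: hypothetical helper; prints the UTF-8 bytes (in hex) of a code
# point given as bare hex digits, e.g. "utf8_hex 1F615".
# No validation: assumes a valid scalar value (not a surrogate, <= 10FFFF).
utf8_hex() {
  cp=$((0x$1))
  if [ "$cp" -lt 128 ]; then
    # U+0000..U+007F: 0xxxxxxx (plain ASCII)
    printf '%02x\n' "$cp"
  elif [ "$cp" -lt 2048 ]; then
    # U+0080..U+07FF: 110xxxxx 10xxxxxx
    printf '%02x %02x\n' $((0xC0 | (cp >> 6))) $((0x80 | (cp & 0x3F)))
  elif [ "$cp" -lt 65536 ]; then
    # U+0800..U+FFFF: 1110xxxx 10xxxxxx 10xxxxxx
    printf '%02x %02x %02x\n' $((0xE0 | (cp >> 12))) \
      $((0x80 | ((cp >> 6) & 0x3F))) $((0x80 | (cp & 0x3F)))
  else
    # U+10000..U+10FFFF: 11110xxx 10xxxxxx 10xxxxxx 10xxxxxx
    printf '%02x %02x %02x %02x\n' $((0xF0 | (cp >> 18))) \
      $((0x80 | ((cp >> 12) & 0x3F))) $((0x80 | ((cp >> 6) & 0x3F))) \
      $((0x80 | (cp & 0x3F)))
  fi
}
```

Running utf8_hex 1F615 prints f0 9f 98 95. These are the same bytes as the hexdump in the question. The 21 significant bits of 0x1F615 simply fill the x positions of the 4-byte pattern.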

To get from the Unicode code point to the UTF-8 encoding, assuming the locale's charmap is UTF-8 (see the output of locale charmap), it's just:

$ printf '\U1F615\n'
😕
$ echo -e '\U1F615'
😕
$ confused_face=$'\U1F615'

The last form, $'...', will be in the next version of the POSIX standard.

AFAIK, that syntax was introduced in 2000 by the stand-alone GNU printf utility (as opposed to the printf builtin of the GNU shell). It was brought to the echo/printf/$'...' builtins first by zsh in 2003, then by ksh93 in 2004 and bash in 2010 (though it did not work properly there until 2014). It was obviously inspired by other languages.

ksh93 also supports it as printf '\x1f615\n' and printf '\u{1f615}\n'.

The \uXXXX and \UXXXXXXXX escapes ($'\uXXXX' and $'\UXXXXXXXX' in shells) are supported by zsh, bash, ksh93, mksh, FreeBSD sh, GNU printf and GNU echo.

Some require all the digits (as in \U0001F615 as opposed to \U1F615) though that's likely to change in future versions as POSIX will allow fewer digits. In any case, you need all the digits if the \UXXXXXXXX is to be followed by hexadecimal digits as in \U0001F615FOX, as \U1F615FOX would have been $'\U001F615F'OX.
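To avoid counting digits by hand, printf's %08X conversion can zero-pad a U+xxxxx code to the full 8-digit \UXXXXXXXX form. Below is a small POSIX sh sketch. The helper name to_U_escape is hypothetical. It outputs the escape sequence as literal text and does not interpret it:

```shell
# to_U_escape: hypothetical helper; turns "U+1F615" (or "1F615") into the
# fully padded escape sequence \U0001F615, printed as literal text
to_U_escape() {
  hex=${1#U+}                        # strip an optional "U+" prefix
  printf '\\U%08X\n' "$((0x$hex))"   # "\\" in the format prints one backslash
}
```

In a UTF-8 locale, with a printf that understands \U (bash, zsh, GNU printf), you can then write printf "$(to_U_escape U+1F615)\n". This lets you paste the code straight from the Unicode charts.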

Some expand to the characters in the current locale's encoding at the time the string is parsed or at the time it is expanded, some only in UTF-8 regardless of the locale. If the character is not available in the current locale's encoding, the behaviour varies between shells.

So, for best portability, only use it in UTF-8 locales, use all the digits, and use it in $'...':

printf '%s\n' $'\U0001F615'

Note that:

LC_ALL=C.UTF-8; printf '%s\n' $'\U0001F615'

or:

{
  LC_ALL=C.UTF-8
  printf '%s\n' $'\U0001F615'
}

will not work in all shells (including bash), because $'\U0001F615' is parsed before LC_ALL is assigned. (Also note that there's no guarantee that a system will have a locale called C.UTF-8.)

You'd need:

LC_ALL=C.UTF-8; eval "confused_face=$'\U0001F615'"

Or:

LC_ALL=C.UTF-8
printf '%s\n' $'\U0001F615'

(not within a compound command or function).

For the reverse, to get from the UTF-8 encoding to the Unicode code-point, see this other question or that one.

$ unicode 😕
U+1F615 CONFUSED FACE
UTF-8: f0 9f 98 95 UTF-16BE: d83dde15 Decimal: &#128533;
😕
Category: So (Symbol, Other)
Bidi: ON (Other Neutrals)

$ perl -CA -le 'printf "%x\n", ord shift' 😕
1f615
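Going the other way by hand is just as mechanical. Strip the length-marker bits from the first byte and the 10 prefix from each continuation byte, then concatenate the remaining bits. Here is a minimal POSIX sh sketch. The helper name utf8_to_cp is hypothetical, and the function does not validate malformed input:

```shell
# utf8_to_cp: hypothetical helper; takes the UTF-8 bytes as hex arguments
# (as printed by hexdump or od) and prints the code point in U+ notation.
# No validation: assumes a single, well-formed UTF-8 sequence.
utf8_to_cp() {
  b=$((0x$1))
  shift
  if [ "$b" -lt 128 ]; then cp=$b             # 0xxxxxxx: ASCII
  elif [ "$b" -lt 224 ]; then cp=$((b & 31))  # 110xxxxx: keep 5 bits
  elif [ "$b" -lt 240 ]; then cp=$((b & 15))  # 1110xxxx: keep 4 bits
  else cp=$((b & 7))                          # 11110xxx: keep 3 bits
  fi
  for byte in "$@"; do                        # each 10xxxxxx byte adds 6 bits
    cp=$(( (cp << 6) | (0x$byte & 63) ))
  done
  printf 'U+%04X\n' "$cp"
}
```

utf8_to_cp f0 9f 98 95 prints U+1F615, which agrees with the perl one-liner.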

Solution 2

Here's a way to convert from UTF-32 (big endian) to UTF-8:

$ confused=$(echo -ne "\x00\x01\xF6\x15" | iconv -f UTF-32BE -t UTF-8)
$ echo "$confused"
😕

You'll notice your hex value 0x01F615 in there, padded with an extra leading 0 to fill 32 bits.

The Wikipedia page on UTF-8 explains the transformation from a Unicode codepoint to its UTF-8 representation very clearly. But trying to do it yourself in shell scripting might not be the best idea.

UTF-32 is fixed-width, and the correspondence between a code point and its UTF-32 representation is trivial: the value is the same.
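The UTF-32BE bytes are just the code point written as a 32-bit big-endian integer, so you can compute them from the U+ value instead of typing them by hand. Here is a POSIX sh sketch. The helper name utf32be_escape is hypothetical. It emits octal escapes because those work in any POSIX printf format, unlike \x:

```shell
# utf32be_escape: hypothetical helper; prints the 4 UTF-32BE bytes of a code
# point (given as bare hex digits) as octal escapes for a printf format
utf32be_escape() {
  cp=$((0x$1))
  # most significant byte first (big endian)
  printf '\\%03o\\%03o\\%03o\\%03o\n' \
    $(( (cp >> 24) & 255 )) $(( (cp >> 16) & 255 )) \
    $(( (cp >> 8) & 255 )) $(( cp & 255 ))
}
```

For 1F615 this gives \000\001\366\025, the octal form of \x00\x01\xF6\x15. With a printf that writes NUL bytes from \000 (bash's builtin or GNU printf), printf "$(utf32be_escape 1F615)" | iconv -f UTF-32BE -t UTF-8 should produce the same 😕 as the echo -ne pipeline.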

Solution 3

A nice way to do it in your head or on paper:

1. Figure out how many bytes it will be: values under U+0080 are one byte, else under U+0800 are 2 bytes, else under U+10000 are 3 bytes, else 4 bytes. In your case, 4 bytes.
2. Convert hex to octal: 0373025.
3. Starting at the end, peel off 2 octal digits at a time to get a sequence of octal values: 037 030 025.
4. If you have fewer octal values than the expected number of bytes, add an extra 0 at the beginning: 000 037 030 025.
5. For all but the first, add 0200 to get: 000 0237 0230 0225.
6. For the first, add 0300 if the expected length is 2, 0340 if it's 3, or 0360 if it's 4, to get: 0360 0237 0230 0225.
7. Now write as a string of octal escapes: \360\237\230\225. Optionally convert back to hex if you want.
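The paper method above translates directly into POSIX sh. The helper name utf8_octal below is hypothetical. Taking the value modulo 64 is the same as peeling off the last two octal digits, and dividing by 64 drops them, so no explicit hex-to-octal step is needed:

```shell
# utf8_octal: hypothetical helper following the paper method: takes a code
# point as bare hex digits and prints the UTF-8 bytes as octal escapes.
utf8_octal() {
  cp=$((0x$1))
  if [ "$cp" -lt 128 ]; then       # one byte: the value itself
    printf '\\%03o\n' "$cp"
    return
  fi
  # number of bytes, and the value to add to the first byte
  if [ "$cp" -lt 2048 ]; then n=2 lead=192      # 192 = 0300
  elif [ "$cp" -lt 65536 ]; then n=3 lead=224   # 224 = 0340
  else n=4 lead=240                              # 240 = 0360
  fi
  # peel off two octal digits (6 bits) at a time from the end,
  # adding 0200 (128) to each of these continuation bytes
  out=
  i=1
  while [ "$i" -lt "$n" ]; do
    out=$(printf '\\%03o' $((128 + cp % 64)))$out
    cp=$((cp / 64))
    i=$((i + 1))
  done
  # whatever is left (possibly 0) plus the lead value is the first byte
  printf '\\%03o%s\n' $((lead + cp)) "$out"
}
```

utf8_octal 1F615 prints \360\237\230\225, matching the hand calculation. Octal escapes in a printf format are POSIX, so printf "$(utf8_octal 1F615)\n" should display 😕 with any printf on a UTF-8 terminal.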


Alex Ryan

Updated on September 18, 2022


Alex Ryan over 1 year

Emoticons seem to be specified using a format of U+xxxxx, wherein each x is a hexadecimal digit. For example, U+1F615 is the official Unicode Consortium code for the "confused face" 😕.

As I am often confused, I have a strong affinity for this symbol.

The U+1F615 representation is confusing to me because I thought the only encodings possible for Unicode characters required 8, 16, 24 or 32 bits, whereas 5 hex digits require 5x4=20 bits.

I've discovered that this symbol seems to be represented by a completely different hex string in bash:

$echo -n 😕 | hexdump
0000000 f0 9f 98 95
0000004
$echo -e "\xf0\x9f\x98\x95"
😕
$PS1=$'\xf0\x9f\x98\x95 >'
😕 >

I would have expected U+1F615 to convert to something like \x00 \x01 \xF6 \x15.

I don't see the relationship between these 2 encodings?

When I look up a symbol in the official Unicode Consortium list, I would like to be able to use that code directly without having to manually convert it in this tedious fashion, i.e.:

- finding the symbol on some web page

- copying it to the clipboard of the web browser

- pasting it in bash to echo through a hexdump to discover the REAL code.

Can I use this 20-bit code to determine what the 32-bit code is?

Does a relationship exist between these 2 numbers?


Stéphane Chazelas over 8 years

@kasperd, thanks. Yes, it's worth noting. I've included that in the answer.
