How to initialize weights in PyTorch?

Solution 1

Single layer

To initialize the weights of a single layer, use a function from torch.nn.init. For instance:

conv1 = torch.nn.Conv2d(...)

torch.nn.init.xavier_uniform_(conv1.weight)

Alternatively, you can modify the parameters by writing to conv1.weight.data (which is a torch.Tensor). Example:

conv1.weight.data.fill_(0.01)

The same applies for biases:

conv1.bias.data.fill_(0.01)
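A minimal, self-contained sketch of the single-layer case follows. The layer shape (3 input channels, 16 output channels, 3x3 kernel) is an assumption chosen only for illustration, and the in-place initializers from torch.nn.init are used instead of writing to .data.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical layer shape, chosen only for illustration.
conv1 = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3)

# In-place initializers carry a trailing underscore.
nn.init.xavier_uniform_(conv1.weight)
nn.init.constant_(conv1.bias, 0.01)

# Xavier uniform samples from [-a, a] with a = sqrt(6 / (fan_in + fan_out)).
fan_in = 3 * 3 * 3     # in_channels * kernel_height * kernel_width
fan_out = 16 * 3 * 3   # out_channels * kernel_height * kernel_width
bound = math.sqrt(6.0 / (fan_in + fan_out))

# Largest absolute weight next to the theoretical bound.
print(conv1.weight.abs().max().item(), bound)
print(conv1.bias.unique())
```

The printed maximum should sit just under the bound, which is a quick way to confirm that the initializer actually ran on the tensor you meant.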

nn.Sequential or custom nn.Module

Pass an initialization function to torch.nn.Module.apply. It will initialize the weights in the entire nn.Module recursively.

apply(fn): Applies fn recursively to every submodule (as returned by .children()) as well as self. Typical use includes initializing the parameters of a model (see also torch-nn-init).

Example:

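import torch
import torch.nn as nn

def init_weights(m):
    # only touch Linear layers; other module types are left as they are
    if isinstance(m, nn.Linear):
        torch.nn.init.xavier_uniform_(m.weight)
        m.bias.data.fill_(0.01)

net = nn.Sequential(nn.Linear(2, 2), nn.Linear(2, 2))
net.apply(init_weights)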
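To make the recursion visible, here is a small sketch that records every module apply hands to the function. The nested model and the visited list are hypothetical, added only for illustration.

```python
import torch
import torch.nn as nn

visited = []

def init_weights(m):
    # Record every module that apply() passes in.
    visited.append(type(m).__name__)
    if isinstance(m, nn.Linear):
        nn.init.xavier_uniform_(m.weight)
        nn.init.constant_(m.bias, 0.01)

# Hypothetical nested model: a Sequential inside a Sequential.
net = nn.Sequential(
    nn.Sequential(nn.Linear(2, 2), nn.ReLU()),
    nn.Linear(2, 2),
)
net.apply(init_weights)

# Children are visited before their parent, and the root comes last.
print(visited)
# ['Linear', 'ReLU', 'Sequential', 'Linear', 'Sequential']
```

Because containers and activation modules are visited too, the isinstance check is what keeps the function from touching modules that have no weight or bias.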

Solution 2

We compare different modes of weight initialization using the same neural network (NN) architecture.

All Zeros or Ones

If you follow the principle of Occam's razor, you might think setting all the weights to 0 or 1 would be the best solution. This is not the case.

With every weight the same, all the neurons at each layer are producing the same output. This makes it hard to decide which weights to adjust.
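The symmetry problem can be checked directly. The sketch below is not the Net class from this answer (which is not shown); it assumes a tiny regression network with constant weights and compares the gradients that reach each hidden neuron.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical regression network; the sizes are illustrative.
net = nn.Sequential(nn.Linear(4, 3), nn.ReLU(), nn.Linear(3, 1))

# Give every weight the same constant value and zero the biases.
for m in net.modules():
    if isinstance(m, nn.Linear):
        nn.init.constant_(m.weight, 1.0)
        nn.init.zeros_(m.bias)

x = torch.rand(8, 4)        # random inputs in [0, 1)
target = torch.rand(8, 1)   # random regression targets
loss = nn.functional.mse_loss(net(x), target)
loss.backward()

# Each row of the first-layer gradient belongs to one hidden neuron.
grad = net[0].weight.grad
rows_identical = bool(torch.allclose(grad[0], grad[1]) and torch.allclose(grad[1], grad[2]))
print(grad)
print(rows_identical)
```

Since every hidden neuron receives the same gradient, a gradient step leaves them identical to each other, so the layer keeps behaving like a single neuron.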

# initialize two NN's with 0 and 1 constant weights

model_0 = Net(constant_weight=0)

model_1 = Net(constant_weight=1)

- After 2 epochs:

Validation Accuracy

9.625% -- All Zeros

10.050% -- All Ones

Training Loss

2.304 -- All Zeros

1552.281 -- All Ones

Uniform Initialization

A uniform distribution assigns equal probability to every number within a given range.

Let's see how well the neural network trains using a uniform weight initialization, where low=0.0 and high=1.0.

Below, we'll see another way (besides in the Net class code) to initialize the weights of a network. To define weights outside of the model definition, we can:

- Define a function that assigns weights by the type of network layer, then

- Apply those weights to an initialized model using model.apply(fn), which applies a function to each model layer.

# takes in a module and applies the specified weight initialization
def weights_init_uniform(m):
    classname = m.__class__.__name__
    # for every Linear layer in a model..
    if classname.find('Linear') != -1:
        # apply a uniform distribution to the weights and a bias=0
        m.weight.data.uniform_(0.0, 1.0)
        m.bias.data.fill_(0)

model_uniform = Net()
model_uniform.apply(weights_init_uniform)

- After 2 epochs:

Validation Accuracy

36.667% -- Uniform Weights

Training Loss

3.208 -- Uniform Weights

General rule for setting weights

The general rule for setting the weights in a neural network is to set them to be close to zero without being too small.

Good practice is to start your weights in the range of [-y, y], where y = 1/sqrt(n) (n is the number of inputs to a given neuron).

import numpy as np

# takes in a module and applies the specified weight initialization
def weights_init_uniform_rule(m):
    classname = m.__class__.__name__
    # for every Linear layer in a model..
    if classname.find('Linear') != -1:
        # get the number of the inputs
        n = m.in_features
        y = 1.0/np.sqrt(n)
        m.weight.data.uniform_(-y, y)
        m.bias.data.fill_(0)

# create a new model with these weights
model_rule = Net()
model_rule.apply(weights_init_uniform_rule)
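One way to see why the scale matters is to compare the size of a layer's outputs under the two uniform ranges. This is only a sketch: the layer sizes and the random inputs in [0, 1) are assumptions standing in for the real model and data.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

n = 784                      # hypothetical number of inputs per neuron
layer = nn.Linear(n, 256)
x = torch.rand(1024, n)      # inputs in [0, 1), e.g. scaled pixel values

with torch.no_grad():
    nn.init.zeros_(layer.bias)

    # Uniform weights in [0, 1): every term of the weighted sum is positive.
    nn.init.uniform_(layer.weight, 0.0, 1.0)
    out_wide = layer(x)

    # Uniform weights in [-y, y] with y = 1/sqrt(n).
    y = 1.0 / math.sqrt(n)
    nn.init.uniform_(layer.weight, -y, y)
    out_rule = layer(x)

print(out_wide.abs().mean().item())   # on the order of n / 4
print(out_rule.abs().mean().item())   # well below 1
```

With weights in [0, 1) the outputs grow with the number of inputs, while the 1/sqrt(n) rule keeps them at a scale that does not depend on n.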

Below we compare the performance of an NN whose weights are initialized from the uniform distribution [-0.5, 0.5) with one whose weights are initialized using the general rule.

- After 2 epochs:

Validation Accuracy

75.817% -- Centered Weights [-0.5, 0.5)

85.208% -- General Rule [-y, y)

Training Loss

0.705 -- Centered Weights [-0.5, 0.5)

0.469 -- General Rule [-y, y)

Normal distribution to initialize the weights

The normal distribution should have a mean of 0 and a standard deviation of y = 1/sqrt(n), where n is the number of inputs to a given neuron.

import numpy as np

# takes in a module and applies the specified weight initialization
def weights_init_normal(m):
    '''Takes in a module and initializes all linear layers with weight
       values taken from a normal distribution.'''
    classname = m.__class__.__name__
    # for every Linear layer in a model
    if classname.find('Linear') != -1:
        # number of inputs to each neuron in this layer
        n = m.in_features
        # m.weight.data should be taken from a normal distribution
        m.weight.data.normal_(0.0, 1/np.sqrt(n))
        # m.bias.data should be 0
        m.bias.data.fill_(0)
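The same initialization can also be written with the torch.nn.init helpers. In this sketch the Sequential model is a hypothetical stand-in for the Net class, which is not shown in this answer; the final line checks the empirical standard deviation against 1/sqrt(n).

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

def weights_init_normal(m):
    # isinstance is stricter than matching on the class name.
    if isinstance(m, nn.Linear):
        nn.init.normal_(m.weight, mean=0.0, std=1.0 / math.sqrt(m.in_features))
        nn.init.zeros_(m.bias)

# Hypothetical stand-in for the Net class.
model = nn.Sequential(nn.Linear(784, 256), nn.ReLU(), nn.Linear(256, 10))
model.apply(weights_init_normal)

first = model[0]
# Empirical std of the weights next to the target 1/sqrt(n).
print(first.weight.std().item(), 1.0 / math.sqrt(first.in_features))
```

Matching with isinstance avoids accidentally catching custom classes whose names merely contain "Linear".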

Below we show the performance of two NNs, one initialized from a uniform distribution and the other from a normal distribution.

- After 2 epochs:

Validation Accuracy

85.775% -- Uniform Rule [-y, y)

84.717% -- Normal Distribution

Training Loss

0.329 -- Uniform Rule [-y, y)

0.443 -- Normal Distribution

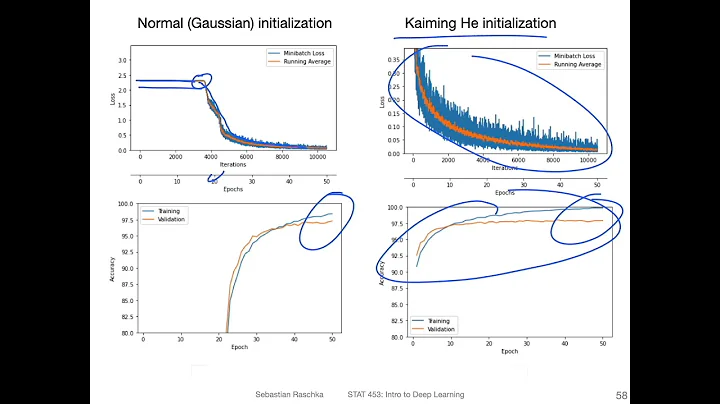

Solution 3

To initialize layers you typically don't need to do anything. PyTorch will do it for you. If you think about it, this makes a lot of sense: why should we initialize layers ourselves when PyTorch can do that following the latest trends?

Check for instance the Linear layer.

In the __init__ method it calls reset_parameters, which uses the Kaiming He init function.

def reset_parameters(self):
    init.kaiming_uniform_(self.weight, a=math.sqrt(5))
    if self.bias is not None:
        fan_in, _ = init._calculate_fan_in_and_fan_out(self.weight)
        bound = 1 / math.sqrt(fan_in)
        init.uniform_(self.bias, -bound, bound)

The same holds for other layer types, conv2d for instance.

Note: the gain from proper initialization is faster training. If your problem deserves a special initialization, you can apply it afterwards.
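The default can be inspected on a freshly constructed layer. The sketch below assumes a recent PyTorch release, where the weight call uses a=math.sqrt(5); with that value the Kaiming uniform limit reduces to 1/sqrt(fan_in), the same bound used for the bias. The layer sizes are illustrative.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

layer = nn.Linear(100, 50)   # hypothetical sizes
fan_in = layer.in_features
bound = 1.0 / math.sqrt(fan_in)

# With a = sqrt(5), kaiming_uniform_ uses gain = sqrt(2 / (1 + a**2)) = sqrt(1/3),
# so its limit sqrt(3) * gain / sqrt(fan_in) reduces to 1 / sqrt(fan_in).
print(layer.weight.abs().max().item(), bound)
print(layer.bias.abs().max().item(), bound)
```

Both maxima should stay under the bound, so the default is effectively a uniform distribution on [-1/sqrt(fan_in), 1/sqrt(fan_in)] for weights and biases alike.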

Solution 4

import torch.nn as nn

# illustrative sizes; the original answer leaves these undefined
in_features, h_size = 10, 32

# a simple network
rand_net = nn.Sequential(nn.Linear(in_features, h_size),
                         nn.BatchNorm1d(h_size),
                         nn.ReLU(),
                         nn.Linear(h_size, h_size),
                         nn.BatchNorm1d(h_size),
                         nn.ReLU(),
                         nn.Linear(h_size, 1),
                         nn.ReLU())

# initialization function, first checks the module type,
# then applies the desired changes to the weights
# (despite its name, it draws from a uniform distribution on [0, 1))
def init_normal(m):
    if isinstance(m, nn.Linear):
        nn.init.uniform_(m.weight)

# use the module's apply function to recursively apply the initialization
rand_net.apply(init_normal)

Solution 5

If you want some extra flexibility, you can also set the weights manually.

Say you have input of all ones:

import torch

import torch.nn as nn

input = torch.ones((8, 8))

print(input)

tensor([[1., 1., 1., 1., 1., 1., 1., 1.],
        [1., 1., 1., 1., 1., 1., 1., 1.],
        [1., 1., 1., 1., 1., 1., 1., 1.],
        [1., 1., 1., 1., 1., 1., 1., 1.],
        [1., 1., 1., 1., 1., 1., 1., 1.],
        [1., 1., 1., 1., 1., 1., 1., 1.],
        [1., 1., 1., 1., 1., 1., 1., 1.],
        [1., 1., 1., 1., 1., 1., 1., 1.]])

And you want to make a dense layer with no bias (so we can visualize):

d = nn.Linear(8, 8, bias=False)

Set all the weights to 0.5 (or anything else):

d.weight.data = torch.full((8, 8), 0.5)

print(d.weight.data)

The weights:

Out[14]:

tensor([[0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000],
        [0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000],
        [0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000],
        [0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000],
        [0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000],
        [0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000],
        [0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000],
        [0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000, 0.5000]])

All your weights are now 0.5. Pass the data through:

d(input)

Out[13]:

tensor([[4., 4., 4., 4., 4., 4., 4., 4.],
        [4., 4., 4., 4., 4., 4., 4., 4.],
        [4., 4., 4., 4., 4., 4., 4., 4.],
        [4., 4., 4., 4., 4., 4., 4., 4.],
        [4., 4., 4., 4., 4., 4., 4., 4.],
        [4., 4., 4., 4., 4., 4., 4., 4.],
        [4., 4., 4., 4., 4., 4., 4., 4.],
        [4., 4., 4., 4., 4., 4., 4., 4.]], grad_fn=<MmBackward>)

Remember that each neuron receives 8 inputs, all of which have weight 0.5 and value of 1 (and no bias), so it sums up to 4 for each.
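A possible alternative to assigning a new tensor to .data is to fill the existing parameter in place under torch.no_grad(). This is a sketch of that variant, not the method used above; the name x replaces input only to avoid shadowing the Python builtin.

```python
import torch
import torch.nn as nn

d = nn.Linear(8, 8, bias=False)

# Fill the existing parameter in place so it stays registered with the module.
with torch.no_grad():
    d.weight.fill_(0.5)

x = torch.ones(8, 8)
out = d(x)

# Each output is 8 inputs * weight 0.5 * value 1.
print(out[0])
```

The parameter keeps requires_grad=True, so the layer can still be trained after the manual assignment.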


Fábio Perez

Updated on March 29, 2022

Comments

- Fábio Perez, about 2 years: How do I initialize weights and biases of a network (via e.g. He or Xavier initialization)?

- Mateen Ulhaq, about 3 years: PyTorch often initializes the weights automatically.

- Farhang Amaji: If I had asked this question, which is a good one, I would have received 100 downvotes for not providing enough research effort and code.

- Yang Bo, almost 6 years: I found a reset_parameters method in the source code of many modules. Should I override the method for weight initialization?

- Charlie Parker, almost 6 years: What if I want to use a Normal distribution with some mean and std?

- xjcl, about 5 years: What is the default initialization if I don't specify one?

- dedObed, over 4 years: What is the task you optimize for? And how can an all zeros solution give zero loss?

- Phani Rithvij, over 4 years: You can comment over there at Fábio Perez's answer to keep the answers clean.

- littleO, almost 4 years: The default initialization doesn't always give the best results, though. I recently implemented the VGG16 architecture in Pytorch and trained it on the CIFAR-10 dataset, and I found that just by switching to xavier_uniform initialization for the weights (with biases initialized to 0), rather than using the default initialization, my validation accuracy after 30 epochs of RMSprop increased from 82% to 86%. I also got 86% validation accuracy when using Pytorch's built-in VGG16 model (not pre-trained), so I think I implemented it correctly. (I used a learning rate of 0.00001.)

- prosti, almost 4 years: This is because they haven't used Batch Norms in VGG16. It is true that proper initialization matters and that for some architectures you have to pay attention. For instance, if you use a (nn.conv2d(), ReLU()) sequence, you would initialize your conv layer with the Kaiming He initialization designed for ReLU. PyTorch cannot predict your activation function after the conv2d. This makes sense if you evaluate the eigenvalues, but typically you don't have to do much if you use Batch Norms; they will normalize outputs for you. If you plan to win a SotaBench competition, it matters.

- yasin.yazici, about 3 years: @ashunigion I think you misrepresent what Occam says: "entities should not be multiplied without necessity". He does not say you should pick up the simplest approach. If that was the case, then you should not have used a neural network in the first place.

- Raghav Arora, about 2 years: nn.init.xavier_uniform is now deprecated in favor of nn.init.xavier_uniform_

- Peiffap, about 2 years: @xjcl The default initialization for a Linear layer is init.kaiming_uniform_(self.weight, a=math.sqrt(5)).

- Nephanth, about 2 years: If you follow Occam's razor, you may also want to consider Gaussian initialization, since that is the distribution with the highest entropy (for given variance), i.e. the "least informative".
