How to make a local tar file of a remote directory

Solution 1

You got it almost right; just run tar on the remote host instead of locally. The command should look something like this:

ssh remote_host tar cvfz - -T /directory/allfiles.txt > remote_files.tar.gz
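Here is a minimal sketch of Solution 1 wrapped in a shell function. It assumes a POSIX shell, key-based ssh login, and a tar that understands `-T` (GNU tar and bsdtar both do). The function name `fetch_remote_tar` and the `TRANSPORT` variable are hypothetical; `TRANSPORT` exists only so the pipe can be tried without a real remote host. The sketch leaves out `-v` and lists the finished archive locally instead, as suggested in the comments, so the file listing does not travel over the network.

```shell
#!/bin/sh
# Hypothetical helper: fetch_remote_tar HOST LIST OUT
# Runs tar on HOST over the files named in LIST (a path on HOST) and saves
# the gzip'd archive locally as OUT, then lists it locally.
fetch_remote_tar() {
    remote=$1
    list=$2
    out=$3
    # "f -" makes the remote tar write the archive to stdout, which ssh
    # carries back. The unquoted "> $out" is parsed by the LOCAL shell, so
    # the archive is written on this machine, not on HOST.
    # TRANSPORT defaults to ssh; the override is an assumption of this sketch.
    ${TRANSPORT:-ssh} "$remote" tar czf - -T "$list" > "$out" || return 1
    # No -v above: list the archive here instead of over the network.
    tar tzf "$out"
}

# Usage:
#   fetch_remote_tar remote_host /directory/allfiles.txt remote_files.tar.gz
```

Note that the hyphen alone does not decide where the archive ends up. Quoting the whole remote command, including the `>`, would move the redirection to the remote host.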

Solution 2

If you have enough space on your local disk and your goal is to minimise the amount of data sent over the network, it may be enough to enable compression in scp or rsync:

scp -rC remotehost:/path/to/files/file1 remotehost:/path/to/files/file2 ... local/destination/path

Of course, you could also write a small script that loops over the files and transfers each one with a compressed scp, without using tar at all. All of this is more comfortable with rsync:

rsync -avz --files-from=FILE remotehost:/path/to/files local/destination/path
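The small scp loop mentioned above could look like the following sketch. It makes one compressed scp transfer per file and recreates the directory layout under a local destination. It assumes the file list has been copied to the local machine, holds one absolute path per line, and contains no names with embedded newlines. `scp_each` and the `SCP_CMD` override are hypothetical names. Every iteration opens a new SSH connection, so without automated (for example key-based) SSH authentication you would be asked for the password once per file. That is one reason rsync is more comfortable here.

```shell
#!/bin/sh
# Hypothetical helper: scp_each HOST LIST DEST
# Copies every file named in LIST (a *local* copy of the list, one absolute
# path per line) from HOST, one compressed scp transfer per file, and
# recreates the remote directory layout below DEST.
scp_each() {
    host=$1
    list=$2
    dest=$3
    while IFS= read -r path; do
        [ -n "$path" ] || continue    # skip blank lines
        mkdir -p "$dest$(dirname "$path")" || return 1
        # SCP_CMD defaults to "scp -C" (compression); the override is an
        # assumption of this sketch. </dev/null keeps the transfer from
        # reading the rest of the list on stdin.
        ${SCP_CMD:-scp -C} "$host:$path" "$dest$path" < /dev/null || return 1
    done < "$list"
}

# Usage (each call opens a new SSH connection):
#   scp_each remotehost ./allfiles.txt ./copy
```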

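Alternatively, you can log in to the remote host via ssh and run the following there (this requires that the remote host can open an SSH connection back to your local machine):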

tar cvzf - -T list_of_filenames | ssh Local_Hostname tar xzf -
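Here is a sketch of this push variant as a function to run on the remote host. It assumes that Local_Hostname accepts SSH logins from it and that the destination directory already exists. The receiving tar is given `-C` so the files are unpacked under a chosen directory. Without it, they land in the login account's home directory. `push_tar`, the `TRANSPORT` override and the example destination path are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper, run ON THE REMOTE HOST: push_tar LIST TARGET DEST
# Packs the files named in LIST and unpacks them on TARGET below DEST,
# which must already exist there.
push_tar() {
    list=$1
    target=$2
    dest=$3
    # The sending tar writes the archive to stdout ("f -"); the receiving
    # tar reads it from stdin ("f -") and extracts below DEST (-C).
    # TRANSPORT defaults to ssh; the override is an assumption of this sketch.
    tar -czf - -T "$list" | ${TRANSPORT:-ssh} "$target" tar -C "$dest" -xzf -
}

# Usage on the remote host:
#   push_tar list_of_filenames Local_Hostname /path/to/local/copy
```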

References:

From man scp:

-C  Compression enable. Passes the -C flag to ssh(1) to enable compression.

From man rsync:

--files-from=FILE  read list of source-file names from FILE
-z, --compress  compress file data during the transfer
--compress-level=NUM  explicitly set compression level


alle_meije

Updated on September 18, 2022

Comments


alle_meije over 1 year: This link describes how to copy tarred files to minimise the amount of data sent over the network. I am trying to do something slightly different.

I have a number of remote files on different subdirectory levels:

remote:/directory/subdir1/file1.ext
remote:/directory/subdir1/subsubdir11/file11.ext
remote:/directory/subdir2/subsubdir21/file21.ext

And I have a file that lists all of them:

remote:/directory/allfiles.txt

To copy them most efficiently, on the remote site I could just do

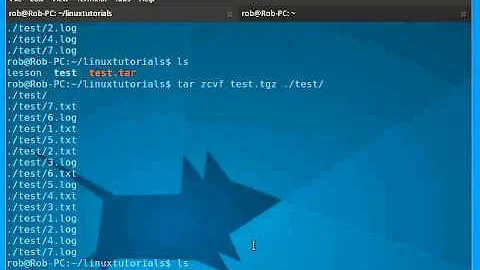

tar zcvf allfiles.tgz `cat /directory/allfiles.txt`

but there is not enough space to do that.

I do have enough storage space on my local disk. Is there a way to tar an incoming stream from a remote server (using scp or ssh for the transfer)? Something like

/localdir$ tar zc - | ssh remote `cat /directory/allfiles.txt`

I would guess, but that would only list the remote files on the local host.

alle_meije almost 9 years: Excellent, that's what I was hoping to get! I had thought about this but expected the redirection to be interpreted on the remote machine, not the local one. I guess the hyphen takes care of that?


alle_meije almost 9 years: Thanks for mentioning the --files-from option for rsync, that is handy! The compression option of scp is efficient for network traffic, but would the script you mention mean typing the password many times (or storing it in ~/.ssh)?

Hastur almost 9 years: rsync can be even better than scp (check the -S option for sparse files); moreover, it requires authentication only once, and you can skip files that are already present. For the scripted scp solution you will really want to use automated SSH authentication.

Alexis Wilke over 4 years: You may want to fix "date" with "data" in "[...] the amount of dat[e] sent [...]"; I think that's the main reason to transform that data into a compressed tarball in the first place...


Alexis Wilke over 4 years: I don't think that the -v is a good idea. You're going to use extra bandwidth for that feature. You could instead verify the results with a tar tvf remote_files.tar.gz once the transfer is done.

dirk over 4 years: If you really need the absolute minimum of bits transferred over the network, sure. But how much more bandwidth is '-v' really going to take for showing the file progress? Data sent over ssh is probably compressed too.
