How to parallelize the scp command?

Solution 1

Parallelizing scp is counterproductive unless both sides run on SSDs. The slowest part of an scp transfer is either the network, in which case parallelizing won't help at all, or the disks on either side, in which case parallelizing makes things worse: seek time is going to kill you.

You say machineA is on SSDs, so parallelizing per machine should be enough. The simplest way to do that is to wrap the first for loop in a subshell and run it in the background.

    ( for el in "${PARTITION1[@]}"
      do
          scp david@${FILERS_LOCATION[0]}:$dir1/t1_weekly_1680_"$el"_200003_5.data $PRIMARY/. || scp david@${FILERS_LOCATION[1]}:$dir2/t1_weekly_1680_"$el"_200003_5.data $PRIMARY/.
      done ) &
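As the comments point out, the second loop can be backgrounded the same way, and the script then needs a `wait` so it does not exit before the transfers finish. A minimal sketch of that, assuming the host names, user, and file-name pattern from the question; the helper names `fetch_partition` and `fetch_all` are hypothetical, and `dir1` and `dir2` are expected to be set as in the original script:

```shell
# Hypothetical helper: copy every listed partition file into one destination,
# trying machineB first and falling back to machineC, as in the question.
# Usage: fetch_partition DEST ID [ID...]
fetch_partition() {
    dest=$1
    shift
    for el in "$@"; do
        file="t1_weekly_1680_${el}_200003_5.data"
        scp "david@machineB:$dir1/$file" "$dest/." ||
            scp "david@machineC:$dir2/$file" "$dest/."
    done
}

# Hypothetical helper: run both partition loops concurrently, each in its
# own backgrounded subshell, then block until both have finished.
# Usage: fetch_all PRIMARY_DIR SECONDARY_DIR
fetch_all() {
    ( fetch_partition "$1" 0 3 5 7 9 ) &
    ( fetch_partition "$2" 1 2 4 6 8 ) &
    wait
}
```

Calling `fetch_all "$PRIMARY" "$SECONDARY"` in place of the two loops would start both in the background. A bare `wait` returns success even if a transfer failed, so the destination directories still need checking if that matters.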

Solution 2

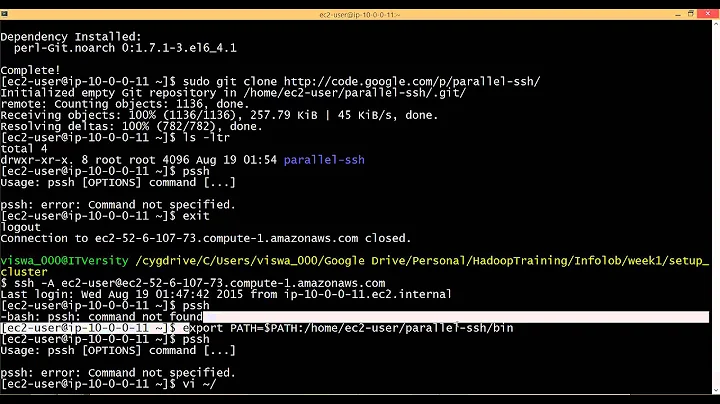

You could use GNU Parallel to help you run multiple tasks in parallel.
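For illustration, one of the loops from the question could be handed to GNU Parallel roughly as follows. This is a sketch only: the helper name `parallel_fetch` and the job count of 4 are assumptions, and `dir1` and `dir2` are expected to be set as in the original script.

```shell
# Hypothetical helper: fetch the listed partition files with up to 4 scp
# jobs at a time. GNU Parallel substitutes each ID given after ':::' for
# every {} in the command string, then runs the result through a shell,
# so the '||' fallback to machineC is evaluated per file.
# Usage: parallel_fetch DEST ID [ID...]
parallel_fetch() {
    dest=$1
    shift
    file='t1_weekly_1680_{}_200003_5.data'
    parallel -j 4 \
        "scp david@machineB:$dir1/$file $dest/. || scp david@machineC:$dir2/$file $dest/." \
        ::: "$@"
}
```

A call such as `parallel_fetch "$PRIMARY" "${PARTITION1[@]}"` would replace the first loop. Whether more jobs help depends on the bottleneck: one commenter reports a gain when network latency was the limit, while the disk-seek caveat from the other answer still applies.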

However, your script appears to establish a separate secure connection for each file transfer, which is likely quite inefficient, especially if the other machines are not on a local network.

The best approach would be to use a tool that specifically does batch file transfer — for example, rsync, which can work over plain ssh, too.
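A sketch of what that could look like here, assuming rsync is installed on all three machines. The helper names `partition_files` and `rsync_fetch` are hypothetical. `--files-from=-` reads the list of file names (relative to the remote directory) from standard input, so each host is contacted once per partition rather than once per file.

```shell
# Hypothetical helper: print one file name per partition ID.
partition_files() {
    for el in "$@"; do
        printf 't1_weekly_1680_%s_200003_5.data\n' "$el"
    done
}

# Hypothetical helper: pull all listed files in a single rsync run over ssh.
# If machineB could not supply everything (rsync exits non-zero), ask
# machineC for the same list; --ignore-existing skips files already copied.
# Usage: rsync_fetch DEST ID [ID...]
rsync_fetch() {
    dest=$1
    shift
    partition_files "$@" |
        rsync -a -e ssh --files-from=- "david@machineB:$dir1/" "$dest/" ||
    partition_files "$@" |
        rsync -a -e ssh --ignore-existing --files-from=- "david@machineC:$dir2/" "$dest/"
}
```

With `dir1` and `dir2` set as in the original script, `rsync_fetch "$PRIMARY" "${PARTITION1[@]}"` would stand in for the first scp loop.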

If rsync is not available, as an alternative, you could use zip, or even tar and gzip or bzip2, and then scp the resulting archives (then connect with ssh, and do the unpacking).
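A variation on the archive idea, sketched under the same assumptions as the question's script: instead of writing an archive to disk and copying it with scp, tar's output can be streamed through ssh and unpacked on the fly. The helper name `tar_fetch` is hypothetical, and the file names must not contain spaces because they are passed to the remote shell unquoted.

```shell
# Hypothetical helper: bundle the listed files on the remote host with tar
# and gzip, stream the archive over one ssh connection, and unpack it into
# the destination directory.
# Usage: tar_fetch USER@HOST REMOTE_DIR DEST FILE [FILE...]
tar_fetch() {
    remote=$1
    remote_dir=$2
    dest=$3
    shift 3
    # "$*" joins the file names with spaces to form the remote command line.
    ssh "$remote" "tar -C '$remote_dir' -czf - $*" |
        tar -C "$dest" -xzf -
}
```

For example, `tar_fetch david@machineB "$dir1" "$PRIMARY" t1_weekly_1680_0_200003_5.data t1_weekly_1680_3_200003_5.data`. Unlike the scp loop, this sketch has no per-file fallback to machineC.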


arsenal

Updated on September 18, 2022

Comments

arsenal, over 1 year ago:

I need to scp the files from machineB and machineC to machineA. I am running my below shell script from machineA. I have setup the ssh keys properly.

If the files are not there in machineB, then it should be there in machineC. I need to move all the PARTITION1 AND PARTITION2 FILES into machineA respective folder as shown below in my shell script -

    #!/bin/bash

    readonly PRIMARY=/export/home/david/dist/primary
    readonly SECONDARY=/export/home/david/dist/secondary
    readonly FILERS_LOCATION=(machineB machineC)
    readonly MAPPED_LOCATION=/bat/data/snapshot
    PARTITION1=(0 3 5 7 9)
    PARTITION2=(1 2 4 6 8)

    dir1=$(ssh -o "StrictHostKeyChecking no" david@${FILERS_LOCATION[0]} ls -dt1 "$MAPPED_LOCATION"/[0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9] | head -n1)
    dir2=$(ssh -o "StrictHostKeyChecking no" david@${FILERS_LOCATION[1]} ls -dt1 "$MAPPED_LOCATION"/[0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9] | head -n1)

    length1=$(ssh -o "StrictHostKeyChecking no" david@${FILERS_LOCATION[0]} "ls '$dir1' | wc -l")
    length2=$(ssh -o "StrictHostKeyChecking no" david@${FILERS_LOCATION[1]} "ls '$dir2' | wc -l")

    if [ "$dir1" = "$dir2" ] && [ "$length1" -gt 0 ] && [ "$length2" -gt 0 ]
    then
        rm -r $PRIMARY/*
        rm -r $SECONDARY/*
        for el in "${PARTITION1[@]}"
        do
            scp david@${FILERS_LOCATION[0]}:$dir1/t1_weekly_1680_"$el"_200003_5.data $PRIMARY/. || scp david@${FILERS_LOCATION[1]}:$dir2/t1_weekly_1680_"$el"_200003_5.data $PRIMARY/.
        done
        for sl in "${PARTITION2[@]}"
        do
            scp david@${FILERS_LOCATION[0]}:$dir1/t1_weekly_1680_"$sl"_200003_5.data $SECONDARY/. || scp david@${FILERS_LOCATION[1]}:$dir2/t1_weekly_1680_"$sl"_200003_5.data $SECONDARY/.
        done
    fi

Currently, I am having 5 files in PARTITION1 AND PARTITION2, but in general it will have around 420 files, so that means, it will move the files one by one which I think might be pretty slow. Is there any way to speed up the process?

I am running Ubuntu 12.04

- Matthew Ife, over 10 years ago: I don't think there is much benefit in making this concurrent; it's only two hosts. If you had two thousand, there might be a good case for the extra complexity. Otherwise you're falling into the trap of over-engineering this.

- arsenal, over 10 years ago: machineA is running on SSDs; not sure about machineB and machineC.

- arsenal, over 10 years ago: And if you are saying parallelizing won't help at all, is the way I am doing it currently the only efficient way? Or can we improve it slightly?

- Dennis Kaarsemaker, over 10 years ago: Rats, that means I'll have to give you an actual answer :)

- arsenal, over 10 years ago: And similarly I can do it for the second for loop as well? They both are moving into the same machine but into different folders.

- Dennis Kaarsemaker, over 10 years ago: Sure, but why? There's nothing left to run in the foreground after that :) By the way, you'll also want to add a `wait` at the end of the script.

- arsenal, over 10 years ago: I see what you meant. Then how do I run my other for loop in the background as well?

- Ole Tange, over 10 years ago: How to use GNU Parallel: gist.github.com/rcoup/5358786 and gnu.org/software/parallel/man.html#example__parallelizing_rsync

- Ole Tange, over 10 years ago: I have had a situation where it was neither the disk bandwidth nor the network bandwidth that limited the performance. It was network latency. In that situation I got a factor of 3 performance boost by using GNU Parallel (see other answer).