How to remove an old robots.txt from Google when the old file blocks the whole site

This is from Google's Webmaster developer site: https://developers.google.com/webmasters/control-crawl-index/docs/faq

How long will it take for changes in my robots.txt file to affect my search results?

First, the cache of the robots.txt file must be refreshed (we generally cache the contents for up to one day). Even after finding the change, crawling and indexing is a complicated process that can sometimes take quite some time for individual URLs, so it's impossible to give an exact timeline. Also, keep in mind that even if your robots.txt file is disallowing access to a URL, that URL may remain visible in search results despite the fact that we can't crawl it. If you wish to expedite removal of the pages you've blocked from Google, please submit a removal request via Google Webmaster Tools.

And here is Google's robots.txt specification: https://developers.google.com/webmasters/control-crawl-index/docs/robots_txt

If your file's syntax is correct, the best answer is simply to wait until Google refreshes its cached copy and picks up your new robots.txt file.


Learning


Updated on September 18, 2022


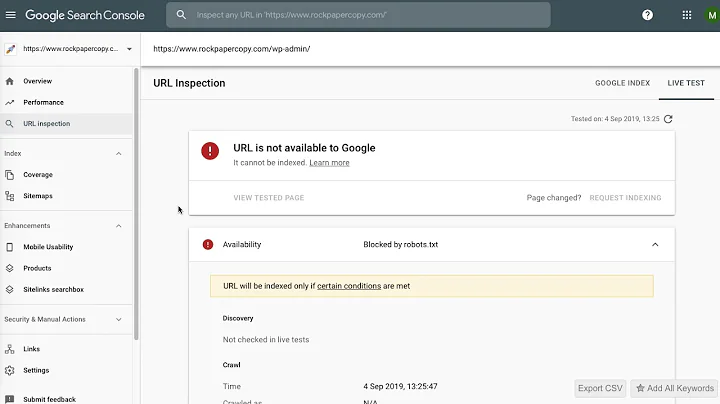

I have a website that still shows the old robots.txt in Google Webmaster Tools. The old file was:

    User-agent: *
    Disallow: /

This blocks Googlebot from the whole site. I removed the old file and uploaded a new robots.txt with almost full access yesterday, but Webmaster Tools is still showing the old version. The latest copy contains:

    User-agent: *
    Disallow: /flipbook/
    Disallow: /SliderImage/
    Disallow: /UserControls/
    Disallow: /Scripts/
    Disallow: /PDF/
    Disallow: /dropdown/

I submitted a request to remove this file using Google Webmaster Tools, but the request was denied.

I would appreciate it if someone could tell me how to clear it from Google's cache and make Google read the latest version of the robots.txt file.
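Since the answer's advice only holds if the new file is valid, one quick local check, assuming Python 3 is available, is the standard library's `urllib.robotparser`. The sketch below parses both versions of the file from the question and reports which URLs a crawler named `Googlebot` may fetch. It is only an approximation: `robotparser` implements the basic robots exclusion rules rather than Google's own parser (for example, it does not apply Google's wildcard extensions), the `example.com` URLs and the `check_urls` helper are hypothetical, and it cannot tell you when Google will refresh its cached copy. It does assume each directive sits on its own line, which is how robots.txt must be written.

```python
from urllib.robotparser import RobotFileParser

# Old file from the question: disallows every path for every crawler.
OLD_ROBOTS = """\
User-agent: *
Disallow: /
"""

# New file from the question: disallows only a few directories.
NEW_ROBOTS = """\
User-agent: *
Disallow: /flipbook/
Disallow: /SliderImage/
Disallow: /UserControls/
Disallow: /Scripts/
Disallow: /PDF/
Disallow: /dropdown/
"""


def check_urls(robots_txt, urls, agent="Googlebot"):
    """Hypothetical helper: map each URL to whether `agent` may fetch it."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}


if __name__ == "__main__":
    # Placeholder URLs; substitute real pages from your own site.
    urls = [
        "https://www.example.com/",
        "https://www.example.com/about.aspx",
        "https://www.example.com/Scripts/site.js",
    ]
    for label, text in (("old", OLD_ROBOTS), ("new", NEW_ROBOTS)):
        for url, ok in check_urls(text, urls).items():
            print(f"{label}: {'allowed' if ok else 'blocked'}  {url}")
```

Run as a script, it reports every URL as blocked under the old file and only `/Scripts/site.js` as blocked under the new one. Paths are matched case-sensitively, so `Disallow: /Scripts/` does not block `/scripts/`.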


Admin: Hi… I didn't know we could use the * wildcard in Disallow. Nice guide, by the way; I want to post an article about blocking content. Your post helped me a lot.



Learning: I do have a GWM account, and as I mentioned, Google Webmaster Tools is still reading the old file, not the new robots.txt file.