If 32-bit machines can only handle numbers up to 2^32, why can I write 1000000000000 (trillion) without my machine crashing?

Solution 1

I answer your question by asking you a different one:

How do you count on your fingers to 6?

You likely count up to the largest possible number with one hand, and then you move on to your second hand when you run out of fingers. Computers do the same thing, if they need to represent a value larger than a single register can hold they will use multiple 32bit blocks to work with the data.

Solution 2

You are correct that a 32-bit integer cannot hold a value greater than 2^32-1. However, the value of this 32-bit integer and how it appears on your screen are two completely different things. The printed string "1000000000000" is not represented by a 32-bit integer in memory.

To literally display the number "1000000000000" requires 13 bytes of memory. Each individual byte can hold a value of up to 255. None of them can hold the entire, numerical value, but interpreted individually as ASCII characters (for example, the character '0' is represented by decimal value 48, binary value 00110000), they can be strung together into a format that makes sense for you, a human.

A related concept in programming is typecasting, which is how a computer will interpret a particular stream of 0s and 1s. As in the above example, it can be interpreted as a numerical value, a character, or even something else entirely. While a 32-bit integer may not be able to hold a value of 1000000000000, a 32-bit floating-point number will be able to, using an entirely different interpretation.

As for how computers can work with and process large numbers internally, there exist 64-bit integers (which can accommodate values of up to 16-billion-billion), floating-point values, as well as specialized libraries that can work with arbitrarily large numbers.

Solution 3

First and foremost, 32-bit computers can store numbers up to 232-1 in a single machine word. Machine word is the amount of data the CPU can process in a natural way (ie. operations on data of that size are implemented in hardware and are generally fastest to perform). 32-bit CPUs use words consisting of 32 bits, thus they can store numbers from 0 to 232-1 in one word.

Second, 1 trillion and 1000000000000 are two different things.

- 1 trillion is an abstract concept of a number

- 1000000000000 is text

By pressing 1 once and then 0 12 times you're typing text. 1 inputs 1, 0 inputs 0. See? You're typing characters. Characters aren't numbers. Typewriters had no CPU or memory at all and they were handling such "numbers" pretty well, because it's just text.

Proof that 1000000000000 isn't a number, but text: it can mean 1 trillion (in decimal), 4096 (in binary) or 281474976710656 (in hexadecimal). It has even more meanings in different systems. Meaning of 1000000000000 is a number and storing that number is a different story (we'll get back to it in a moment).

To store the text (in programming it's called a string) 1000000000000 you need 14 bytes (one for each character plus a terminating NULL byte that basically means "the string ends here"). That's 4 machine words. 3 and a half would be enough, but as I said, operations on machine words are the fastest. Let's assume ASCII is used for text encoding, so in memory it will look like this: (converting ASCII codes corresponding to 0 and 1 to binary, each word in a separate line)

00110001 00110000 00110000 00110000

00110000 00110000 00110000 00110000

00110000 00110000 00110000 00110000

00110000 00000000 00000000 00000000

Four characters fit in one word, the rest is moved to the next one. The rest is moved to next word until everything (including first NULL byte) fits.

Now, back to storing numbers. It works just like with overflowing text, but they are fitted from right to left. It may sound complicated, so here's an example. For the sake of simplicity let's assume that:

- our imaginary computer uses decimal instead of binary

- one byte can hold numbers

0..9 - one word consists of two bytes

Here's an empty 2-word memory:

0 0

0 0

Let's store the number 4:

0 4

0 0

Now let's add 9:

1 3

0 0

Notice that both operands would fit in one byte, but not the result. But we have another one ready to use. Now let's store 99:

9 9

0 0

Again, we have used second byte to store the number. Let's add 1:

0 0

0 0

Whoops... That's called integer overflow and is a cause of many serious problems, sometimes very expensive ones.

But if we expect that overflow will happen, we can do this:

0 0

9 9

And now add 1:

0 1

0 0

It becomes clearer if you remove byte-separating spaces and newlines:

0099 | +1

0100

We have predicted that overflow may happen and we may need additional memory. Handling numbers this way isn't as fast as with numbers that fit in single words and it has to be implemented in software. Adding support for two-32-bit-word-numbers to a 32-bit CPU effectively makes it a 64-bit CPU (now it can operate on 64-bit numbers natively, right?).

Everything I have described above applies to binary memory with 8-bit bytes and 4-byte words too, it works pretty much the same way:

00000000 00000000 00000000 00000000 11111111 11111111 11111111 11111111 | +1

00000000 00000000 00000000 00000001 00000000 00000000 00000000 00000000

Converting such numbers to decimal system is tricky, though. (but it works pretty well with hexadecimal)

Solution 4

You are also able to write "THIS STATEMENT IS FALSE" without your computer crashing :) @Scott's answer is spot-on for certain calculation frameworks, but your question of "writing" a large number implies that it's just plain text, at least until it's interpreted.

Edit: now with less sarcasm more useful information on different ways a number can be stored in memory. I'll be describing these with higher abstraction i.e. in terms that a modern programmer may be writing code in before it's translated to machine code for execution.

Data on a computer has to be restricted to a certain type, and a computer definition of such type describes what operations can be performed on this data and how (i.e. compare numbers, concatenate text or XOR a boolean). You can't simply add text to a number, just like you can't multiply a number by text so some of these values can be converted between types.

Let's start with unsigned integers. In these value types, all bits are used to store information about digits; yours is an example of a 32-bit unsigned integer where any value from 0 to 2^32-1 can be stored. And yes, depending on the language or architecture of the platform used you could have 16-bit integers or 256-bit integers.

What if you want to get negative? Intuitively, signed integers is the name of the game. Convention is to allocate all values from -2^(n-1) to 2^(n-1)-1 - this way we avoid the confusion of having to deal with two ways to write +0 and -0. So a 32-bit signed integer would hold a value from -2147483648 to 2147483647. Neat, isn't it?

Ok, we've covered integers which are numbers without a decimal component. Expressing these is trickier: the non-integer part can sensibly only be somewhere between 0 and 1, so every extra bit used to describe it would increase its precision: 1/2, 1/4, 1/8... The problem is, you can't precisely express a simple decimal 0.1 as a sum of fractions that can only have powers of two in their denominator! Wouldn't it be much easier to store the number as an integer, but agree to put the radix (decimal) point instead? This is called fixed point numbers, where we store 1234100 but agree on a convention to read it as 1234.100 instead.

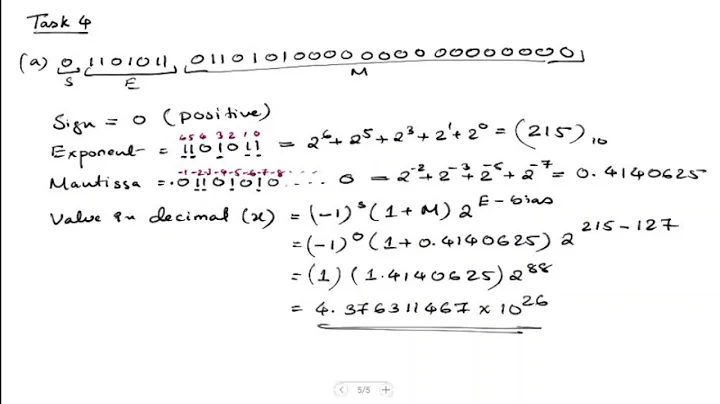

A relatively more common type used for calculations is floating point. The way it works is really neat, it uses one bit to store the sign value, then some to store exponent and significand. There are standards that define such allocations, but for a 32-bit float the maximum number you would be able to store is an overwhelming

(2 - 2^-23) * 2^(2^7 - 1) ≈ 3.4 * 10^38

This however comes at the cost of precision. JavaScript available in browsers uses 64-bit floats, and it still can't get things right. Just copy this into the address bar and press enter. Spoiler alert: the result is not going to be 0.3.

javascript:alert(0.1+0.2);

There are more alternative types like Microsoft .NET 4.5's BigInteger, which theoretically has no upper or lower bounds and has to be calculated in "batches"; but perhaps the more fascinating technologies are those that understand math, like Wolfram Mathematica engine, which can precisely work with abstract values like infinity.

Solution 5

The key is understanding how computers encode numbers.

True, if a computer insists on storing numbers using a simple binary representation of the number using a single word (4 bytes on a 32 bit system), then a 32 bit computer can only store numbers up to 2^32. But there are plenty of other ways to encode numbers depending on what it is you want to achieve with them.

One example is how computers store floating point numbers. Computers can use a whole bunch of different ways to encode them. The standard IEEE 754 defines rules for encoding numbers larger than 2^32. Crudely, computers can implement this by dividing the 32 bits into different parts representing some digits of the number and other bits representing the size of the number (i.e. the exponent, 10^x). This allows a much larger range of numbers in size terms, but compromises the precision (which is OK for many purposes). Of course the computer can also use more than one word for this encoding increasing the precision of the magnitude of the available encoded numbers. The simple decimal 32 version of the IEEE standard allows numbers with about 7 decimal digits of precision and numbers of up to about 10^96 in magnitude.

But there are many other options if you need the extra precision. Obviously you can use more words in your encoding without limit (though with a performance penalty to convert into and out of the encoded format). If you want to explore one way this can be done there is a great open-source add-in for Excel that uses an encoding scheme allowing hundreds of digits of precision in calculation. The add-in is called Xnumbers and is available here. The code is in Visual Basic which isn't the fastest possible but has the advantage that it is easy to understand and modify. It is a great way to learn how computers achieve encoding of longer numbers. And you can play around with the results within Excel without having to install any programming tools.

Related videos on Youtube

Ben Johnson mk2

Updated on September 18, 2022Comments

-

Ben Johnson mk2 over 1 year

32-bit computers can only store signed integers up to 231 - 1.

This is why we have run out of IPv4 addresses and have entered the 64-bit era.However, the number 231 - 1 (2,147,483,647) is not as large as the number 1 trillion (1,000,000,000,000) which I seem to be able to display fine without my machine crashing.

Can someone explain why this is?

-

JFA over 10 yearsThe question is flawed. 32-bit machines can handle numbers much larger than 2^32. They do it all the time, with 'long' and so on. They can only store up to 2^32 in one register, but the software is written to bypass this problem. Some modern languages don't even have a problem with the length of a given number.

JFA over 10 yearsThe question is flawed. 32-bit machines can handle numbers much larger than 2^32. They do it all the time, with 'long' and so on. They can only store up to 2^32 in one register, but the software is written to bypass this problem. Some modern languages don't even have a problem with the length of a given number. -

nhinkle over 10 yearsPlease keep comments on-topic, polite, and relevant to the technical aspects of the question. Nearly 50 joke comments already had to be removed, and we'd like to avoid having to lock the post. Thank you.

-

HelloGoodbye over 10 yearsThis question has been written in a way that is a bit sloppy. What do you mean by "write" and "display" the number 1000000000000? When you wrote the question you wrote the number 1000000000000, and your web browser displays it just fine, I assume, but this should be nothing strange to anyone that has ever used a computer before. The question asks for free interpretation.

-

Owen Johnson over 10 yearsSome languages (like python) can store really really big numbers as a array of the digits. With that, it doesn't matter how large your word or register is, you can have as many digits as you can store in memory. That's 10^2^32 for 32 bit machines or 10^2^64 for 64 bit machines!

-

Hagen von Eitzen over 10 yearsThe human consciousness is estimated to hold about 50 bits (I read somewhere). So the question is not "How can I write

Hagen von Eitzen over 10 yearsThe human consciousness is estimated to hold about 50 bits (I read somewhere). So the question is not "How can I write10^9without my PC crashing?" but rather "How can I write10^(18)without my brain crashing?" -

Koray Tugay about 9 years32 bit computers can only store UNSIGNED integers up to 2^32 - 1. 2^32 - 1 is not even equal to 2,147,483,647... 300 up-votes and nobody realised this?

Koray Tugay about 9 years32 bit computers can only store UNSIGNED integers up to 2^32 - 1. 2^32 - 1 is not even equal to 2,147,483,647... 300 up-votes and nobody realised this? -

Talespin_Kit almost 8 yearsalso its not trillion, its 4 billion

-

Memet Olsen about 6 yearsAs a note:

10^12is calledTrillionin US, English Canadian, Australian and modern British andBillionin Europe, older British and French Canadian.

-

-

Michael Petrotta over 10 yearsYou can do that in this reality. Try doing that in the Star Trek universe. Just stand back before, because of all the sparks and smoke.

-

MirroredFate over 10 yearsYour answer reads rather condescendingly. OP is clearly talking about the number, not the text:

large as the number 1 trillion (1000000000000). Also, you are almost talking about Arbitrary-precision arithmetic, but you never really mention any of the terms for what you are saying.... -

Phil over 10 yearsGood answer except for the details - 16bits of address space gave you 64kb, not 32mb, and machines like the 286 had 24-bit addresses (for 16mb). Also, with 64-bit addresses, you go well beyond terabytes - more like 16 exabytes - terabytes is around the kind of limits motherboards/CPUs of the present generation are imposing - not the size of the addresses.

-

gronostaj over 10 years32-bit refers to machine word size, not memory addresses. As Phil mentioned, 286 was 16-bit CPU but used 24 bits for addressing through memory segmentation. x86 CPUs are 32-bit, but use 36-bit addressing. See PAE.

-

Ruslan over 10 years@gronostaj well x86 are have 32-bit addressing from 386 to Pentium.

Ruslan over 10 years@gronostaj well x86 are have 32-bit addressing from 386 to Pentium. -

Elzo Valugi over 10 years"1 trillion" is also a string

-

gronostaj over 10 years@ElzoValugi It is. I had to find some way to present the concept of abstract number, as opposed to string representing a number. I believe "1 trillion" is a better and less ambiguous way to do it (see the proof in answer).

-

user1207217 over 10 yearsUpvote because this is the only CORRECT answer here - 32bit refers to 32bit memory addressing, not 32bit arithmetic.

user1207217 over 10 yearsUpvote because this is the only CORRECT answer here - 32bit refers to 32bit memory addressing, not 32bit arithmetic. -

Joe over 10 years@MirroredFate I disagree with 'is clearly talking about the number'. OP says 'displayed fine', which clearly is talking about the text '1000000000000' to me...

-

pabouk - Ukraine stay strong over 10 years@user1207217: ?? So according to your reasoning for example Z80 or 8080 are 16-bit processors (because of 16-bit memory addressing and memory bus)?

-

Jon Hanna over 10 years@pabouk, agreed; it clearly isn't about address size, and the Z80 was the first chip I thought of too (childhood memories), though we could add that they'd be calling some 16-bit x86 chips 20-bit, some 32-bit x86 chips 36-bit, and with some chips having different addressing in different cases, perhaps splitting the difference?

-

Tim B over 10 yearsActually that is mostly correct but not quite. A 32 point floating point number is unlikely to be able to accurately represent 1000000000000. It will represent a number very very close to the desired number but not exactly it.

-

V-X over 10 years@TimB: Have you heard about decimal32 format? It's part of IEEE 754-2008 standard. This format is capable of correct representation of this number:)

-

Tim B over 10 yearsTrue, that can. However that is not the format people mean when they say "float", which usually refers to a 32bit floating point number as stored and used by standard floating point processors in current computers.

-

jcora over 10 years"Characters aren't numbers." - they are

-

gronostaj over 10 years@yannbane 'A' is a character and not a number. '?' is a character and not a number. '1' is a character and not a number too. Characters are just symbols. They can represent digits or numbers, but definitely they aren't numbers. '1' can stand for one, ten, hundred, thousand and so on, it's just a symbol that stands for a digit that can be a number or its part. '10' (string of characters) can mean two or eight or ten or sixteen etc. but when you say you have ten apples, you're using a number ten and everybody knows what you mean. There's a huge difference between characters and numbers.

-

gronostaj over 10 years@yannbane Or if that doesn't convince you, then let's try strictly 'computerish' approach. Look at the ASCII table: if number 1 is the same thing as character '1', then apparently 1 equals 49 for your computer. This conclusion is ridiculous, but reasoning was correct, so assumptions must have been wrong: 1 and '1' aren't the same thing.

-

greggo over 10 years@TimB indeed. The closest number to that which can be represented as a float32 is 999999995904

-

Engineer over 10 yearsYeah, I was off on the details, sorry. I wasn't really trying to be too technical, just give the OP a general sense of what the bits meant, not go into memory addressing schemes, segment:offset addressing, and whatnot. I wish I'd have said "machine word size", tho. ;) I'm glad see I'm not the only one still scarred by the Z80 chip. Just don't bring up CP/M... Thanks for the upvotes.

Engineer over 10 yearsYeah, I was off on the details, sorry. I wasn't really trying to be too technical, just give the OP a general sense of what the bits meant, not go into memory addressing schemes, segment:offset addressing, and whatnot. I wish I'd have said "machine word size", tho. ;) I'm glad see I'm not the only one still scarred by the Z80 chip. Just don't bring up CP/M... Thanks for the upvotes. -

J0e3gan over 10 yearsFunny, @codename. How then do you count on your fingers to 32 or more (i.e. once 2^5 is exhausted)? ;) The analogy of moving to one's other hand is good...even if binary delays the need to move to one's other hand. What I would like to see is counting to 1,024 or more with the pedial dexterity to move to one's toes for further counting in binary - up to 1,048,575! :) That's potentially 20-bits of daughterboard power. :P

-

Andon M. Coleman over 10 yearsThat is not exactly how fixed-point works. It is actually a system where numbers are scaled and biased to produce the decimal point. In your example the scale is 1/1000, but there are also fixed-point numbers (especially in computer graphics) like this: 0 = 0.0, 255 = 1.0 - the scale is 1/255.

Andon M. Coleman over 10 yearsThat is not exactly how fixed-point works. It is actually a system where numbers are scaled and biased to produce the decimal point. In your example the scale is 1/1000, but there are also fixed-point numbers (especially in computer graphics) like this: 0 = 0.0, 255 = 1.0 - the scale is 1/255. -

Engineer over 10 years16bits allows 32mb of memory, if you use Signed Memory Addressing (patent pending) ;)

Engineer over 10 years16bits allows 32mb of memory, if you use Signed Memory Addressing (patent pending) ;) -

Keith Thompson over 10 years@TimB: But a 64-bit floating-point number can easily represent

1000000000000exactly. It's 10^12, or 2^12 * 5^12; 5^12 requires 28 bits of mantissa. -

doug65536 over 10 yearsNot true, the original Pentium processor had a 64-bit data bus for high memory bandwidth, even though it was a 32-bit processor. The 8088 was a 16-bit processor with an 8-bit data bus.

doug65536 over 10 yearsNot true, the original Pentium processor had a 64-bit data bus for high memory bandwidth, even though it was a 32-bit processor. The 8088 was a 16-bit processor with an 8-bit data bus. -

Kyle Strand over 10 yearsIn my mind I know 36, but I typically only use 16 of them.

-

Kyle Strand over 10 years"1 trillion" is not necessarily as unambiguous as you'd think... en.wikipedia.org/wiki/Long_and_short_scales#Comparison

-

Makach over 10 years@codename- easy, you assign one finger as a stack pointer. Once you run out of fingers you add the amount to the stack and restart counting.

-

ARNAB over 10 years'A computer uses bits to encode numbers, but that is not important.' In the context of the user asking about 32 bit words and how they are used to store numbers larger than 2^32-1 is is very important.

-

frodeborli over 10 yearsIt is not important how you encode numbers in the memory of your brain. You've got a finite number of representations; most have learnt 10 different symbols. Inside your brain, this is probably represented in the form of thousands of neurons and synapses. In a computer it is represented in the form of electricity or no electricity on a power line. From a programming perspective - or when learning maths, it is not important at all, except in the rare case where you're programming directly for a specific set of CPUs. He is asking about 32 bit vs 64 bit, not individual bits.

-

Zane over 10 yearsWhere did you learn that, @codename? I heard this first from Frederik Pohl, see e.g. here hjkeen.net/halqn/f_pohl3.htm

-

Cruncher over 10 years@Makach Your "stack pointer" can only have 2 states though(presumably you can't contort your finger into more than 2 states easily, and coordinate it with your other fingers). And since, now you only have 4 fingers you can only count to 15 on them. Once you change your "stack pointer" you start at 16, and can still only count up to 31.

-

Master Chief over 10 yearsI think this is not the answer to the relevant question. Answer by @Bigbio2002 is the correct one. Here "1000000000000" is not a number but a text, just like "adsfjhekgnoregrebgoregnkevnregj". What you are saying is true, but I strongly feel this is not the correct answer. And to see so many upvotes...

Master Chief over 10 yearsI think this is not the answer to the relevant question. Answer by @Bigbio2002 is the correct one. Here "1000000000000" is not a number but a text, just like "adsfjhekgnoregrebgoregnkevnregj". What you are saying is true, but I strongly feel this is not the correct answer. And to see so many upvotes... -

prosfilaes over 10 yearsThat's not really true; what 64 bit refers to is inconsistent, but systems with register widths of 64 bit are frequently called 64 bit. Wikipedia says "a 64-bit computer architecture generally has integer and addressing registers that are 64 bits wide". Yes, the modern x86 (or AMD-64) product line has huge special purpose registers, but they have 64 bit main registers and can access 48-52 bits of memory; the older x86 systems have 32 bit main registers and access 24-36 bits of memory, and the 8086 was called a 16-bit chip, had 16-bit wide registers and accessed 20 bits of memory.

-

mafu over 10 years@prosfilaes That's a lot of valuable information, I was referring to those (I failed to remember the details as well as you did tho). Feel free to edit this into the answer.

-

Secret over 10 years@MasterChief - Not necessarily. You could be looking at 1000000000000 in your OS's calculator app. Sure, the display is text, but it has to be stored as a number somehow so that you can add 1 to it.

-

Firee about 10 years@TimB So what kind of a number should a user attempt to type to crash his system

Firee about 10 years@TimB So what kind of a number should a user attempt to type to crash his system -

sawdust over 8 yearsThis "answer" is incorrect: calculators use BCD number representation in order to avoid truncation errors. I.E. 0.1 decimal cannot be accurately represented as a finite length binary number.

-

TOOGAM over 7 yearsI feel like pieces of my answer re-iterate some of the other answers to this question. However, this wasn't noticed when I first wrote the content since I wrote that for another question. Also, while I appreciate Animism's answer including some code in the C language, I felt my content provided some details about how Assembly works, which is closer to the CPU's actual actions/design. So my answer isn't trying to be the superior answer that is "better than" all the others, but just supplementary; adding another perspective with some additional insight

-

Clonkex almost 7 yearsI think the reference to IPv4 was to point out that the number of IPv4 addresses is effectively limited to the length of a signed 32-bit integer, whereas IPv6 uses 128 bits and can therefore have many orders of magnitude more addresses.

-

Kyle Strand almost 7 years@Clonkex Possibly, although that's definitely not the question is phrased.

-

Kyle Strand over 6 years@Firee The largest integer not representable as a 64-bit float is 179769313486231570814527423731704356798070567525844996598917476803157260780028538760589558632766878171540458953514382464234321326889464182768467546703537516986049910576551282076245490090389328944075868508455133942304583236903222948165808559332123348274797826204144723168738177180919299881250404026184124858369.

-

Kyle Strand over 6 yearsHere is a program that prints the previous float, then the maximum float plus 1 (the addition does not do anything), and then the previous float times 2 (which becomes "infinity"). Note that although floating-point math no longer works above this range, I was able to type the number into the comment field above without any ill effects.