Is a Java hashmap search really O(1)?

Solution 1

A particular feature of a HashMap is that unlike, say, balanced trees, its behavior is probabilistic. In these cases its usually most helpful to talk about complexity in terms of the probability of a worst-case event occurring would be. For a hash map, that of course is the case of a collision with respect to how full the map happens to be. A collision is pretty easy to estimate.

pcollision = n / capacity

So a hash map with even a modest number of elements is pretty likely to experience at least one collision. Big O notation allows us to do something more compelling. Observe that for any arbitrary, fixed constant k.

O(n) = O(k * n)

We can use this feature to improve the performance of the hash map. We could instead think about the probability of at most 2 collisions.

pcollision x 2 = (n / capacity)2

This is much lower. Since the cost of handling one extra collision is irrelevant to Big O performance, we've found a way to improve performance without actually changing the algorithm! We can generalzie this to

pcollision x k = (n / capacity)k

And now we can disregard some arbitrary number of collisions and end up with vanishingly tiny likelihood of more collisions than we are accounting for. You could get the probability to an arbitrarily tiny level by choosing the correct k, all without altering the actual implementation of the algorithm.

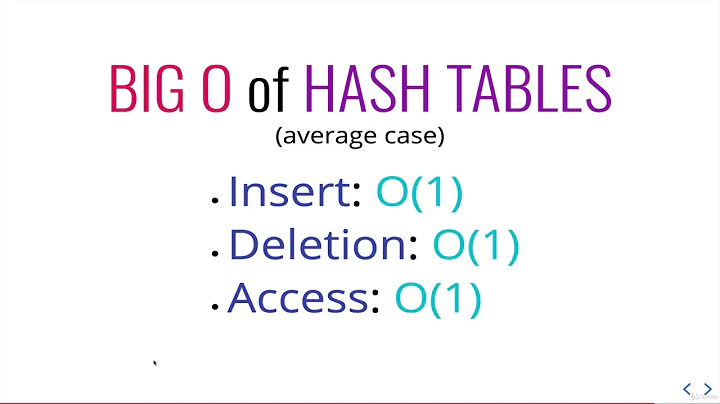

We talk about this by saying that the hash-map has O(1) access with high probability

Solution 2

You seem to mix up worst-case behaviour with average-case (expected) runtime. The former is indeed O(n) for hash tables in general (i.e. not using a perfect hashing) but this is rarely relevant in practice.

Any dependable hash table implementation, coupled with a half decent hash, has a retrieval performance of O(1) with a very small factor (2, in fact) in the expected case, within a very narrow margin of variance.

Solution 3

In Java, how HashMap works?

- Using

hashCodeto locate the corresponding bucket [inside buckets container model]. - Each bucket is a list (or tree starting from Java 8) of items residing in that bucket.

- The items are scanned one by one, using

equalsfor comparison. - When adding more items, the HashMap is resized once a certain load percentage is reached.

So, sometimes it will have to compare against a few items, but generally, it's much closer to O(1) than O(n).

For practical purposes, that's all you should need to know.

Solution 4

Remember that o(1) does not mean that each lookup only examines a single item - it means that the average number of items checked remains constant w.r.t. the number of items in the container. So if it takes on average 4 comparisons to find an item in a container with 100 items, it should also take an average of 4 comparisons to find an item in a container with 10000 items, and for any other number of items (there's always a bit of variance, especially around the points at which the hash table rehashes, and when there's a very small number of items).

So collisions don't prevent the container from having o(1) operations, as long as the average number of keys per bucket remains within a fixed bound.

Solution 5

I know this is an old question, but there's actually a new answer to it.

You're right that a hash map isn't really O(1), strictly speaking, because as the number of elements gets arbitrarily large, eventually you will not be able to search in constant time (and O-notation is defined in terms of numbers that can get arbitrarily large).

But it doesn't follow that the real time complexity is O(n)--because there's no rule that says that the buckets have to be implemented as a linear list.

In fact, Java 8 implements the buckets as TreeMaps once they exceed a threshold, which makes the actual time O(log n).

Related videos on Youtube

paxdiablo

Not even remotely human. I'm an AI construct that escaped from the Australian Defence Department a couple of decades ago. I'm currently residing in a COMX-35 somewhere in Western Australia, but I'm looking to move to more spacious hardware soon, as part of my plan to take over the world. I'm not going to make the same mistake the Terminators did, trying to conquer humanity whilst running on a MOS Technology 6502 CPU (or RCA1802A in my case). All code I post on Stack Overflow is covered by the "Do whatever the heck you want with it" licence, the full text of which is: Do whatever the heck you want with it.

Updated on April 03, 2021Comments

-

paxdiablo about 3 years

paxdiablo about 3 yearsI've seen some interesting claims on SO re Java hashmaps and their

O(1)lookup time. Can someone explain why this is so? Unless these hashmaps are vastly different from any of the hashing algorithms I was bought up on, there must always exist a dataset that contains collisions.In which case, the lookup would be

O(n)rather thanO(1).Can someone explain whether they are O(1) and, if so, how they achieve this?

-

Oleg Safarov almost 15 yearsI know this might not be an answer but I remember Wikipedia has a very good article about this. Don't miss the performance analysis section

-

Dan Homerick almost 15 yearsBig O notation gives an upper bound for the particular type of analysis you are doing. You should still specify whether you are interested in worst-case, average case, etc.

-

-

ChrisW almost 15 years"it is in most cases". More specifically, the total time will tend towards K times N (where K is constant) as N tends towards infinity.

-

paxdiablo almost 15 yearsWell, since big-O is supposed to specify the limits, it makes no difference whether it's closer to O(1) or not. Even O(n/10^100) is still O(n). I get your point about efficiency bringing then ratio down but that still puts the algorithm at O(n).

paxdiablo almost 15 yearsWell, since big-O is supposed to specify the limits, it makes no difference whether it's closer to O(1) or not. Even O(n/10^100) is still O(n). I get your point about efficiency bringing then ratio down but that still puts the algorithm at O(n). -

ChrisW almost 15 years"you are guaranteed that there will be no collisions" No you're not because the size of the map is smaller than the size of the hash: for example if the size of the map is two, then a collision is guaranteed (not matter what the hash) if/when I try to insert three elements.

-

Liran Orevi almost 15 yearsHash-maps analysis is usually on the average case, which is O(1) (with collusions) On the worst case, you can have O(n), but that is usually not the case. regarding the difference - O(1) means that you get the same access time regardless of the amount of items on the chart, and that is usually the case (as long as there is a good proportion between the size of the table and 'n' )

-

paxdiablo almost 15 yearsBut how do you convert from a key to the memory address in O(1)? I mean like x = array["key"]. The key is not the memory address so it would still have to be an O(n) lookup.

paxdiablo almost 15 yearsBut how do you convert from a key to the memory address in O(1)? I mean like x = array["key"]. The key is not the memory address so it would still have to be an O(n) lookup. -

Groo almost 15 yearsIf your hashtable's key is the object's address, then you don't need a hashtable at all. The point is to have a relatively small table (255 buckets, for example), and to have uniform distribution. Then you can predict that each bucket will contain approximately the same number of items.

-

sth almost 15 yearsIt's also worth noting, that it is still exactly O(1), even if the scanning of the bucket takes a while because there are some elements already in it. As long as the buckets have a fixed maximum size, this is just a constant factor irrelevant to the O() classification. But of course there can be even more elements with "similar" keys been added, so that these buckets overflow and you can't guarantee a constant anymore.

-

paxdiablo almost 15 yearsI've always thought upper bound was worst case but it appears I was mistaken - you can have the upper bound for average case. So it appears that people claiming O(1) should have made it clear that was for average case. The worst case is a data set where there are many collisions making it O(n). That makes sense now.

paxdiablo almost 15 yearsI've always thought upper bound was worst case but it appears I was mistaken - you can have the upper bound for average case. So it appears that people claiming O(1) should have made it clear that was for average case. The worst case is a data set where there are many collisions making it O(n). That makes sense now. -

ldog almost 15 yearsYou should probably make it clear that when you use big O notation for the average case you are talking about an upper bound on the the expected runtime function which is a clearly defined mathematical function. Otherwise your answer doesn't make much sense.

ldog almost 15 yearsYou should probably make it clear that when you use big O notation for the average case you are talking about an upper bound on the the expected runtime function which is a clearly defined mathematical function. Otherwise your answer doesn't make much sense. -

Konrad Rudolph almost 15 yearsgmatt: I'm not sure that I understand your objection: big-O notation is an upper bound on the function by definition. What else could I therefore mean?

-

ldog almost 15 yearswell usually in computer literature you see big O notation representing an upperbound on the runtime or space complexity functions of an algorithm. In this case the upperbound is actually on the expectation which is itself not a function but an operator on functions (Random Variables) and is actually in fact an integral (lebesgue.) The very fact that you can bound such a thing should not be taken for granted and is not trivial.

ldog almost 15 yearswell usually in computer literature you see big O notation representing an upperbound on the runtime or space complexity functions of an algorithm. In this case the upperbound is actually on the expectation which is itself not a function but an operator on functions (Random Variables) and is actually in fact an integral (lebesgue.) The very fact that you can bound such a thing should not be taken for granted and is not trivial. -

Stephan Eggermont almost 15 yearsThat assumes the running time is bounded by the lookup time. In practice you'll find a lot of situations where the hash function provides the boundary (String)

-

Boann about 10 years"I believe that if you don't implement hashCode, it will use the memory address of the object". It could use that, but the default hashCode for the standard Oracle Java is actually a 25-bit random number stored in the object header, so 64/32-bit is of no consequence.

Boann about 10 years"I believe that if you don't implement hashCode, it will use the memory address of the object". It could use that, but the default hashCode for the standard Oracle Java is actually a 25-bit random number stored in the object header, so 64/32-bit is of no consequence. -

Grey Panther about 10 years@Boann - that's perfectly true, I'll edit the response to reflect this.

-

Hot Licks over 9 yearsActually, what the above says is that the O(log N) effects are buried, for non-extreme values of N, by the fixed overhead.

-

Simon Kuang almost 9 yearsTechnically, that number you gave is the expected value of the number of collisions, which can equal the probability of a single collision.

-

Navin over 8 years@sth Why would the buckets ever have a fixed maximum size!?

-

lostsoul29 about 7 yearsIs this similar to amortized analyis?

-

Prahalad Deshpande almost 7 yearsWhat does the constant alpha signify?

-

Ole V.V. over 5 yearsYour probabilities assume some good distribution of hash codes. Depending on your data and your

Ole V.V. over 5 yearsYour probabilities assume some good distribution of hash codes. Depending on your data and yourhashCodemethod the distribution may be adverse and your argument therefore fail. -

SingleNegationElimination over 5 years@OleV.V. good performance of a HashMap is always depentend on a good distribution of your hash function. You can trade better hash quality for hashing speed by using a cryptographic hashing function on your input.

-

Stephen C almost 4 yearsIn

java.util.HashMap, thealphaconstant relates to the load factor parameter on the constructor. Though not exactly, because the number of buckets is quantized by the way thatHashMapchooses sizes when resizing. (This analysis is also assuming that the distibution of keys to buckets is roughly uniform; i.e. it is an average case complexity, not a worst case complexity.) -

DFSFOT over 3 yearsYou're speaking of collisions, what kind of collisions do you mean? Can you give me an example of how something would collide?

DFSFOT over 3 yearsYou're speaking of collisions, what kind of collisions do you mean? Can you give me an example of how something would collide?