Is it better to have one large parquet file or lots of smaller parquet files?

Solution 1

Aim for around 1GB per file (spark partition) (1).

Ideally, you would use snappy compression (default) due to snappy compressed parquet files being splittable (2).

Using snappy instead of gzip will significantly increase the file size, so if storage space is an issue, that needs to be considered.

.option("compression", "gzip") is the option to override the default snappy compression.

If you need to resize/repartition your Dataset/DataFrame/RDD, call the .coalesce(<num_partitions> or worst case .repartition(<num_partitions>) function. Warning: repartition especially but also coalesce can cause a reshuffle of the data, so use with some caution.

Also, parquet file size and for that matter all files generally should be greater in size than the HDFS block size (default 128MB).

1) https://forums.databricks.com/questions/101/what-is-an-optimal-size-for-file-partitions-using.html 2) http://boristyukin.com/is-snappy-compressed-parquet-file-splittable/

Solution 2

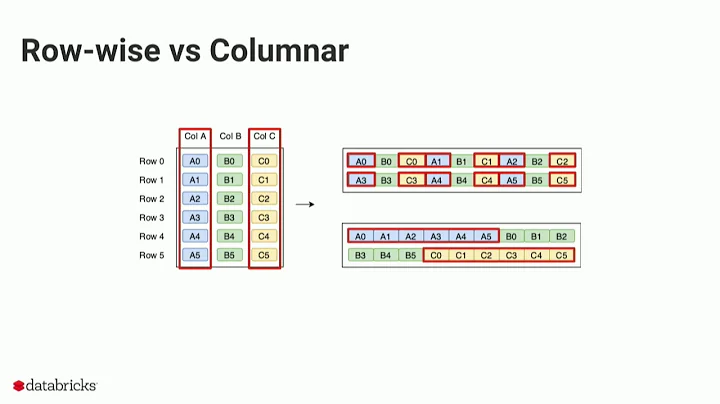

Notice that Parquet files are internally split into row groups

https://parquet.apache.org/documentation/latest/

So by making parquet files larger, row groups can still be the same if your baseline parquet files were not small/tiny. There is no huge direct penalty on processing, but opposite, there are more opportunities for readers to take advantage of perhaps larger/ more optimal row groups if your parquet files were smaller/tiny for example as row groups can't span multiple parquet files.

Also larger parquet files don't limit parallelism of readers, as each parquet file can be broken up logically into multiple splits (consisting of one or more row groups).

The only downside of larger parquet files is it takes more memory to create them. So you can watch out if you need to bump up Spark executors' memory.

row groups are a way for Parquet files to have vertical partitioning. Each row group has many row chunks (one for each column, a way to provide horizontal partitioning for the datasets in parquet).

Related videos on Youtube

ForeverConfused

Updated on December 31, 2020Comments

-

ForeverConfused over 3 years

I understand hdfs will split files into something like 64mb chunks. We have data coming in streaming and we can store them to large files or medium sized files. What is the optimum size for columnar file storage? If I can store files to where the smallest column is 64mb, would it save any computation time over having, say, 1gb files?

-

Explorer about 7 yearswe are using coalesce function with hive context with 50 executors for one of our file which is ~15GB and it runs like a charm.

-

jaltekruse about 3 years@garren-s This qualification that you need use snappy for your parquet files to be splittable is not necessary, regardless of the compression used parquet files are always splittable as long as they are large enough to contain multiple RowGroups ( parquet name for a partition within a file). The article you cite has a misleading title, but the text of the article and one of the follow up comments below it do clarify all compression types with parquet give you splittable files. It is however true that a CSV file will not be splittable unless you use a streaming compression like snappy.