Is the size of C "int" 2 bytes or 4 bytes?

Solution 1

I know it's equal to sizeof(int). The size of an int is really compiler dependent. Back in the day, when processors were 16-bit, an int was 2 bytes. Nowadays, it's most often 4 bytes on 32-bit as well as 64-bit systems.

Still, using sizeof(int) is the best way to get the size of an integer for the specific system the program is executed on.

EDIT: Fixed wrong statement that int is 8 bytes on most 64-bit systems. For example, it is 4 bytes on 64-bit GCC.

Solution 2

This is one of the points in C that can be confusing at first: the C standard only specifies a minimum range that each integer type is guaranteed to support. int is guaranteed to be able to hold -32767 to 32767, which requires 16 bits. With 8-bit bytes, such an int is 2 bytes. However, implementations are free to go beyond that minimum, and many modern compilers make int 32-bit (which almost always means 4 bytes).

The reason your book says 2 bytes is most probably because it's old. At one time, this was the norm. In general, you should always use the sizeof operator if you need to find out how many bytes it is on the platform you're using.

To address this, C99 added new types where you can explicitly ask for a certain sized integer, for example int16_t or int32_t. Prior to that, there was no universal way to get an integer of a specific width (although most platforms provided similar types on a per-platform basis).

Solution 3

There's no specific answer. It depends on the platform. It is implementation-defined. It can be 2, 4 or something else.

The idea behind int was that it was supposed to match the natural "word" size on the given platform: 16 bit on 16-bit platforms, 32 bit on 32-bit platforms, 64 bit on 64-bit platforms, you get the idea. However, for backward compatibility purposes some compilers prefer to stick to 32-bit int even on 64-bit platforms.

The time of 2-byte int is long gone though (16-bit platforms?) unless you are using some embedded platform with 16-bit word size. Your textbooks are probably very old.

Solution 4

The answer to this question depends on which platform you are using.

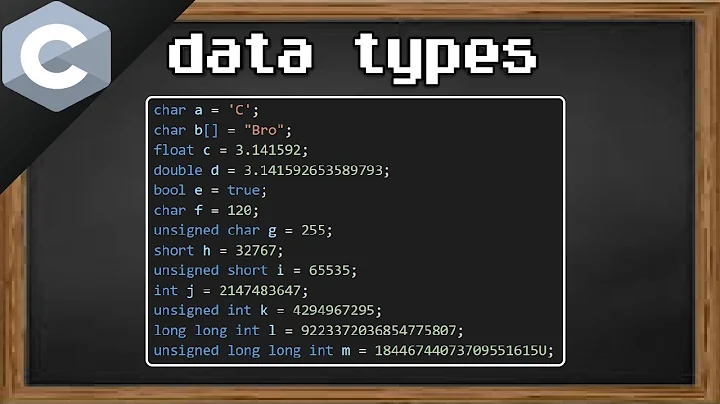

But irrespective of platform, you can rely on the following types having at least these widths and ranges:

[8-bit] signed char: -127 to 127

[8-bit] unsigned char: 0 to 255

[16-bit] signed short: -32767 to 32767

[16-bit] unsigned short: 0 to 65535

[32-bit] signed long: -2147483647 to 2147483647

[32-bit] unsigned long: 0 to 4294967295

[64-bit] signed long long: -9223372036854775807 to 9223372036854775807

[64-bit] unsigned long long: 0 to 18446744073709551615

Solution 5

C99 N1256 standard draft

http://www.open-std.org/JTC1/SC22/WG14/www/docs/n1256.pdf

The sizes of int and all other integer types are implementation-defined; C99 only specifies:

- minimum size guarantees

- relative sizes between the types

5.2.4.2.1 "Sizes of integer types <limits.h>" gives the minimum sizes:

1 [...] Their implementation-defined values shall be equal or greater in magnitude (absolute value) to those shown [...]

- UCHAR_MAX 255 // 2^8 − 1

- USHRT_MAX 65535 // 2^16 − 1

- UINT_MAX 65535 // 2^16 − 1

- ULONG_MAX 4294967295 // 2^32 − 1

- ULLONG_MAX 18446744073709551615 // 2^64 − 1

6.2.5 "Types" then says:

8 For any two integer types with the same signedness and different integer conversion rank (see 6.3.1.1), the range of values of the type with smaller integer conversion rank is a subrange of the values of the other type.

and 6.3.1.1 "Boolean, characters, and integers" determines the relative conversion ranks:

1 Every integer type has an integer conversion rank defined as follows:

- The rank of long long int shall be greater than the rank of long int, which shall be greater than the rank of int, which shall be greater than the rank of short int, which shall be greater than the rank of signed char.

- The rank of any unsigned integer type shall equal the rank of the corresponding signed integer type, if any.

- For all integer types T1, T2, and T3, if T1 has greater rank than T2 and T2 has greater rank than T3, then T1 has greater rank than T3.


Rajiv Prathap

Updated on July 08, 2022

Comments

-

Rajiv Prathap almost 2 years

Does an Integer variable in C occupy 2 bytes or 4 bytes? What are the factors that it depends on?

Most of the textbooks say integer variables occupy 2 bytes. But when I run a program printing the successive addresses of an array of integers it shows the difference of 4.

-

Evan Mulawski almost 12 years

-

Keith Thompson almost 12 years

int is just one of several integer types. You asked about the size of "integer"; you probably meant to ask about the size of int.

Keith Thompson almost 12 years

And you should find better textbooks. A textbook that says an int is 2 bytes (a) probably refers to an old system, and (b) fails to make it clear that the size will vary from one system to another. The best book on C is "The C Programming Language" by Kernighan and Ritchie, though it assumes some programming experience. See also question 18.10 of the comp.lang.c FAQ.

netcoder almost 12 years

Try #define int int64_t on a 64-bit platform, so neither. Just use sizeof. ;-)

phuclv about 8 years

Possible duplicate of What does the C++ standard state the size of int, long type to be?

-

-

user541686 almost 12 years

@RajivPrathap: Well, it's compiler-dependent, but the compiler decides whether or not it's also machine-dependent. :)

-

FatalError over 10 years

@nevanking: On a two's complement machine (which is every machine I know of...), yes. But C doesn't guarantee it to be the case.

-

Walt Sellers about 10 years

If you need the size for the preprocessor, you can check the predefined macros such as INT_MAX. If the value is not the one expected by your code, then the byte size of int is different on the current compiler/platform combination.

-

RGS almost 10 years

@nevanking I'm completely new to C, but isn't it 32767 because otherwise it would be using another bit|byte? Imagine I can hold 3 digits (0 or 1), so I can go from 000 to 111 (which is decimal 7). 7 is right before a power of 2. If I could go up to 8 (1000), then I could use those 4 digits all the way up to 15! Likewise, 32767 is right before a power of 2, exhausting all the bits|bytes it has available.

nevan king almost 10 years

@RSerrao I'm not a C expert either but AFAIK for positive numbers it's one less than the maximum negative number. So -8 to 7, -256 to 255 and so on. Negative numbers don't have to count the zero.

RGS almost 10 years

@nevanking You are right! O.o I forgot about the negative numbers and when I wrote my comment, somewhere in the middle, I forgot you pointed out the negative number :D You are certainly sure! Most of all, it even makes sense!

Cody Gray almost 10 years

Someone edited your post to "fix" the ranges, but I'm not sure if your edit adequately reflects your intent. It assumes a two's complement implementation, which will be true in most cases, but not all. Since your answer specifically points out the implementation-dependence, I'm thinking the edit is probably wrong. If you agree, please be sure to revert the edit.

-

Cem Kalyoncu over 9 years

Not just machine dependent, it also depends on the operating system running on the machine. For instance long in Win64 is 4 bytes whereas long in Linux64 is 8 bytes.

phuclv almost 9 years

Wrong. On most 64-bit systems int is still 4 bytes: en.wikipedia.org/wiki/64-bit_computing#64-bit_data_models

Akhil Nadh PC over 7 years

My system is 64-bit and the size of int is 4 bytes. Why is that so?

12431234123412341234123 over 7 years

"16 bits. In that case, int, is 2 bytes" can be wrong: if CHAR_BIT is 16, sizeof(int) can be 1 byte (or char).

-

sudo about 7 years

@AkhilNadhPC There are papers about this. I'll bet people think 32 bits are enough, and using 32 instead of 64 allows for optimizations such as SSE.

Paul R about 7 years

Using %d for a size_t parameter is UB.

John Bode about 7 years

@nevanking: only if you assume 2's complement representation for signed int. C doesn't make that assumption. 1's complement and sign-magnitude systems can't represent -32768 in 16 bits; rather, they have two representations for zero (positive and negative). That's why the minimum range for an int is [-32767..32767].

too honest for this site about 7 years

sizeof(int) can be any value from 1. A byte is not required to be 8 bits and some machines don't have an 8-bit addressable unit (which basically is the definition of a byte in the standard). The answer is not correct without further information.

autistic almost 6 years

I second @toohonestforthissite. Using sizeof(int) is not advised because alone it doesn't actually convey useful information; the problem is that sizeof determines how many bytes are in its operand, but doesn't tell you how many bits are in a byte. You need to multiply by CHAR_BIT for that. There may also be padding bits, which means your "32-bit integer" might only be able to store 16-bit values anyway. I think what you meant to write is "using INT_MIN and INT_MAX is the best way to get the information you desire, not sizeof"...

autistic almost 6 years

It's also worth noting that there are implementations where -0 is used in twos complement representation, since accessing the negative zero is technically UB you get an extra value, that value is -INT_MIN + 1 for int, for example... so you get the extra value on the positive end of the spectrum... well, the manual says "CONFORMANCE/TWOSARITH causes negative zero, (2^36)-1, to be considered a large unsigned integer by the generated code", though I really think they mean ones complement or sign and magnitude, else their implementation can't be compliant. Compiler devs nowadays? :/

yhyrcanus over 5 years

I've been working on embedded systems for over 10 years. I've never come across a nibble-addressable unit, or a byte/nibble system. Yes, you can have stuff shifted across nibbles etc., and only take up to 28 bits etc., but you're still secretly storing 32 bits, and therefore sizeof(int)==4 is more useful for dynamic memory allocation (i.e. malloc). It really depends on what question you're trying to answer.

-

Antti Haapala -- Слава Україні over 5 years

@k06a your edit was incorrect. You've specifically changed the original ranges into 2-complement ranges - these are not the ones specified in the C standard.

Antti Haapala -- Слава Україні over 5 years

@CodyGray this has been fluctuating back and forth, having been 1's complement compliant for last 3 years and OP not saying anything so I reverted an edit that changed it to 2's complement with "fix ranges", since it says "you can reliably assume", which is still not exactly true.

gabbar0x about 5 years

"The idea behind int was that it was supposed to match the natural "word" size on the given platform" - This is what I was looking for. Any idea what the reason may be? In a free world, int could occupy any number of consecutive bytes in memory, right? 8, 16, whatever.

Andrei Damian-Fekete over 4 years

The link provided is a bad source of information because it assumes things that are not always true (e.g. char is always signed).

Josh Desmond about 4 years

"because CHAR_BIT was 9!" - They had 362880 bit computing back then!? Impressive.

chux - Reinstate Monica over 2 years

@yhyrcanus I worked with TMS34010. It is bit addressable.
