Is there a difference between an empty robots.txt and no robots.txt at all?

Solution 1

Do crawlers behave differently in these two cases?

An empty robots.txt file is really no different from one that's not found: neither disallows any crawling.

You might, however, receive lots of 404 errors in your server logs when crawlers request the missing robots.txt file, as indicated in this question here.

So, is it safe to just delete an empty robots.txt?

Yes, with the above caveat.
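As a rough illustration of both points (same crawl behavior, different log entries), here is a minimal Python sketch. It assumes Python's standard-library `urllib.robotparser` as a stand-in crawler; real crawlers use their own parsers. It serves a throwaway site on localhost with an empty robots.txt, with no robots.txt at all, and with a restrictive one for contrast, and it records the HTTP status each robots.txt request received. The names `LoggingHandler`, `crawler_view`, `ExampleBot`, and `/private/page.html` are hypothetical.

```python
import functools
import http.server
import tempfile
import threading
import urllib.robotparser
from pathlib import Path


class LoggingHandler(http.server.SimpleHTTPRequestHandler):
    """Static file handler that records (path, status) instead of printing to stderr."""

    def log_request(self, code="-", size="-"):
        # Record each response status, roughly what an access log would show.
        self.server.status_log.append((self.path, int(code)))

    def log_message(self, format, *args):
        pass  # silence the default stderr logging


def crawler_view(robots_body, page="/private/page.html"):
    """Serve a temporary site whose robots.txt contains `robots_body`
    (None means the file does not exist), then ask the stdlib parser
    whether a crawler may fetch `page`. Returns (allowed, status_log)."""
    with tempfile.TemporaryDirectory() as root:
        if robots_body is not None:
            Path(root, "robots.txt").write_text(robots_body)
        handler = functools.partial(LoggingHandler, directory=root)
        server = http.server.HTTPServer(("127.0.0.1", 0), handler)  # port 0 = any free port
        server.status_log = []
        threading.Thread(target=server.serve_forever, daemon=True).start()
        try:
            base = f"http://127.0.0.1:{server.server_address[1]}"
            parser = urllib.robotparser.RobotFileParser(base + "/robots.txt")
            parser.read()  # 200: parse the body; 404 (and most 4xx): treat as "allow all"
            allowed = parser.can_fetch("ExampleBot", base + page)
        finally:
            server.shutdown()
            server.server_close()
        return allowed, server.status_log


if __name__ == "__main__":
    cases = [
        ("empty", ""),
        ("missing", None),
        ("disallow", "User-agent: *\nDisallow: /private/\n"),
    ]
    for label, body in cases:
        print(label, *crawler_view(body))
```

In this sketch the empty and missing cases both come back as allowed; the only observable difference is the 200 versus 404 status on `/robots.txt`, which is the log-noise caveat above.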

Solution 2

No. There's no difference.

You'd get 404 errors in your server log, and if you use tools like Google Webmaster Tools (now Google Search Console), they might tell you that you don't have one. But in terms of crawler behavior, the two cases are the same for any robot you care about.

Foo Bar

Updated on September 18, 2022

Comments

Foo Bar (over 1 year): On a web server I now have to administer, I noticed that the robots.txt is empty. I wondered if there's a difference between an empty robots.txt and no file at all.

Do crawlers behave differently in these two cases? So, is it safe to just delete an empty robots.txt?

PJ Brunet (about 2 years): Is it safe to delete? See my answer about auto-generated robots.txt files.

PJ Brunet (about 2 years): I could be wrong, but I don't remember seeing the 404 problem with Nginx and `error.log`.

-

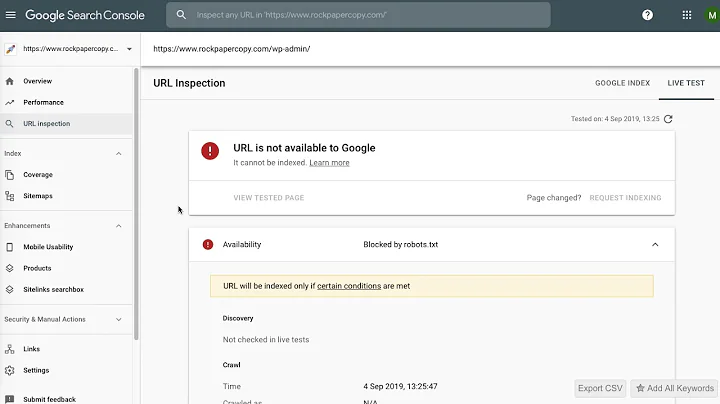

Stephen Ostermiller about 2 yearsRobots.txt doesn't have the ability to "send" bots anywhere. The default robots.txt with wordpress contains:

Stephen Ostermiller (about 2 years): Robots.txt doesn't have the ability to "send" bots anywhere. The default robots.txt with WordPress contains `Disallow: /wp-admin/`, which does just the opposite.

PJ Brunet (about 2 years): @StephenOstermiller "Allow" tells robots where to go, does it not? I would consider that "sending." Also see my post here: wordpress.stackexchange.com/questions/403753/…

Stephen Ostermiller (about 2 years): An `Allow:` is an exception to a `Disallow:` directive. It says that even though crawling is not allowed in `/wp-admin/`, it is allowed in `/wp-admin/admin-ajax.php`. Bots wouldn't typically try to crawl URLs that are listed as `Allow:` in `robots.txt`, but they would consult robots.txt to see if they can crawl such URLs when they are found on your site. A blank robots.txt is going to allow crawling of `/wp-admin/admin-ajax.php` too. It isn't the robots.txt file that is sending bots to that URL; rather, it is the code on the site itself (probably in JavaScript).
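The point that robots.txt only answers "may I fetch this URL?" (rather than pointing bots anywhere) can be sketched as a lookup function. This is a simplified version of the longest-match rule described in RFC 9309: the most specific matching path wins, and `Allow` wins a tie. It assumes the file has a single `User-agent: *` group and ignores the `*` and `$` wildcards. `parse_rules`, `is_allowed`, and `WORDPRESS_DEFAULT` are hypothetical names, and `WORDPRESS_DEFAULT` holds only the two rules discussed in these comments.

```python
def parse_rules(robots_txt):
    """Collect (directive, path) pairs from a robots.txt body.

    Simplification: every Allow/Disallow line is assumed to apply to our
    crawler, as if the file had a single `User-agent: *` group.
    """
    rules = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        if field.lower() in ("allow", "disallow"):
            rules.append((field.lower(), value))
    return rules


def is_allowed(rules, path):
    """Longest matching prefix wins; Allow wins a tie; no match means allowed."""
    best_len, allowed = -1, True
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # an empty `Disallow:` blocks nothing
        if len(prefix) > best_len or (len(prefix) == best_len and directive == "allow"):
            best_len, allowed = len(prefix), directive == "allow"
    return allowed


WORDPRESS_DEFAULT = """User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
"""

rules = parse_rules(WORDPRESS_DEFAULT)
for path in ["/wp-admin/options.php", "/wp-admin/admin-ajax.php", "/blog/hello-world/"]:
    print(path, is_allowed(rules, path))
print("blank robots.txt:", is_allowed(parse_rules(""), "/wp-admin/admin-ajax.php"))
```

The function only ever answers yes or no for a URL the crawler already found elsewhere, and a blank file answers yes for everything. Longest match is used here on purpose: Python's `urllib.robotparser` checks rules in file order (first match), so on this exact file it would report `/wp-admin/admin-ajax.php` as blocked.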