Linux server dropping RX packets in __netif_receive_skb_core

I finally found the source of the problem: these are Ethernet frames with an unknown EtherType. I thought those drops would go away in promiscuous (PROMISC) mode, but evidently they don't.

In my case, these were frames with EtherTypes 0x8912 and 0x88e1, sent by an AVM FritzBox router to detect powerline adapters. To confirm this, I block these frames with the following nftables ruleset in /etc/nftables.conf:

    table netdev filter {
        chain ingress {
            type filter hook ingress device eno1 priority 0; policy accept;
            meta protocol { 0x8912, 0x88e1 } drop
        }
    }

After that, the RX drops are gone! Even if not blocked, these frames are harmless and do not interfere with my server. I will keep blocking them anyway to keep monitoring clean, so that real interface drops and performance problems stand out.
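For illustration, `meta protocol` in the ruleset above essentially matches on the frame's EtherType, the 16-bit field at byte offset 12 of an Ethernet II header. Below is a minimal Python sketch of the same classification, assuming a raw frame that starts at the destination MAC and looking only at the outer EtherType (an 802.1Q-tagged frame would report 0x8100 here). The MAC address and test frames are made up:

```python
import struct

# EtherTypes the FritzBox sent in this case (powerline adapter discovery).
FRITZBOX_ETHERTYPES = {0x8912, 0x88E1}


def ethertype(frame: bytes) -> int:
    """Return the outer EtherType of a raw Ethernet II frame.

    Assumes the frame starts at the destination MAC (no preamble, no FCS).
    VLAN tags are not unwrapped, so tagged frames return 0x8100.
    """
    if len(frame) < 14:
        raise ValueError("frame shorter than an Ethernet header")
    (etype,) = struct.unpack_from("!H", frame, 12)  # big-endian 16-bit field
    return etype


def would_be_dropped(frame: bytes) -> bool:
    """Mimic the nftables rule: drop frames carrying one of the listed EtherTypes."""
    return ethertype(frame) in FRITZBOX_ETHERTYPES


# Hypothetical test frames: broadcast destination, made-up source MAC, padding.
dst = b"\xff" * 6
src = bytes.fromhex("02aabbccddee")
homeplug = dst + src + struct.pack("!H", 0x88E1) + bytes(46)
ipv4 = dst + src + struct.pack("!H", 0x0800) + bytes(46)

print(hex(ethertype(homeplug)), would_be_dropped(homeplug))  # 0x88e1 True
print(hex(ethertype(ipv4)), would_be_dropped(ipv4))  # 0x800 False
```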

Further information regarding these frames can be found here:

- https://pablo.tools/blog/computers/dropped-packets/

- https://www.ip-phone-forum.de/threads/ethertype-0x88e1-deaktivieren.279715/ (German)

- https://avm.de/service/fritzbox/fritzbox-7590/wissensdatenbank/publication/show/249_Firewall-meldet-Angriffe-an-TCP-Port-80-bzw-53805-oder-unangeforderte-Pakete-vom-Typ-0x88e1/ (German)

- https://superuser.com/questions/1421591/disable-homeplug-feature-on-fritzbox


Arun George


Updated on September 18, 2022


I have an Ubuntu 18.04 server dropping received packets and I cannot figure out why.

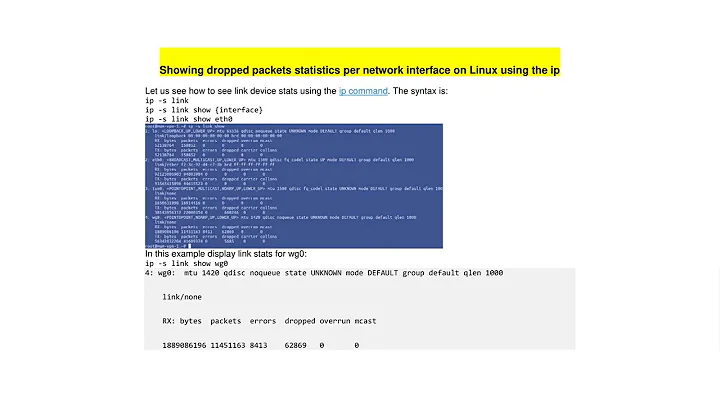

Here's a graph of dropped packets from netdata:

The server runs multiple Docker containers and networks, so it has several Linux bridges and veth interfaces. The problem concerns the physical interface, though. No VLANs are configured.

The machine has no iptables rules except the Docker-generated ones.

The NIC is an Intel I210 (

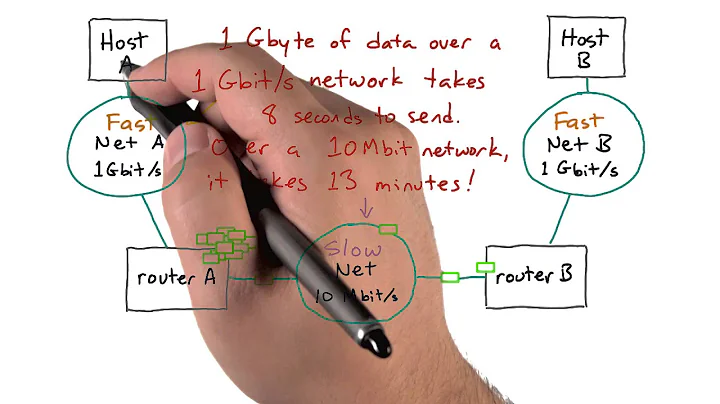

igbdriver).Copying data via TCP (rsync) is working at 1G line speed, therefore not a lot TCP packets can be harmed. (I would expect TCP drops to harm transmission performance greatly due to reduction of window size.)

    # uname -a
    Linux epyc 5.3.0-51-generic #44~18.04.2-Ubuntu SMP Thu Apr 23 14:27:18 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

One can see the RX-DRP on eno1, which is the only physical interface the machine has. It increases by about 2 packets/s under very light traffic (admin SSH and a few DNS queries only).

    # netstat -ni
    Kernel Interface table
    Iface       MTU    RX-OK RX-ERR RX-DRP RX-OVR    TX-OK TX-ERR TX-DRP TX-OVR Flg
    br-69eb0   1500    45768      0      0      0    41072      0      0      0 BMRU
    br-bf2c7   1500       71      0      0      0       85      0      0      0 BMRU
    br-f4e34   1500   187676      0      0      0   192128      0      0      0 BMRU
    docker0    1500    62739      0      0      0    70194      0      0      0 BMRU
    eno1       1500 55517866      0 271391     35 19796132      0      0      0 BMRU
    lo        65536     7381      0      0      0     7381      0      0      0 LRU
    veth078d   1500    40657      0      0      0    48148      0      0      0 BMRU
    veth231e   1500     2582      0      0      0     2323      0      0      0 BMRU
    veth2f4f   1500       19      0      0      0      164      0      0      0 BMRU

NIC settings (ethtool)

I tried to disable all RX hardware offloading settings that I could edit, but it didn't help.

I also increased the buffers, but that didn't help either.
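One way to read the `ethtool -S` output below: the driver's own per-queue drop counters add up to only 35 (matching `rx_fifo_errors` and the RX-OVR column from `netstat -ni`), while RX-DRP for eno1 was already 271391. That suggests most drops are counted above the driver. Below is a small Python sketch that parses such output and sums the `rx_queue_N_drops` counters, assuming the `name: value` line layout that `ethtool -S` prints. The sample lines are copied from the output below:

```python
import re


def parse_ethtool_stats(text: str) -> dict[str, int]:
    """Parse `ethtool -S <iface>` output ("name: value" per line) into a dict."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.strip().partition(":")
        # Skip header lines such as "NIC statistics:" that carry no number.
        if sep and value.strip().isdigit():
            stats[name] = int(value)
    return stats


def queue_drops(stats: dict[str, int]) -> int:
    """Sum the per-queue rx_queue_N_drops counters reported by the driver."""
    return sum(v for k, v in stats.items() if re.fullmatch(r"rx_queue_\d+_drops", k))


sample = """NIC statistics:
     rx_fifo_errors: 35
     rx_queue_0_drops: 0
     rx_queue_1_drops: 0
     rx_queue_2_drops: 35
     rx_queue_3_drops: 0
     rx_no_buffer_count: 0
"""
stats = parse_ethtool_stats(sample)
netstat_rx_drp = 271391  # RX-DRP for eno1 from the `netstat -ni` output above

print(queue_drops(stats), stats["rx_fifo_errors"])  # 35 35
print(netstat_rx_drp - queue_drops(stats))  # 271356
```

In practice the two outputs were captured at slightly different times, so the difference is only an order-of-magnitude indication.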

    # ethtool -S eno1 | grep rx
         rx_packets: 55580744
         rx_bytes: 76852450760
         rx_broadcast: 294019
         rx_multicast: 228993
         rx_crc_errors: 0
         rx_no_buffer_count: 0
         rx_missed_errors: 0
         rx_long_length_errors: 0
         rx_short_length_errors: 0
         rx_align_errors: 0
         rx_flow_control_xon: 0
         rx_flow_control_xoff: 0
         rx_long_byte_count: 76852450760
         rx_smbus: 66009
         os2bmc_rx_by_bmc: 19137
         os2bmc_rx_by_host: 190
         rx_hwtstamp_cleared: 0
         rx_errors: 0
         rx_length_errors: 0
         rx_over_errors: 0
         rx_frame_errors: 0
         rx_fifo_errors: 35
         rx_queue_0_packets: 16271369
         rx_queue_0_bytes: 22437386945
         rx_queue_0_drops: 0
         rx_queue_0_csum_err: 0
         rx_queue_0_alloc_failed: 0
         rx_queue_1_packets: 5913593
         rx_queue_1_bytes: 6458814275
         rx_queue_1_drops: 0
         rx_queue_1_csum_err: 1
         rx_queue_1_alloc_failed: 0
         rx_queue_2_packets: 29208019
         rx_queue_2_bytes: 42357497354
         rx_queue_2_drops: 35
         rx_queue_2_csum_err: 0
         rx_queue_2_alloc_failed: 0
         rx_queue_3_packets: 4121883
         rx_queue_3_bytes: 5366292094
         rx_queue_3_drops: 0
         rx_queue_3_csum_err: 0
         rx_queue_3_alloc_failed: 0

    # ethtool -k eno1 | grep -vE 'tx|fixed'
    Features for eno1:
    rx-checksumming: off
    scatter-gather: off
    tcp-segmentation-offload: off
    udp-fragmentation-offload: off
    generic-segmentation-offload: off
    generic-receive-offload: off
    rx-vlan-offload: off
    ntuple-filters: off
    receive-hashing: off
    rx-all: off
    hw-tc-offload: on

    # ethtool -g eno1
    Ring parameters for eno1:
    Pre-set maximums:
    RX:             4096
    RX Mini:        0
    RX Jumbo:       0
    TX:             4096
    Current hardware settings:
    RX:             256
    RX Mini:        0
    RX Jumbo:       0
    TX:             256

Dropwatch
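dropwatch, used in this section, reports drops as lines of the form `N drops at symbol+offset (address)`. Over a longer session it helps to total these per kernel symbol to see which drop site dominates. Below is a minimal Python sketch that assumes exactly that line format; the sample lines are taken from the dropwatch output below:

```python
import re
from collections import Counter

# Matches e.g. "12 drops at __netif_receive_skb_core+4a0 (0xffffffff979002d0)"
DROP_LINE = re.compile(r"^\s*(\d+) drops at ([\w.]+)\+[0-9a-f]+ \(0x[0-9a-f]+\)")


def drops_by_symbol(text: str) -> Counter:
    """Total dropwatch drop reports per kernel symbol (offsets are ignored)."""
    totals = Counter()
    for line in text.splitlines():
        m = DROP_LINE.match(line)
        if m:  # prompts and status lines simply don't match
            totals[m.group(2)] += int(m.group(1))
    return totals


sample = """6 drops at __netif_receive_skb_core+4a0 (0xffffffff979002d0)
1 drops at icmpv6_rcv+310 (0xffffffff97a2e6a0)
3 drops at __udp4_lib_rcv+a34 (0xffffffff979b0fc4)
12 drops at __netif_receive_skb_core+4a0 (0xffffffff979002d0)
6 drops at ip_forward+1b5 (0xffffffff97978615)"""

print(drops_by_symbol(sample).most_common(1))  # [('__netif_receive_skb_core', 18)]
```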

From this blog post I learned about the tool dropwatch, which gives this output:

    # sudo ./dropwatch -l kas
    Initalizing kallsyms db
    dropwatch> start
    Enabling monitoring...
    Kernel monitoring activated.
    Issue Ctrl-C to stop monitoring
    6 drops at __netif_receive_skb_core+4a0 (0xffffffff979002d0)
    1 drops at icmpv6_rcv+310 (0xffffffff97a2e6a0)
    1 drops at ip_rcv_finish_core.isra.18+1b4 (0xffffffff97976644)
    1 drops at __udp4_lib_rcv+a34 (0xffffffff979b0fc4)
    3 drops at __udp4_lib_rcv+a34 (0xffffffff979b0fc4)
    1 drops at unix_release_sock+1a7 (0xffffffff979f9977)
    1 drops at unix_release_sock+1a7 (0xffffffff979f9977)
    1 drops at sk_stream_kill_queues+4d (0xffffffff978eeffd)
    2 drops at unix_stream_connect+2e5 (0xffffffff979fae75)
    12 drops at __netif_receive_skb_core+4a0 (0xffffffff979002d0)
    1 drops at sk_stream_kill_queues+4d (0xffffffff978eeffd)
    1 drops at sk_stream_kill_queues+4d (0xffffffff978eeffd)
    2 drops at __udp4_lib_rcv+a34 (0xffffffff979b0fc4)
    2 drops at unix_stream_connect+2e5 (0xffffffff979fae75)
    6 drops at ip_forward+1b5 (0xffffffff97978615)
    1 drops at unix_release_sock+1a7 (0xffffffff979f9977)
    1 drops at __udp4_lib_rcv+a34 (0xffffffff979b0fc4)
    1 drops at sk_stream_kill_queues+4d (0xffffffff978eeffd)
    1 drops at sk_stream_kill_queues+4d (0xffffffff978eeffd)
    2 drops at unix_stream_connect+2e5 (0xffffffff979fae75)
    2 drops at unix_stream_connect+2e5 (0xffffffff979fae75)
    1 drops at unix_release_sock+1a7 (0xffffffff979f9977)
    12 drops at __netif_receive_skb_core+4a0 (0xffffffff979002d0)
    6 drops at ip_forward+1b5 (0xffffffff97978615)
    1 drops at tcp_v6_rcv+16c (0xffffffff97a3829c)
    2 drops at unix_stream_connect+2e5 (0xffffffff979fae75)
    12 drops at __netif_receive_skb_core+4a0 (0xffffffff979002d0)
    1 drops at sk_stream_kill_queues+4d (0xffffffff978eeffd)
    2 drops at unix_stream_connect+2e5 (0xffffffff979fae75)
    ^C

I read this as most drops happening in `__netif_receive_skb_core`.

The Red Hat Enterprise Linux Network Performance Tuning Guide says (chapter "The Adapter Queue"):

> The `netif_receive_skb()` kernel function will find the corresponding CPU for a packet, and enqueue packets in that CPU's queue. If the queue for that processor is full and already at maximum size, packets will be dropped.
>
> To tune this setting, first determine whether the backlog needs increasing. The `/proc/net/softnet_stat` file contains a counter in the 2nd column that is incremented when the netdev backlog queue overflows. If this value is incrementing over time, then `netdev_max_backlog` needs to be increased.

Increasing `netdev_max_backlog` didn't help, but this led me to the SoftIRQs.

SoftIRQ
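The check the Red Hat guide describes can be scripted. `/proc/net/softnet_stat` has one line per CPU with hexadecimal counters: column 2 counts packets dropped because the backlog was full, and column 3 counts "time squeezes", where the receive SoftIRQ ran out of budget or time with work still pending. Below is a minimal Python sketch that decodes only the first three columns (later columns differ between kernel versions). In practice one would read the file twice, some seconds apart, and compare. The sample rows are the first two CPUs from the output in this section:

```python
def parse_softnet_stat(text: str) -> list[dict[str, int]]:
    """Decode /proc/net/softnet_stat: one row per CPU, hexadecimal columns.

    Only the first three columns are interpreted:
    processed packets, backlog drops, and time_squeeze events.
    """
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) >= 3:
            processed, dropped, squeezed = (int(f, 16) for f in fields[:3])
            rows.append({"processed": processed, "dropped": dropped, "time_squeeze": squeezed})
    return rows


sample = """00024f83 00000000 000000e8 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
000152c0 00000000 0000008d 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000"""

rows = parse_softnet_stat(sample)
print(sum(r["dropped"] for r in rows), sum(r["time_squeeze"] for r in rows))  # 0 373
```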

According to the Red Hat document, there are several interesting aspects to SoftIRQ:

    # cat /proc/net/softnet_stat
    00024f83 00000000 000000e8 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    000152c0 00000000 0000008d 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    00010238 00000000 00000061 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    00010d8c 00000000 00000081 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    000f3cb3 00000000 00000d83 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    0009e391 00000000 0000050d 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    0025265b 00000000 00001023 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    00111a24 00000000 0000095a 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    008bcbf0 00000000 0000355d 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    004875d8 00000000 00002408 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    0001c93c 00000000 000000cc 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    00025fdb 00000000 000000fa 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    0005d1e5 00000000 000005f2 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    000f9bfd 00000000 00000b9e 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    000448bc 00000000 00000407 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000
    00044f25 00000000 00000415 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000

As you can see, the 2nd column is always 0, which does not match the statement above about `netif_receive_skb()`, but the values in the 3rd column keep increasing. This is described in "SoftIRQ Misses". Again, citing the Red Hat document:

> Occasionally, it is necessary to increase the time that SoftIRQs are allowed to run on the CPU. This is known as the `netdev_budget`. The default value of the budget is 300. This value can be doubled if the 3rd column in `/proc/net/softnet_stat` is increasing, which indicates that the SoftIRQ did not get enough CPU time.

Following this advice, I increased `net.core.netdev_budget` to 600. It didn't change a thing.

Other things I tried

The ethtool stats show traffic for the BMC (the `os2bmc_*` counters), because it shares the interface. I have since moved the BMC to its dedicated interface, but that did not improve the situation.

This document by SUSE gives some legitimate reasons for packet drops and a method to confirm that the drops are harmless: they should go away when the interface is put into PROMISC mode, since they are caused by unknown protocols or wrong VLAN tags. I enabled promiscuous mode, and packets still get dropped.
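To confirm that promiscuous mode is actually active during such a test (for example after `ip link set eno1 promisc on`), one can check the `IFF_PROMISC` bit (0x100, from `<linux/if.h>`) in the interface flags, which Linux exposes as a hex string in `/sys/class/net/<iface>/flags`. Below is a minimal Python sketch; the example flag values are illustrative and not taken from the question's machine:

```python
IFF_PROMISC = 0x100  # value from <linux/if.h>


def is_promisc(flags_text: str) -> bool:
    """Interpret the contents of /sys/class/net/<iface>/flags, e.g. "0x1103"."""
    return bool(int(flags_text.strip(), 16) & IFF_PROMISC)


def read_flags(iface: str) -> str:
    """Read the raw flags string for an interface from sysfs (Linux only)."""
    with open(f"/sys/class/net/{iface}/flags") as f:
        return f.read()


# Illustrative values: UP|BROADCAST|MULTICAST, then the same with PROMISC added.
print(is_promisc("0x1003"), is_promisc("0x1103"))  # False True
```

On the server itself, `is_promisc(read_flags("eno1"))` would give the live answer.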

Suspecting oversized jumbo frames, I changed the MTU to 9000. This didn't help.
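Each of these experiments was judged by whether RX-DRP kept climbing (about 2 packets/s on eno1). Below is a minimal Python sketch for measuring that rate. It assumes the standard sysfs counter `/sys/class/net/<iface>/statistics/rx_dropped`, which closely tracks what `netstat -ni` shows as RX-DRP. The numbers in the usage line are hypothetical:

```python
import time


def rx_dropped_path(iface: str) -> str:
    """Standard sysfs location of the per-interface RX drop counter."""
    return f"/sys/class/net/{iface}/statistics/rx_dropped"


def read_counter(path: str) -> int:
    with open(path) as f:
        return int(f.read())


def drop_rate(before: int, after: int, seconds: float) -> float:
    """Drops per second between two counter samples."""
    if seconds <= 0:
        raise ValueError("interval must be positive")
    return (after - before) / seconds


def sample_rate(iface: str, seconds: float = 10.0) -> float:
    """Sample rx_dropped twice, `seconds` apart, and return the drop rate (Linux only)."""
    path = rx_dropped_path(iface)
    before = read_counter(path)
    time.sleep(seconds)
    return drop_rate(before, read_counter(path), seconds)


# Hypothetical samples: 20 drops over 10 seconds.
print(drop_rate(271391, 271411, 10.0))  # 2.0
```

Running `sample_rate("eno1")` before and after each change gives a direct comparison.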