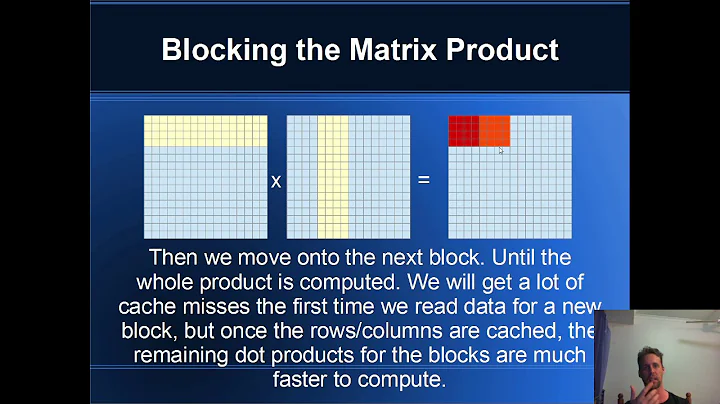

loop tiling/blocking for large dense matrix multiplication

The best results I've gotten are by adding one more for loop that blocks over your N, and by rearranging the loops. I also hoisted loop-invariant code, but the compiler's optimizer should hopefully do this automatically. The block size should be the cache line size divided by sizeof(float). This got it ~50% faster than the transposed approach.

If you have to pick just one of AVX or blocking, using AVX extensions (vfmadd###ps and haddps) is still substantially faster. Using both is best and straightforward to add given that you're already testing if the block size is a multiple of 64 / sizeof(float) == 16 floats == two 256-bit AVX registers.

- Transposed: 1,816,522 ticks

- Tiling: 892,431 (51% faster)

- AVX+tiling: 230,512 (87% faster)

Tiling:

void matrix_mult_wiki_block(const float*A , const float* B, float* C,

const int N, const int M, const int K) {

const int block_size = 64 / sizeof(float); // 64 = common cache line size

for(int i=0; i<N; i++) {

for(int j=0; j<K; j++) {

C[K*i + j] = 0;

}

}

for (int i0 = 0; i0 < N; i0 += block_size) {

int imax = i0 + block_size > N ? N : i0 + block_size;

for (int j0 = 0; j0 < M; j0 += block_size) {

int jmax = j0 + block_size > M ? M : j0 + block_size;

for (int k0 = 0; k0 < K; k0 += block_size) {

int kmax = k0 + block_size > K ? K : k0 + block_size;

for (int j1 = j0; j1 < jmax; ++j1) {

int sj = M * j1;

for (int i1 = i0; i1 < imax; ++i1) {

int mi = M * i1;

int ki = K * i1;

int kij = ki + j1;

for (int k1 = k0; k1 < kmax; ++k1) {

C[kij] += A[mi + k1] * B[sj + k1];

}

}

}

}

}

}

}

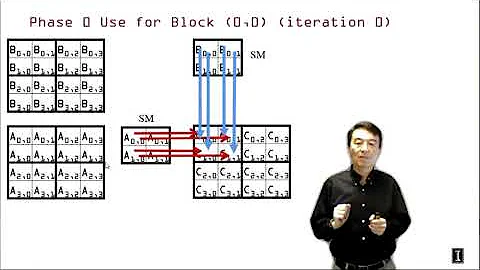

As for the Cannon reference, the SUMMA algorithm is a better one to follow.

In case anyone else is optimizing tall-skinny multiplications ({~1e9 x 50} x {50 x 50}, how I ended up here), the transposed approach is nearly identical in performance to the blocked approach up to n=18 (floats). n=18 is a pathological case (way worse than 17 or 19) and I don't quite see the cache access patterns that cause this. All larger n are improved with the blocked approach.

Related videos on Youtube

Leos313

Enthusiasm, initiative, curiosity, and dynamism have always pushed him during the academic education and work. He always sets goals, looking for something that allows him to grow personally, professionally, and especially culturally. He still doesn´t know why he is describing himself in the third person. Strong motivation for professional development in the following areas: Embedded System and Firmware development High-Performance Computing Hardware Accelerator Parallel Computing Machine learning |___|_O_|___| |___|___|_O_| |_O_|_O_|_O_| Some open-source works can be found here.

Updated on September 16, 2022Comments

-

Leos313 over 1 year

Leos313 over 1 yearI was wondering if someone could show me how to use loop tiling/loop blocking for large dense matrix multiplication effectively. I am doing C = AB with 1000x1000 matrices. I have followed the example on Wikipedia for loop tiling but I get worse results using tiling than without.

http://en.wikipedia.org/wiki/Loop_tiling

I have provided some code below. The naive method is very slow due to cache misses. The transpose method creates the transpose of B in a buffer. This method gives the fastest result (matrix multiplication goes as O(n^3) and transpose as O(n^2) so doing the transpose is at least 1000x faster). The wiki method without blocking is also fast and does not need a buffer. The blocking method is slower. Another problem with blocking is it has to update the block several times. This is a challenge for threading/OpenMP because it can cause race conditions if one is not careful.

I should point out that using AVX with a modification of the transpose method I get results faster than Eigen. However, my results with SSE are a bit slower than Eigen so I think I could use caching better.

Edit: I think I have an idea what I want to do. It comes from Cannon's algorithm from 1969.

http://en.wikipedia.org/wiki/Matrix_multiplication#Communication-avoiding_and_distributed_algorithmsI need to do block matrix multiplication and have each thread handle a sub-matrix of C rather than A and B. For example if I divide my matrices into four blocks. I would do:

+-+ +-+ +-+ +-+ +-+ +-+ | | | | | | | C1 C2 | | A1 A2 | | B1 B2 | | | = | | x | | | C3 C4 | | A3 A4 | | B3 B4 | | | | | | | +-+ +-+ +-+ +-+ +-+ +-+ thread 1: C1 = A1B1 + A2B3 thread 2: C2 = A1B2 + A2B4 thread 3: C3 = A3B1 + A4B3 thread 4: C4 = A3B2 + A4B4That removes the race condition. I'll have to think about this.

void matrix_mult_naive(const float*A , const float* B, float* C, const int N, const int M, const int K) { #pragma omp parallel for for(int i=0; i<N; i++) { for(int j=0; j<K; j++) { float tmp = 0; for(int l=0; l<M; l++) { tmp += A[M*i+l]*B[K*l+j]; } C[K*i + j] = tmp; } } } void matrix_mult_transpose(const float*A , const float* B, float* C, const int N, const int M, const int K) { float *B2 = (float*)aligned_malloc(M*K*sizeof(float), 32); transpose(B, B2, M, K, 1); #pragma omp parallel for for(int i=0; i<N; i++) { for(int j=0; j<K; j++) { float tmp = 0; for(int l=0; l<M; l++) { tmp += A[M*i+l]*B2[M*j+l]; } C[K*i + j] = tmp; } } aligned_free(B2); } void matrix_mult_wiki(const float*A , const float* B, float* C, const int N, const int M, const int K) { for(int i=0; i<N; i++) { for(int j=0; j<K; j++) { C[K*i + j] = 0; } } #pragma omp parallel for for(int i=0; i<N; i++) { for(int l=0; l<M; l++) { float a = A[M*i+l]; for(int j=0; j<K; j++) { C[K*i + j] += a*B[K*l+j]; } } } } void matrix_mult_wiki_block(const float*A , const float* B, float* C, const int N, const int M, const int K) { const int block_size = 8; //I have tried several different block sizes for(int i=0; i<N; i++) { for(int j=0; j<K; j++) { C[K*i + j] = 0; } } for(int l2=0; l2<M; l2+=block_size) { for(int j2=0; j2<K; j2+=block_size) { #pragma omp parallel for for(int i=0; i<N; i++) { for(int l=l2; l<min(M, l2+block_size); l++) { for(int j=j2; j<min(K, j2+block_size); j++) { C[K*i + j] += A[M*i+l]*B[K*l+j]; } } } } } }-

Jonathan Dursi about 11 yearsYou want the parallel for to go around the outer (tile) loops, not within them. The idea is for each core to be able to do its tile multiplication in fast local cache, and for several cores to be able to do that at the same time.

Jonathan Dursi about 11 yearsYou want the parallel for to go around the outer (tile) loops, not within them. The idea is for each core to be able to do its tile multiplication in fast local cache, and for several cores to be able to do that at the same time. -

Admin about 11 yearsThat creates a race condition. C[K*i +j] is being written to several times.

Admin about 11 yearsThat creates a race condition. C[K*i +j] is being written to several times. -

Admin about 11 yearsI mean for for example that for i=0, j=0 C[0] is written to multiple times in the block method.

Admin about 11 yearsI mean for for example that for i=0, j=0 C[0] is written to multiple times in the block method. -

Always Asking over 10 yearsHave you tried a different loop permutation? For matrix multiplication without tiling, lij order leads to fewer cache misses compared to ijl order that you are using.

-

Bing Bang about 8 yearsHave you tried row skipping? If you have t threads and nxn matrix, each thread i where 0 <= i < t starts by computing the ith row then skip to row t+i, then 2t+i etc until mt+i >= n for some m. If you have many cores on your cpu, this will be fast

-

-

American curl about 7 yearsCould you please explain why the for loop "for (int j0 = 0; j0 < M; j0 += block_size) " j0 needs to < N but not K?

American curl about 7 yearsCould you please explain why the for loop "for (int j0 = 0; j0 < M; j0 += block_size) " j0 needs to < N but not K?