Efficient 4x4 matrix multiplication (C vs assembly)

Solution 1

There is a way to accelerate the code and outplay the compiler. It does not involve any sophisticated pipeline analysis or deep code micro-optimisation (which doesn't mean that it couldn't further benefit from these). The optimisation uses three simple tricks:

The function is now 32-byte aligned (which significantly boosted performance),

Main loop goes inversely, which reduces comparison to a zero test (based on EFLAGS),

Instruction-level address arithmetic proved to be faster than the "external" pointer calculation (even though it requires twice as much additions «in 3/4 cases»). It shortened the loop body by four instructions and reduced data dependencies within its execution path. See related question.

Additionally, the code uses a relative jump syntax which suppresses symbol redefinition error, which occurs when GCC tries to inline it (after being placed within asm statement and compiled with -O3).

.text

.align 32 # 1. function entry alignment

.globl matrixMultiplyASM # (for a faster call)

.type matrixMultiplyASM, @function

matrixMultiplyASM:

movaps (%rdi), %xmm0

movaps 16(%rdi), %xmm1

movaps 32(%rdi), %xmm2

movaps 48(%rdi), %xmm3

movq $48, %rcx # 2. loop reversal

1: # (for simpler exit condition)

movss (%rsi, %rcx), %xmm4 # 3. extended address operands

shufps $0, %xmm4, %xmm4 # (faster than pointer calculation)

mulps %xmm0, %xmm4

movaps %xmm4, %xmm5

movss 4(%rsi, %rcx), %xmm4

shufps $0, %xmm4, %xmm4

mulps %xmm1, %xmm4

addps %xmm4, %xmm5

movss 8(%rsi, %rcx), %xmm4

shufps $0, %xmm4, %xmm4

mulps %xmm2, %xmm4

addps %xmm4, %xmm5

movss 12(%rsi, %rcx), %xmm4

shufps $0, %xmm4, %xmm4

mulps %xmm3, %xmm4

addps %xmm4, %xmm5

movaps %xmm5, (%rdx, %rcx)

subq $16, %rcx # one 'sub' (vs 'add' & 'cmp')

jge 1b # SF=OF, idiom: jump if positive

ret

This is the fastest x86-64 implementation I have seen so far. I will appreciate, vote up and accept any answer providing a faster piece of assembly for that purpose!

Solution 2

4x4 matrix multiplication is 64 multiplications and 48 additions. Using SSE this can be reduced to 16 multiplications and 12 additions (and 16 broadcasts). The following code will do this for you. It only requires SSE (#include <xmmintrin.h>). The arrays A, B, and C need to be 16 byte aligned. Using horizontal instructions such as hadd (SSE3) and dpps (SSE4.1) will be less efficient (especially dpps). I don't know if loop unrolling will help.

void M4x4_SSE(float *A, float *B, float *C) {

__m128 row1 = _mm_load_ps(&B[0]);

__m128 row2 = _mm_load_ps(&B[4]);

__m128 row3 = _mm_load_ps(&B[8]);

__m128 row4 = _mm_load_ps(&B[12]);

for(int i=0; i<4; i++) {

__m128 brod1 = _mm_set1_ps(A[4*i + 0]);

__m128 brod2 = _mm_set1_ps(A[4*i + 1]);

__m128 brod3 = _mm_set1_ps(A[4*i + 2]);

__m128 brod4 = _mm_set1_ps(A[4*i + 3]);

__m128 row = _mm_add_ps(

_mm_add_ps(

_mm_mul_ps(brod1, row1),

_mm_mul_ps(brod2, row2)),

_mm_add_ps(

_mm_mul_ps(brod3, row3),

_mm_mul_ps(brod4, row4)));

_mm_store_ps(&C[4*i], row);

}

}

Solution 3

Sandy Bridge an above extend the instruction set to support 8 element vector arithmetic. Consider this implementation.

struct MATRIX {

union {

float f[4][4];

__m128 m[4];

__m256 n[2];

};

};

MATRIX myMultiply(MATRIX M1, MATRIX M2) {

// Perform a 4x4 matrix multiply by a 4x4 matrix

// Be sure to run in 64 bit mode and set right flags

// Properties, C/C++, Enable Enhanced Instruction, /arch:AVX

// Having MATRIX on a 32 byte bundry does help performance

MATRIX mResult;

__m256 a0, a1, b0, b1;

__m256 c0, c1, c2, c3, c4, c5, c6, c7;

__m256 t0, t1, u0, u1;

t0 = M1.n[0]; // t0 = a00, a01, a02, a03, a10, a11, a12, a13

t1 = M1.n[1]; // t1 = a20, a21, a22, a23, a30, a31, a32, a33

u0 = M2.n[0]; // u0 = b00, b01, b02, b03, b10, b11, b12, b13

u1 = M2.n[1]; // u1 = b20, b21, b22, b23, b30, b31, b32, b33

a0 = _mm256_shuffle_ps(t0, t0, _MM_SHUFFLE(0, 0, 0, 0)); // a0 = a00, a00, a00, a00, a10, a10, a10, a10

a1 = _mm256_shuffle_ps(t1, t1, _MM_SHUFFLE(0, 0, 0, 0)); // a1 = a20, a20, a20, a20, a30, a30, a30, a30

b0 = _mm256_permute2f128_ps(u0, u0, 0x00); // b0 = b00, b01, b02, b03, b00, b01, b02, b03

c0 = _mm256_mul_ps(a0, b0); // c0 = a00*b00 a00*b01 a00*b02 a00*b03 a10*b00 a10*b01 a10*b02 a10*b03

c1 = _mm256_mul_ps(a1, b0); // c1 = a20*b00 a20*b01 a20*b02 a20*b03 a30*b00 a30*b01 a30*b02 a30*b03

a0 = _mm256_shuffle_ps(t0, t0, _MM_SHUFFLE(1, 1, 1, 1)); // a0 = a01, a01, a01, a01, a11, a11, a11, a11

a1 = _mm256_shuffle_ps(t1, t1, _MM_SHUFFLE(1, 1, 1, 1)); // a1 = a21, a21, a21, a21, a31, a31, a31, a31

b0 = _mm256_permute2f128_ps(u0, u0, 0x11); // b0 = b10, b11, b12, b13, b10, b11, b12, b13

c2 = _mm256_mul_ps(a0, b0); // c2 = a01*b10 a01*b11 a01*b12 a01*b13 a11*b10 a11*b11 a11*b12 a11*b13

c3 = _mm256_mul_ps(a1, b0); // c3 = a21*b10 a21*b11 a21*b12 a21*b13 a31*b10 a31*b11 a31*b12 a31*b13

a0 = _mm256_shuffle_ps(t0, t0, _MM_SHUFFLE(2, 2, 2, 2)); // a0 = a02, a02, a02, a02, a12, a12, a12, a12

a1 = _mm256_shuffle_ps(t1, t1, _MM_SHUFFLE(2, 2, 2, 2)); // a1 = a22, a22, a22, a22, a32, a32, a32, a32

b1 = _mm256_permute2f128_ps(u1, u1, 0x00); // b0 = b20, b21, b22, b23, b20, b21, b22, b23

c4 = _mm256_mul_ps(a0, b1); // c4 = a02*b20 a02*b21 a02*b22 a02*b23 a12*b20 a12*b21 a12*b22 a12*b23

c5 = _mm256_mul_ps(a1, b1); // c5 = a22*b20 a22*b21 a22*b22 a22*b23 a32*b20 a32*b21 a32*b22 a32*b23

a0 = _mm256_shuffle_ps(t0, t0, _MM_SHUFFLE(3, 3, 3, 3)); // a0 = a03, a03, a03, a03, a13, a13, a13, a13

a1 = _mm256_shuffle_ps(t1, t1, _MM_SHUFFLE(3, 3, 3, 3)); // a1 = a23, a23, a23, a23, a33, a33, a33, a33

b1 = _mm256_permute2f128_ps(u1, u1, 0x11); // b0 = b30, b31, b32, b33, b30, b31, b32, b33

c6 = _mm256_mul_ps(a0, b1); // c6 = a03*b30 a03*b31 a03*b32 a03*b33 a13*b30 a13*b31 a13*b32 a13*b33

c7 = _mm256_mul_ps(a1, b1); // c7 = a23*b30 a23*b31 a23*b32 a23*b33 a33*b30 a33*b31 a33*b32 a33*b33

c0 = _mm256_add_ps(c0, c2); // c0 = c0 + c2 (two terms, first two rows)

c4 = _mm256_add_ps(c4, c6); // c4 = c4 + c6 (the other two terms, first two rows)

c1 = _mm256_add_ps(c1, c3); // c1 = c1 + c3 (two terms, second two rows)

c5 = _mm256_add_ps(c5, c7); // c5 = c5 + c7 (the other two terms, second two rose)

// Finally complete addition of all four terms and return the results

mResult.n[0] = _mm256_add_ps(c0, c4); // n0 = a00*b00+a01*b10+a02*b20+a03*b30 a00*b01+a01*b11+a02*b21+a03*b31 a00*b02+a01*b12+a02*b22+a03*b32 a00*b03+a01*b13+a02*b23+a03*b33

// a10*b00+a11*b10+a12*b20+a13*b30 a10*b01+a11*b11+a12*b21+a13*b31 a10*b02+a11*b12+a12*b22+a13*b32 a10*b03+a11*b13+a12*b23+a13*b33

mResult.n[1] = _mm256_add_ps(c1, c5); // n1 = a20*b00+a21*b10+a22*b20+a23*b30 a20*b01+a21*b11+a22*b21+a23*b31 a20*b02+a21*b12+a22*b22+a23*b32 a20*b03+a21*b13+a22*b23+a23*b33

// a30*b00+a31*b10+a32*b20+a33*b30 a30*b01+a31*b11+a32*b21+a33*b31 a30*b02+a31*b12+a32*b22+a33*b32 a30*b03+a31*b13+a32*b23+a33*b33

return mResult;

}

Solution 4

I wonder if transposing one of the matrices may be beneficial.

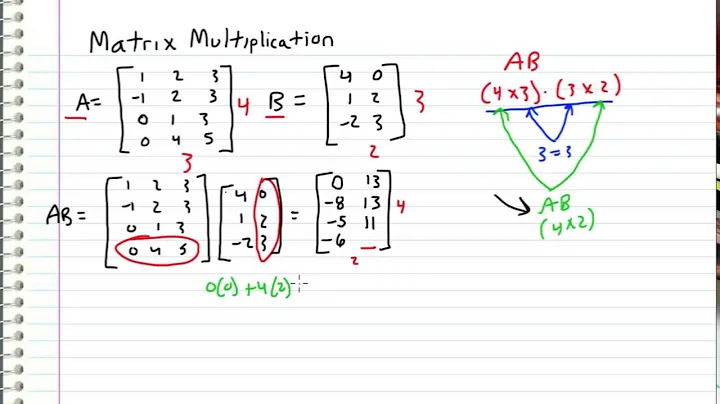

Consider how we multiply the following two matrices ...

A1 A2 A3 A4 W1 W2 W3 W4

B1 B2 B3 B4 X1 X2 X3 X4

C1 C2 C3 C4 * Y1 Y2 Y3 Y4

D1 D2 D3 D4 Z1 Z2 Z3 Z4

This would result in ...

dot(A,?1) dot(A,?2) dot(A,?3) dot(A,?4)

dot(B,?1) dot(B,?2) dot(B,?3) dot(B,?4)

dot(C,?1) dot(C,?2) dot(C,?3) dot(C,?4)

dot(D,?1) dot(D,?2) dot(D,?3) dot(D,?4)

Doing the dot product of a row and a column is a pain.

What if we transposed the second matrix before we multiplied?

A1 A2 A3 A4 W1 X1 Y1 Z1

B1 B2 B3 B4 W2 X2 Y2 Z2

C1 C2 C3 C4 * W3 X3 Y3 Z3

D1 D2 D3 D4 W4 X4 Y4 Z4

Now instead doing the dot product of a row and column, we are doing the dot product of two rows. This could lend itself to better use of the SIMD instructions.

Hope this helps.

Related videos on Youtube

Krzysztof Abramowicz

I am a Grid Computing student of the University of Amsterdam and a Computer Science passionate. Beside looking at my computer's screen, I admire the world through the lens of my camera, documenting my hikes and capturing my memories through photographs. When my mind is tired, I convert some calories into fresh mental energy during running or cycling. While being a social person, I also exchange energy with family, friends and colleagues, usually with predominance of emission over absorption.

Updated on September 08, 2020Comments

-

Krzysztof Abramowicz over 3 years

Krzysztof Abramowicz over 3 yearsI'm looking for a faster and trickier way to multiply two 4x4 matrices in C. My current research is focused on x86-64 assembly with SIMD extensions. So far, I've created a function witch is about 6x faster than a naive C implementation, which has exceeded my expectations for the performance improvement. Unfortunately, this stays true only when no optimization flags are used for compilation (GCC 4.7). With

-O2, C becomes faster and my effort becomes meaningless.I know that modern compilers make use of complex optimization techniques to achieve an almost perfect code, usually faster than an ingenious piece of hand-crafed assembly. But in a minority of performance-critical cases, a human may try to fight for clock cycles with the compiler. Especially, when some mathematics backed with a modern ISA can be explored (as it is in my case).

My function looks as follows (AT&T syntax, GNU Assembler):

.text .globl matrixMultiplyASM .type matrixMultiplyASM, @function matrixMultiplyASM: movaps (%rdi), %xmm0 # fetch the first matrix (use four registers) movaps 16(%rdi), %xmm1 movaps 32(%rdi), %xmm2 movaps 48(%rdi), %xmm3 xorq %rcx, %rcx # reset (forward) loop iterator .ROW: movss (%rsi), %xmm4 # Compute four values (one row) in parallel: shufps $0x0, %xmm4, %xmm4 # 4x 4FP mul's, 3x 4FP add's 6x mov's per row, mulps %xmm0, %xmm4 # expressed in four sequences of 5 instructions, movaps %xmm4, %xmm5 # executed 4 times for 1 matrix multiplication. addq $0x4, %rsi movss (%rsi), %xmm4 # movss + shufps comprise _mm_set1_ps intrinsic shufps $0x0, %xmm4, %xmm4 # mulps %xmm1, %xmm4 addps %xmm4, %xmm5 addq $0x4, %rsi # manual pointer arithmetic simplifies addressing movss (%rsi), %xmm4 shufps $0x0, %xmm4, %xmm4 mulps %xmm2, %xmm4 # actual computation happens here addps %xmm4, %xmm5 # addq $0x4, %rsi movss (%rsi), %xmm4 # one mulps operand fetched per sequence shufps $0x0, %xmm4, %xmm4 # | mulps %xmm3, %xmm4 # the other is already waiting in %xmm[0-3] addps %xmm4, %xmm5 addq $0x4, %rsi # 5 preceding comments stride among the 4 blocks movaps %xmm5, (%rdx,%rcx) # store the resulting row, actually, a column addq $0x10, %rcx # (matrices are stored in column-major order) cmpq $0x40, %rcx jne .ROW ret .size matrixMultiplyASM, .-matrixMultiplyASMIt calculates a whole column of the resultant matrix per iteration, by processing four floats packed in 128-bit SSE registers. The full vectorisation is possible with a bit of math (operation reordering and aggregation) and

mullps/addpsinstructions for parallel multiplication/addition of 4xfloat packages. The code reuses registers meant for passing parameters (%rdi,%rsi,%rdx: GNU/Linux ABI), benefits from (inner) loop unrolling and holds one matrix entirely in XMM registers to reduce memory reads. A you can see, I have researched the topic and took my time to implement it the best I can.The naive C calculation conquering my code looks like this:

void matrixMultiplyNormal(mat4_t *mat_a, mat4_t *mat_b, mat4_t *mat_r) { for (unsigned int i = 0; i < 16; i += 4) for (unsigned int j = 0; j < 4; ++j) mat_r->m[i + j] = (mat_b->m[i + 0] * mat_a->m[j + 0]) + (mat_b->m[i + 1] * mat_a->m[j + 4]) + (mat_b->m[i + 2] * mat_a->m[j + 8]) + (mat_b->m[i + 3] * mat_a->m[j + 12]); }I have investigated the optimised assembly output of the above's C code which, while storing floats in XMM registers, does not involve any parallel operations – just scalar calculations, pointer arithmetic and conditional jumps. The compiler's code seems to be less deliberate, but it is still slightly more effective than my vectorised version expected to be about 4x faster. I'm sure that the general idea is correct – programmers do similar things with rewarding results. But what is wrong here? Are there any register allocation or instruction scheduling issues I am not aware of? Do you know any x86-64 assembly tools or tricks to support my battle against the machine?

-

Basile Starynkevitch over 10 yearsRecent compilers can micro-optimize better than humans. Focus on algorithmic optimization!

Basile Starynkevitch over 10 yearsRecent compilers can micro-optimize better than humans. Focus on algorithmic optimization! -

Krzysztof Abramowicz over 10 yearsThis is exactly what I've done -- I used an alternative calculation to adapt the problem for SSE. It is actually a different algorithm. The problem is, probably, that now I also have to optimize it at the instruction level because, while focusing on the algorithm, I might have introduced data dependency problems, ineffective memory access patterns or some other black magic.

Krzysztof Abramowicz over 10 yearsThis is exactly what I've done -- I used an alternative calculation to adapt the problem for SSE. It is actually a different algorithm. The problem is, probably, that now I also have to optimize it at the instruction level because, while focusing on the algorithm, I might have introduced data dependency problems, ineffective memory access patterns or some other black magic. -

Brett Hale over 10 yearsYou might be better off using SSE intrinsics available through

<immintrin.h>- you can try other things like_mm_dp_pswith_MM_TRANSPOSE4_PS, without maintaining assembly. -

caf over 10 yearsIf you add the

restrictqualifier to the pointer arguments to the C function and compile with-O3, GCC will vectorise it. Without therestrictqualifiers, the compiler has to assume that the output matrix could be the same as one of the input matrices. -

Z boson over 10 years@BrettHale, I agree intrinsics are the way to do this but

Z boson over 10 years@BrettHale, I agree intrinsics are the way to do this but_mm_dp_psor_MM_TRANSPOSE4_PSwill be inefficient. See my answer and stackoverflow.com/questions/14967969/… -

Krzysztof Abramowicz over 10 yearsI have also an implementation utilizing SSE intrinsics which gives only about 10% speedup with -O2, while being almost two times slower when optimization is disabled. Since it's so optimization-dependant, I decided to touch pure assembly to make the most of the SSE approach. Unfortunately, this led to the slowest solution. Maybe I should go back to intrinsics and experiment more in this area.

Krzysztof Abramowicz over 10 yearsI have also an implementation utilizing SSE intrinsics which gives only about 10% speedup with -O2, while being almost two times slower when optimization is disabled. Since it's so optimization-dependant, I decided to touch pure assembly to make the most of the SSE approach. Unfortunately, this led to the slowest solution. Maybe I should go back to intrinsics and experiment more in this area. -

Z boson over 10 years@KrzysztofAbramowicz, AVX has been out for quite a while now. Have you considered doing this with AVX? I can add some code showing how to get twice the speed as SSE for 4x4 matrix multiplication. At least for multiplying an array of 4x4 matrices times a fixed one.

Z boson over 10 years@KrzysztofAbramowicz, AVX has been out for quite a while now. Have you considered doing this with AVX? I can add some code showing how to get twice the speed as SSE for 4x4 matrix multiplication. At least for multiplying an array of 4x4 matrices times a fixed one. -

Krzysztof Abramowicz over 10 yearsI found similar considerations in questions how to achieve 4 FLOPs per cycle and C code loop performance.

Krzysztof Abramowicz over 10 yearsI found similar considerations in questions how to achieve 4 FLOPs per cycle and C code loop performance. -

CoffeDeveloper about 9 yearshow does "restrict" translates to other compilers ? (clang, msvc, icc)

CoffeDeveloper about 9 yearshow does "restrict" translates to other compilers ? (clang, msvc, icc) -

Simply Beautiful Art over 4 yearsFrom a mathematical standpoint, it is possible to reduce this to 48 multiplications and 120 additions, which may be of interest.

Simply Beautiful Art over 4 yearsFrom a mathematical standpoint, it is possible to reduce this to 48 multiplications and 120 additions, which may be of interest.

-

-

Z boson over 10 yearsYou almost never want to do a dot product of two vectors with SSE. Instead you do do four dot products at once. You do the same thing you do with scalar code but instead you use SIMD registers. E.g. for four components vectors this means you do 4

Z boson over 10 yearsYou almost never want to do a dot product of two vectors with SSE. Instead you do do four dot products at once. You do the same thing you do with scalar code but instead you use SIMD registers. E.g. for four components vectors this means you do 4_mm_mul_psand 3_mm_add_psand this gives you four dot products. -

Sparky over 10 years@redrum: I see. Until now, I've been using combinations of "mulps" and "haddps" for dot products and matrix,vector multiplication. Looks like I have some more tweaking to do.

-

Z boson over 10 years

Z boson over 10 yearshaddhas its use sometimes but not in this case. I have never founddppsto be useful. -

Krzysztof Abramowicz over 10 yearsMany thanks for your answer. The code looks better than my previous experiment with SSE intrinsics for matrix multiplication. It also gives a better-looking assembly with

Krzysztof Abramowicz over 10 yearsMany thanks for your answer. The code looks better than my previous experiment with SSE intrinsics for matrix multiplication. It also gives a better-looking assembly with-O2and runs a bit faster than mine. But I am still wondering why I cannot achieve at least same results with pure assembly. -

Z boson over 10 yearsIf you're using GCC why are you not compiling with

Z boson over 10 yearsIf you're using GCC why are you not compiling with-O3? -

Krzysztof Abramowicz over 10 yearsMaybe because I've always been told that

Krzysztof Abramowicz over 10 yearsMaybe because I've always been told that-O3introduces aggressive optimisation techniques which may not boost performance, but may introduce additional cost, e.g. by increasing code size when unrolling loops or inlining functions. But you're right – first-O3, then low-level optimisation! :-) Fortunately, in my example it doesn't make much difference. -

Praxeolitic about 10 yearsI'm having trouble getting this to work. I'm calling it from C with this signature: void abramowicz_MM4x4(float *A, float *B, float *C); And then I have the assembly in another file named to match gcc name mangling: .globl _Z16abramowicz_MM4x4PfS_S _Z16abramowicz_MM4x4PfS_S: The call gives incorrect values. What might be going wrong?

Praxeolitic about 10 yearsI'm having trouble getting this to work. I'm calling it from C with this signature: void abramowicz_MM4x4(float *A, float *B, float *C); And then I have the assembly in another file named to match gcc name mangling: .globl _Z16abramowicz_MM4x4PfS_S _Z16abramowicz_MM4x4PfS_S: The call gives incorrect values. What might be going wrong? -

Praxeolitic about 10 yearsThe issue was that the order of the arguments are flipped. For anyone who is going to try this either flip A and B in the function signature in C or flip rdi and rsi in the asm.

Praxeolitic about 10 yearsThe issue was that the order of the arguments are flipped. For anyone who is going to try this either flip A and B in the function signature in C or flip rdi and rsi in the asm. -

user1095108 over 9 years@Zboson Would you mind explaining your statement a bit further please? Why would you do 4

_mm_mul_pss instead of_mm_mul_sss, if everything is the same as in the scalar case? -

Z boson over 9 years@user1095108, I mean the SIMD code if used efficiently looks almost the same as the scalar case. Consider the 3D dot product

Z boson over 9 years@user1095108, I mean the SIMD code if used efficiently looks almost the same as the scalar case. Consider the 3D dot productw = x1x2 + y1y2 +z1z1. The variables here can be scalars or SIMD vectors and the resultswis either a single number or the result of multiple dot products at once. Each case uses the same number of instructions: 3 mults and 2 adds. But the scalar case uses scalar instructions and the SIMD case the SIMD instructions. -

user1095108 over 9 years@Zboson I've implemented your trick and it works very well. If you do need a single dot product, not a matrix multiplication row, you'd use _mm_dp_ps or _mm_hadd_ps?

-

Z boson over 9 years@user1095108, I have never used

Z boson over 9 years@user1095108, I have never used_mm_dp_psor_mm_hadd_psfor a single dot product. I would try to reorganize my code so that I don't have to. Read this cdl.uni-saarland.de/papers/leissa_vecimp_tr.pdf. But Intel must have created_mm_dp_psfor a reason. I read a note on this by them a while back. If you can't change your code and have to calculate one dot product at a time then_mm_dp_psprobably has some benefit but from what I recall it was a small improvement and nothing close to the factor of 4 you can get from doing four at once. You could write code to test this. -

user1095108 over 9 years@Zboson You are right, of course, if you do need 4 at a time or can reorganize your code. It may be, that you don't/can't.

-

Z boson over 9 years@user1095108, this may be of interest to you stackoverflow.com/questions/14967969/…. It was my first post on SO.

Z boson over 9 years@user1095108, this may be of interest to you stackoverflow.com/questions/14967969/…. It was my first post on SO. -

peterh over 8 yearsElaborate... does it really answer the question?

peterh over 8 yearsElaborate... does it really answer the question? -

Jason Nelson over 8 yearsanyone have an intel ASM translation of above?

-

Peter Cordes over 7 yearsI don't think doing a gather of elements from four different input matrices and then a scatter back to four different result matrices would be faster than using load+broadcast like the OP's own answer does.

Peter Cordes over 7 yearsI don't think doing a gather of elements from four different input matrices and then a scatter back to four different result matrices would be faster than using load+broadcast like the OP's own answer does. -

Nicholas Frechette about 7 yearsI wrote an extended blog post on the subject located here. I also translated the assembly version into something usable by Visual Studio although some minor changes were made to my version to keep it binary exact. My non-assembly version is a bit faster though!

-

Peter Cordes over 6 years

Peter Cordes over 6 years.xmm[]and.ymm[]might be better union member names. Other than that, looks good. Quite a lot of shuffling, though. Might be worth storing to memory so you can broadcast-load. (Unless the compiler "optimizes" it back into shuffles...) -

Peter Cordes over 6 yearsOn Haswell and later,

Peter Cordes over 6 yearsOn Haswell and later,vbroadcastss ymm, [mem]is a single uop in the load port. On SnB/IvB, it's a load + port5 shuffle. But that still beats 2 port5 shuffles for vshufps + vperm2f128. (Or vinsertf128.) -

Peter Cordes over 6 yearsOh NVM, you're doing two separate in-lane broadcasts and the

Peter Cordes over 6 yearsOh NVM, you're doing two separate in-lane broadcasts and thepermute2f128is on the other operand. Yeah, that looks good. With-march=haswell, 4 of the mul/add pairs fold into FMAs: godbolt.org/g/9uEbhR. Hmm, those_mm256_permute2f128_ps(same,same, 0)are broadcasts, but compilers aren't turning them intovinsertf128. This is where you could maybe save shuffle-port uops with broadcast-128 loads for Haswell.