Manually converting unicode codepoints into UTF-8 and UTF-16

Solution 1

Wow. On the one hand I'm thrilled to know that university courses are teaching to the reality that character encodings are hard work, but actually knowing the UTF-8 encoding rules sounds like expecting a lot. (Will it help students pass the Turkey test?)

The clearest description I've seen so far for the rules to encode UCS codepoints to UTF-8 are from the utf-8(7) manpage on many Linux systems:

Encoding

The following byte sequences are used to represent a

character. The sequence to be used depends on the UCS code

number of the character:

0x00000000 - 0x0000007F:

0xxxxxxx

0x00000080 - 0x000007FF:

110xxxxx 10xxxxxx

0x00000800 - 0x0000FFFF:

1110xxxx 10xxxxxx 10xxxxxx

0x00010000 - 0x001FFFFF:

11110xxx 10xxxxxx 10xxxxxx 10xxxxxx

[... removed obsolete five and six byte forms ...]

The xxx bit positions are filled with the bits of the

character code number in binary representation. Only the

shortest possible multibyte sequence which can represent the

code number of the character can be used.

The UCS code values 0xd800–0xdfff (UTF-16 surrogates) as well

as 0xfffe and 0xffff (UCS noncharacters) should not appear in

conforming UTF-8 streams.

It might be easier to remember a 'compressed' version of the chart:

Initial bytes starts of mangled codepoints start with a 1, and add padding 1+0. Subsequent bytes start 10.

0x80 5 bits, one byte

0x800 4 bits, two bytes

0x10000 3 bits, three bytes

You can derive the ranges by taking note of how much space you can fill with the bits allowed in the new representation:

2**(5+1*6) == 2048 == 0x800

2**(4+2*6) == 65536 == 0x10000

2**(3+3*6) == 2097152 == 0x200000

I know I could remember the rules to derive the chart easier than the chart itself. Here's hoping you're good at remembering rules too. :)

Update

Once you have built the chart above, you can convert input Unicode codepoints to UTF-8 by finding their range, converting from hexadecimal to binary, inserting the bits according to the rules above, then converting back to hex:

U+4E3E

This fits in the 0x00000800 - 0x0000FFFF range (0x4E3E < 0xFFFF), so the representation will be of the form:

1110xxxx 10xxxxxx 10xxxxxx

0x4E3E is 100111000111110b. Drop the bits into the x above (start from the right, we'll fill in missing bits at the start with 0):

1110x100 10111000 10111110

There is an x spot left over at the start, fill it in with 0:

11100100 10111000 10111110

Convert from bits to hex:

0xE4 0xB8 0xBE

Solution 2

The descriptions on Wikipedia for UTF-8 and UTF-16 are good:

Procedures for your example string:

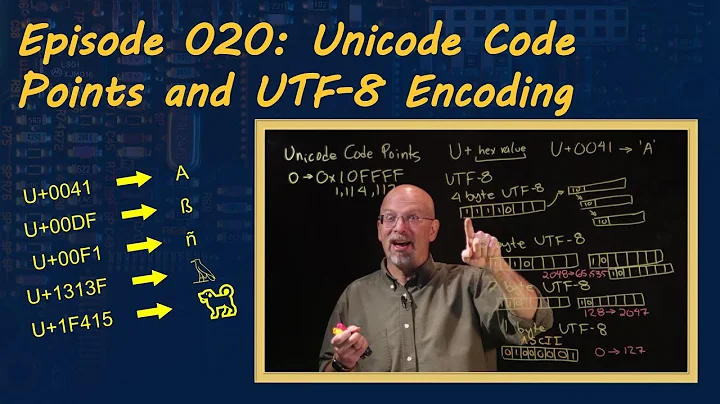

UTF-8

UTF-8 uses up to 4 bytes to represent Unicode codepoints. For the 1-byte case, use the following pattern:

1-byte UTF-8 = 0xxxxxxxbin = 7 bits = 0-7Fhex

The initial byte of 2-, 3- and 4-byte UTF-8 start with 2, 3 or 4 one bits, followed by a zero bit. Follow on bytes always start with the two-bit pattern 10, leaving 6 bits for data:

2-byte UTF-8 = 110xxxxx 10xxxxxxbin = 5+6(11) bits = 80-7FFhex

3-byte UTF-8 = 1110xxxx 10xxxxxx 10xxxxxxbin = 4+6+6(16) bits = 800-FFFFhex

4-byte UTF-8 = 11110xxx 10xxxxxx 10xxxxxx 10xxxxxxbin = 3+6+6+6(21) bits = 10000-10FFFFhex††Unicode codepoints are undefined beyond 10FFFFhex.

Your codepoints are U+006D, U+0416 and U+4E3D requiring 1-, 2- and 3-byte UTF-8 sequences, respectively. Convert to binary and assign the bits:

U+006D = 1101101bin = 01101101bin = 6Dhex

U+0416 = 10000 010110bin = 11010000 10010110bin = D0 96hex

U+4E3D = 0100 111000 111101bin = 11100100 10111000 10111101bin = E4 B8 BDhex

Final byte sequence:

6D D0 96 E4 B8 BD

or if nul-terminated strings are desired:

6D D0 96 E4 B8 BD 00

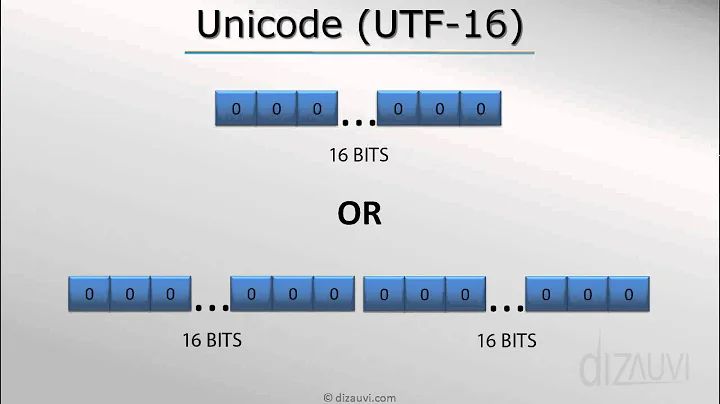

UTF-16

UTF-16 uses 2 or 4 bytes to represent Unicode codepoints. Algorithm:

U+0000 to U+D7FF uses 2-byte 0000hex to D7FFhex

U+D800 to U+DFFF are invalid codepoints reserved for 4-byte UTF-16

U+E000 to U+FFFF uses 2-byte E000hex to FFFFhexU+10000 to U+10FFFF uses 4-byte UTF-16 encoded as follows:

- Subtract 10000hex from the codepoint.

- Express result as 20-bit binary.

- Use the pattern 110110xxxxxxxxxx 110111xxxxxxxxxxbin to encode the upper- and lower- 10 bits into two 16-bit words.

Using your codepoints:

U+006D = 006Dhex

U+0416 = 0416hex

U+4E3D = 4E3Dhex

Now, we have one more issue. Some machines store the two bytes of a 16-bit word least significant byte first (so-called little-endian machines) and some store most significant byte first (big-endian machines). UTF-16 uses the codepoint U+FEFF (called the byte order mark or BOM) to help a machine determine if a byte stream contains big- or little-endian UTF-16:

big-endian = FE FF 00 6D 04 16 4E 3D

little-endian = FF FE 6D 00 16 04 3D 4E

With nul-termination, U+0000 = 0000hex:

big-endian = FE FF 00 6D 04 16 4E 3D 00 00

little-endian = FF FE 6D 00 16 04 3D 4E 00 00

Since your instructor didn't give a codepoint that required 4-byte UTF-16, here's one example:

U+1F031 = 1F031hex - 10000hex = F031hex = 0000111100 0000110001bin =

1101100000111100 1101110000110001bin = D83C DC31hex

Solution 3

The following program will do the necessary work. It may not be "manual" enough for your purposes, but at a minimum you can check your work.

#!/usr/bin/perl

use 5.012;

use strict;

use utf8;

use autodie;

use warnings;

use warnings qw< FATAL utf8 >;

no warnings qw< uninitialized >;

use open qw< :std :utf8 >;

use charnames qw< :full >;

use feature qw< unicode_strings >;

use Encode qw< encode decode >;

use Unicode::Normalize qw< NFD NFC >;

my ($x) = "mЖ丽";

open(U8,">:encoding(utf8)","/tmp/utf8-out");

print U8 $x;

close(U8);

open(U16,">:encoding(utf16)","/tmp/utf16-out");

print U16 $x;

close(U16);

system("od -t x1 /tmp/utf8-out");

my $u8 = encode("utf-8",$x);

print "utf-8: 0x".unpack("H*",$u8)."\n";

system("od -t x1 /tmp/utf16-out");

my $u16 = encode("utf-16",$x);

print "utf-16: 0x".unpack("H*",$u16)."\n";

Related videos on Youtube

RSM

Updated on December 12, 2020Comments

-

RSM over 3 years

I have a university programming exam coming up, and one section is on unicode.

I have checked all over for answers to this, and my lecturer is useless so that’s no help, so this is a last resort for you guys to possibly help.

The question will be something like:

The string 'mЖ丽' has these unicode codepoints

U+006D,U+0416andU+4E3D, with answers written in hexadecimal, manually encode the string into UTF-8 and UTF-16.Any help at all will be greatly appreciated as I am trying to get my head round this.

-

Admin almost 13 yearsDid you try to write a program that converts a UTF-8 string to a UTF-16 string and then manually compare the output (UTF-16) and input (UTF-8)?

Admin almost 13 yearsDid you try to write a program that converts a UTF-8 string to a UTF-16 string and then manually compare the output (UTF-16) and input (UTF-8)? -

RSM almost 13 yearsI have played around with several coded converters, but im still at a miss as to how i actually do it manually with a pen and paper, as thats all we will have in the exam.

-

-

RSM almost 13 yearsBrilliant thanks for your help. The content on the course is good, cannot say the same for the lecturers teaching it tho. So for example. I have 'm' which is unicode codepoint 'u+006D' which i think lies in: 0x00000000 - 0x0000007F: 0xxxxxxx. Im still a little confused as where to go from here

-

sarnold almost 13 years@Ryan, the ascii range (

0--127aka0x0--0x7F) is easy -- they self-represent. So youru+006Dis just01101101b. :) (Which I got with my calculator, because I'm lazy. Hex-to-bin by hand isn't horrible though, you can write a translation table by hand at the start of the test and re-use it each time you need it. :) -

RSM almost 13 years@sarnold is it always 01101101 for everthing that lies between 0x0 - 0x7F? or was there more of a process to go from U+006D to 01101101b. Where did you get the b from?

-

sarnold almost 13 years@Ryan, sorry :) The

01101101bwas specific to your inputu+006D. That's just0x6Din binary. (I included thebat the end to signifybinary-- numbers starting with a leading0are sometimes meant to be octal.) Everything between0and0x7Frequires only seven bits to represent, so the leading0in the rules is just re-stating that the ascii range remains unchanged in UTF-8, one of its prime selling points. -

RSM almost 13 yearsAlso the answer needs to be hexadecimal

-

RSM almost 13 yearsfor example, the unicode codepoint 'U+4E3E' has the utf-8 hexidecimal value of 'e4 b8 be'. How on earth does one get from U+4E3E to e4 b8 be? :|

-

sarnold almost 13 years@Ryan, I added a specific example

-

RSM almost 13 yearsWow, what a brilliant answer, thats amazing, thank you so much, you taught me over the internet what my lecturer couldn't do in a year. Any hints for 16 and 32 utfs? Brilliant, thankyou so so much. will give you top answer definatly

-

sarnold almost 13 years@Ryan, Wikipedia's article on UTF-16 is pretty good about the conversion, it even looks easier than UTF-8 but with the caveat that there is both big-endian and little-endian variants of UTF-16. UTF-32 looks like a simple subset of UCS-4, where the not-yet-defined codepoints are ignored in UTF-32. (Sounds like committee-speak more than useful knowledge, to me.)

-

RSM almost 13 yearsBrilliant, thanks again. brilliant. Love the Stack Overflow community

-

sarnold almost 13 yearsClever; I hadn't heard Perl introduced

>:encoding()-style support yet. Thanks! -

mgouin over 7 yearsThanks for the Turkey test reference! I was not aware of that!

mgouin over 7 yearsThanks for the Turkey test reference! I was not aware of that! -

masaers almost 7 yearsThanks for spelling out the symmetry 5 bit + 1 byte, 4 bits + 2 byte, ..., 1 bit + 5 bytes. Didn't spot that one myself, but now I will never forget UTF-8 encoding!

-

Patryk Golebiowski almost 7 yearsThis answer is wrong, UTF-8 cannot use more than 4 bytes anymore (it was different some time ago).

Patryk Golebiowski almost 7 yearsThis answer is wrong, UTF-8 cannot use more than 4 bytes anymore (it was different some time ago). -

sarnold almost 7 years@PatrykGołębiowski. thanks, I can't believe I've been wrong for so long.

-

MarcusJ over 6 yearsUnicode is limited to 0x1FFFFF, which is 21 bits. there are no 5 or 6 byte codepoint sequences in Unicode...

-

sarnold over 6 years@MarcusJ, thanks for the reminder, I never got around to removing the obsolete data after Patryk notified me.

-

artm over 2 yearsgreat answer, very detailed