mdadm software RAID isn't assembled at boot during initramfs stage

You could mirror the drives with btrfs itself, instead of creating that filesystem on top of the software RAID:

mkfs.btrfs -d raid1 /dev/sdc /dev/sdd
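Here is a minimal sketch of that route. It assumes the same /dev/sdc and /dev/sdd, which mkfs.btrfs wipes, and uses /mnt as a placeholder mount point. It also passes `-m raid1` so that metadata is explicitly mirrored too. It then runs `btrfs device scan`, which registers both members so that mounting either one mounts the whole filesystem. `run` and `btrfs_mirror` are hypothetical helpers, and commands are only printed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: let btrfs mirror the two disks itself instead of sitting on md.
# Device names are from this thread; mkfs.btrfs destroys their contents.
# Commands are only printed unless DRY_RUN=0 is set.

run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

# btrfs_mirror DISK1 DISK2 [MOUNTPOINT]  (hypothetical helper)
btrfs_mirror() {
    # -m raid1 mirrors metadata, -d raid1 mirrors data
    run mkfs.btrfs -m raid1 -d raid1 "$1" "$2"
    # register all btrfs member devices with the kernel
    run btrfs device scan
    # mounting either member mounts the whole two-device filesystem
    run mount "$1" "${3:-/mnt}"
}
```

To review the commands, source the file and call `btrfs_mirror /dev/sdc /dev/sdd`. Add the `DRY_RUN=0` prefix only once the commands look right.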

Otherwise try:

umount /dev/md0 (if it is mounted)

mdadm --stop /dev/md0

mdadm --assemble --scan

mv /etc/mdadm/mdadm.conf /etc/mdadm/mdadm.conf.bak

/usr/share/mdadm/mkconf > /etc/mdadm/mdadm.conf
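The steps above can be sketched as one function. It adds one step: a final `update-initramfs -u`. On Debian, mdadm.conf is copied into the initramfs (the asker's verbose update-initramfs output later in the thread shows this), so the boot-time copy stays stale until the initramfs is rebuilt. `rebuild_mdadm_conf` and `run` are hypothetical helpers, and nothing is executed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: the stop / assemble / regenerate-config steps, plus an
# initramfs rebuild so the boot-time copy of mdadm.conf is refreshed.
# Commands are only printed unless DRY_RUN=0.

run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

rebuild_mdadm_conf() {
    md=${1:-/dev/md0}
    conf=/etc/mdadm/mdadm.conf
    # unmount only if the array is currently mounted
    if grep -q "^$md " /proc/mounts 2>/dev/null; then
        run umount "$md"
    fi
    run mdadm --stop "$md"
    run mdadm --assemble --scan
    # keep the old file as a backup
    run mv "$conf" "$conf.bak"
    # mkconf prints a fresh config on stdout; sh -c keeps the redirect
    # inside the command so that a dry run writes nothing
    run sh -c "/usr/share/mdadm/mkconf > $conf"
    # rebuild the initramfs so it carries the new mdadm.conf
    run update-initramfs -u
}
```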

If cat /proc/mdstat now shows the correct output, create your filesystem and mount it. Then use blkid to get the UUID of the filesystem on /dev/md0 and edit /etc/fstab accordingly.
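For the fstab step, the UUID you want belongs to the filesystem (the `TYPE="btrfs"` entry for /dev/md0 in the question's blkid output). It is not the `linux_raid_member` UUID shown on sdc1/sdd1. The small sketch below only formats the entry. `fstab_entry` is a hypothetical helper, /srv is a placeholder mount point, and the `defaults 0 0` fields are an assumption; a pass value of 0 is the usual choice for btrfs.

```shell
#!/bin/sh
# Sketch: format an /etc/fstab line that mounts a filesystem by UUID.
# fstab_entry is a hypothetical helper; mount point and options are placeholders.

fstab_entry() {
    uuid=$1 mnt=$2 fstype=$3
    # refuse to print a line that would mount "UUID=" (an empty UUID)
    if [ -z "$uuid" ]; then
        echo "fstab_entry: empty UUID" >&2
        return 1
    fi
    # fields: device, mount point, type, options, dump, fsck pass
    printf 'UUID=%s\t%s\t%s\tdefaults\t0\t0\n' "$uuid" "$mnt" "$fstype"
}
```

Example: `fstab_entry "$(blkid -s UUID -o value /dev/md0)" /srv btrfs`. Check the printed line before appending it to /etc/fstab.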

If you still have issues, you could try this before following the instructions above:

mdadm --zero-superblock /dev/sdc1 /dev/sdd1

mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sdc1 /dev/sdd1
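The same two steps can be sketched as a function, with one added assumption: the array is stopped first, because mdadm refuses to zero the superblock of a member of a running array. After `--create`, the array has a new UUID, so the mdadm.conf regeneration above (and an initramfs rebuild) has to follow. `recreate_mirror` and `run` are hypothetical helpers, and nothing is executed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: wipe old md metadata from the member partitions and recreate
# the mirror. DESTRUCTIVE when DRY_RUN=0; otherwise commands are printed.

run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

# recreate_mirror MD MEMBER...  (hypothetical helper)
recreate_mirror() {
    md=$1
    shift
    # members must be released before their superblocks can be zeroed
    run mdadm --stop "$md"
    # remove old md metadata from every member partition
    run mdadm --zero-superblock "$@"
    # build a fresh RAID1 from all remaining arguments
    run mdadm --create "$md" --level=1 --raid-devices="$#" "$@"
}
```

Usage: `recreate_mirror /dev/md0 /dev/sdc1 /dev/sdd1`.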

I tested this on a system running Debian Jessie with the 3.16.0-4-amd64 kernel, after writing GPT partition tables to the two block devices that I mirrored together. The array is properly assembled at boot and mounted as specified.
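The answer doesn't say which tool wrote the GPT tables. One option, assuming `sgdisk` from the gdisk package, is sketched below. `FD00` is sgdisk's code for the GPT "Linux RAID" partition type, which is the GPT counterpart of MBR type 0xFD. That is why fdisk shows "Linux RAID" rather than 0xFD on a GPT disk. `--zap-all` destroys the existing partition table. `partition_for_raid` and `run` are hypothetical helpers, and commands are printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: one GPT partition per disk, typed Linux RAID, for use as md members.
# DESTRUCTIVE when DRY_RUN=0; otherwise commands are only printed.

run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

partition_for_raid() {
    for disk in "$@"; do
        # remove existing GPT and MBR structures
        run sgdisk --zap-all "$disk"
        # partition 1 from the default start to the end of the disk,
        # typed as Linux RAID (FD00)
        run sgdisk -n 1:0:0 -t 1:FD00 "$disk"
    done
}
```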

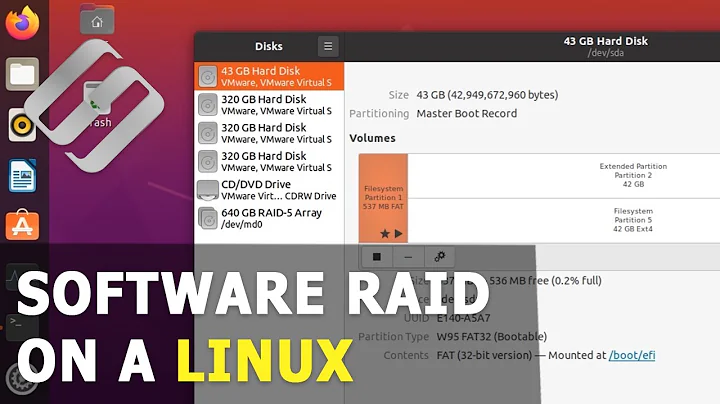

Sun Wukong

Updated on September 18, 2022

First, I'd like to mention that I've already found and read this.

I'm running Debian Jessie with the standard 3.16 kernel. I've manually defined a RAID1 array, but it isn't assembled automatically at boot time. As a result, systemd falls back to a degraded shell after trying to mount the FS described in /etc/fstab. If that fstab line is commented out, the boot process completes BUT the RAID array isn't available. Assembling it manually doesn't trigger any error, and mounting the FS is then straightforward.

When assembled manually, the array looks like this:

    root@tinas:~# cat /proc/mdstat
    Personalities : [raid1]
    md0 : active (auto-read-only) raid1 sdc1[0] sdd1[1]
          1953382464 blocks super 1.2 [2/2] [UU]
          bitmap: 0/15 pages [0KB], 65536KB chunk

    unused devices: <none>

Here's an extract of the blkid output:

    /dev/sdd1: UUID="c8c2cb23-fbd2-4aae-3e78-d9262f9e425b" UUID_SUB="8647a005-6569-c76f-93ee-6d4fedd700c3" LABEL="tinas:0" TYPE="linux_raid_member" PARTUUID="81b1bbfe-fad7-4fd2-8b73-554f13fbb26b"
    /dev/sdc1: UUID="c8c2cb23-fbd2-4aae-3e78-d9262f9e425b" UUID_SUB="ee9c2905-0ce7-2910-2fed-316ba20ec3a9" LABEL="tinas:0" TYPE="linux_raid_member" PARTUUID="11d681e5-9021-42c0-a858-f645c8c52708"
    /dev/md0: UUID="b8a72591-040e-4ca1-a663-731a5dcbebc2" UUID_SUB="a2d4edfb-876a-49c5-ae76-da5eac5bb1bd" TYPE="btrfs"

Info from fdisk:

    root@tinas:~# fdisk -l /dev/sdc
    Disk /dev/sdc: 1.8 TiB, 2000398934016 bytes, 3907029168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: C475BEB1-5452-4E87-9638-2E5AA29A3A73

    Device     Start        End    Sectors  Size Type
    /dev/sdc1   2048 3907029134 3907027087  1.8T Linux RAID

Here, I'm not sure whether the type value 'Linux RAID' is correct. I've read that 0xFD is expected, but that value doesn't seem to be available in fdisk with a GPT partition table.

Thanks for your help

Edit:

In journalctl -xb I found one relevant trace:

    Apr 14 15:14:46 tinas mdadm-raid[211]: Generating udev events for MD arrays...done.
    Apr 14 15:35:03 tinas kernel: [ 1242.505742] md: md0 stopped.
    Apr 14 15:35:03 tinas kernel: [ 1242.513200] md: bind<sdd1>
    Apr 14 15:35:03 tinas kernel: [ 1242.513545] md: bind<sdc1>
    Apr 14 15:35:04 tinas kernel: [ 1242.572177] md: raid1 personality registered for level 1
    Apr 14 15:35:04 tinas kernel: [ 1242.573369] md/raid1:md0: active with 2 out of 2 mirrors
    Apr 14 15:35:04 tinas kernel: [ 1242.573708] created bitmap (15 pages) for device md0
    Apr 14 15:35:04 tinas kernel: [ 1242.574869] md0: bitmap initialized from disk: read 1 pages, set 0 of 29807 bits
    Apr 14 15:35:04 tinas kernel: [ 1242.603079] md0: detected capacity change from 0 to 2000263643136
    Apr 14 15:35:04 tinas kernel: [ 1242.607065] md0: unknown partition table
    Apr 14 15:35:04 tinas kernel: [ 1242.665646] BTRFS: device fsid b8a72591-040e-4ca1-a663-731a5dcbebc2 devid 1 transid 8 /dev/md0

/proc/mdstat

I just realized that right after boot the raid1 module isn't loaded!

    root@tinas:~# cat /proc/mdstat
    Personalities :
    unused devices: <none>

So I added the raid1 module to /etc/modules and ran update-initramfs -u. Here's the corresponding log:

    Apr 15 12:23:21 tinas mdadm-raid[204]: Generating udev events for MD arrays...done.
    Apr 15 12:23:22 tinas systemd-modules-load[186]: Inserted module 'raid1'
    Apr 15 12:23:22 tinas kernel: md: raid1 personality registered for level 1

But the array is still not assembled:

    root@tinas:~# cat /proc/mdstat
    Personalities : [raid1]
    unused devices: <none>

Could that be because the raid1 module seems to be loaded after the udev events are generated?
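A tentative observation on this attempt: /etc/modules is read by systemd-modules-load on the real root filesystem, which fits the log above showing raid1 being inserted only after the mdadm-raid trigger. Modules that must be available inside the initramfs are listed in /etc/initramfs-tools/modules instead. Later in the question raid1 turns out to be loaded in the busybox shell anyway, so this may not be the cause. Below is a sketch for adding a module to that list only once. `add_initramfs_module` is a hypothetical helper; its optional file argument exists only so it can be tried on a scratch copy.

```shell
#!/bin/sh
# Sketch: make sure a module is listed in initramfs-tools' module list.
# add_initramfs_module is a hypothetical helper.

add_initramfs_module() {
    mod=$1
    file=${2:-/etc/initramfs-tools/modules}
    # -x: whole-line match, so an existing "raid10" does not count as "raid1"
    if ! grep -qx "$mod" "$file" 2>/dev/null; then
        echo "$mod" >> "$file"
    fi
}
```

After `add_initramfs_module raid1`, run `update-initramfs -u`. Then `lsinitramfs /boot/initrd.img-$(uname -r) | grep raid1` should show the module inside the image.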

Interesting link but too general

I tried dpkg-reconfigure mdadm: nothing new...

If anyone knows how to get some traces from udev, that would be great. I uncommented the udev_log = info line in /etc/udev/udev.conf but can't see anything new...

Search for loaded RAID modules

    root@tinas:~# grep -E 'md_mod|raid1' /proc/modules
    raid1 34596 0 - Live 0xffffffffa01fa000
    md_mod 107672 1 raid1, Live 0xffffffffa0097000

raid1 is loaded because I added it to /etc/modules; before that, it wasn't loaded.

uname -r

    root@tinas:~# uname -r
    3.16.0-4-amd64

/etc/mdadm/mdadm.conf

    root@tinas:~# cat /etc/mdadm/mdadm.conf
    # mdadm.conf
    #
    # Please refer to mdadm.conf(5) for information about this file.
    #

    # by default (built-in), scan all partitions (/proc/partitions) and all
    # containers for MD superblocks. alternatively, specify devices to scan, using
    # wildcards if desired.
    #DEVICE partitions containers

    # auto-create devices with Debian standard permissions
    CREATE owner=root group=disk mode=0660 auto=yes

    # automatically tag new arrays as belonging to the local system
    HOMEHOST <system>

    # instruct the monitoring daemon where to send mail alerts
    MAILADDR root

    # definitions of existing MD arrays
    ARRAY /dev/md/0 metadata=1.2 UUID=a930b085:1e1a615b:93e209e6:08314607 name=tinas:0

    # This configuration was auto-generated on Fri, 15 Apr 2016 11:10:41 +0200 by mkconf

I just noticed something weird: the ARRAY line near the end of /etc/mdadm/mdadm.conf is generated by the command mdadm -Es and shows a device called /dev/md/0. When I assemble the array manually, however, I get /dev/md0, which is the name I used when creating the array with mdadm --create... I also got this information from a verbose update-initramfs:

    Adding module /lib/modules/3.16.0-4-amd64/kernel/drivers/md/raid10.ko
    I: mdadm: using configuration file: /etc/mdadm/mdadm.conf
    I: mdadm: will start all available MD arrays from the initial ramdisk.
    I: mdadm: use `dpkg-reconfigure --priority=low mdadm` to change this.

So I tried that, but it fails the same way: no array after reboot.

In /etc/mdadm/mdadm.conf, I changed the ARRAY device name from /dev/md/0 to /dev/md0.
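Besides the device name, the UUID on the ARRAY line is worth comparing with what the members actually carry. mdadm prints the array UUID as four colon-separated groups of 8 hex digits, while blkid prints the same 128-bit value as a dashed UUID. So the `linux_raid_member` UUID of sdc1/sdd1 quoted above, c8c2cb23-fbd2-4aae-3e78-d9262f9e425b, would appear as c8c2cb23:fbd24aae:3e78d926:2f9e425b in mdadm.conf. The ARRAY line quoted above, however, has UUID=a930b085:1e1a615b:93e209e6:08314607. The question mentions redoing the setup with different partition tables, so these outputs may come from different moments. Treat this as something to check on the live system, not as a diagnosis. The sketch below assumes 1.x metadata, where both tools show the same bytes in the same order. Both function names are hypothetical.

```shell
#!/bin/sh
# Sketch: compare a member's blkid UUID with the ARRAY lines in mdadm.conf.
# Assumes 1.x metadata (same byte order in blkid and mdadm output).

# c8c2cb23-fbd2-4aae-3e78-d9262f9e425b -> c8c2cb23:fbd24aae:3e78d926:2f9e425b
blkid_to_mdadm_uuid() {
    hex=$(printf '%s' "$1" | sed 's/-//g')
    # expect exactly 32 lowercase hex digits
    case $hex in *[!0-9a-f]*) return 1 ;; esac
    if [ "${#hex}" -ne 32 ]; then
        return 1
    fi
    echo "$hex" | sed 's/^\(.\{8\}\)\(.\{8\}\)\(.\{8\}\)\(.\{8\}\)$/\1:\2:\3:\4/'
}

# Succeeds if some ARRAY line in config file $2 carries member UUID $1.
conf_has_uuid() {
    want=$(blkid_to_mdadm_uuid "$1") || return 2
    grep -q "^ARRAY .*UUID=$want" "$2"
}
```

On the machine itself: `conf_has_uuid "$(blkid -s UUID -o value /dev/sdc1)" /etc/mdadm/mdadm.conf || echo "no ARRAY line matches sdc1"`. The output of `mdadm --examine --scan` shows the ARRAY line mdadm would generate for comparison.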

I also noticed that in the initramfs busybox shell, after running mdadm --assemble --scan, the array is created as /dev/md0 and is marked as active (auto-read-only).

Sunday 17th

I've just started to understand the initramfs side of things. I knew the kernel used some kind of RAM disk but didn't know much more. My understanding now is that the initramfs should contain everything required to assemble the RAID array at boot in userland. Hence the importance of updating the static file /boot/initrd.img-version to reflect every change that matters.

So I suspected that my /boot/initrd.img-3.16.0-4-amd64 file was broken and tried to create a new one with this command:

    # update-initramfs -t -c -v -k 3.16.0-4-amd64

Please note that I have only one kernel installed and thus only one corresponding initramfs. But after a reboot, I again ended up in the initramfs shell because the /dev/md0 FS listed in /etc/fstab couldn't be mounted.

Wednesday 20th

I had already checked the state of the server while in busybox:

- the raid1 module is loaded
- nothing interesting in dmesg
- /run/mdadm/map exists but is empty
- journalctl -xb shows that:
    - systemd reports a timeout trying to mount the FS on the array, which has not been assembled
    - systemd then reports a dependency failure when it tries to fsck that FS

Here is my manual intervention at that point:

    mdadm --assemble --scan

/proc/mdstat claims that the device /dev/md0 is active and auto-read-only, so I run

    mdadm --readwrite /dev/md0

before exiting busybox.
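That busybox intervention can be sketched as a script. "active (auto-read-only)" is md's normal state for an array that hasn't been written to since it was assembled. It switches to read-write on the first write, and `mdadm --readwrite` simply makes that switch immediately. `md_state`, `assemble_and_make_writable` and `run` are hypothetical helpers. The `MDSTAT` variable exists only so the parsing can be tried on saved output, and mdadm commands are printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: assemble arrays, then leave auto-read-only if md0 is in it.
# mdadm commands are only printed unless DRY_RUN=0.

run() {
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    else
        echo "+ $*"
    fi
}

# Print the state of array $1 (e.g. md0) from mdstat-format text on stdin,
# such as "active", "active (auto-read-only)" or "inactive"; fail if absent.
md_state() {
    awk -v md="$1" '
        $1 == md && $2 == ":" {
            state = $3
            if ($4 ~ /^\(/) state = state " " $4
            print state
            found = 1
        }
        END { exit !found }'
}

assemble_and_make_writable() {
    md=${1:-md0}
    run mdadm --assemble --scan
    state=$(md_state "$md" < "${MDSTAT:-/proc/mdstat}") || return 1
    if [ "$state" = "active (auto-read-only)" ]; then
        run mdadm --readwrite "/dev/$md"
    fi
}
```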

Sun Wukong (about 8 years ago): Forgot to mention that fdisk shows exactly the same data (except the GUID) for /dev/sdd.

user9517 (about 8 years ago): Are there any relevant messages in your logs?

Sun Wukong (about 8 years ago): Here's what I can find about mdadm-raid in journalctl -xb: tinas mdadm-raid[211]: Generating udev events for MD arrays...done.

Sun Wukong (about 8 years ago): Thanks for your help. It matches what I had done before. I tried again, this time choosing MBR as the partition table type and 0xDA (non-fs data) as the partition type, following raid.wiki.kernel.org/index.php/RAID_setup, but got the same results. However, I just realized that the raid1 module wasn't loaded at boot time, and I suspect that's the problem...

neofug (about 8 years ago): The modules md_mod and raid1 are loaded into the 3.16 kernel. What is the output of grep -E 'md_mod|raid1' /proc/modules and uname -a? Also, can you post the contents of your /etc/mdadm/mdadm.conf?

Sun Wukong (about 8 years ago): Thanks. I've added the data you asked for.

Sun Wukong (about 8 years ago): By the way, thanks for the tip about btrfs being able to manage RAID1 by itself. I didn't know that. It's very tempting now, because I feel stuck on this problem ;-) but I'd prefer to investigate further and find the cause and the solution.

neofug (about 8 years ago): This page may contain some helpful information for debugging the problem from the initramfs shell: wiki.debian.org/InitramfsDebug. You can check what is included in a given initial RAM disk with lsinitramfs /boot/initrd.img-3.16.0-4-amd64
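Following that suggestion, here is a sketch that scans an lsinitramfs listing for the pieces boot-time assembly needs: the copied mdadm.conf, the mdadm binary and raid1.ko. The paths are what I'd expect from Debian Jessie's initramfs-tools and mdadm hook, so treat them as assumptions. `initrd_missing` and `listing_has` are hypothetical helpers; the listing is read on stdin.

```shell
#!/bin/sh
# Sketch: report which md-related files are absent from an initramfs listing.
# Expected paths are assumptions based on Debian Jessie's initramfs layout.

# Succeeds if the listing in $1 has a line matching extended regex $2.
listing_has() {
    printf '%s\n' "$1" | grep -Eq "$2"
}

initrd_missing() {
    listing=$(cat)
    status=0
    listing_has "$listing" 'etc/mdadm/mdadm\.conf$' || { echo "missing: mdadm.conf"; status=1; }
    listing_has "$listing" '(^|/)sbin/mdadm$' || { echo "missing: mdadm binary"; status=1; }
    listing_has "$listing" '/raid1\.ko' || { echo "missing: raid1.ko"; status=1; }
    return "$status"
}
```

Usage: `lsinitramfs /boot/initrd.img-3.16.0-4-amd64 | initrd_missing`. No output and exit status 0 mean all three were found.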

Rwky (over 5 years ago): Zeroing the superblock and re-running the create command worked for me. When I ran the create command, it showed one of the partitions as having an ext4 superblock instead of a RAID superblock.