Pacemaker DRBD resource not getting promoted to master on any node

Make sure that your DRBD device is healthy. If you run # cat /proc/drbd and look at its state, do you see cs:Connected, ro:Secondary/Secondary and, most importantly, ds:UpToDate/UpToDate?

Without UpToDate data, the resource agent for DRBD will NOT promote a device. If you've just created the device's metadata and haven't forced a single node into the Primary role yet, your disk state will be ds:Inconsistent/Inconsistent. You'll need to run the following on one node to tell DRBD which node should become the SyncSource for the cluster:

    # drbdadm primary r0 --force

That's the only time you should have to force DRBD into Primary under normal circumstances; so forget the --force flag after that ;)
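The check described above can be sketched as a small shell helper. This is only an illustration, assuming the DRBD 8.x /proc/drbd layout in which the disk state appears as ds:<local>/<peer>; the function name drbd_disk_state is hypothetical and not part of DRBD or Pacemaker, and it only looks at the first ds: field it finds, so a node with several DRBD resources would need a per-resource check.

```shell
#!/bin/sh
# Sketch only: classify the disk state (ds:) field of /proc/drbd-style text.
# drbd_disk_state is a hypothetical helper name, not part of DRBD or Pacemaker.
drbd_disk_state() {
    # $1: file to read; defaults to the live /proc/drbd on a DRBD 8.x node
    src="${1:-/proc/drbd}"
    # Extract the first ds:<local>/<peer> field, e.g. "ds:UpToDate/UpToDate"
    ds=$(grep -o 'ds:[A-Za-z]*/[A-Za-z]*' "$src" | head -n 1)
    case "$ds" in
        ds:UpToDate/*)
            echo "promotable: local disk is UpToDate ($ds)" ;;
        ds:Inconsistent/Inconsistent)
            echo "not promotable: no sync source chosen yet ($ds)" ;;
        "")
            echo "unknown: no ds: field found in $src" ;;
        *)
            echo "not promotable: local disk is not UpToDate ($ds)" ;;
    esac
}
```

Calling drbd_disk_state with no argument on each node reads the live /proc/drbd; passing a file name lets you run it against saved output instead.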

Infi

Updated on September 18, 2022

Infi almost 2 years

First off, I'm no Linux specialist. I've been following tutorials and working with the help of Google, and this worked out fine until now, but currently I'm stuck with a problem.

I'm using CentOS 6.5 and DRBD version 8.4.4. I have two nodes running Pacemaker, and so far everything has been working. I set up DRBD and I can manually set a node as primary and mount the DRBD resource, so that is also working.

Now I created a Pacemaker resource to control DRBD, but it fails to promote either of the two nodes to master, which also prevents the filesystem from getting mounted.

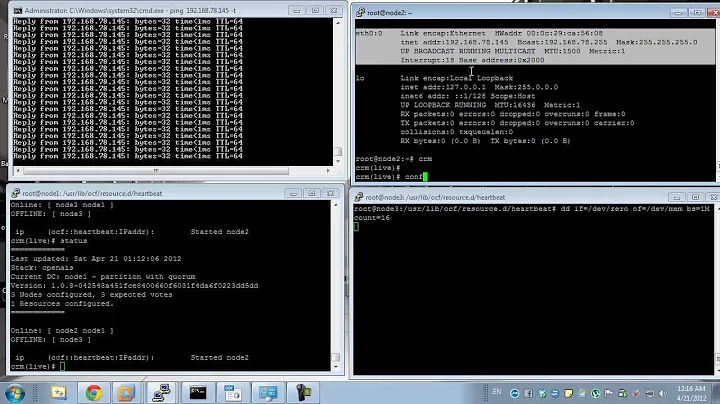

pcs status looks like this:

    Cluster name: hydroC
    Last updated: Wed Jun 25 14:19:49 2014
    Last change: Wed Jun 25 14:02:25 2014 via crm_resource on hynode1
    Stack: cman
    Current DC: hynode1 - partition with quorum
    Version: 1.1.10-14.el6_5.3-368c726
    2 Nodes configured
    4 Resources configured

    Online: [ hynode1 hynode2 ]

    Full list of resources:

     ClusterIP (ocf::heartbeat:IPaddr2): Started hynode1
     Master/Slave Set: MSdrbdDATA [drbdDATA]
         Slaves: [ hynode1 hynode2 ]
     ShareDATA (ocf::heartbeat:Filesystem): Stopped

ShareDATA remains stopped because there is no master.

I initially followed this tutorial:

http://clusterlabs.org/doc/en-US/Pacemaker/1.1-pcs/html/Clusters_from_Scratch/_configure_the_cluster_for_drbd.html

This is how the Pacemaker config looks:

    Cluster Name: hydroC
    Corosync Nodes:

    Pacemaker Nodes:
     hynode1 hynode2

    Resources:
     Resource: ClusterIP (class=ocf provider=heartbeat type=IPaddr2)
      Attributes: ip=10.0.0.100 cidr_netmask=32
      Operations: monitor interval=30s (ClusterIP-monitor-interval-30s)
     Master: MSdrbdDATA
      Meta Attrs: master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true
      Resource: drbdDATA (class=ocf provider=linbit type=drbd)
       Attributes: drbd_resource=r0
       Operations: monitor interval=60s (drbdDATA-monitor-interval-60s)
     Resource: ShareDATA (class=ocf provider=heartbeat type=Filesystem)
      Attributes: device=/dev/drbd3 directory=/share/data fstype=ext4
      Operations: monitor interval=60s (ShareDATA-monitor-interval-60s)

    Stonith Devices:
    Fencing Levels:

    Location Constraints:
    Ordering Constraints:
      promote MSdrbdDATA then start ShareDATA (Mandatory) (id:order-MSdrbdDATA-ShareDATA-mandatory)
    Colocation Constraints:
      ShareDATA with MSdrbdDATA (INFINITY) (with-rsc-role:Master) (id:colocation-ShareDATA-MSdrbdDATA-INFINITY)

    Cluster Properties:
     cluster-infrastructure: cman
     dc-version: 1.1.10-14.el6_5.3-368c726
     no-quorum-policy: ignore
     stonith-enabled: false

I've since tried different things, like setting a location constraint or using different resource settings. I took this from another tutorial:

    Master: MSdrbdDATA
     Meta Attrs: master-max=1 master-node-max=1 clone-max=2 notify=true target-role=Master is-managed=true clone-node-max=1
     Resource: drbdDATA (class=ocf provider=linbit type=drbd)
      Attributes: drbd_resource=r0 drbdconf=/etc/drbd.conf
      Meta Attrs: migration-threshold=2
      Operations: monitor interval=60s role=Slave timeout=30s (drbdDATA-monitor-interval-60s-role-Slave)
                  monitor interval=59s role=Master timeout=30s (drbdDATA-monitor-interval-59s-role-Master)
                  start interval=0 timeout=240s (drbdDATA-start-interval-0)
                  stop interval=0 timeout=240s (drbdDATA-stop-interval-0)

But the result stays the same: neither of the nodes gets promoted to master.

I'd appreciate any help guiding me to the solution, thanks in advance.

tlo almost 10 years

Just to be sure: you did start the resource ShareDATA? The DRBD resource will only get (automatically) promoted to master if there is a reason (another resource depending on it, or an explicit configuration).

Infi almost 10 years

To my knowledge, ShareDATA doesn't start because there is no MSdrbdDATA master. If I try to start ShareDATA manually via 'crm_resource --force-start', it gives me the same error as when I try to mount the DRBD resource manually: I can't mount it because there is no DRBD primary.

tlo almost 10 years

"gives me the same error" - what exactly?

Infi almost 10 years

    [root@hynode1 ~]# crm_resource --resource shareDATA --force-start
    Operation start for shareDATA (ocf:heartbeat:Filesystem) returned 1
     > stderr: INFO: Running start for /dev/drbd3 on /share/data
     > stderr: /dev/drbd3: Wrong medium type
     > stderr: mount: block device /dev/drbd3 is write-protected, mounting read-only
     > stderr: mount: Wrong medium type
     > stderr: ERROR: Couldn't mount filesystem /dev/drbd3 on /share/data

tlo almost 10 years

Hm, I would guess that MSdrbdDATA or the constraints have an error, because shareDATA should not try to do anything if MSdrbdDATA is not master. Anything in the log? I'm sure that you need to define role="Slave" and role="Master" for the monitor operation for a DRBD resource. But you did this in one of your attempts...

Infi almost 10 years

Hmm, I'm not sure what I should look for. I searched for errors in /var/log/messages, but there's nothing from today. By the way, the resource is now called "shareDATA" and not "ShareDATA" because I was redoing everything from scratch, so there is no lowercase/uppercase problem, before anyone asks. And yes, I have currently configured the monitor operations for both roles as above.

Matt Kereczman almost 7 years

What does the DRBD device look like: cat /proc/drbd