Python: Fast way of MinMax scaling an array

Solution 1

The answer by MadPhysicist can be optimized to avoid unneeded allocation of temporary data:

x -= x.min()
x /= np.ptp(x)  # function form of x.ptp(); the ndarray.ptp method was removed in NumPy 2.0

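In-place operators (+=, -=, and so on) write the result into the existing array instead of allocating a temporary one, so memory use stays flat and swapping to disk is less likely. Of course, this overwrites your initial x, so it's only OK if you don't need the unscaled values afterwards. Note that x must have a floating-point dtype: in-place division on an integer array raises a casting error.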
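To make the difference concrete, here is a minimal sketch that compares the in-place version with the expression from the question. The array contents and variable names are illustrative assumptions, and np.ptp(x) is used as the function form of max - min.

```python
import numpy as np

x = np.array([2.0, 4.0, 6.0, 10.0])  # float dtype is needed for in-place division
original = x  # second reference to the same buffer

# Out-of-place: allocates temporaries and a new result array.
scaled_copy = (x - x.min()) / (x.max() - x.min())

# In-place: reuses the existing buffer and overwrites the original values.
x -= x.min()
x /= np.ptp(x)  # np.ptp(x) is max - min

print(x is original)                # True: no new array was created
print(np.allclose(x, scaled_copy))  # True: same values as the out-of-place result
```

Whether this is measurably faster depends on array size and available memory, so it is worth timing on your own data.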

His suggestion to concatenate many arrays into a single higher-dimensional array is also a good idea if you have a large number of channels, but you should test whether this big array causes swapping to disk compared with processing the small arrays in sequence.
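A rough sketch of the concatenation idea follows. It assumes every array has the same shape and that each one should be scaled by its own min and max; the small (4, 4, 4, 9) shape and the random data are placeholders for the (24, 24, 24, 9) arrays in the question.

```python
import numpy as np

rng = np.random.default_rng(0)
# Placeholder data: three small arrays standing in for the real ones.
arrays = [rng.random((4, 4, 4, 9)) * 10 for _ in range(3)]

batch = np.stack(arrays)            # shape (3, 4, 4, 4, 9)
axes = tuple(range(1, batch.ndim))  # every axis except the sample axis

# keepdims=True keeps shape (3, 1, 1, 1, 1) so the result broadcasts back.
batch -= batch.min(axis=axes, keepdims=True)
# After subtracting the min, each sample's max equals its original range.
batch /= batch.max(axis=axes, keepdims=True)
```

This trades one large allocation for the per-array Python loop, which is why the memory check mentioned above matters.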

Solution 2

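It's risky to use ptp, i.e. max - min, as it can be 0 when every element has the same value. NumPy then divides by zero, which by default produces nan or inf values along with a RuntimeWarning instead of a usable result. It's safer to use minmax_scale as it doesn't have this issue. First, pip install scikit-learn.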

from sklearn.preprocessing import minmax_scale

minmax_scale(array)

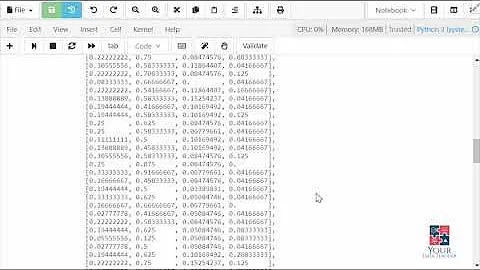

If using an sklearn Pipeline, use MinMaxScaler instead.
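If adding scikit-learn is not an option, the same guard can be sketched in plain NumPy. safe_minmax is a hypothetical helper, and mapping a constant array to all zeros is an assumption chosen to mirror how scikit-learn handles a zero range. Unlike minmax_scale, which scales each column of a 2-D input separately, this sketch uses a single min and max for the whole array, as in the question.

```python
import numpy as np

def safe_minmax(x):
    """Scale x to [0, 1]; a constant array maps to all zeros (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    lo = x.min()
    span = x.max() - lo
    if span == 0:
        # Assumption: treat a zero range as a scale of 1 to avoid dividing by zero.
        span = 1.0
    return (x - lo) / span

constant = safe_minmax(np.full((2, 3), 7.0))  # all zeros, no warning
regular = safe_minmax([1, 2, 3])              # 0.0, 0.5, 1.0
```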


Comments


Wise almost 2 years

I use the following way to scale an n-dimensional array between 0 and 1:

x_scaled = (x-np.amin(x))/(np.amax(x)-np.amin(x))

But it's very slow for large datasets. I have thousands of relatively large arrays which I need to process. Is there a faster way to do this in Python?

Edit: My arrays are in shape (24,24,24,9). For MinMax scaler in scikit, the input array has to have a certain shape which mine doesn't so I can't use it. In the documentation it says:

Parameters: X : array-like, shape [n_samples, n_features]

Sreeram TP about 6 years: What about using MinMaxScaler from sklearn?

pault about 6 years: There's sklearn.preprocessing.MinMaxScaler, and also sklearn.preprocessing.minmax_scale.

Mad Physicist about 6 years: Don't compute min twice?

MaxU - stop genocide of UA about 6 years: What is the shape of your data set?

pault about 6 years: Can you show us the output of sklearn.preprocessing.minmax_scale(x)? Is there an error message? Wrong answer?

Wise about 6 years: @MaxU The shape is (samples,24,24,24,9)

Mad Physicist about 6 years: What is the meaning of the shape of your array?

Wise about 6 years: @MadPhysicist It is a shape used for Keras convolutional layers, 3d images of 24,24,24 with 9 channels.

Mad Physicist about 6 years: @Wise. Would you be OK with looking at it as a 24*24*24-by-9 array then?

Mad Physicist about 6 years: @Wise. Please clarify your question with some context. The fact that you are unhappy with all the solutions proposed so far means that your question is incomplete. Instead of leaking important details one by one and wasting everyone's time, please indicate where and how you are getting the multiple arrays, what you are trying to do with them, and the issues you have had with the approaches you tried so far.

MaxU - stop genocide of UA about 6 years: Did you check keras.layers.normalization.BatchNormalization already?

Wise about 6 years: @MaxU No I haven't. I use them, but I don't know how they affect the input data.

MaxU - stop genocide of UA about 6 years: @Wise, same here... I didn't start my "Keras journey" yet...

Wise about 6 years: Thanks, my problem is that I want to minmax scale a large number of arrays, so it's not one large array, but numerous arrays which are relatively large.

Mad Physicist about 6 years: @Wise. Then concatenate them and apply the function along a particular axis. Please make your question clear and complete.

sciroccorics about 6 years: @MaxU: You're right for this specific case where full broadcasting is used. But using in-place operators is often a life-saver ;-)

Asclepius over 5 years: This answer is dangerous because ptp() can in theory return 0.

Mad Physicist over 5 years: @A-B-B. Same as manually computing the difference. The question is about how to speed up the code, not catch all the corner cases. That being said, your comment is extremely useful.