Reset weights in Keras layer

Solution 1

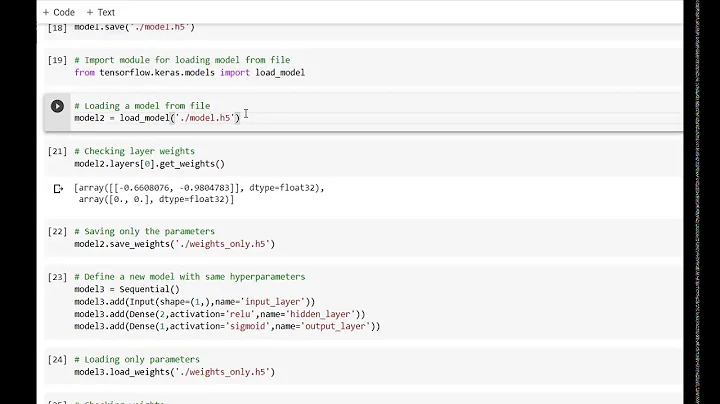

Save the initial weights right after compiling the model but before training it:

model.save_weights('model.h5')

and then after training, "reset" the model by reloading the initial weights:

model.load_weights('model.h5')

This gives you an apples-to-apples comparison across different data sets, because every run starts from identical weights, and it should be quicker than recompiling the entire model.
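If writing to disk is too slow, the same idea can be done in memory with `get_weights()` and `set_weights()`. The following is a minimal sketch, assuming TensorFlow 2 with `tf.keras`; the toy model, the random data, and the two-run loop are illustrative assumptions rather than part of the original answer.

```python
import numpy as np
import tensorflow as tf

# Toy model and data, for illustration only (assumed, not from the answer).
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer="sgd", loss="mse")

# Snapshot the freshly initialized weights as a list of NumPy arrays.
initial_weights = model.get_weights()

rng = np.random.default_rng(0)
x = rng.normal(size=(64, 4)).astype("float32")
y = rng.normal(size=(64, 1)).astype("float32")

for run in range(2):
    # Start every run from the same initial weights.
    model.set_weights(initial_weights)
    model.fit(x, y, epochs=1, verbose=0)

# Restore once more and read the weights back for comparison.
model.set_weights(initial_weights)
restored = model.get_weights()
```

Note that `set_weights` restores the layer weights only; any state held by the optimizer (for example Adam's moment estimates) is not reset by this call.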

Solution 2

Reset all layers by checking for initializers:

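def reset_weights(model):
    # TensorFlow 1.x only: K.get_session() is not available under TensorFlow 2.
    import keras.backend as K
    session = K.get_session()
    for layer in model.layers:
        # Re-run the initializer op attached to each kernel and bias variable.
        if hasattr(layer, 'kernel_initializer'):
            layer.kernel.initializer.run(session=session)
        if hasattr(layer, 'bias_initializer'):
            layer.bias.initializer.run(session=session)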

Update: the initializer op is now reached through layer.kernel.initializer rather than layer.kernel_initializer.

Solution 3

If you want to truly re-randomize the weights, and not merely restore the initial weights, you can do the following. The code is slightly different depending on whether you're using TensorFlow or Theano.

from keras.initializers import glorot_uniform  # Or your initializer of choice
import keras.backend as K

initial_weights = model.get_weights()

backend_name = K.backend()
if backend_name == 'tensorflow':
    k_eval = lambda placeholder: placeholder.eval(session=K.get_session())
elif backend_name == 'theano':
    k_eval = lambda placeholder: placeholder.eval()
else:
    raise ValueError("Unsupported backend")

new_weights = [k_eval(glorot_uniform()(w.shape)) for w in initial_weights]

model.set_weights(new_weights)
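For reference, `glorot_uniform` samples from a uniform distribution on [-limit, limit] with limit = sqrt(6 / (fan_in + fan_out)). The sketch below does the same re-randomization with NumPy alone, so it does not depend on a backend session. It is an illustration under stated assumptions, not the code from the answer: `glorot_uniform_array` and `reinit_weights` are hypothetical helper names, arrays with two or more dimensions are treated as kernels, and 1-D arrays are assumed to be biases and reset to zero (the Keras default bias initializer). That last assumption would be wrong for layers such as BatchNormalization, whose 1-D weights are not biases.

```python
import numpy as np

def glorot_uniform_array(shape, rng, dtype="float32"):
    """Sample an array of `shape` from the Glorot (Xavier) uniform distribution."""
    receptive_field = int(np.prod(shape[:-2]))  # 1 for 2-D Dense kernels
    fan_in = shape[-2] * receptive_field
    fan_out = shape[-1] * receptive_field
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=shape).astype(dtype)

def reinit_weights(weights, seed=None):
    """Return new arrays with the same shapes as `weights` (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    new_weights = []
    for w in weights:
        if w.ndim >= 2:
            # Assumed to be a kernel: draw fresh Glorot-uniform values.
            new_weights.append(glorot_uniform_array(w.shape, rng, w.dtype))
        else:
            # Assumed to be a bias: reset to zeros.
            new_weights.append(np.zeros_like(w))
    return new_weights

# Stand-in for model.get_weights() of a Dense(3) layer fed 4 inputs.
old = [np.ones((4, 3), dtype="float32"), np.ones(3, dtype="float32")]
new = reinit_weights(old, seed=0)
```

With a real model, the helper would be applied as `model.set_weights(reinit_weights(model.get_weights()))`.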

Solution 4

I have found the clone_model function that creates a cloned network with the same architecture but new model weights.

Example of use:

import tensorflow

model_cloned = tensorflow.keras.models.clone_model(model_base)

Comparing the weights:

original_weights = model_base.get_weights()
print("Original weights", original_weights)
print("========================================================")
print("========================================================")
print("========================================================")
model_cloned = tensorflow.keras.models.clone_model(model_base)
new_weights = model_cloned.get_weights()
print("New weights", new_weights)

If you execute this code several times, you will notice that the cloned model receives new weights each time.
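One practical detail: `clone_model` copies the architecture only, so the clone is not compiled and has to be compiled again before training. A minimal sketch, assuming TensorFlow 2 with `tf.keras`; the toy `model_base`, the random data, and the compile settings are illustrative assumptions.

```python
import numpy as np
import tensorflow as tf

# Toy base model, for illustration only.
model_base = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1),
])
model_base.compile(optimizer="sgd", loss="mse")

# The clone has the same architecture but newly created layers and weights.
model_cloned = tf.keras.models.clone_model(model_base)
# The clone is not compiled, so compile it before calling fit().
model_cloned.compile(optimizer="sgd", loss="mse")

base_kernel = model_base.get_weights()[0]
cloned_kernel = model_cloned.get_weights()[0]
kernels_differ = not np.array_equal(base_kernel, cloned_kernel)

# Training the clone leaves model_base untouched.
x = np.zeros((8, 4), dtype="float32")
y = np.zeros((8, 1), dtype="float32")
model_cloned.fit(x, y, epochs=1, verbose=0)
```

Since the clone is a separate model, the original can be kept as a template and cloned again for each new training run.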

Solution 5

TensorFlow 2 answer:

for ix, layer in enumerate(model.layers):
    if hasattr(model.layers[ix], 'kernel_initializer') and \
            hasattr(model.layers[ix], 'bias_initializer'):
        weight_initializer = model.layers[ix].kernel_initializer
        bias_initializer = model.layers[ix].bias_initializer

        old_weights, old_biases = model.layers[ix].get_weights()

        model.layers[ix].set_weights([
            weight_initializer(shape=old_weights.shape),
            bias_initializer(shape=old_biases.shape)])

Original weights:

model.layers[1].get_weights()[0][0]

array([ 0.4450057 , -0.13564804,  0.35884023,  0.41411972,  0.24866664,
        0.07641453,  0.45726687, -0.04410008,  0.33194816, -0.1965386 ,
       -0.38438258, -0.13263905, -0.23807487,  0.40130925, -0.07339832,
        0.20535922], dtype=float32)

New weights:

model.layers[1].get_weights()[0][0]

array([-0.4607593 , -0.13104361, -0.0372932 , -0.34242013,  0.12066692,
       -0.39146423,  0.3247317 ,  0.2635846 , -0.10496247, -0.40134245,
        0.19276887,  0.2652442 , -0.18802321, -0.18488845,  0.0826562 ,
       -0.23322225], dtype=float32)

Tor

Updated on July 09, 2022

Comments

-

Tor almost 2 years

I'd like to reset (randomize) the weights of all layers in my Keras (deep learning) model. The reason is that I want to be able to train the model several times with different data splits without having to do the (slow) model recompilation every time.

Inspired by this discussion, I'm trying the following code:

# Reset weights
for layer in KModel.layers:
    if hasattr(layer,'init'):
        input_dim = layer.input_shape[1]
        new_weights = layer.init((input_dim, layer.output_dim),name='{}_W'.format(layer.name))
        layer.trainable_weights[0].set_value(new_weights.get_value())

However, it only partly works.

Partly, because I've inspected some layer.get_weights() values, and they seem to change. But when I restart the training, the cost values are much lower than the initial cost values on the first run. It's almost as if I've succeeded in resetting some of the weights, but not all of them.

-

Tor about 7 years

I ended up doing something similar. Saving to disk and loading takes a lot of time, so I just keep the weights in a variable: `weights = model.get_weights()`. I get the initial weights like this before running the first training. Then, before each subsequent training, I reload the initial weights and run jkleint's shuffle method, as mentioned in the link that I posted. Seems to work smoothly.

-

BallpointBen about 6 years

For the full code snippet of @Tor's suggestion: `weights = model.get_weights()`, `model.compile(args)`, `model.fit(args)`, `model.set_weights(weights)`

-

bendl over 5 years

Not quite as portable but works well for tensorflow backend!

-

guillefix over 5 years

Nice and simple solution!

-

Bersan over 5 years

Cannot evaluate tensor using `eval()`: No default session is registered.

-

SuperNES over 5 years

This is the best approach in my view.

-

Xiaohong Deng about 5 years

Is it outdated? Now `kernel_initializer` has no attribute `run`. In my case `kernel_initializer` is a VarianceScaling object.

-

Andrew almost 5 years

Based on this, I've started making a lambda function when I initialize my model. I build the model, then do something like `weights = model.get_weights(); reset_model = lambda model: model.set_weights(weights)`, that way I can just call `reset_model(model)` later.

-

tkchris almost 5 years

@XiaohongDeng try `kernel.initializer.run(session=session)` instead. I had the same problem.

-

Bersan over 4 years

`AttributeError: module 'tensorflow_core.keras.backend' has no attribute 'get_session'` using tensorflow.keras

-

Mendi Barel over 4 years

Yes, it won't work in tf2, look here toward the end for tf2: github.com/keras-team/keras/issues/341

-

isobretatel over 3 years

`RuntimeError: You must compile your model before training/testing. Use model.compile(optimizer, loss).`

-

Agustin Barrachina over 2 years

This has an obvious issue: all models will have the same starting weights. What we want (I think) is for the weights to be randomly initialized again.