SciPy LeastSq Goodness of Fit Estimator

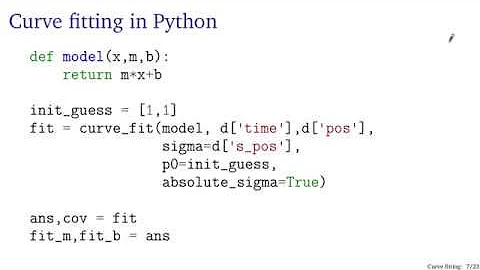

If you call leastsq like this:

import scipy.optimize

p,cov,infodict,mesg,ier = optimize.leastsq(

residuals,a_guess,args=(x,y),full_output=True)

where

def residuals(a,x,y):

return y-f(x,a)

then, using the definition of R^2 given here,

ss_err=(infodict['fvec']**2).sum()

ss_tot=((y-y.mean())**2).sum()

rsquared=1-(ss_err/ss_tot)

What is infodict['fvec'] you ask? It's the array of residuals:

In [48]: optimize.leastsq?

...

infodict -- a dictionary of optional outputs with the keys:

'fvec' : the function evaluated at the output

For example:

import scipy.optimize as optimize

import numpy as np

import collections

import matplotlib.pyplot as plt

x = np.array([821,576,473,377,326])

y = np.array([255,235,208,166,157])

def sigmoid(p,x):

x0,y0,c,k=p

y = c / (1 + np.exp(-k*(x-x0))) + y0

return y

def residuals(p,x,y):

return y - sigmoid(p,x)

Param=collections.namedtuple('Param','x0 y0 c k')

p_guess=Param(x0=600,y0=200,c=100,k=0.01)

p,cov,infodict,mesg,ier = optimize.leastsq(

residuals,p_guess,args=(x,y),full_output=True)

p=Param(*p)

xp = np.linspace(100, 1600, 1500)

print('''\

x0 = {p.x0}

y0 = {p.y0}

c = {p.c}

k = {p.k}

'''.format(p=p))

pxp=sigmoid(p,xp)

# You could compute the residuals this way:

resid=residuals(p,x,y)

print(resid)

# [ 0.76205302 -2.010142 2.60265297 -3.02849144 1.6739274 ]

# But you don't have to compute `resid` -- `infodict['fvec']` already

# contains the info.

print(infodict['fvec'])

# [ 0.76205302 -2.010142 2.60265297 -3.02849144 1.6739274 ]

ss_err=(infodict['fvec']**2).sum()

ss_tot=((y-y.mean())**2).sum()

rsquared=1-(ss_err/ss_tot)

print(rsquared)

# 0.996768131959

plt.plot(x, y, '.', xp, pxp, '-')

plt.xlim(100,1000)

plt.ylim(130,270)

plt.xlabel('x')

plt.ylabel('y',rotation='horizontal')

plt.grid(True)

plt.show()

Related videos on Youtube

Richard

Scientist. Developer. Tinkerer. I develop high-performance algorithms for investigating big data problems, especially those involving graphs. My background is in computational science, physics, and ecology.

Updated on June 14, 2022Comments

-

Richard almost 2 years

Richard almost 2 yearsI have a data surface that I'm fitting using SciPy's

leastsqfunction.I would like to have some estimate of the quality of the fit after

leastsqreturns. I'd expected that this would be included as a return from the function, but, if so, it doesn't seem to be clearly documented.Is there such a return or, barring that, some function I can pass my data and the returned parameter values and fit function to that will give me an estimate of fit quality (R^2 or some such)?

Thanks!

-

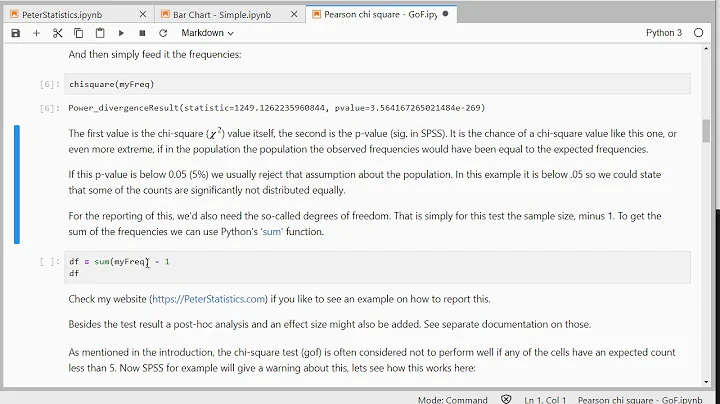

Admin over 12 yearsIs the above definition of rsquared correct? For each bin, the value of residual^2 should be divided to variance^2 and then at the end, sum up all the results.

Admin over 12 yearsIs the above definition of rsquared correct? For each bin, the value of residual^2 should be divided to variance^2 and then at the end, sum up all the results. -

astromax almost 11 years@ user1016260 There aren't any errors in the above example, but yes, it should be taken into account in that way. My question is about doing this for different models with different numbers of parameters. Basically with this R^2 method there is no way that the number of parameters are being taken into account. With something like a reduced chi-square, it does. Wouldn't that be best to use for models? This may not be appropriate to discuss here.

-

Irene over 9 yearsI would like to know the criteria to choose those parameters and not others. Because I tried this example with different values for the parameters, and the function is always converging and producing the same Rsquared. But if I use too big values for x0/y0, then there is no convergence. My point is, why we need to specify those initial params? How to choose them properly? Do they have to fall in the represented space or "near" the curve? Thanks

-

unutbu over 9 years@iamgin:

leastsquses the Levenberg–Marquardt algorithm. Per the link, "In cases with only one minimum, an uninformed standard guess ... will work fine; in cases with multiple minima, the algorithm converges only if the initial guess is already somewhat close to the final solution." -

Irene over 9 yearsThat is a very fine and revealing answer applicable to other algorithms, thank you very much. But one more thing, what are your "c" and "k", the two parameters needed to define a sigmoid curve? Slope and intercept maybe?

-

unutbu over 9 years@iamgin: The sigmoid function used above has the form

y = c / (1 + np.exp(-k*(x-x0))) + y0. Whenxis huge theexp(...)is essentially zero soytends towardc + y0. Whenxis huge and negative,exp(...)is huge,c / (huge)tends to 0, soytends towardy0. Soy0is the lower limit -- in the picture around 140, andc + y0is the upper limit -- around 250. Socis the span or difference 250 - 140 ~ 110. -

FaCoffee almost 8 yearsWhere is the p-value supposed to be? E.g., how do we justify the value of R squared obtained as such?

FaCoffee almost 8 yearsWhere is the p-value supposed to be? E.g., how do we justify the value of R squared obtained as such? -

unutbu almost 8 years@CF84: The standard p-value formula only applies to linear ordinary least squares. "Caution must be exercised [in the nonlinear case] since the validity of the approximation will depend on the nonlinearity of the model, the variance and distribution of the errors, and the data itself" (ODRpack95 User Guide PDF, Section 4B).

-

unutbu almost 8 years@CF84: (cont'd) In general I think it is better to apply the bootstrap method. This will give you a confidence interval for the parameters. To translate that into a p-value is a math problem whose solution depends on the above-mentioned cautionary factors.