Set max file limit on a running process

Solution 1

As documented here, the prlimit command, introduced with util-linux 2.21, allows you to read and change the limits of running processes.

This is the follow-up to the writable /proc/<pid>/limits patch, which was never merged into the mainline kernel. Unlike that patch, this solution should work on stock kernels.

If you don't have prlimit(1) yet, you can find the code to a minimalistic version in the prlimit(2) manpage.
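As a rough illustration of the prlimit(1) approach, the sketch below raises the soft open-file limit of a running process up to its existing hard limit, which does not need root. The `sleep 300` target is only a stand-in for the real long-running process; raising the hard limit as well would normally require root.

```shell
#!/bin/sh
# Start a throwaway long-running process to act as the target
# (a stand-in for the PID of the real process).
sleep 300 &
pid=$!

# Read the current soft and hard open-file limits of the running process.
soft=$(prlimit --pid "$pid" --nofile --noheadings --output SOFT | tr -d ' ')
hard=$(prlimit --pid "$pid" --nofile --noheadings --output HARD | tr -d ' ')
echo "before: soft=$soft hard=$hard"

# Raise the soft limit up to the hard limit; the "N:" form changes only
# the soft value and leaves the hard value untouched.
prlimit --pid "$pid" --nofile="${hard}:"

new_soft=$(prlimit --pid "$pid" --nofile --noheadings --output SOFT | tr -d ' ')
echo "after:  soft=$new_soft hard=$hard"

# Clean up the stand-in process.
kill "$pid"
```

For a real process, replace the `sleep` lines with `pid=<PID>` and, if running as root, pass both values, for example `--nofile=4096:8192`.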

Solution 2

On newer kernels (2.6.32+) on CentOS/RHEL you can change this at runtime with /proc/<pid>/limits:

cd /proc/7671/

[root@host 7671]# cat limits | grep nice

Max nice priority 0 0

[root@host 7671]# echo -n "Max nice priority=5:6" > limits

[root@host 7671]# cat limits | grep nice

Max nice priority 5 6
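Before trying the write shown above, it may help to check how the limits file is exposed on your kernel. The sketch below reads the open-file limits from /proc and looks at the file mode. It assumes that a patched kernel would show an owner write bit on the file; that is a guess about how the vendor patch appears, not something confirmed here. Mainline kernels expose the file read-only.

```shell
#!/bin/sh
# Inspect the current shell's own limits file; substitute a real PID for $$.
pid=$$
limits_file="/proc/$pid/limits"

# Fields on this line: "Max", "open", "files", soft limit, hard limit, units.
nofile=$(awk '/^Max open files/ {print $4 ":" $5}' "$limits_file")
echo "Max open files (soft:hard) = $nofile"

# Mainline kernels show -r--r--r-- here. An owner write bit would suggest
# (assumption) a vendor kernel carrying the writable-limits patch.
mode=$(stat -c '%A' "$limits_file")
case "$mode" in
  -rw*) echo "$limits_file looks writable ($mode)" ;;
  *)    echo "$limits_file is read-only ($mode); use prlimit instead" ;;
esac
```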

Solution 3

On newer versions of util-linux-ng you can use the prlimit command. For more information, see https://superuser.com/questions/404239/setting-ulimit-on-a-running-process

Solution 4

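You can try ulimit (see man ulimit) with the -n option; however, the man page notes that most OSes do not allow this to be set. Keep in mind that ulimit applies to the current shell and the processes started from it, not to a process that is already running.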

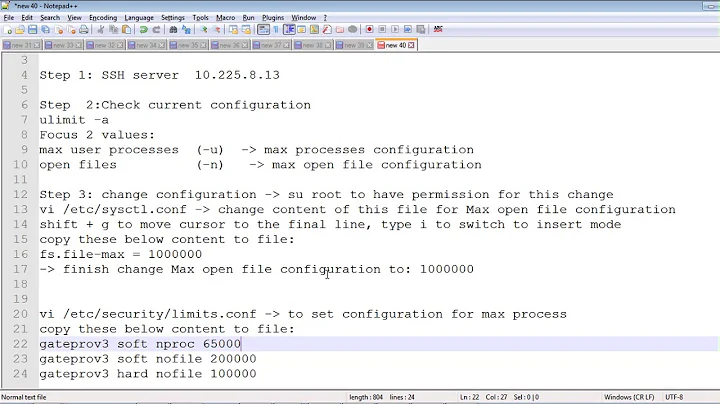

You can set a system-wide file descriptor limit using sysctl -w fs.file-max=N and make the change persist across reboots in /etc/sysctl.conf
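To see what fs.file-max currently is before changing it, something like the following sketch can be used. Note that this is the system-wide ceiling on open file handles, which is separate from the per-process limit discussed above. The value 2097152 in the comments is an arbitrary placeholder, not a recommendation.

```shell
#!/bin/sh
# System-wide ceiling on open file handles
# (the value that "sysctl -w fs.file-max=N" changes).
file_max=$(cat /proc/sys/fs/file-max)

# file-nr holds three numbers: allocated handles, allocated-but-unused
# handles, and the maximum.
read -r allocated unused maximum < /proc/sys/fs/file-nr
echo "fs.file-max=$file_max allocated=$allocated"

# To raise it (as root) and persist the change across reboots, something like:
#   sysctl -w fs.file-max=2097152
#   echo 'fs.file-max = 2097152' >> /etc/sysctl.conf
```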

However, I would also suggest looking at the process to see whether it really needs so many files open at a given time, and whether you can close some of them and make the process more efficient.
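One way to look at how many descriptors a process is actually holding is to count the entries under /proc/<pid>/fd and compare the count with the soft limit. This is only a sketch: it inspects the current shell (`$$`) as a stand-in, and reading another user's process needs appropriate permissions.

```shell
#!/bin/sh
pid=$$   # substitute the PID of the long-running process

# Each entry in /proc/<pid>/fd is one open descriptor.
open_fds=$(ls "/proc/$pid/fd" | wc -l | tr -d ' ')
soft_limit=$(awk '/^Max open files/ {print $4}' "/proc/$pid/limits")
echo "pid $pid: $open_fds of $soft_limit descriptors in use"

# Group descriptors by target to spot what is piling up. The first line of
# "ls -l" is the "total" line, so it is skipped; targets containing spaces
# are shown by their last word only.
ls -l "/proc/$pid/fd" | awk 'NR > 1 {print $NF}' | sort | uniq -c | sort -rn | head -n 5
```

Running this periodically against the real PID shows whether the count keeps climbing, which would point to a descriptor leak rather than a limit that is simply too low.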


kāgii

President of touchlab, Android-focused consulting company. Run Droidcon NYC and the big meetup.

Updated on September 17, 2022

Comments

-

kāgii over 1 year

I have a long running process that is eventually going to hit the max open file limit. I know how to change that after it fails, but is there a way to change that for the running process, from the command line?

-

kāgii over 13 years

The open file thing is a bug, but I haven't been able to figure it out. While I'd love to do so, production periodically crashes mid-day.

-

clee almost 12 years

That rules! Had no idea you could write to the limits file.

-

Poma almost 12 years

Doesn't work for me on Ubuntu 12.04.

-

Totor about 11 years

This does not work on my 3.2 kernel. I guess your distribution has a specific unofficial patch for this because I see no trace of this patch in the kernel's fs/proc/base.c.

-

Sig-IO over 8 years

Could be... I know it's present in RHEL / CentOS kernels. It doesn't seem to be present in Debian kernels.

-

try-catch-finally almost 7 years

ulimit does not apply settings to running processes.

-

Totor over 6 years

+1 for the minimalistic version in the manpage!

-

John about 5 years

Warning: "memcached" will segfault if you change "nolimit" on its process after it has encountered a limit. It is not always a good idea to use the writable limits.

-

Hontvári Levente about 4 years

OpenJDK 11 java also segfaulted (but I only tried once).

-

Mikko Rantalainen over 2 years

The kernel doesn't provide an interface for a process to be informed about changed limits. Some programs query limits during process startup and may crash or misbehave if the limits are changed at runtime. Increasing the limits at runtime should be okay for all correctly written programs, though.

-

Mikko Rantalainen over 2 years

Modern Linux kernel provides prlimit() for setting the limits at runtime. Writing to /proc/<pid>/limits was a suggested patch that never got traction in official kernel.