Simplest way to shrink transaction log files on a mirrored production database

Solution 1

Do a transaction log backup, with whatever method you feel most comfortable with.

This will cause the transaction logs that have already been committed to the database to be deleted from disk. Ideally, you should actually create a database maintenance task to do this for you on a recurring basis for precisely this reason - to eliminate the old transaction logs so you don't fill up your disk.

Per the other bit of your question... no, not really. Yes, they perform that function, but not only that function.

Databases are not backed up (or written to) in the traditional fashion that other files are, because the database file itself is constantly in use and constantly changing. So a single "point in time" back up would either require taking the database offline to "freeze it" in a consistent state or result in different parts of the backup containing different data than was there when the backup started.

What transaction logs are is records of every "transaction" the database performed. Rather than writing to the database file every time a record is changed, updated, added, removed, etc., those actions are written to a separate file, a transaction log, and then committed into the database file when the SQL server determines it's safe to do so without bringing any activity to a halt. So transaction logs are, in effect, where changes to the database go before they actually become changes to the database [file].

So, if you need to get back to a given database state, or point in time, the transaction logs are "replayed." Essentially, not copying the file data, but going to the most recent point-in-time state found for the database and then doing all the same things that got the database to the specified [later] state. But, it's important to note that at any given time, your transaction logs will contain transactions that will not have been committed to the database yet. So they're more than just the ability to perform a point-in-time restore. They contain [some] changes that are being made, or will shortly be made to the database.

This is why you're forced to do a backup before purging the transaction logs - once that backup's done, the system has a point-in-time copy of the database to reference for any future restores, and is able to determine which transactions have been committed to the database, and which have not. And with that information, the system knows which obsolete transaction logs to delete for you and which not to.

This can, however, take some time, depending on the size of your transaction logs. If you've never done one before, brace yourself it'll take a while.

Solution 2

This is an older question, but there are some things that were not explained well and this will hopefully shed some light. The how to shrink was answered, mostly, but this will explain the "why" part of what you are actually doing.

Backups, specifically log backups, do several things. The data is written to disk as a backup set to be used or discarded later as needed. Each subsequent backup is added to that set, in a Fully logged DB. Truncating the log or starting a new backup set breaks the chain and inhibits, or in many cases, negates your ability to restore to a point in time before the chain was broken.

The data in the log is not actually deleted, nor is the log file shrunk during a backup. The active VLFs are marked inactive, except for those that cannot be fully committed - they remain active. Inactive VLFs are able to be rewritten, making your log circular, in effect like a snake eating its own tail. A checkpoint is issued, as part of the backup, which tells the DB to start writing to the beginning of the log, once the current VLF fills up. If you perform a shrink at this point, you will gain back all the space at the end of the log, up to the point of the active VLF.

The reason a second backup seems to "do the trick" is because the active VLF typically fills up during this time and the log is being written from the beginning. When you take the second backup, the active VLF is written to disk as part of the backup set (or not), and the VLF is marked inactive. Since this was the tail end of the log due to the previous shrink, performing a shrink now frees up all the space to the beginning of the log, up to the currently active VLF.

All of this assumes a couple of things, 1.) you don't have massive VLFs that take hours or days to fill up and 2.) your database is fairly inactive and there aren't a bunch of transactions being written to the log. If either of these conditions are an issue for you, shrinking your log will also be an issue.

All of this is true for both unmirrored and mirrored databases. The difference is that you only need to perform the maintenance on the primary in a mirrored scenario, assuming your mirror is built identically.

Solution 3

The mirroring feature uses the log to keep track of things until it knows that the other server has those changes. So, no, the ldf is not just for point-in-time recovery. (It's also important for some replication schemes, but you aren't doing that.) Even TRUNCATE_ONLY will not throw away logged changes for stuff that SQL might need. The classic example is some large or long-running transaction. If you are halfway through an hour-long transaction and a DBA runs TRUNCATE_ONLY, your stuff won't be purged from the LDF. The LDF may continue to grow or experience other problems. If the DBA kills your connection, waits for the rollback to finish and then runs TRUNCATE_ONLY, then the log should free up.

Have you tried using:

select log_reuse_wait_desc from sys.databases where name = 'mydb'

to see why the log is so large? Microsoft documents that system table here.

You can also run :

dbcc opentran()

This is kind of old-school, but it should show you any long-running transactions in that database. Microsoft documents that command here.

I would do is make sure that there is a log backup happening on a schedule.

I would make sure that I give the TRUNCATE_ONLY command a little time to work, sometimes it takes a while for SQL to start writting to a VLF towards the front of the LDF file. If the last VLF in the LDF file is the one that is being written to, then SQL can't shorten the file. Failing that, I'd do a full BACKUP DATABASE (with COPY_ONLY, or wait until the regular backup happens, if that isn't too far off in the future). Sometimes that seems to kick start things, but it might just be that a backup distracts me while waiting for the current VLF to move to the front of the LDF file. After the current VLF moves towards the front of the LDF file, you should be able to use dbcc shrinkfile() and get the expected result. I'd recommend trying to remove small chunks first, rather than trying to do it all in one shot.

Also, you want to avoid doing this on a regular basis, as getting into a repetitive shrink-autogrow-shrink-autogrow cycle can be a performance killer. (It can lead to fragmented files and the actual growth process can take a surprising amount of time, during which no transaction would be able to commit. Grow your files large enough so that they don't autogrow. Autogrow should be a failsafe thing and not relied on.

Related videos on Youtube

AJAY

Updated on September 18, 2022Comments

-

AJAY almost 2 years

AJAY almost 2 yearsI have to remove one element from array in document 'dsrNote'

which has "_id" : ObjectId("58a5594bb77d2f1dd49e2986")

{ "_id": ObjectId("58a558efb77d2f1dd49e2983"), "userId": ObjectId("586356b199248d1517a6758b"), "dsrNote": [ { "activity": "ajay kumar dogra", "_id": ObjectId("58a5594bb77d2f1dd49e2986") }, { "activity": "ajay kumar", "_id": ObjectId("58a55969b77d2f1dd49e2987") } ], }In above document i need to remove first object from dsrNote array

i have tried this, but it is not working

collectionName.update({ "userId": "586356b199248d1517a6758b" }, { $pull: { "dsrNote": { "_id": "58a5594bb77d2f1dd49e2986" } } }).exec(function (err, data) {});-

Israel Zinc over 7 yearsWhat's the error message?

-

RaR over 7 years

{ $pull: { "dsrNote": { "_id": 58 a5594bb77d2f1dd49e2986 } } }-> why_idvalue is not enclosed by double quotes? Is that a typo? -

AJAY over 7 yearsDouble quotes is just formatted issue while posting , no error coming

AJAY over 7 yearsDouble quotes is just formatted issue while posting , no error coming -

AJAY over 7 years@israel.zinc no error coming

AJAY over 7 years@israel.zinc no error coming

-

-

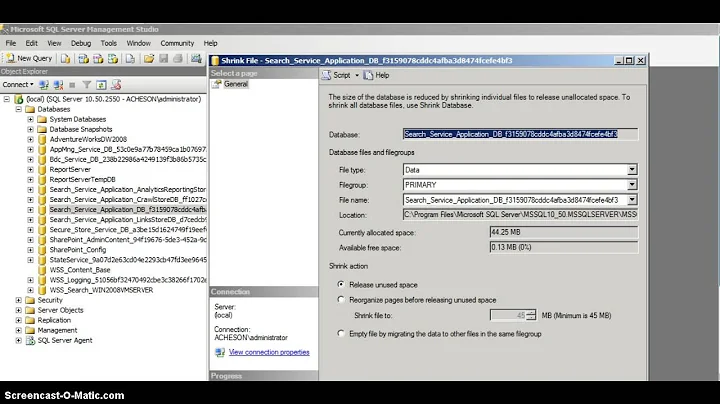

MGOwen almost 12 years@HopelessNOOb Thanks, this answer explains a lot; but backing up the log didn't actually free up any space. I even did Shrink afterwards (just right click Shrink, Files, then Log) and it still did nothing, though it said 99% of the log was now free to be removed. Any idea what I'm doing wrong?

-

MGOwen almost 12 years@Chris McKeown: Thanks. If I've just done a full database backup, is there any real advantage to keeping that log data? It's no use at all, right?

-

Chris McKeown almost 12 years@MGOwen You mean keeping the log data from before you performed a full backup? You could use that (in conjunction with a previous full backup) to restore to a point in time in the past. If you want to continue to be able to restore to a point in time, you need to keep backing up the transaction log which will also keep the log file size down. If you're not bothered about restoring to a point in time, switch the recovery mode to Simple and just do regular full and diff backups.

-

MGOwen almost 12 years@Chris McKeown: I think I might prefer Simple, but I tried changing to simple, and it said I can't do that if I'm mirroring.

-

adaptr almost 12 yearsThe Simple recovery model tells SQL Server to truncate the log after every checkpoint, and not just after a manual log backup. This means that the log is no longer guaranteed to contain all the data the mirror needs to stay in sync, so you can't use Simple.

-

MGOwen almost 12 years@adaptr Ah, so simple is irrelevant to my question, as I am mirroring. Back to my original question, then: How do I flush my backed up log (when mirroring)?

-

MGOwen almost 12 yearsOK, after doing 2 backups through SSMS (not TSQL) of just the log, creating a totally new backup set, the Shrink-Files-Log dialog in SSMS finally actually worked, freeing some disk space. Not sure why 2 backups where needed, or why TSQL didn't work, and there certainly was no difference in the reported "space available to be reclaimed" in the shrink dialog, but problem solved for now. Thanks all.