Slow disk I/O in KVM with LVM and md raid5

Solution 1

I am not sure my note covers the whole problem, but with this storage configuration you cannot get proper alignment.

Let's see:

- You can align partition boundaries to the RAID stripe size; that is fine.

- You can set the file-system optimization parameters accordingly; that is also fine.

- But to align LVM properly, the RAID stripes need to fit the LVM extent size.

The LVM extent size is always a power of 2, so your RAID stripe size needs to be a power of 2 as well. For that, the number of disks in the RAID5 array must equal 2^N+1 = 3, 5, 9 ...

With 4 disks in RAID5 it is impossible.
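The arithmetic behind this can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the 512 KiB chunk size is assumed from the value reported in the question's pastebin. A RAID5 stripe carries one chunk per data disk, and one disk's worth of space goes to parity.

```python
def is_power_of_two(n: int) -> bool:
    """Return True if n is a positive power of two."""
    return n > 0 and (n & (n - 1)) == 0


def raid5_full_stripe_kib(total_disks: int, chunk_kib: int) -> int:
    """Data carried by one full RAID5 stripe, in KiB.

    RAID5 spends the equivalent of one disk on parity, so each stripe
    holds (total_disks - 1) data chunks.
    """
    if total_disks < 3:
        raise ValueError("RAID5 needs at least 3 disks")
    return (total_disks - 1) * chunk_kib


CHUNK_KIB = 512  # assumption: chunk size taken from the question's pastebin

for disks in range(3, 10):
    stripe = raid5_full_stripe_kib(disks, CHUNK_KIB)
    verdict = "power of two" if is_power_of_two(stripe) else "not a power of two"
    print(f"{disks} disks: full stripe = {stripe} KiB ({verdict})")
```

Only 3, 5 and 9 disks give a power-of-two stripe in this range; 4 active disks give 1536 KiB, which cannot divide a power-of-two extent evenly.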

Since software RAID5 has no protected write-back cache, it may be significantly vulnerable to the "partial stripe write penalty".

Maybe other causes also restrict your write performance, but the first thing I would do is migrate to RAID10. With RAID10 on all 6 disks you get read performance and guest storage capacity comparable to your initial setup ... and no headache with alignment ;).
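A rough capacity check supports the "comparable capacity" claim. The sketch below uses hypothetical helper names and assumes 750 GB disks (from the question) and a RAID10 layout that keeps two copies of every block, so half of the raw space is usable.

```python
DISK_GB = 750  # per-disk size from the question (6x WD Red 750GB)


def raid5_usable_gb(disks: int, disk_gb: int) -> int:
    """RAID5 loses the equivalent of one disk to parity."""
    if disks < 3:
        raise ValueError("RAID5 needs at least 3 disks")
    return (disks - 1) * disk_gb


def raid10_usable_gb(disks: int, disk_gb: int) -> int:
    """RAID10 with two copies of each block: half the raw space is usable.

    Assumption: an even number of disks and two-way mirroring.
    """
    if disks < 4 or disks % 2:
        raise ValueError("this sketch assumes an even number of disks, at least 4")
    return (disks // 2) * disk_gb


print("RAID5 on 4 disks: ", raid5_usable_gb(4, DISK_GB), "GB")
print("RAID10 on 6 disks:", raid10_usable_gb(6, DISK_GB), "GB")
```

Both layouts come out at 2250 GB usable under these assumptions, although moving to RAID10 on all 6 disks means the host OS no longer has two disks to itself.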

Solution 2

I've done extensive testing on KVM and caching performance (you can read here, here and here), and many of the recommendations that you find on the Internet are obsolete or plain wrong. But let's proceed one step at a time...

- RAID5 needs a much smaller chunk size (on the order of 32-64K) than yours (512K, as seen in the pastebin) and a BBU (for writeback caching) to be viable for anything other than sequential read/write patterns.

- Avoid RAID5 for any workload that needs IOPS and/or issues a significant portion of random writes. This is EXACTLY what a guest OS needs (IOPS and random write speed), so the choice of RAID5 (without a hardware BBU to enable array-wide writeback caching) is the wrong one. Use RAID10 instead.

- Absolutely enable cache=writeback for your guests. cache=none is slightly better only on sequential reads/writes or on absolutely irregular, cache-unfriendly patterns.

- Use virtio drivers inside your guests whenever possible.

FrontSlash

Updated on September 18, 2022

FrontSlash over 1 year

I have been battling with a KVM setup under Debian for two weeks now, specifically with guest disk I/O performance.

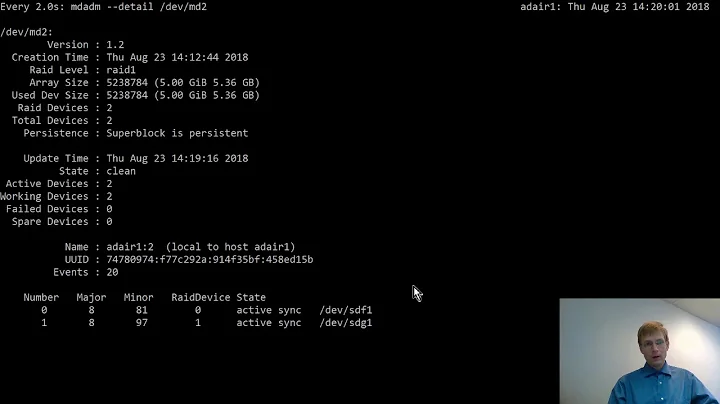

The system:

- Supermicro 1018d-73mtf (X10SL7-F motherboard)
- 16GB ECC/UB
- Intel Xeon E3-1240v3
- 6x WD Red 750GB 6Gb/s

On this I am running Debian Wheezy on two of the disks; the other four disks are set up with md for raid5, with LVM on top for guest storage. Performance directly on the raid5 (measured by creating an LV, mounting it and running bonnie++ and dd tests) is fine, giving me ~220/170MB/s read/write, but on guests I get decent reads and only 40-50MB/s writes, tested on both Windows (Server 2012) and Linux (Debian). I have read up on aligning disks and partitions and recreated the raid and lvm setup by the book, but haven't received any performance boost.

When measuring load with atop during writes directly from the host, I can see that the disks and lvm are getting high loads, but measuring while a guest is writing shows the disks at ~20-30% and lvm going "red" (100%).

The usual KVM/host tweaks have been done: setting the scheduler to deadline, setting stripe caches for the raid, cache=none on the guests, and reflashing the SAS controller card (LSI 2308) to IT mode. I am out of ideas, so here is a pastebin with relevant information about the setup, in the hope that someone notices something I've done wrong: http://pastebin.com/vuykxeVg.

If you need anything else, I'll paste it.

Edits:

This is basically how the drives, md and lvm are set up, with some changes because I am running 3 disks + spare: http://dennisfleurbaaij.blogspot.se/2013/01/setting-up-linux-mdadm-raid-array-with.html

Screenshots of atop during host and guest write tests (bonnie++)

- Admin over 10 years: Can you show me the output of cat /sys/block/sda/queue/scheduler?

- Admin over 10 years: sda? Sure, but it's not used for the guests: noop deadline [cfq]. For the actual RAID disks it's: noop [deadline] cfq

- Admin over 10 years: I meant you should replace sda with your internal disk inside the guest.

- Admin over 10 years: On the Linux guest I have been running noop. I just did another bonnie++ test and got slightly better performance than I did previously on the Linux VM, from 50 to 65MB/s sequential writes, without changing anything.

- Admin over 10 years: What is RAID(5?!) doing inside the VM o_O? (Following the snapshot link for the guest.)

- Admin over 10 years: The atop reading was done on the host while writes were being done within the guest; otherwise I wouldn't be able to spot performance problems on the raid or the lvm in this case (which might be a false positive).

- Admin over 10 years: Please provide the bonnie++ command line parameters.

- Admin over 10 years: Is lxc an option for you (instead of using KVM)?

- Admin over 10 years: @Veniamin, bonnie++ -u root -d /tmp

- Admin over 10 years: @Nils, no, the main point of this server is to have Windows 2012 running on it; the Linux guest was just to test whether Windows was the culprit.

- Admin over 10 years: You can see that writes are still misaligned in the guest case: notable read activity on sd[cde] and KiB/w far from the ideal 512K. What I cannot understand at all is that KiB/w for the LVM/MD devices equals 100K. Even if a subsystem is not aware of the stripe size, 128K or some other 2^N would be expected.

- Admin about 9 years: The first thing I see wrong with your setup is the stripe (chunk) size. In my experience, you won't get much out of a stripe this large. For normal use I'd try a much smaller one (8k-16k?) to see what it does.

- FrontSlash over 10 years: Mind you that only 3 of the disks are active in the raid5; the fourth is a spare, as seen in the mdstat output.

- FrontSlash over 10 years: Using the latest virtio; cache settings have all been tried and cache=none gave the best performance.

- Veniamin over 10 years: OK, did you perform partition alignment and FS optimization inside the VM?

- FrontSlash over 10 years: Yep, see the pastebin; it has parted info about the Windows part.

- David Corsalini over 10 years: That's because people use KVM on distributions that don't do proper testing and integration, like Debian.

- Veniamin over 10 years: Windows is not aware that your stripe is 1MB, nor is the virtio driver. I would try RAID10, at least to finally settle the issue with partial stripe writes.

- FrontSlash over 10 years: My stripe isn't 1MB. I've done the same tests with a raid1 mounted in Windows and Debian with no improvement. You might have missed that I have tested this with both Linux and Windows, Linux with properly aligned sectors (mkfs.ext4 -m 0 -b 4096 -E stripe-width=256,stride=128): stripe-width is based on the fact that there are two data disks, and stride is 128 since it is stripe-size/block-size.

- Veniamin over 10 years: stripe-width=256 means 256 * 4k = 1M, exactly. If you want to enjoy RAID5, be sure to increase the "stripe_cache_size" parameter of mdraid; the default value of 256 does not work at all with your jumbo stripes. Finished.

- Nils over 10 years: @FrontSlash So the "do not use caching" is probably your best path here - if you do not want to abandon KVM and/or Debian.

- Nils over 10 years: @dyasny are there other distributions that do proper testing and integration?

- FrontSlash over 10 years: @Nils, your link to "do not use caching" is broken, was it anything other than setting cache=none for the guest?

- David Corsalini over 10 years: @Nils of course. KVM and QEMU are developed on Fedora and get heavy QA on RHEL; everybody else simply ports them over, sometimes quite poorly.

- Nils over 10 years: @FrontSlash The link is working perfectly - even on a different browser and Android. The core of "no use caching" was to disable caching on the KVM server and enable it within the VM.

- FrontSlash over 10 years: The stripe_cache_size has been tested with several values, none giving much of a result; it is set at 16384 for now.
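The disagreement in the comments about whether the stripe is 1MB comes down to how the mkfs.ext4 stride and stripe-width values are derived. A minimal sketch, assuming the 512K chunk size, 4096-byte blocks and two data disks mentioned in the thread (the function name is hypothetical):

```python
def ext4_stripe_options(chunk_kib: int, block_bytes: int, data_disks: int):
    """Derive the mkfs.ext4 -E stride and stripe-width values.

    stride       = file-system blocks per RAID chunk
    stripe-width = file-system blocks per full stripe (stride * data disks)
    """
    chunk_bytes = chunk_kib * 1024
    if chunk_bytes % block_bytes:
        raise ValueError("chunk size must be a multiple of the block size")
    stride = chunk_bytes // block_bytes
    return stride, stride * data_disks


# Values from the thread: 512K chunk, 4096-byte blocks,
# 3 active RAID5 disks, so 2 data disks.
BLOCK_BYTES = 4096
stride, stripe_width = ext4_stripe_options(512, BLOCK_BYTES, 2)

print(f"-E stride={stride},stripe-width={stripe_width}")
print(f"full stripe = {stripe_width * BLOCK_BYTES // 1024} KiB")
```

With these inputs the result matches the options quoted above (stride=128, stripe-width=256), and 256 blocks of 4 KiB make a full stripe of 1024 KiB, i.e. 1MB of data per stripe across the two data disks.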