Software RAID (mdadm) not adding spare

I'm unclear whether you actually replaced the failed drive(s). Your symptoms would make sense to me if you re-added the faulty drive, in which case there's a good chance the drive has locked up. If you did re-add the faulty drive, are there subsequent errors in `/var/log/messages` or `dmesg`?
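A minimal way to answer that question is to filter the kernel log for the messages a failing disk typically produces. The sketch below assumes a POSIX shell with `grep`; the function name `disk_errors` and its pattern list are illustrative choices (not something `mdadm` provides), modelled on the kind of log lines quoted later in this thread.

```shell
#!/bin/sh
# disk_errors (hypothetical helper): read kernel log text on stdin and print
# only the lines that usually point at a failing disk or an md read failure.
# The pattern list is an assumption; extend it for your controller's wording.
# Exit status follows grep: 0 if anything matched, 1 if nothing did.
disk_errors() {
    grep -E -i 'I/O error|Medium Error|Unrecovered read error|unrecoverable I/O'
}

# Usage (reading the kernel ring buffer may require root):
#   dmesg | disk_errors
#   disk_errors < /var/log/messages
```

Because it only reads stdin, the same filter works on a live `dmesg` or on an old log file copied off a machine that no longer boots.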

(Incidentally, I'd strongly recommend against ever re-adding a faulty drive to a RAID array. If the fault corrupted data on the platter, you may find that when you add it back to the array, the resync leaves the corrupted file on the disk, and the next time you read the file, it'll be a crapshoot whether you get good or bad data, depending on which disk responds first. I have seen this happen in the wild.)


Comments

-

Tauren almost 2 years

I just discovered the same problem on two brand-new, identical servers installed only about 9 months ago. I was unable to write to the disk on either of them because the system had marked it read-only. Logs indicated some sort of disk error on both.

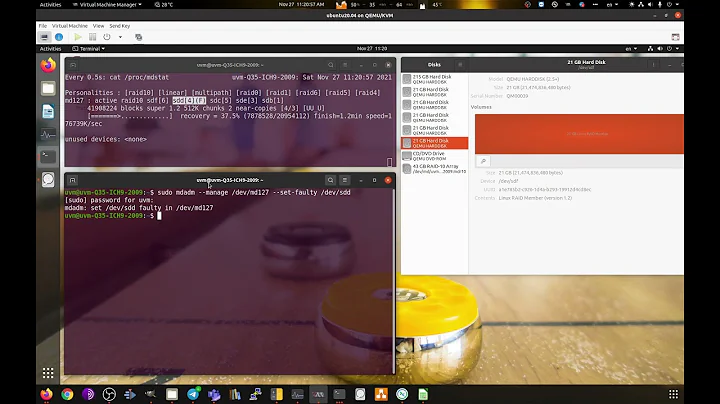

Note that I'm running KVM with several guests on each of these servers. The guests were all running fine; the problem was in the KVM host. This probably doesn't matter, but it may be relevant. Both systems have only two drives, with software RAID1 and LVM on top. Each KVM guest has its own LVM logical volume as well.

Both systems were showing a degraded RAID1 array when looking at `/proc/mdstat`. So I rebooted one of the systems, and it told me I needed to run `fsck` manually. So I did. It appeared to fix the problems, and a reboot brought the system back up normally. The same process worked on the second server as well.

Next I ran `mdadm --manage /dev/md0 --add /dev/sdb1` to add the failed drive back into the array. This worked fine on both servers. For the next hour or so, `/proc/mdstat` showed progress as the drives synced. After about an hour, one system finished, and `/proc/mdstat` showed everything working nicely with `[UU]`.

However, on the other system, after about 1.5 hours, the system load skyrocketed and nothing was responsive. A few minutes later, everything came back. But `/proc/mdstat` now shows the following:

    root@bond:/etc# cat /proc/mdstat
    Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
    md0 : active raid1 sda1[2] sdb1[1]
          293033536 blocks [2/1] [_U]

    unused devices: <none>

As you can see, it appears to be no longer syncing: the percentage completed, time remaining, etc. are no longer shown. However, running `mdadm --detail /dev/md0` shows this:

    root@bond:/etc# mdadm --detail /dev/md0
    /dev/md0:
            Version : 00.90
      Creation Time : Mon Nov 30 20:04:44 2009
         Raid Level : raid1
         Array Size : 293033536 (279.46 GiB 300.07 GB)
      Used Dev Size : 293033536 (279.46 GiB 300.07 GB)
       Raid Devices : 2
      Total Devices : 2
    Preferred Minor : 0
        Persistence : Superblock is persistent

        Update Time : Fri Sep 10 23:38:33 2010
              State : clean, degraded
     Active Devices : 1
    Working Devices : 2
     Failed Devices : 0
      Spare Devices : 1

               UUID : 4fb7b768:16c7d5b3:2e7b5ffd:55e4b71d
             Events : 0.5104310

        Number   Major   Minor   RaidDevice State
           2       8        1        0      spare rebuilding   /dev/sda1
           1       8       17        1      active sync   /dev/sdb1

The bottom of the output seems to indicate that the spare is rebuilding. Why is it a spare? The system is reporting both devices as clean. It has stayed like this for hours. The drives are small, fast 300GB 10K RPM VelociRaptors, so I would think they would have synced by now. Attempting to re-add says the device is busy:

    root@bond:/etc# mdadm /dev/md0 --re-add /dev/sda
    mdadm: Cannot open /dev/sda: Device or resource busy

Running `dmesg` on the "good" server shows this at the end:

    [ 4084.439822] md: md0: recovery done.
    [ 4084.487756] RAID1 conf printout:
    [ 4084.487759]  --- wd:2 rd:2
    [ 4084.487763]  disk 0, wo:0, o:1, dev:sda1
    [ 4084.487765]  disk 1, wo:0, o:1, dev:sdb1

On the "bad" server, those last 4 lines are repeated hundreds of times. On the "good" server, they only show once.
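To put a number on that difference, one could count the `RAID1 conf printout` headers in the kernel log. This is a minimal sketch assuming only `grep`; `conf_printouts` is a hypothetical name. A large count on an array that still reports `[_U]` is the symptom described for the "bad" server.

```shell
#!/bin/sh
# conf_printouts (hypothetical helper): count the "RAID1 conf printout"
# blocks in kernel log text read from stdin. grep -c prints 0 and exits 1
# when nothing matches, so "|| true" keeps the exit status at 0.
conf_printouts() {
    grep -c 'RAID1 conf printout' || true
}

# Usage:
#   dmesg | conf_printouts
```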

Are the drives still syncing? Will this "rebuild" finish? Do I just need to be more patient? If not, what should I do now?
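Whether a rebuild is still running can be checked from the same two places quoted above: while md is actually recovering, `/proc/mdstat` shows a `recovery = N%` line below the member map (such as `[_U]`), and the table at the bottom of `mdadm --detail` gives each member's role. The sketch below assumes a POSIX shell with `awk`; `md_status` and `md_members` are hypothetical helper names, and the parsing assumes the output layout shown in this thread.

```shell
#!/bin/sh
# md_status (hypothetical helper): read /proc/mdstat text on stdin and print
#   "<array> ok|degraded <member map>"    e.g. "md0 degraded [_U]"
#   "<array> recovery|resync <percent>"   only while a rebuild is running
md_status() {
    awk '
        /^md[0-9]+ : / { name = $1; next }     # start of an array stanza
        /^$/           { name = ""; next }     # blank line ends the stanza
        name != "" {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^\[[U_]+\]$/)        # member map like [UU] or [_U]
                    print name, ($i ~ /_/ ? "degraded" : "ok"), $i
                if (($i == "recovery" || $i == "resync") && $(i + 1) == "=")
                    print name, $i, $(i + 2)
            }
        }
    '
}

# md_members (hypothetical helper): read `mdadm --detail` output on stdin and
# print "<device>: <state>" for each row of the table at the bottom.
# Rows without a device path (e.g. "removed") are printed with "-".
md_members() {
    awk '
        $1 == "Number" && $2 == "Major" { table = 1; next }
        table && NF >= 5 {
            dev = "-"; last = NF
            if ($NF ~ /^\/dev\//) { dev = $NF; last = NF - 1 }
            state = $5
            for (i = 6; i <= last; i++) state = state " " $i
            print dev ": " state
        }
    '
}

# Usage, with the output quoted in this thread:
#   md_status < /proc/mdstat              -> md0 degraded [_U]
#   mdadm --detail /dev/md0 | md_members  -> /dev/sda1: spare rebuilding
#                                            /dev/sdb1: active sync
```

If `md_status` prints `degraded` with no `recovery` line while `md_members` still reports `spare rebuilding`, that is the stuck state described in the question.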

UPDATE:

I just rebooted, and the drive started syncing all over again. After almost 2 hours, the same thing happened as described above (I still get `[_U]`). However, I was able to see the dmesg logs before the RAID1 conf printout chunks flooded them:

    [ 6348.303685] sd 1:0:0:0: [sdb] Unhandled sense code
    [ 6348.303688] sd 1:0:0:0: [sdb] Result: hostbyte=DID_OK driverbyte=DRIVER_SENSE
    [ 6348.303692] sd 1:0:0:0: [sdb] Sense Key : Medium Error [current] [descriptor]
    [ 6348.303697] Descriptor sense data with sense descriptors (in hex):
    [ 6348.303699] 72 03 11 04 00 00 00 0c 00 0a 80 00 00 00 00 00
    [ 6348.303707] 22 ee a4 c7
    [ 6348.303711] sd 1:0:0:0: [sdb] Add. Sense: Unrecovered read error - auto reallocate failed
    [ 6348.303716] end_request: I/O error, dev sdb, sector 586065095
    [ 6348.303753] ata2: EH complete
    [ 6348.303776] raid1: sdb: unrecoverable I/O read error for block 586065024
    [ 6348.305625] md: md0: recovery done.

So maybe the question I should be asking is: "How do I run fsck on a spare disk in a RAID set?"
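The `end_request` line above names both the device and the sector that could not be read. A small filter like the hypothetical `bad_sectors` below (POSIX `sed` and `sort` assumed) pulls those pairs out of a long log, so you can see whether repeated failures keep hitting the same spot. Note that the failing device here is `sdb`, which `mdadm --detail` lists as the array's only `active sync` member, i.e. the disk the rebuild has to read from; that would be consistent with the rebuild never completing.

```shell
#!/bin/sh
# bad_sectors (hypothetical helper): read kernel log text on stdin and print
# one "<device> <sector>" pair per distinct failed read reported in the form
# "I/O error, dev <device>, sector <N>" (the wording end_request uses above).
bad_sectors() {
    sed -n 's|.*I/O error, dev \([^,]*\), sector \([0-9][0-9]*\).*|\1 \2|p' | sort -u
}

# Usage:
#   dmesg | bad_sectors        # the log above would give: sdb 586065095
```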

-

Rodger almost 14 years

To reply to your update: that's a fatal hardware failure on sdb. Pull it and replace it. `fsck` won't do anything for it.
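The usual replacement sequence with `mdadm` can be sketched as a helper that only prints the commands, so they can be reviewed and run one at a time. Assumptions: MBR partition tables (dumped and replayed with `sfdisk -d`), a replacement disk at least as large as the surviving one, and device names supplied by the caller; `replace_plan` is hypothetical. One caution specific to this thread: `mdadm --detail` shows `sdb1` as the only `active sync` member and `sda1` never finished rebuilding, so pulling `sdb` would remove the array's only complete copy. Back up the data before following a plan like this.

```shell
#!/bin/sh
# replace_plan (hypothetical helper): print, but do not run, the usual steps
# for swapping a failed RAID1 member. Arguments (all placeholders):
#   $1 array                e.g. /dev/md0
#   $2 failed member        e.g. /dev/sdb1
#   $3 surviving disk       e.g. /dev/sda   (whole disk, not a partition)
#   $4 replacement disk     e.g. /dev/sdb   (whole disk)
#   $5 new member partition e.g. /dev/sdb1
# Assumes MBR partition tables, which `sfdisk -d` can dump and replay.
replace_plan() {
    echo "mdadm --manage $1 --fail $2"
    echo "mdadm --manage $1 --remove $2"
    echo "# power down, swap the physical disk, boot again, then:"
    echo "sfdisk -d $3 | sfdisk $4"
    echo "mdadm --manage $1 --add $5"
    echo "# watch the rebuild until the member map shows [UU]:"
    echo "cat /proc/mdstat"
}

# Usage:
#   replace_plan /dev/md0 /dev/sdb1 /dev/sda /dev/sdb /dev/sdb1
```

Printing rather than executing is deliberate: a wrong device name in `--fail` or `sfdisk` is hard to undo.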

-

Tauren almost 14 years

Thanks, will do. How can you tell this is a fatal error? I plan to run the WD diags on it to confirm this once I can schedule some downtime.

-

Rodger almost 14 years

"raid1: sdb: unrecoverable I/O read error for block 586065024": it couldn't correct the problem by remapping to a different block, so you've lost a block on the disk. This tends to indicate the disk is on the way out. You could follow up with smartmontools to see whether the drive's SMART data confirms that.
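Following up with smartmontools could look like the sketch below: `smartctl -H` for the drive's overall self-assessment, `smartctl -t long` to start an extended self-test, and `smartctl -A` for the attribute table. The `smart_red_flags` helper is hypothetical; it assumes the usual ATA attribute layout (raw value in the tenth column) and treats nonzero reallocated, pending, or offline-uncorrectable sector counts as warning signs, which is a common rule of thumb rather than something smartctl decides for you.

```shell
#!/bin/sh
# smart_red_flags (hypothetical helper): read `smartctl -A` output on stdin and
# print "<attribute> <raw value>" for sector-health attributes whose raw value
# is nonzero. Assumes the ATA table layout with RAW_VALUE in column 10.
smart_red_flags() {
    awk '
        $2 == "Reallocated_Sector_Ct" ||
        $2 == "Current_Pending_Sector" ||
        $2 == "Offline_Uncorrectable" {
            if ($10 + 0 > 0) print $2, $10
        }
    '
}

# Usage (as root):
#   smartctl -H /dev/sdb                     # overall health self-assessment
#   smartctl -t long /dev/sdb                # start an extended self-test
#   smartctl -A /dev/sdb | smart_red_flags   # sector-related warning signs
```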

-

cco over 12 years

-1 for giving bad advice on the RAID1. There is no need to try to save a failed HDD, and RAID1 is designed to survive down to one disk. Just get a new disk and add it to the RAID array. If you want to make sure you can boot from that disk, install GRUB on it as well. I would recommend installing GRUB on both disks in a RAID1; that way you can easily recover if one drive dies and you have to power off to replace it.
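Installing the boot loader on every RAID1 member can be scripted by reading the member list from `/proc/mdstat`. The `md_disks` helper below is hypothetical; it assumes `sdX`-style names where stripping the trailing partition number yields the whole disk (it would not work for NVMe names such as `nvme0n1p1`), skips members flagged `(F)`, and assumes a BIOS-booting system where `grub-install /dev/sdX` writes the boot loader to that disk.

```shell
#!/bin/sh
# md_disks (hypothetical helper): read /proc/mdstat on stdin and print the
# whole-disk name of each non-failed member of the array named in $1.
# Assumes sdX-style names, e.g. member "sda1[2]" -> disk "sda".
md_disks() {
    awk -v md="$1" '
        $1 == md && $2 == ":" {
            for (i = 3; i <= NF; i++) {
                if ($i !~ /\[[0-9]+\]/) continue   # not a member, e.g. "raid1"
                if ($i ~ /\(F\)/) continue         # skip failed members
                dev = $i
                sub(/\[.*/, "", dev)               # "sda1[2]" -> "sda1"
                sub(/[0-9]+$/, "", dev)            # "sda1"    -> "sda"
                print dev
            }
        }
    '
}

# Usage (as root; review the list before running grub-install):
#   for d in $(md_disks md0 < /proc/mdstat); do grub-install "/dev/$d"; done
```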