mdadm RAID "lost" after reboot

Solution 1

Well, it turns out that as a last hurrah, I re-ran the same "create" command I had used to build the array in the first place and... guess who got his data back!
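For anyone landing here with the same symptoms: this likely worked because re-creating with identical parameters writes fresh superblocks that describe the same data layout. Below is a minimal sketch of a slightly safer variant. It assumes the original device order (sdb, sdc, sde, which matches /dev/sde still reporting slot 2) and the metadata 1.2 format from the mdadm.conf line quoted in the comments. It adds --assume-clean, which was not in the original command, so mdadm skips the initial resync and recomputes nothing if the guess is wrong. The function name recreate_md0 and the RUN variable are hypothetical; by default the helper only prints the command.

```shell
#!/bin/sh
# Hypothetical helper: re-create /dev/md0 over the ORIGINAL members, in the
# ORIGINAL order, without triggering a resync (--assume-clean).
# By default it only prints the command; set RUN=sudo (or RUN=command when
# already root) to actually execute it.
recreate_md0() {
  run=${RUN:-echo}
  $run mdadm --create /dev/md0 --assume-clean --metadata=1.2 \
    --level=5 --raid-devices=3 "$@"
}

# Before running for real, inspect the surviving member, e.g.:
#   mdadm --examine /dev/sde    # note Chunk Size, Data Offset, Device Role
# and afterwards check the data read-only:
#   mount -o ro /dev/md0 /mnt
recreate_md0 /dev/sdb /dev/sdc /dev/sde   # prints the mdadm command, runs nothing
```

Before setting RUN, it is worth comparing the chunk size and data offset that mdadm --examine reports for the old member with those of the new array, since such defaults can differ between mdadm versions.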

Needless to say, I'm going to back up all that good stuff and rebuild my array from scratch. Thanks, everyone, for the help!

Solution 2

Unfortunately, your RAID array is gone. I understand that you provided the fdisk -l output after trying another solution, which unfortunately wiped one of the drives. However, the other one is in bad shape too. After creating a RAID array you should always generate an mdadm.conf file, but that is not the crucial point here: mdadm should be able to reconstruct a RAID array from the member superblocks, and those are missing on two of the three drives. I'm not quite sure what happened, but my suspicion is that you only needed to reassemble the array because of the missing mdadm.conf, and instead you started issuing crazy commands that unfortunately destroyed it.
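A sketch of the "always generate mdadm.conf" step, assuming a Debian/Ubuntu-style layout (the /etc/mdadm prompt in the question suggests one): append the ARRAY line printed by mdadm --detail --scan to /etc/mdadm/mdadm.conf, then run update-initramfs -u so the boot-time copy is refreshed too. The helper append_array_lines is hypothetical; it skips any array whose UUID is already listed, so running it twice does not duplicate entries.

```shell
#!/bin/sh
# Hypothetical helper: read ARRAY lines (e.g. from `mdadm --detail --scan`) on
# stdin and append each one to the config file given as $1, unless a line with
# the same UUID is already present.
append_array_lines() {
  conf=$1
  while IFS= read -r line; do
    case $line in
      ARRAY*UUID=*) ;;    # only ARRAY definitions that carry a UUID
      *) continue ;;
    esac
    uuid=${line##*UUID=}  # text after the last "UUID="
    uuid=${uuid%% *}      # ...up to the next space, if any
    if ! grep -qF "UUID=$uuid" "$conf" 2>/dev/null; then
      printf '%s\n' "$line" >> "$conf"
    fi
  done
}

# Typical use, as root (Debian/Ubuntu paths assumed):
#   mdadm --detail --scan | append_array_lines /etc/mdadm/mdadm.conf
#   update-initramfs -u
```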


Patrick Pruneau


Updated on September 18, 2022

Comments

-

Patrick Pruneau over 1 year

I'm kind of scared right now, so I hope you can shed some light on my problem!

A few weeks ago, I bought a new 2 TB drive and decided to set up a software RAID 5 with mdadm on my HTPC (drives sdb, sdc and sde). So I did a quick Google search and found this tutorial. I then followed the instructions, created a new array, watched /proc/mdstat for the status, etc., and after a couple of hours my array was complete! Joy everywhere: everything was good, and my files were happily accessible. BUT!!

Yesterday, I had to shut down my HTPC to change a fan. After the reboot, oh my oh my, my RAID wasn't mounting. And since I'm quite a "noob" with mdadm, I'm totally lost.

When I run fdisk -l, here is the result:

    xxxxx@HTPC:~$ sudo fdisk -l /dev/sdb /dev/sdc /dev/sde
    Disk /dev/sdb: 1.8 TiB, 2000398934016 bytes, 3907029168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: FD6454FC-BD66-4AB5-8970-28CF6A25D840

    Device     Start        End    Sectors  Size Type
    /dev/sdb1   2048 3907028991 3907026944  1.8T Linux RAID

    Disk /dev/sdc: 1.8 TiB, 2000398934016 bytes, 3907029168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disklabel type: gpt
    Disk identifier: F94D03A1-D7BE-416C-8336-75F1F47D2FD1

    Device     Start        End    Sectors  Size Type
    /dev/sdc1   2048 3907029134 3907027087  1.8T Linux filesystem

    Disk /dev/sde: 1.8 TiB, 2000398934016 bytes, 3907029168 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes

I'm more than confused! For some reason, not only do 2 of the 3 drives have a partition, but those partitions are the ones I deleted in the first place when I followed the tutorial. The reason /dev/sdb1 shows as "Linux RAID" is that I followed another solution on Super User (New mdadm RAID vanish after reboot), without success.

And here is the result of running mdadm --assemble:

    xxxxx@HTPC:/etc/mdadm$ sudo mdadm --assemble --scan -v
    mdadm: looking for devices for /dev/md0
    mdadm: No super block found on /dev/dm-1 (Expected magic a92b4efc, got 00000000)
    mdadm: no RAID superblock on /dev/dm-1
    mdadm: No super block found on /dev/dm-0 (Expected magic a92b4efc, got 0000040e)
    mdadm: no RAID superblock on /dev/dm-0
    mdadm: cannot open device /dev/sr0: No medium found
    mdadm: No super block found on /dev/sdd1 (Expected magic a92b4efc, got 00000401)
    mdadm: no RAID superblock on /dev/sdd1
    mdadm: No super block found on /dev/sdd (Expected magic a92b4efc, got d07f4513)
    mdadm: no RAID superblock on /dev/sdd
    mdadm: No super block found on /dev/sdc1 (Expected magic a92b4efc, got 00000401)
    mdadm: no RAID superblock on /dev/sdc1
    mdadm: No super block found on /dev/sdc (Expected magic a92b4efc, got 00000000)
    mdadm: no RAID superblock on /dev/sdc
    mdadm: No super block found on /dev/sdb1 (Expected magic a92b4efc, got 00000401)
    mdadm: no RAID superblock on /dev/sdb1
    mdadm: No super block found on /dev/sdb (Expected magic a92b4efc, got 00000000)
    mdadm: no RAID superblock on /dev/sdb
    mdadm: No super block found on /dev/sda1 (Expected magic a92b4efc, got f18558c3)
    mdadm: no RAID superblock on /dev/sda1
    mdadm: No super block found on /dev/sda (Expected magic a92b4efc, got 70ce7eb3)
    mdadm: no RAID superblock on /dev/sda
    mdadm: /dev/sde is identified as a member of /dev/md0, slot 2.
    mdadm: no uptodate device for slot 0 of /dev/md0
    mdadm: no uptodate device for slot 1 of /dev/md0
    mdadm: added /dev/sde to /dev/md0 as 2
    mdadm: /dev/md0 assembled from 1 drive - not enough to start the array.

I already checked with smartmontools and all the drives are "healthy". Is there anything that could be done to save my data? After some research, it seems that tutorial wasn't the best one, but... hell, everything was working for a while.
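The "Expected magic a92b4efc" lines refer to the md superblock's magic number. With metadata 1.2 (the format in the mdadm.conf line quoted further down), the superblock sits 4 KiB from the start of each member and begins with that number stored little-endian, i.e. the bytes fc 4e 2b a9. That offset also hints at what may have happened: on these 512-byte-sector disks a primary GPT (protective MBR, header and the default 128-entry table) fills roughly the first 17 KiB, so writing a GPT onto /dev/sdb or /dev/sdc would plausibly overwrite the superblock, while /dev/sde, which has no partition table, still reports itself as slot 2. A minimal read-only sketch to peek at those four bytes; has_md12_magic is a hypothetical helper, and mdadm --examine remains the proper tool.

```shell
#!/bin/sh
# Hypothetical read-only check: does $1 (a block device or image file) carry an
# md v1.2 superblock magic? v1.2 places the superblock 4096 bytes from the
# start; its first field is the magic 0xa92b4efc stored little-endian.
has_md12_magic() {
  bytes=$(dd if="$1" bs=1 skip=4096 count=4 2>/dev/null | od -An -tx1 | tr -d ' \n')
  [ "$bytes" = "fc4e2ba9" ]
}

# Example (as root, reads only):
#   for d in /dev/sdb /dev/sdc /dev/sde; do
#     if has_md12_magic "$d"; then echo "$d: md 1.2 superblock"; else echo "$d: none"; fi
#   done
```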

UPDATE: By sheer luck, I found the exact command I used to create the array in my bash_history!

    sudo mdadm --create --verbose /dev/md0 --level=5 --raid-devices=3 /dev/sdb /dev/sdc /dev/sde

Maybe, just maybe, I should run it again to bring my RAID back to life? My only concern is getting back "some" of the data on those drives. I'll set everything up again from a clean slate after that.

-

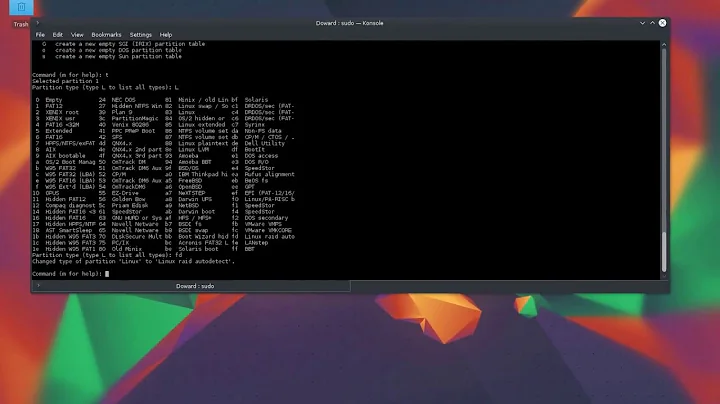

Patrick Pruneau over 6 years

Crazy idea: perhaps I should drop the GPT table on one drive (e.g. /dev/sdb) so that it would be "the same" as /dev/sde? I don't remember whether I listed the drives alphabetically (damn!), but I used the same command as in the tutorial. So I targeted /dev/sdb, and definitely not /dev/sdb1.

-

Damon over 6 years

I would say you should partition your drives identically, with partitions slightly smaller than the drive. Currently all three drives are different; even the two with partitions do NOT match (sdb1 ends at sector 3907028991, sdc1 at 3907029134). I suspect this is somehow part of the problem you ran into (pure speculation). If partitions are used, they should ALL be Linux RAID partitions. From my understanding you can do this without partitions; I just personally would not.

-

Patrick Pruneau over 6 years

Well, that's the weird part. I didn't use partitions, and I'm (almost) sure I deleted the partitions that were on the drives before creating the RAID array. So I have no idea why they are still there...

-

Damon over 6 years

Did you actually "write" the partition tables to the disks? That doesn't happen automatically as you work through the commands (in fdisk, nothing is saved until you use w). In other words, did the fdisk output change after partitioning? I suspect the partition tables were never written.

-

Patrick Pruneau over 6 years

Do you mean when I created the RAID? If so, no, since I didn't know! But if you're talking about the "old" partitions, I used GParted (I know, bad GUI, bad!) and applied the changes.

-


Patrick Pruneau over 6 years

My mdadm.conf was "correctly" configured (as far as I know). I followed the instructions and added this line to the file:

    ARRAY /dev/md0 metadata=1.2 name=HTPCPAT:0 UUID=a31cc1b9:502dc85a:3c507697:d6859f80

I do believe I forgot something somewhere (like completely wiping my drives before creating the array), but I can't wrap my mind around the fact that everything worked for a couple of weeks before the restart.

-

Menion over 6 years

Can you remember which commands you used when trying to reassemble the array after you found it unmounted after the reboot? Another important piece of information: was it the very first reboot after creating the array?

-

Patrick Pruneau over 6 years

Yes, that was the first reboot. And if I remember correctly, the "first" command I issued was something like "sudo mdadm --assemble --scan -v".

-

Menion over 6 years

OK. Anyhow, I'm afraid the array is lost beyond any possibility of self-recovery. A professional recovery service might be able to retrieve some data, but that is usually crazy expensive for RAID arrays.

-

Patrick Pruneau over 6 years

I was kind of scared that would be the answer :( Any good recommendations on how to set this up correctly this time? From what I understand, the "best way" would be to create an equal-size partition on each of the 3 drives and build the array on those partitions. Is that right?

-

Menion over 6 years

digitalocean.com/community/tutorials/… Follow the "create RAID 5" instructions, but first you must zero the superblock on any drive detected as a RAID member. Also make sure everything works across a reboot. And no, it is recommended to build the RAID on whole physical devices, not on partitions.
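One concrete thing to check before that first reboot is that the initial build has finished. This is a sketch assuming the usual /proc/mdstat layout, where a complete 3-drive RAID 5 shows [3/3] [UUU], while an array that is still building shows an underscore in the member map plus a recovery or resync progress line. md_ready is a hypothetical helper; it takes an optional file argument so it can also be tried on a saved copy of /proc/mdstat.

```shell
#!/bin/sh
# Hypothetical check: succeed only if array $1 (e.g. md0) is listed in the
# mdstat-formatted file $2 (default /proc/mdstat) as active, with no missing
# member ("_" in the [UUU] map) and no resync/recovery/reshape in progress.
md_ready() {
  awk -v md="$1" '
    $2 == ":" { inblock = ($1 == md); if (inblock) found = ($3 == "active"); next }
    NF == 0   { inblock = 0; next }                  # blank line ends a block
    inblock && /\[U*_[U_]*]/ { degraded = 1 }         # e.g. [UU_]
    inblock && /resync|recovery|reshape/ { busy = 1 }
    END { ok = found && !degraded && !busy; exit(ok ? 0 : 1) }
  ' "${2:-/proc/mdstat}"
}

# Example: md_ready md0 && echo "md0 is fully built; time to test a reboot"
```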

-

Damon over 6 years

@Menion Partitions that are a bit smaller than the full disk help guarantee that a replacement disk will be large enough. If a new disk is even one block smaller, it cannot be added to the array. Using a slightly smaller partition allows for that contingency.
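To put numbers on that margin: fdisk reports 3907029168 sectors for each of these disks, and with GPT the last usable sector is the total minus 34 (which is exactly sdc1's end, 3907029134). Below is a sketch that computes a partition end a chosen margin below that point, rounded down to a 1 MiB (2048-sector) boundary. The helper raid_part_end and its 100 MiB default margin are assumptions for illustration, not a recommendation made in this thread. With a margin of 0 it reproduces sdb1's end of 3907028991.

```shell
#!/bin/sh
# Hypothetical helper: last sector for a RAID member partition that stops
# $2 MiB (default 100, an arbitrary margin) short of the end of a GPT disk
# with $1 512-byte sectors, ending just before a 1 MiB boundary.
raid_part_end() {
  total=$1
  margin_mib=${2:-100}
  align=2048                          # 1 MiB in 512-byte sectors
  last_usable=$((total - 34))         # backup GPT occupies the last 33 sectors
  end=$((last_usable - margin_mib * align))
  echo $(( (end + 1) / align * align - 1 ))
}

raid_part_end 3907029168        # prints 3906824191
raid_part_end 3907029168 0      # prints 3907028991, same end as /dev/sdb1

# The same end could then be used for an identical partition on every drive,
# e.g. with sgdisk (fd00 = Linux RAID; /dev/sdX is a placeholder):
#   sgdisk -n 1:2048:3906824191 -t 1:fd00 /dev/sdX
```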