Creating a RAID1 partition with mdadm on Ubuntu

Solution 1

Finally some progress!

dmraid was indeed the culprit, as mdadm's Wikipedia entry suggested. I removed the dmraid packages (and ran update-initramfs, though I'm not sure whether that was relevant).

After that and a reboot, the devices under /dev/mapper were gone (which is fine, as I don't need to access the Windows NTFS partitions on Linux):

$ ls /dev/mapper/

control
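The same check can be scripted. The following is only a sketch: the function name leftover_dm_nodes and its optional directory argument are made up for illustration, and it simply lists every node in /dev/mapper other than "control".

```shell
#!/bin/sh
# List device-mapper nodes other than the always-present "control" node.
# The optional directory argument is a hypothetical convenience so the
# check can be pointed at another directory; it defaults to /dev/mapper.
leftover_dm_nodes() {
    dir="${1:-/dev/mapper}"
    for node in "$dir"/*; do
        [ -e "$node" ] || continue      # empty directory: the glob stays literal
        name=$(basename "$node")
        [ "$name" = "control" ] || printf '%s\n' "$name"
    done
}
```

If leftover_dm_nodes prints nothing, no dmraid mappings (such as the isw_* arrays) remain.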

And, most importantly, mdadm --create works!

$ sudo mdadm -Cv -l1 -n2 /dev/md0 /dev/sda4 /dev/sdb4

mdadm: size set to 241095104K

mdadm: array /dev/md0 started.

I checked /proc/mdstat and mdadm --detail /dev/md0 and both show that everything is fine with the newly created array.

$ cat /proc/mdstat

[..]

md0 : active raid1 sdb4[1] sda4[0]

241095104 blocks [2/2] [UU]

[==========>..........] resync = 53.1% (128205632/241095104) finish=251.2min speed=7488K/sec
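To keep an eye on the resync without reading the whole file, something like the following could be used. It is a sketch only: resync_progress is a hypothetical helper name, and it assumes the /proc/mdstat layout shown above (an "mdX : ..." line followed by a "resync = NN.N%" line).

```shell
#!/bin/sh
# Print "<array> <percent>" for each array that is resyncing or recovering.
# Reads mdstat-formatted text from the file given as $1 (default /proc/mdstat).
resync_progress() {
    awk '
        /^md[^ ]* :/ { array = $1 }              # remember the current array name
        /(resync|recovery) *=/ {
            for (i = 1; i <= NF; i++)
                if ($i ~ /%$/) print array, $i   # the field ending in "%"
        }
    ' "${1:-/proc/mdstat}"
}
```

Running resync_progress with no argument reads the live /proc/mdstat; it prints nothing once the array is fully synced.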

Then I created a filesystem on the new partition:

$ sudo mkfs.ext4 /dev/md0

And finally just mounted the thing under /opt (& updated /etc/fstab). (I could of course have used LVM here too, but frankly in this case I didn't see any point in that, and I've already wasted enough time trying to get this working...)
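For reference, the fstab entry could look roughly like what this sketch generates. The helper name fstab_line is hypothetical, and the mount options ("defaults") and fsck pass number (2) are assumptions rather than the values I necessarily used.

```shell
#!/bin/sh
# Build an /etc/fstab line for a filesystem on an md device.
# Arguments: device, mount point, filesystem type (default ext4).
# "defaults" and the fsck pass number 2 are assumed values.
fstab_line() {
    printf '%s\t%s\t%s\tdefaults\t0\t2\n' "$1" "$2" "${3:-ext4}"
}
```

For example, fstab_line /dev/md0 /opt prints a tab-separated line that can be reviewed and then appended to /etc/fstab by hand.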

So now the RAID partition is ready to use, and I've got plenty of disk space. :-)

$ df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sdc5 70G 52G 15G 79% /

/dev/md0 227G 188M 215G 1% /opt

Update: there are still some issues with this RAID device of mine. Upon reboot, it fails to mount even though I have it in fstab, and sometimes (after reboot) it appears to be in an inactive state and cannot be mounted even manually. See the follow-up question I posted.

Solution 2

sudo mdadm -Cv /dev/md0 -l1 -n2 /dev/sd{a,b}4

mdadm: Cannot open /dev/sda4: Device or resource busy

mdadm: create aborted

This trick worked for me: check /proc/mdstat and note the device name stated there (e.g. md_d0), then:

sudo mdadm --stop /dev/md_d0

Now the create command should work.
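The lookup in /proc/mdstat can be scripted as in the sketch below. The function names md_arrays and print_stop_commands are made up for illustration; the second one only prints the mdadm --stop commands so they can be reviewed before anything is run.

```shell
#!/bin/sh
# Print the name of every md array (active or inactive) listed in
# mdstat-formatted text. Reads $1 (default /proc/mdstat).
md_arrays() {
    awk '/^md[^ ]* :/ { print $1 }' "${1:-/proc/mdstat}"
}

# Print, but do not run, the stop command for each array found.
print_stop_commands() {
    md_arrays "$1" | while read -r name; do
        printf 'sudo mdadm --stop /dev/%s\n' "$name"
    done
}
```

Only stop an array that is a stale, auto-assembled leftover; stopping an array that is in use will take its filesystem offline.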

Solution 3

Maybe a stupid answer, but are you sure sd{a,b}4 is not mounted anywhere by Ubuntu?

And what motherboard do you have, since you mention hardware RAID? It may actually be some form of software RAID, and that may be what is interfering here.
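A quick way to rule out the mount question is to look the device up in /proc/mounts. This is a minimal sketch; is_mounted is a hypothetical helper name, and the optional second argument exists only so the check can be run against a different mounts table.

```shell
#!/bin/sh
# Succeed (exit status 0) if the given device is listed as mounted.
# $1: device path, $2: mounts table to read (default /proc/mounts).
is_mounted() {
    awk -v dev="$1" '$1 == dev { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}"
}
```

For example: is_mounted /dev/sda4 && echo "sda4 is mounted". Note that swap partitions are listed in /proc/swaps rather than /proc/mounts, so this check does not cover them.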

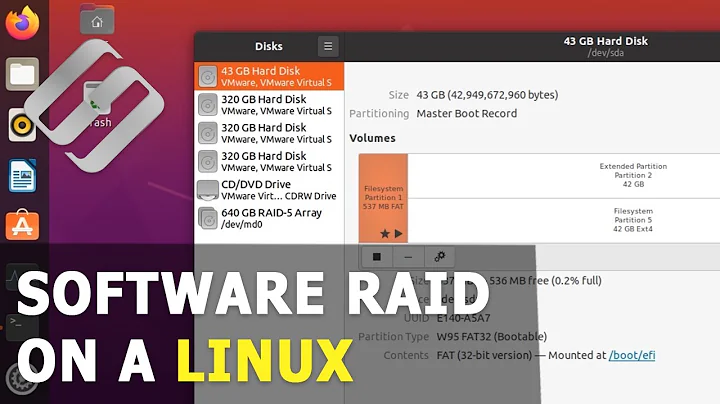

Related videos on Youtube

Assaf Levy

I'm a software developer by profession, and more active on Stack Overflow. I used to be more of a Linux geek, wasting a lot of free time tweaking my system, following Slashdot, etc. Fortunately I'm mostly past that. :) Nowadays I mainly use Mac at home, while still strongly preferring Linux (Ubuntu these days) for development at work.

Updated on September 17, 2022

Comments

-

Assaf Levy over 1 year

I'm trying to set up a RAID1 partition on my Ubuntu 9.10 workstation.

On this dual-boot system, Ubuntu is running from a separate drive (/dev/sdc, an SSD that is quite small, which is why I need more disk space). Besides that, there are two traditional 500 GB hard drives, which have Windows 7 installed (I want to keep the Windows installation intact) and about half of their space unallocated. This space is where I want to set up a single, large RAID1 partition for Linux.

(This, to my understanding, would be software RAID, whereas the Windows partitions are on hardware RAID. I hope this isn't a problem... Edit: See Peter's comment. I guess this shouldn't be a problem since I see both drives separately on Linux.)

On both disks, /dev/sda and /dev/sdb, I created, using fdisk, identical new partitions of type "Linux raid autodetect" to fill up the unallocated space.

Device Boot Start End Blocks Id System

/dev/sda1 1 10 80293+ de Dell Utility

/dev/sda2 * 11 106 768000 7 HPFS/NTFS

Partition 2 does not end on cylinder boundary.

/dev/sda3 106 30787 246439936 7 HPFS/NTFS

/dev/sda4 30787 60801 241095200+ fd Linux raid autodetect

So, I would like to create a RAID array from /dev/sda4 and /dev/sdb4 using mdadm. But I don't seem to get it working:

$ sudo mdadm -Cv /dev/md0 -l1 -n2 /dev/sd{a,b}4

mdadm: Cannot open /dev/sda4: Device or resource busy

mdadm: Cannot open /dev/sdb4: Device or resource busy

mdadm: create aborted

After booting the machine, the same command yields:

$ sudo mdadm -Cv /dev/md0 -l1 -n2 /dev/sda4 /dev/sdb4

mdadm: Cannot open /dev/sda4: No such file or directory

mdadm: Cannot open /dev/sdb4: No such file or directory

So now it seems that the devices are not automatically detected in boot... Using fdisk both sda and sdb still look correct though.

Edit: After another reboot the devices are back:

$ ls /dev/sd*

/dev/sda /dev/sda2 /dev/sda4 /dev/sdb1 /dev/sdb3 /dev/sdc /dev/sdc2

/dev/sda1 /dev/sda3 /dev/sdb /dev/sdb2 /dev/sdb4 /dev/sdc1 /dev/sdc5

But so is "Device or resource busy" when trying to create the RAID array. Quite strange. Any help would be appreciated!

Update: Could the device mapper have something to do with this? How do /dev/mapper and dmraid relate to all this mdadm stuff anyway? Both provide software RAID, but.. differently? Sorry for my ignorance here.

Under /dev/mapper/ there are some device files that, I think, somehow match the 3 Windows RAID partitions (sd{a,b}1 through sd{a,b}3). I don't know why there are four of these arrays though.

$ ls /dev/mapper/

control isw_dgjjcdcegc_ARRAY1 isw_dgjjcdcegc_ARRAY3

isw_dgjjcdcegc_ARRAY isw_dgjjcdcegc_ARRAY2

Resolution: It was the mdadm Wikipedia article that pushed me in the right direction. I posted details on how I got everything working in this answer.

-

Admin over 14 years

If your Windows were on a hardware RAID, you wouldn't be able to "see" /dev/sda and /dev/sdb as separate drives.

-

Admin over 14 years

@Peter: Ok, thanks. I don't know how the RAID for Windows works, but in that case it shouldn't be a problem on Linux side, at any rate.

-

Admin over 14 years

I think I found something: en.wikipedia.org/wiki/Mdadm#Known_problems "A common error when creating RAID devices is that the dmraid-driver has taken control of all the devices that are to be used in the new RAID device. Error-messages like this will occur: mdadm: Cannot open /dev/sdb1: Device or resource busy". I'll try what the Wiki article suggests.

-

Assaf Levy over 14 years

No, sd{a,b}4 were not mounted. In a way different RAID systems indeed disturbed each other... see full explanation: superuser.com/questions/101630/…

-

quack quixote over 14 years

it's perfectly appropriate to answer your own question here. you can accept your own answer as well. good work getting it figured out; the question was well-put and this answer is a nice write-up of the situation.

-

Assaf Levy over 14 years

Yeah, I know (and will accept when the time limit allows). I just felt like adding that comment, being a little disappointed / amused by having essentially used SU only as a "rubber duck". :-) But no matter – I'm glad I eventually got it all working.