Spark java.lang.OutOfMemoryError : Java Heap space

Solution 1

Things I would try:

1) Removing spark.memory.offHeap.enabled=true and increasing driver memory to something like 90% of the available memory on the box. You probably are aware of this since you didn't set executor memory, but in local mode the driver and the executor all run in the same process which is controlled by driver-memory. I haven't tried it, but the offHeap feature sounds like it has limited value. Reference

2) An actual cluster instead of local mode. More nodes will obviously give you more RAM.

3a) If you want to stick with local mode, try using less cores. You can do this by specifying the number of cores to use in the master setting like --master local[4] instead of local[*] which uses all of them. Running with less threads simultaneously processing data will lead to less data in RAM at any given time.

3b) If you move to a cluster, you may also want to tweak the number of executors cores for the same reason as mentioned above. You can do this with the --executor-cores flag.

4) Try with more partitions. In your example code you repartitioned to 500 partitions, maybe try 1000, or 2000? More partitions means each partition is smaller and less memory pressure.

Solution 2

Usually, this error is thrown when there is insufficient space to allocate an object in the Java heap. In this case, The garbage collector cannot make space available to accommodate a new object, and the heap cannot be expanded further. Also, this error may be thrown when there is insufficient native memory to support the loading of a Java class. In a rare instance, a java.lang.OutOfMemoryError may be thrown when an excessive amount of time is being spent doing garbage collection and little memory is being freed.

How to fix error :

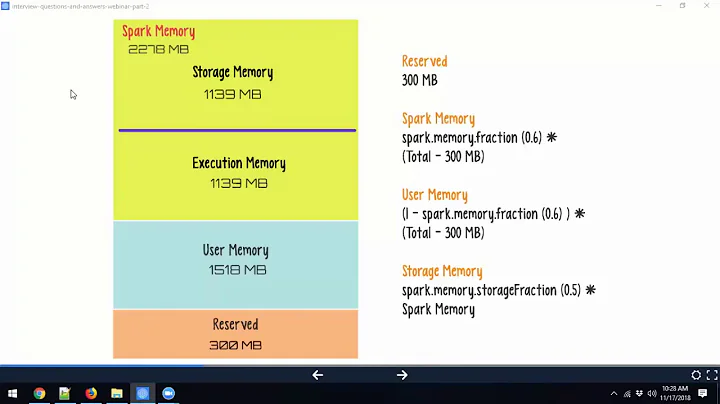

How to set Apache Spark Executor memory

Spark java.lang.OutOfMemoryError: Java heap space

Related videos on Youtube

user3245722

Updated on June 04, 2022Comments

-

user3245722 about 2 years

I am geting the above error when i run a model training pipeline with spark

`val inputData = spark.read .option("header", true) .option("mode","DROPMALFORMED") .csv(input) .repartition(500) .toDF("b", "c") .withColumn("b", lower(col("b"))) .withColumn("c", lower(col("c"))) .toDF("b", "c") .na.drop()`inputData has about 25 million rows and is about 2gb in size. the model building phase happens like so

val tokenizer = new Tokenizer() .setInputCol("c") .setOutputCol("tokens") val cvSpec = new CountVectorizer() .setInputCol("tokens") .setOutputCol("features") .setMinDF(minDF) .setVocabSize(vocabSize) val nb = new NaiveBayes() .setLabelCol("bi") .setFeaturesCol("features") .setPredictionCol("prediction") .setSmoothing(smoothing) new Pipeline().setStages(Array(tokenizer, cvSpec, nb)).fit(inputData)I am running the above spark jobs locally in a machine with 16gb RAM using the following command

spark-submit --class holmes.model.building.ModelBuilder ./holmes-model-building/target/scala-2.11/holmes-model-building_2.11-1.0.0-SNAPSHOT-7d6978.jar --master local[*] --conf spark.serializer=org.apache.spark.serializer.KryoSerializer --conf spark.kryoserializer.buffer.max=2000m --conf spark.driver.maxResultSize=2g --conf spark.rpc.message.maxSize=1024 --conf spark.memory.offHeap.enabled=true --conf spark.memory.offHeap.size=50g --driver-memory=12gThe oom error is triggered by (at the bottow of the stack trace) by org.apache.spark.util.collection.ExternalSorter.writePartitionedFile(ExternalSorter.scala:706)

Logs :

Caused by: java.lang.OutOfMemoryError: Java heap space at java.lang.reflect.Array.newInstance(Array.java:75) at java.io.ObjectInputStream.readArray(ObjectInputStream.java:1897) at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1529) java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2027) at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1535) org.apache.spark.util.collection.ExternalSorter.writePartitionedFile(ExternalSorter.scala:706)Any suggestions will be great :)

-

vaquar khan about 6 yearsPlease add full logs

vaquar khan about 6 yearsPlease add full logs -

user3245722 about 6 years˚Caused by: java.lang.OutOfMemoryError: Java heap space at java.lang.reflect.Array.newInstance(Array.java:75) at java.io.ObjectInputStream.readArray(ObjectInputStream.java:1897) at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1529) java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2027) at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1535) org.apache.spark.util.collection.ExternalSorter.writePartitionedFile(ExternalSorter.scala:706)

-

ranger_sim_g about 3 yearsDoes this answer your question? Spark java.lang.OutOfMemoryError: Java heap space

-

-

user3245722 about 6 yearsThank you Ryan. I will try out your suggestions.

![[FIX] How to Solve java.lang.OutOfMemoryError Java Heap Space](https://i.ytimg.com/vi/vmI8rdV9EOo/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLCVtnnA5nDdmmE6CDyv7tJSsxx2Uw)