Store orientation to an array - and compare

Solution 1

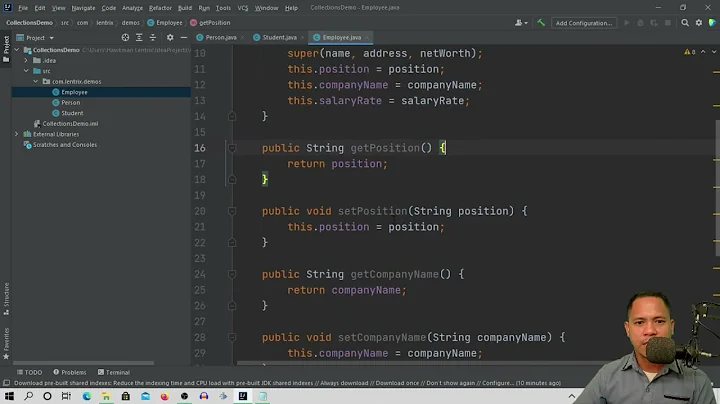

Try dynamic time warping. Here is an illustrative example with 1D arrays. In the database we already have the following 2 arrays:

Array 1: [5, 3, 1]

Array 2: [1, 3, 5, 8, 8]

We measured [2, 4, 6, 7]. Which array is the most similar to the newly measured one? Obviously, the second array is similar to the new measurement and the first is not.

Let's compute the cost matrices according to this paper, subsection 2.1:

D(i,j)=Dist(i,j)+MIN(D(i-1,j),D(i,j-1),D(i-1,j-1))

Here D(i,j) is the (i,j) element of the cost matrix; see below. Check Figure 3 of that paper to see how this recurrence relation is applied. In short: the columns are computed one after another, starting from D(1,1); D(0,*) and D(*,0) are left out of the MIN. If we are comparing arrays A and B, then Dist(i,j) is the distance between A[i] and B[j]. I simply used ABS(A[i]-B[j]). The cost matrices for this example:
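Worked through by hand, with one row per element of the stored array (top to bottom) and one column per measured value 2, 4, 6, 7 (left to right), the recurrence gives:

$$
D_{\text{Array 1}} =
\begin{bmatrix}
3 & 4 & 5 & 7 \\
4 & 4 & 7 & 9 \\
5 & 7 & 9 & 13
\end{bmatrix}
\qquad
D_{\text{Array 2}} =
\begin{bmatrix}
1 & 4 & 9 & 15 \\
2 & 2 & 5 & 9 \\
5 & 3 & 3 & 5 \\
11 & 7 & 5 & 4 \\
17 & 11 & 7 & 5
\end{bmatrix}
$$

For example, D(2,2) for Array 2 is |3 - 4| + MIN(4, 2, 1) = 2. The score is the bottom-right entry.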

For Array 1 we have 13 as score, for Array 2 we have 5. The lower score wins, so the most similar array is Array 2. The best warping path is marked gray.

This is only a sketch of DTW. There are a number of issues you have to address in a real-world application, for example using an offset instead of fixed end points, or defining measures of fit: see this paper, page 363 (Section 5, boundary conditions) and page 364. The paper linked above has further details too.

I just noticed you are using yaw, pitch and roll. Simply put: don't, and there is yet another reason not to. Can you use the accelerometer data instead? "An accelerometer is a direct measurement of orientation" (from the DCM manuscript), and that is what you need. As for tc's question: does the orientation relative to North matter? I guess not.

It is far easier to compare acceleration vectors than orientations (Euler angles, rotation matrices, quaternions), as tc pointed out. If you use acceleration data, you have a three-dimensional vector, the (x, y, z) coordinates, at each time point. I would simply compute

Dist(i,j)=SQRT((A[i][X]-B[j][X])^2+(A[i][Y]-B[j][Y])^2+(A[i][Z]-B[j][Z])^2),

that is, the Euclidean distance between the two points.

Solution 2

I think Ali's approach is in general a good way to go, but there is a general problem called gimbal lock (see also the SO discussions on this topic) when using Euler angles, i.e. pitch, roll and yaw. You will run into it when you record a more complex movement that lasts longer than a few ticks and thus leads to large angle deltas in different angular directions.

In a nutshell, this means that you can have more than one mathematical representation for the same position, depending on the order of the movements you made to get there, and on the other hand you lose information. Consider an airplane flying from left to right. The X axis points from left to right, the Y axis points up into the air. The following two movement sequences lead to the same end position, although you get there in totally different ways:

Sequence A:

- Rotation around yaw +90°

- Rotation around pitch +90°

Sequence B:

- Rotation around pitch +90°

- Rotation around roll +90°

In both cases your airplane points down to the ground and you can see its bottom from your position.

The only solution to this is to avoid Euler angles, which makes things more complicated. Quaternions are the best way to deal with this, but it took me a while to get an idea of this pretty abstract representation. OK, this answer doesn't take you any further with your original problem, but it might help you avoid wasting time. Maybe you can make some conceptual changes to your idea.

Kay

Mikael

Updated on June 20, 2020

Comments

-

Mikael almost 4 years

I want to achieve the following:

I want the user to be able to "record" the movement of the iPhone using the gyroscope. And after that, the user should be able to replicate the same movement. I extract the pitch, roll and yaw using:

```objc
// Receive device-motion updates on the current queue.
[self.motionManager startDeviceMotionUpdatesToQueue:[NSOperationQueue currentQueue]
                                        withHandler:^(CMDeviceMotion *motion, NSError *error) {
    CMAttitude *attitude = motion.attitude;
    // Euler angles of the current attitude, in radians.
    NSLog(@"pitch: %f, roll: %f, yaw: %f", attitude.pitch, attitude.roll, attitude.yaw);
}];
```

I'm thinking that I could store these values in an array while the user is in record mode. When the user tries to replicate that movement, I could compare the replicated movement array to the recorded one. The thing is, how can I compare the two arrays in a smart way? They will never have exactly the same values, but they can be roughly the same.

Am I at all on the right track here?

UPDATE: I think Ali's answer about using DTW could be the right way for me here. But I'm not that smart (apparently), so if anyone could help me out with the first steps of comparing two arrays, I would be a happy man!

Thanks!

-

tc. almost 13 years: Do you want to compare orientation (rotation), acceleration (~= movement), or both? Does orientation relative to the ground matter?

-

Mikael almost 13 years: Thanks Ali, I will have a go at it tomorrow. Then I will let you know how it goes :)

-

Mikael almost 13 years: @tc just the movement. It doesn't have to be relative to the ground.

-

tc. almost 13 years: So orientation or acceleration? Or both? Or something else?

-

Ali almost 13 years: @zebulon I am glad you found my answer useful; thanks for the bounty. Please let me know how DTW worked out for you.

-

Mikael almost 13 years: @Ali Yes, thanks! Right now the client decided not to go down that road. But I'm still gonna try to get it to work; it might be really useful in the future.

-

Infinite Possibilities about 11 years: @Mikael have you succeeded with the coding? Are you planning to share it?

-

Mikael almost 13 years: Ooo, that was complex. I'm trying to figure out how it works. Thanks!

-

tc. almost 13 years: Quaternions have the "double cover" problem instead (fortunately not as terrible). Either way, you need to compare the "distance" between two orientations, which isn't particularly trivial with any of the representations.

-

murt over 8 years: @Mikael I think that, because of this answer, the title of this question should be changed to make it easier for future users to find. In addition, I strongly recommend using the accelerometer for movement recognition, without removing the influence of gravity and without additional integration, to avoid noise. Gravity is then distributed over the axes and gives your data a distinctive value that includes information about orientation. Do not integrate the data, because it will slow down your measurement. I've written a thesis on DTW and accelerometer (ACC) data, so trust me. Great, short, meaningful answer; thanks from me too.

Bruce Yo over 7 years: @murt is the acceleration data like Dist(i,j)/t, where "t" is the time interval of A and B? How does acceleration data include information about orientation? Is it because it is calculated from orientation data? Could you share some of your published papers on this topic? Thanks in advance!

murt over 7 years: @BruceYo the data is in the form D(x,y,z), and it includes gravity. So if you stand your phone on its bottom edge, one of the axes will show a value of about 9.8 m/s^2 (1 g). Now if you move the smartphone, each axis will be influenced by gravity, so the final force on each axis will contain a share of gravity, which, let's say, imitates an orientation. Of course it is not straightforward: you cannot determine where north, east, etc. are; it is more like the position of your phone with respect to gravity. GitHub: Murt/GestureRecognition
