tensorflow:Your input ran out of data

Solution 1

To make sure that you have "at least steps_per_epoch * epochs batches", set steps_per_epoch to:

steps_per_epoch = len(X_train)//batch_size

validation_steps = len(X_test)//batch_size # if you have validation data

You can see the maximum number of batches that model.fit() can take from the progress bar at the point where training is interrupted:

5230/10000 [==============>...............] - ETA: 2:05:22 - loss: 0.0570

Here, the maximum would be 5230 - 1 = 5229 batches.

Importantly, keep in mind that by default, batch_size is 32 in model.fit().

If you're using a tf.data.Dataset, you can also add the repeat() method, but be careful: it will loop indefinitely (unless you specify a number).
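A minimal sketch of the repeat() behaviour described above, assuming TensorFlow 2.3 or later (for Dataset.cardinality()). The toy data (10 integers, batch size 4) is made up purely for illustration:

```python
import tensorflow as tf

# Hypothetical toy data: 10 examples, batch size 4 -> 3 batches per pass
# (the last batch holds only 2 examples).
features = tf.range(10)
dataset = tf.data.Dataset.from_tensor_slices(features).batch(4)
batches_per_pass = int(dataset.cardinality().numpy())

# repeat(2) makes exactly two passes over the data available.
finite = dataset.repeat(2)
finite_count = sum(1 for _ in finite)

# repeat() with no argument never ends, so steps_per_epoch must be set
# when such a dataset is passed to model.fit().
infinite = dataset.repeat()
is_infinite = bool(infinite.cardinality() == tf.data.INFINITE_CARDINALITY)

# An infinite dataset can still be cut to a fixed number of batches.
seven_batches = sum(1 for _ in infinite.take(7))
```

With an infinite dataset, steps_per_epoch is what tells Keras where one epoch ends.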

Solution 2

I have also had a number of models crash with the same warning while trying to train them. The training dataset is created using tf.keras.preprocessing.image_dataset_from_directory() and split 80/20. I created a variable to try to avoid running out of images. I am using ResNet50 with my own images.

TRAIN_STEPS_PER_EPOCH = np.ceil((image_count*0.8/BATCH_SIZE)-1)

# to ensure that there are enough images for each training batch

VAL_STEPS_PER_EPOCH = np.ceil((image_count*0.2/BATCH_SIZE)-1)

But it still runs out of data. BATCH_SIZE is set to 32, so I am taking 80% of the number of images, dividing by 32, and then subtracting 1 to leave a surplus, or so I thought.

history = model.fit(
    train_ds,
    steps_per_epoch=TRAIN_STEPS_PER_EPOCH,
    epochs=EPOCHS,
    verbose=1,
    validation_data=val_ds,
    validation_steps=VAL_STEPS_PER_EPOCH,
    callbacks=tensorboard_callback)

The error, after 3 hours of processing and a single successful epoch, is:

Epoch 1/25
374/374 [==============================] - 8133s 22s/step - loss: 7.0126 - accuracy: 0.0028 - val_loss: 6.8585 - val_accuracy: 0.0000e+00
Epoch 2/25
1/374 [..............................] - ETA: 0s - loss: 6.0445 - accuracy: 0.0000e+00
WARNING:tensorflow:Your input ran out of data; interrupting training. Make sure that your dataset or generator can generate at least steps_per_epoch * epochs batches (in this case, 9350.0 batches). You may need to use the repeat() function when building your dataset.

This might help:

print(train_ds)
<BatchDataset shapes: ((None, 224, 224, 3), (None,)), types: (tf.float32, tf.int32)>

print(val_ds)
<BatchDataset shapes: ((None, 224, 224, 3), (None,)), types: (tf.float32, tf.int32)>

print(TRAIN_STEPS_PER_EPOCH)
374.0

print(VAL_STEPS_PER_EPOCH)
93.0
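The numbers in this log fit one plausible explanation: when steps_per_epoch is set and the dataset does not repeat, Keras keeps drawing from the same pass over the data instead of restarting it each epoch. The plain-Python sketch below only simulates that behaviour and does not call TensorFlow. The image count of 15000 is an assumption chosen because it reproduces the 374 and 93 printed above; the real count is not given in the post.

```python
import math

# Assumption for illustration only: 15000 images in total, which reproduces
# the 374.0 and 93.0 values printed above. The real count is unknown.
image_count = 15000
batch_size = 32
epochs = 25

train_images = image_count * 8 // 10                          # 80% split
steps_per_epoch = math.ceil(train_images / batch_size - 1)     # formula from the post
batches_in_one_pass = math.ceil(train_images / batch_size)     # what the dataset yields once
required_batches = steps_per_epoch * epochs                    # what the warning asks for


def simulate(available_batches, steps_per_epoch, epochs):
    """Steps completed per epoch when one non-repeating iterator is shared by all epochs."""
    exhausted = object()
    iterator = iter(range(available_batches))
    completed = []
    for _ in range(epochs):
        done = 0
        for _ in range(steps_per_epoch):
            if next(iterator, exhausted) is exhausted:
                break
            done += 1
        completed.append(done)
        if done < steps_per_epoch:
            break  # input ran out of data; training is interrupted
    return completed


without_repeat = simulate(batches_in_one_pass, steps_per_epoch, epochs)
with_enough_data = simulate(required_batches, steps_per_epoch, epochs)
```

Under these assumptions the first epoch completes all 374 steps and the second stops after a single step, which matches the "1/374" line in the log. Either calling repeat() on the dataset or leaving out steps_per_epoch and validation_steps avoids this.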

Solution 3

I had the same problem, and decreasing validation_steps from 50 to 10 solved the issue.

Solution 4

The solution that worked for me was to set drop_remainder=True while generating the dataset. This discards the final batch when it has fewer elements than the batch size, so every batch the dataset yields is full.

For example:

dataset = tf.data.Dataset.from_tensor_slices((images, targets)) \
    .batch(12, drop_remainder=True)
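To see what drop_remainder actually changes, here is a small sketch with made-up data: 50 zero-filled examples, chosen only so that the last batch is partial. It assumes eager execution (TensorFlow 2.x):

```python
import tensorflow as tf

# Hypothetical toy data: 50 examples with one feature each.
images = tf.zeros([50, 1])
targets = tf.zeros([50], dtype=tf.int32)

base = tf.data.Dataset.from_tensor_slices((images, targets))

# Default batching keeps the short final batch of 2 examples.
with_partial = base.batch(12)
# drop_remainder=True discards it, leaving only full batches.
full_only = base.batch(12, drop_remainder=True)

sizes_with_partial = [int(x.shape[0]) for x, _ in with_partial]
sizes_full_only = [int(x.shape[0]) for x, _ in full_only]
```

Note that drop_remainder does not create more batches. It yields one fewer batch when the data does not divide evenly, so steps_per_epoch still has to stay within the number of full batches.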

Solution 5

If you create a dataset with image_dataset_from_directory, remove the steps_per_epoch and validation_steps parameters from model.fit.

The reason is that the number of steps is already determined when batch_size is passed to image_dataset_from_directory. You can get that number by calling len() on the dataset.

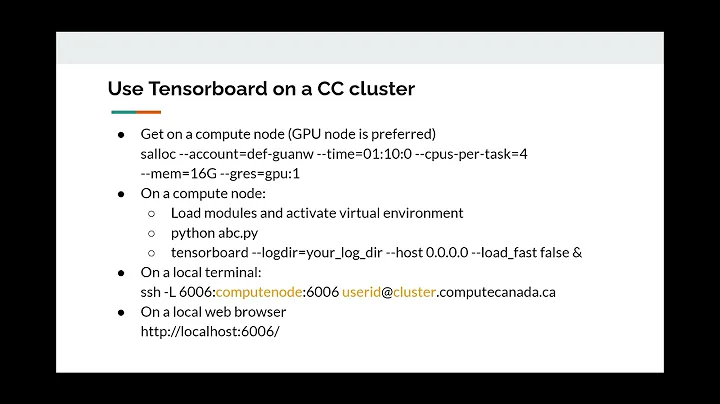
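A minimal sketch of the len() check, assuming TensorFlow 2.3 or later. A small in-memory dataset stands in for the one returned by image_dataset_from_directory, since no image folder is available here. The sizes are made up:

```python
import tensorflow as tf

# Stand-in for a batched dataset such as the one returned by
# image_dataset_from_directory: 100 hypothetical examples, batch size 32.
batch_size = 32
dataset = tf.data.Dataset.from_tensor_slices(tf.zeros([100, 4])).batch(batch_size)

# len() works when the number of batches is known and finite.
steps = len(dataset)

# With steps_per_epoch omitted, each epoch runs until the dataset is
# exhausted, for example: model.fit(dataset, epochs=EPOCHS)
```

Here the last of the 4 batches is partial (4 examples), and it is still counted by len().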


Arthos

Updated on February 18, 2022

Comments


Arthos, about 2 years ago: I am working on a seq2seq Keras/TensorFlow 2.0 model. Every time the user inputs something, my model prints the response perfectly fine. However, on the last line of each response I get this:

You: WARNING:tensorflow:Your input ran out of data; interrupting training. Make sure that your dataset or generator can generate at least steps_per_epoch * epochs batches (in this case, 2 batches). You may need to use the repeat() function when building your dataset.

The "You:" is my last output, before the user is supposed to type something new in. The model works totally fine, but I guess no error is ever good, and I don't quite understand this one. It says "interrupting training", but I am not training anything; this program loads an already trained model. I guess this is why the error is not stopping the program?

In case it helps, my model looks like this:

intent_model = keras.Sequential([
    keras.layers.Dense(8, input_shape=[len(train_x[0])]),  # input layer
    keras.layers.Dense(8),  # hidden layer
    keras.layers.Dense(len(train_y[0]), activation="softmax"),  # output layer
])

intent_model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])
intent_model.fit(train_x, train_y, epochs=epochs)

test_loss, test_acc = intent_model.evaluate(train_x, train_y)
print("Tested Acc:", test_acc)

intent_model.save("models/intent_model.h5")

Dr. Snoopy, over 4 years ago: I don't think the question makes any sense without the code that actually produces the warning. I don't see code where the user inputs anything and the model is used.

Arthos, over 4 years ago: Ah okay. I am not home at the moment, but I look forward to testing this. Thank you very much.

Nicolas Gervais, almost 4 years ago: This is incorrect. It has nothing to do with memory.

Victor Maricato, over 3 years ago: Adding to the "This is incorrect" comment above, it is good to emphasize WHY this worked. When you lowered the batch size, what you actually did was reduce the amount of data "requested" from the training set in each batch, step, and epoch as a whole. Indeed, this does reduce memory consumption (less data, less memory), but it did not work because of some kind of memory optimization.

Stefan Falk, about 3 years ago: I can also recommend setting drop_remainder=True when using tf.data.experimental.bucket_by_sequence_length()

Jay, over 2 years ago: You should remove steps_per_epoch and validation_steps.

Anuj Raghuvanshi, over 2 years ago: I have a total of 62400 records in my dataset and I am trying these flags, but it still gives me the issue:

--batch_size 624 \
--learning_rate 0.1 \
--steps_per_epoch 100 \
--num_epochs 1 \
--gradient_clip_norm 1.0 \
--max_history_length 10

What should I do to make this work?
