Ubuntu 16.04 LTS - network does not start on boot - bonding

Solved - Ubuntu 16.04 LTS bonding with IEEE 802.3ad Dynamic Link Aggregation

Situation

A Dell PowerEdge 2950 running a Nextcloud server on Ubuntu 16.04 LTS had an unstable bonded network (802.3ad dynamic link aggregation), with intermittent timeouts while running and errors at boot.

Troubleshooting

After extensive testing of the server-side configuration (thanks to George for the support), the intermittent network problem persisted. We concluded that there was a compatibility issue between the built-in Broadcom NICs and the PCI Intel NICs when they were bonded together under Ubuntu 16.04 LTS.
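One way to see which NIC families are involved is to list the kernel driver bound to each interface. This is a minimal sketch, assuming the standard Linux sysfs layout (/sys/class/net/<interface>/device/driver) is available; nic_drivers is a hypothetical helper name and was not part of the original troubleshooting.

```shell
#!/bin/sh
# nic_drivers [DIR]: print "<interface> <driver>" for each physical NIC.
# DIR defaults to /sys/class/net (assumed standard sysfs layout).
nic_drivers() {
    net_dir="${1:-/sys/class/net}"
    for dev in "$net_dir"/*; do
        # Virtual devices (lo, bond0, ...) have no device/driver symlink.
        [ -L "$dev/device/driver" ] || continue
        driver=$(basename "$(readlink "$dev/device/driver")")
        printf '%s %s\n' "$(basename "$dev")" "$driver"
    done
}
```

Running nic_drivers with no arguments on the server should show at a glance whether the bonded interfaces are served by more than one driver.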

Hardware Solution

Two dual-port Intel PCI NICs were installed in the 2950's riser PCI slots, the NVRAM was cleared, and the built-in Broadcom NICs were disabled in the BIOS. This was done to favor bandwidth, i.e. four 1 Gb NICs instead of the two built-in 1 Gb interfaces.

Server Solution

There are conflicting suggestions for configuring bonding on Ubuntu 16.04 LTS; this is what worked for me.

1. Ran ifconfig -a to get the BIOS device names of the new interfaces

borgf003@CLD01:~$ ifconfig -a
..........
enp10s0f0 Link encap:Ethernet HWaddr 00:15:17:4a:94:26
          UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1
          RX packets:5161 errors:0 dropped:0 overruns:0 frame:0
          TX packets:361 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:809816 (809.8 KB) TX bytes:31274 (31.2 KB)
          Interrupt:17 Memory:fdae0000-fdb00000

enp10s0f1 Link encap:Ethernet HWaddr 00:15:17:4a:94:26
          UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1
          RX packets:11440 errors:0 dropped:0 overruns:0 frame:0
          TX packets:167 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:1963591 (1.9 MB) TX bytes:20970 (20.9 KB)
          Interrupt:18 Memory:fdaa0000-fdac0000

enp14s0f0 Link encap:Ethernet HWaddr 00:15:17:4a:94:26
          UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1
          RX packets:4769 errors:0 dropped:4 overruns:0 frame:0
          TX packets:3294 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:582742 (582.7 KB) TX bytes:1546925 (1.5 MB)
          Interrupt:16 Memory:fd6e0000-fd700000

enp14s0f1 Link encap:Ethernet HWaddr 00:15:17:4a:94:26
          UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1
          RX packets:3910 errors:0 dropped:1 overruns:0 frame:0
          TX packets:2548 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:497874 (497.8 KB) TX bytes:838297 (838.2 KB)
          Interrupt:17 Memory:fd6a0000-fd6c0000
..........

2. As I had bonding configured previously, I reinstalled ifenslave: sudo apt install --reinstall ifenslave

3. Checked that the bonding module is loaded at boot: sudo nano /etc/modules

loop
lp
bonding

NOTE: I removed rtc as it is deprecated in 16.04 LTS and I like a clean boot.

4. Stopped networking; in my case I use sudo /etc/init.d/networking stop

5. Edited /etc/network/interfaces with the bond as follows. Note that you need to replace the interface names and the IP addresses with your own.

# This file describes the network interfaces available on your system
# and how to activate them. For more information, see interfaces(5).

source /etc/network/interfaces.d/*

# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface
auto enp10s0f0
iface enp10s0f0 inet manual
    bond-master bond0

# The second network interface
auto enp10s0f1
iface enp10s0f1 inet manual
    bond-master bond0

# The third network interface
auto enp14s0f0
iface enp14s0f0 inet manual
    bond-master bond0

# The fourth network interface
auto enp14s0f1
iface enp14s0f1 inet manual
    bond-master bond0

# The bond master network interface
auto bond0
iface bond0 inet static
    address xxx.xxx.xxx.xxx
    netmask xxx.xxx.xxx.xxx
    network xxx.xxx.xxx.xxx
    broadcast xxx.xxx.xxx.xxx
    gateway xxx.xxx.xxx.xxx
    # dns-* options are implemented by the resolvconf package, if installed
    dns-nameservers xxx.xxx.xxx.xxx
    dns-search yourdomain.com
    bond-mode 4
    bond-miimon 100
    bond-slaves all

6. Loaded the kernel bonding module: sudo modprobe bonding

7. Created a bonding configuration file, /etc/modprobe.d/bonding.conf, with

alias bond0 bonding
options bonding mode=4 miimon=100 lacp_rate=1

8. Restarted the network; in my case I use sudo /etc/init.d/networking restart

9. Checked the bond: cat /proc/net/bonding/bond0

10. Rebooted to see if it all holds up!
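The check in step 9 can also be scripted. The following is a minimal sketch, not part of the original procedure: a hypothetical helper, check_bond, that reads the bonding status file and prints one line per slave with its MII status and aggregator ID. It assumes the field labels printed by the bonding driver ("Slave Interface:", "MII Status:", "Aggregator ID:") and treats a slave that is not up, or slaves that report different aggregator IDs, as a failure.

```shell
#!/bin/sh
# check_bond [FILE]: summarize a bonding status file.
# FILE defaults to /proc/net/bonding/bond0.
# Exit status is 0 only if at least one slave is listed, every slave
# reports "MII Status: up", and all slaves share one aggregator ID.
check_bond() {
    awk '
        /^Slave Interface:/              { slave = $3; names[++n] = slave }
        # The bond-level "MII Status" line comes before any slave and is skipped.
        slave != "" && /^MII Status:/    { mii[slave] = $3 }
        slave != "" && /^Aggregator ID:/ { agg[slave] = $3 }
        END {
            bad = (n == 0)
            for (i = 1; i <= n; i++) {
                s = names[i]
                printf "%s mii=%s aggregator=%s\n", s, mii[s], agg[s]
                if (mii[s] != "up") bad = 1
                if (agg[s] != agg[names[1]]) bad = 1
            }
            exit bad
        }
    ' "${1:-/proc/net/bonding/bond0}"
}
```

For example, check_bond && echo "bond0 looks healthy" could be run after each of the test reboots in step 10.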


Fab

Advisor, InfoSec and SysAdmin at Local Government of Gzira (Malta - EU). https://www.linkedin.com/in/borgfabian/ Author of: From Dump to Production

Updated on September 18, 2022

Fab (over 1 year ago):

Ubuntu 16.04 LTS server with 4 NICs bonded with IEEE 802.3ad dynamic link aggregation.

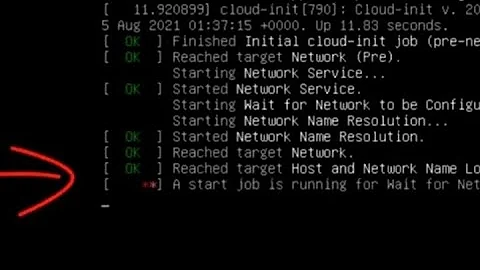

Upon reboot, the network service fails to start automatically and I have to start it manually using

sudo /etc/init.d/networking start

All the interfaces, including the bond, are set to auto in /etc/network/interfaces:

# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface
auto eth0
iface eth0 inet manual
    bond-master bond0

# The second network interface
auto eth1
iface eth1 inet manual
    bond-master bond0

# The third network interface
auto eth2
iface eth2 inet manual
    bond-master bond0

# The fourth network interface
auto eth3
iface eth3 inet manual
    bond-master bond0

# The bond master network interface
auto bond0
iface bond0 inet static
    address 192.168.1.201
    netmask 255.255.255.0
    network 192.168.1.0
    broadcast 192.168.1.255
    gateway 192.168.1.254
    dns-nameservers xx.xx.xx.x xx.xx.xx.xx
    dns-search xxx.xxx.xxx.xx
    bond-mode 4
    bond-miimon 100
    bond-slaves all
    bond-primary eth1 eth2 eth3 eth0

This is supposed to start the network service upon reboot, but it does not. What can I do to have the network start on boot?

Edit 1

Edit 2

Output of dmesg | grep -i bond0:

borgf003@CLD01:~$ dmesg | grep -i bond0
[ 12.110687] bond0: Setting MII monitoring interval to 100
[ 12.121534] IPv6: ADDRCONF(NETDEV_UP): bond0: link is not ready
[ 12.191915] bond0: Adding slave eno2
[ 12.348156] bond0: Enslaving eno2 as a backup interface with a down link
[ 12.350247] bond0: Adding slave enp12s0f0
[ 12.608573] bond0: Enslaving enp12s0f0 as a backup interface with a down link
[ 12.608598] bond0: Adding slave enp12s0f1
[ 12.856531] bond0: Enslaving enp12s0f1 as a backup interface with a down link
[ 12.856552] bond0: Adding slave eno1
[ 12.980244] bond0: Enslaving eno1 as a backup interface with a down link
[ 13.980065] bond0: link status definitely up for interface eno2, 100 Mbps full duplex
[ 13.980071] bond0: now running without any active interface!
[ 13.980076] bond0: link status definitely up for interface enp12s0f0, 100 Mbps full duplex
[ 14.080093] IPv6: ADDRCONF(NETDEV_CHANGE): bond0: link becomes ready
[ 14.280062] bond0: link status definitely up for interface enp12s0f1, 100 Mbps full duplex
[ 14.680033] bond0: link status definitely up for interface eno1, 100 Mbps full duplex
[ 194.727579] bond0: Removing slave enp12s0f1
[ 194.727776] bond0: Releasing active interface enp12s0f1
[ 195.010788] bond0: Removing slave eno2
[ 195.010872] bond0: Releasing active interface eno2
[ 195.010876] bond0: the permanent HWaddr of eno2 - 00:1a:a0:06:f1:dd - is still in use by bond0 - set the HWaddr of eno2 to a different address to avoid conflicts
[ 195.010879] bond0: first active interface up!
[ 195.207016] bond0: Removing slave enp12s0f0
[ 195.207075] bond0: Releasing active interface enp12s0f0
[ 195.207079] bond0: first active interface up!
[ 195.278500] bond0: Removing slave eno1
[ 195.278553] bond0: Removing an active aggregator
[ 195.278556] bond0: Releasing active interface eno1
[ 195.293395] bonding: bond0 is being deleted...
[ 195.293460] bond0 (unregistering): Released all slaves
[ 195.358558] bonding: bond0 is being created...
[ 195.404957] bond0: Setting MII monitoring interval to 100
[ 195.407792] IPv6: ADDRCONF(NETDEV_UP): bond0: link is not ready
[ 195.462566] bond0: Adding slave eno1
[ 195.584227] bond0: Enslaving eno1 as a backup interface with a down link
[ 195.665846] bond0: Adding slave eno2
[ 195.796166] bond0: Enslaving eno2 as a backup interface with a down link
[ 195.864614] bond0: Adding slave enp12s0f0
[ 196.104464] bond0: Enslaving enp12s0f0 as a backup interface with a down link
[ 196.167753] bond0: Adding slave enp12s0f1
[ 196.408475] bond0: Enslaving enp12s0f1 as a backup interface with a down link
[ 197.204043] bond0: link status definitely up for interface eno1, 100 Mbps full duplex
[ 197.204052] bond0: now running without any active interface!
[ 197.204110] IPv6: ADDRCONF(NETDEV_CHANGE): bond0: link becomes ready
[ 197.504039] bond0: link status definitely up for interface eno2, 100 Mbps full duplex
[ 197.504045] bond0: link status definitely up for interface enp12s0f0, 100 Mbps full duplex
[ 197.804037] bond0: link status definitely up for interface enp12s0f1, 100 Mbps full duplex
[ 1048.788210] bond0: Removing slave enp12s0f1
[ 1048.788421] bond0: Releasing active interface enp12s0f1
[ 1048.996159] bond0: Removing slave enp12s0f0
[ 1048.996331] bond0: Releasing active interface enp12s0f0
[ 1049.200059] bond0: Removing slave eno2
[ 1049.200152] bond0: Releasing active interface eno2
[ 1049.366490] bond0: Removing slave eno1
[ 1049.366548] bond0: Removing an active aggregator
[ 1049.366551] bond0: Releasing active interface eno1
[ 1049.377410] bonding: bond0 is being deleted...
[ 1049.377479] bond0 (unregistering): Released all slaves
[ 1049.449847] bonding: bond0 is being created...
[ 1049.507089] bond0: Setting MII monitoring interval to 100
[ 1049.510405] IPv6: ADDRCONF(NETDEV_UP): bond0: link is not ready
[ 1049.554116] bond0: Adding slave eno1
[ 1049.657057] bond0: Enslaving eno1 as a backup interface with a down link
[ 1049.730913] bond0: Adding slave eno2
[ 1049.849103] bond0: Enslaving eno2 as a backup interface with a down link
[ 1049.914038] bond0: Adding slave enp12s0f0
[ 1050.160523] bond0: Enslaving enp12s0f0 as a backup interface with a down link
[ 1050.226176] bond0: Adding slave enp12s0f1
[ 1050.476519] bond0: Enslaving enp12s0f1 as a backup interface with a down link
[ 1051.312039] bond0: link status definitely up for interface eno1, 100 Mbps full duplex
[ 1051.312049] bond0: now running without any active interface!
[ 1051.312103] IPv6: ADDRCONF(NETDEV_CHANGE): bond0: link becomes ready
[ 1051.612040] bond0: link status definitely up for interface eno2, 100 Mbps full duplex
[ 1051.612046] bond0: link status definitely up for interface enp12s0f0, 100 Mbps full duplex
[ 1051.912060] bond0: link status definitely up for interface enp12s0f1, 100 Mbps full duplex
borgf003@CLD01:~$
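To see at a glance how often the bond was torn down and rebuilt, the log above can be filtered for the "is being deleted" and "is being created" messages. This is a minimal sketch, assuming the kernel message format shown in this output; bond_events is a hypothetical helper name.

```shell
#!/bin/sh
# bond_events: read kernel log text on stdin and print
# "<timestamp> <bond> <created|deleted>" for each bond teardown or creation.
bond_events() {
    awk '
        /bonding: [^ ]+ is being (created|deleted)/ {
            line = $0
            sub(/^\[ */, "", line)        # drop "[" and any padding
            i = index(line, "] ")         # end of the timestamp
            ts = substr(line, 1, i - 1)
            # rest looks like: bonding: bond0 is being deleted...
            split(substr(line, i + 2), w, " ")
            action = w[5]
            sub(/\.+$/, "", action)       # strip the trailing "..."
            print ts, w[2], action
        }
    '
}
```

Used as dmesg | bond_events, it should list the delete/create cycles seen in this log at about 195 s and 1049 s after boot.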

Edit 3

Checking bond0

borgf003@CLD01:~$ cat /proc/net/bonding/bond0
Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: IEEE 802.3ad Dynamic link aggregation
Transmit Hash Policy: layer2 (0)
MII Status: up
MII Polling Interval (ms): 100
Up Delay (ms): 0
Down Delay (ms): 0

802.3ad info
LACP rate: slow
Min links: 0
Aggregator selection policy (ad_select): stable

Slave Interface: eno1
MII Status: up
Speed: 100 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:1a:a0:06:f1:db
Slave queue ID: 0
Aggregator ID: 1
Actor Churn State: monitoring
Partner Churn State: monitoring
Actor Churned Count: 0
Partner Churned Count: 0

Slave Interface: eno2
MII Status: up
Speed: 100 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:1a:a0:06:f1:dd
Slave queue ID: 0
Aggregator ID: 1
Actor Churn State: monitoring
Partner Churn State: monitoring
Actor Churned Count: 0
Partner Churned Count: 0

Slave Interface: enp12s0f0
MII Status: up
Speed: 100 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:15:17:4a:94:26
Slave queue ID: 0
Aggregator ID: 1
Actor Churn State: monitoring
Partner Churn State: monitoring
Actor Churned Count: 0
Partner Churned Count: 0

Slave Interface: enp12s0f1
MII Status: up
Speed: 100 Mbps
Duplex: full
Link Failure Count: 0
Permanent HW addr: 00:15:17:4a:94:27
Slave queue ID: 0
Aggregator ID: 1
Actor Churn State: monitoring
Partner Churn State: monitoring
Actor Churned Count: 0
Partner Churned Count: 0
borgf003@CLD01:~$

The link speed is low (100 Mbps) because of the old Cisco Catalyst switch in place.

Edit 4

● networking.service - Raise network interfaces
   Loaded: loaded (/lib/systemd/system/networking.service; enabled; vendor prese
  Drop-In: /run/systemd/generator/networking.service.d
           └─50-insserv.conf-$network.conf
   Active: active (exited) since Mon 2016-12-19 16:11:01 CET; 4min 10s ago
     Docs: man:interfaces(5)
  Process: 3783 ExecStop=/sbin/ifdown -a --read-environment (code=exited, status
  Process: 3933 ExecStart=/sbin/ifup -a --read-environment (code=exited, status=
  Process: 3927 ExecStartPre=/bin/sh -c [ "$CONFIGURE_INTERFACES" != "no" ] && [
 Main PID: 3933 (code=exited, status=0/SUCCESS)
    Tasks: 0
   Memory: 0B
      CPU: 0
   CGroup: /system.slice/networking.service

Dec 19 16:11:00 CLD01 systemd[1]: Starting Raise network interfaces...
Dec 19 16:11:00 CLD01 ifup[3933]: Waiting for bond master bond0 to be ready
Dec 19 16:11:01 CLD01 systemd[1]: Started Raise network interfaces.

The bond seems to be initiating before the interfaces are raised.

Edit 5

The problem was solved; see the answer here.

George Udosen (over 7 years ago): See my updated answer.

George Udosen (over 7 years ago): Are you using a router?

George Udosen (over 7 years ago): Updated answer, look at step 7. Got that from ServerVault. There seems to be a slight difference in the way bonding is done on Ubuntu Xenial.

Fab (over 7 years ago): George, I did reboot and checked whether networking is enabled, and it is active after boot. I am able to ping most of the internal LAN IPs but cannot ping outside the gateway. To resolve the issue I need to restart the networking service via sudo /etc/init.d/networking restart. What might be the problem?

George Udosen (over 7 years ago): Please do that and let me know if it works.

Fab (over 7 years ago): George, I rebooted and checked whether the network is active after boot, as you instructed, and entered sudo systemctl enable networking as you guided. Unfortunately, as mentioned in my previous comment, I am able to ping the inner LAN but cannot ping outside the gateway unless I do a sudo /etc/init.d/networking restart. I am also noticing that I get disconnected over a period of time that exceeds 24 hours. The network seems so unstable. How can I test further to give you better guidance?

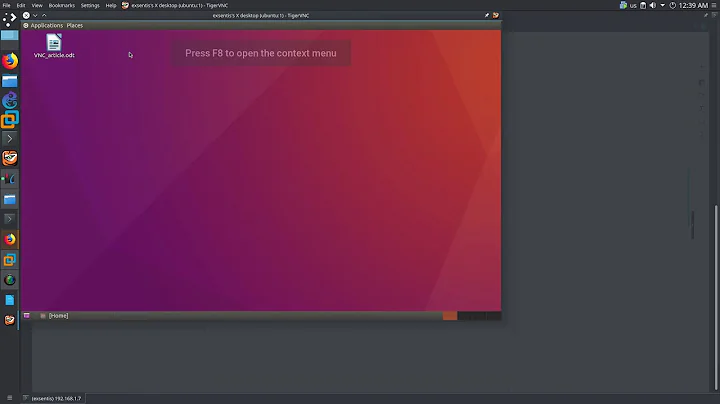

Fab (over 7 years ago): George, please check Edit 1, where I uploaded an image of the display showing your suggestions and the fact that after applying them I am still not able to ping outside my gateway until I do sudo /etc/init.d/networking restart. Please guide.

George Udosen (over 7 years ago): You're doing bonding, I guess. Now, did you install the package ifenslave?

Fab (over 7 years ago): Yes, I did install ifenslave, as I followed the bonding instructions on UbuntuBonding.

George Udosen (over 7 years ago): Please do a sudo apt update && sudo apt dist-upgrade just to be sure something isn't off.

Fab (over 7 years ago): I did a reinstall with sudo apt install --reinstall ifenslave and checked the entire bonding setup step by step, to no avail.

George Udosen (over 7 years ago): I will look for a solution; when I get one I will post it here.

Fab (over 7 years ago): George, recalling the initial installation of the server, the NICs were renamed to the conventional eth*. Could this be the cause of the problem?

George Udosen (over 7 years ago): Any possibility of reverting them to see if it allows access?

Fab (over 7 years ago): I have amended the grub file and removed "net.ifnames=0 biosdevname=0" from GRUB_CMDLINE_LINUX, regenerated the grub.cfg file, edited /etc/network/interfaces to reflect the original interface names, rebooted, etc., but I still cannot ping outside the inner LAN.

George Udosen (over 7 years ago): Sorry to hear that. Did you try step 7 in my updated answer?

Fab (over 7 years ago): Did that and checked bond0 (see Edit 3).

Fab (over 7 years ago): George, I did a sudo rm /etc/modprobe.d/bonding.conf and created the file again. Rebooted twice and the network is now holding up.

Fab (over 7 years ago): Unbelievable, the network went down again...

George Udosen (over 7 years ago): Oh great to hear, what a day with you. All the best!

George Udosen (over 7 years ago): Check the status using sudo systemctl status networking

Fab (over 7 years ago): Did that under Edit 4.

George Udosen (over 7 years ago): Change the mode to 1 in step 7.

Fab (over 7 years ago): But I am on bond 802.3ad = mode 4. What would the impact be if I change the mode to 1?

George Udosen (over 7 years ago): It switches between your slaves, see here. Kinda suspecting hardware.

Fab (over 7 years ago): I am suspecting that too, kinda compatibility issues between the 2 Intels and the 2 Broadcoms. It worked fine with ESXi but seems to generate issues with Ubuntu's bonding. First thing in the morning, I will remove the bond and build just one made of the Broadcoms, which are built into the main board of the PowerEdge.

George Udosen (over 7 years ago): Ok, let me know how it all goes. Best of luck and compliments of the season.

Fab (over 7 years ago): George, as we both suspected, the problem was hardware compatibility between the Intels and the Broadcoms. What I did was disable the built-in Broadcoms and place two dual 100/1000 Mbps Intel cards in the Dell riser PCI slots (thankfully I had some in stock), then reconfigure /etc/network/interfaces to reflect the new NICs. I checked the bond and rebooted the server four times in a row to test for boot-up errors, and it worked just fine. I will be posting the findings in this post as an answer for others to be guided by. Compliments to you too for the season.

George Udosen (over 7 years ago): Sure glad that was finally sorted out.

Fab (about 7 years ago): The problem was solved via the accepted answer. The issue was indeed an incompatibility between the Broadcom and Intel NICs.