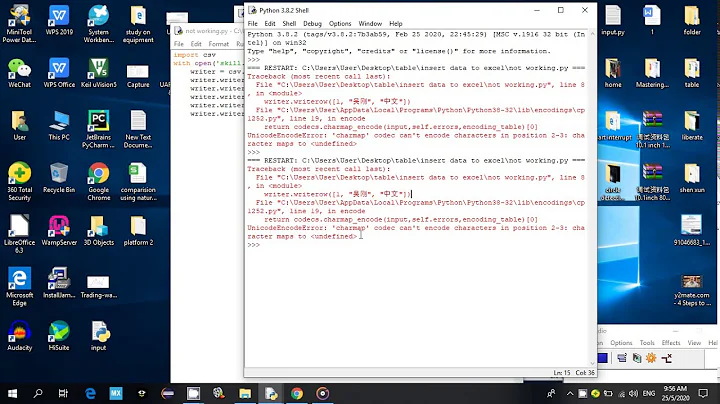

UnicodeEncodeError: 'cp949' codec can't encode character '\u20a9' in position 90: illegal multibyte sequence

20,337

Python 3 opens text files in the locale default encoding; if that encoding cannot handle the Unicode values you are trying to write to it, pick a different codec:

with open('result.csv', 'w', encoding='UTF-8', newline='') as f:

That'd encode any unicode strings to UTF-8 instead, an encoding which can handle all of the Unicode standard.

Note that the csv module recommends you open files using newline='' to prevent newline translation.

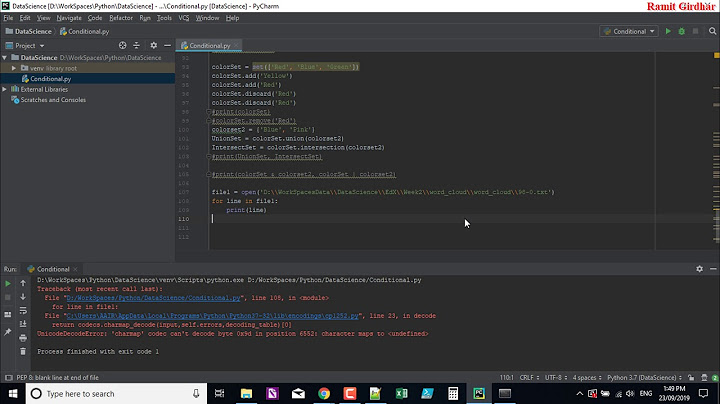

You also need to open the file just once, outside of the for loop:

with open('result.csv', 'w') as f: # Just use 'w' mode in 3.x

fields = ('title', 'developer', 'developer_link', 'price', 'rating', 'reviewers',

'downloads', 'date_published', 'operating_system', 'content_rating',

'category')

w = csv.DictWriter(f, )

w.writeheader()

for div in soup.findAll( 'div', {'class' : 'details'} ):

#

# build app_details

#

w.writerow(app_details)

Related videos on Youtube

Author by

user3172987

Updated on January 23, 2020Comments

-

user3172987 over 4 years

I'm a python beginner.

I'm trying to crawl google play store and export to csv file. But I got a error message.

UnicodeEncodeError: 'cp949' codec can't encode character '\u20a9' in position 90: illegal multibyte sequenceHere is my source code.

When I command print, it works. But it shows error message when exporting to csv file

please help me

from bs4 import BeautifulSoup import urllib.request import urllib.parse import codecs import json import pickle from datetime import datetime import sys import csv import os req = 'https://play.google.com/store/search?q=hana&c=apps&num=300' response = urllib.request.urlopen(req) the_page = response.read() soup = BeautifulSoup(the_page) #app_link = soup.find('a', {'class' : 'title'}) #app_url = app_link.get('href') for div in soup.findAll( 'div', {'class' : 'details'} ): title = div.find( 'a', {'class':'title'} ) #print(title.get('href')) app_url = title.get('href') app_details={} g_app_url = 'https://play.google.com' + app_url app_response = urllib.request.urlopen(g_app_url) app_page = app_response.read() soup = BeautifulSoup(app_page) #print(soup) #print( g_app_url ) title_div = soup.find( 'div', {'class':'document-title'} ) app_details['title'] = title_div.find( 'div' ).get_text().strip() subtitle = soup.find( 'a', {'class' : 'document-subtitle primary'} ) app_details['developer'] = subtitle.get_text().strip() app_details['developer_link'] = subtitle.get( 'href' ).strip() price_buy_span = soup.find( 'span', {'class' : 'price buy'} ) price = price_buy_span.find_all( 'span' )[-1].get_text().strip() price = price[:-4].strip() if price != 'Install' else 'Free' app_details['price'] = price rating_value_meta = soup.find( 'meta', {'itemprop' : 'ratingValue'} ) app_details['rating'] = rating_value_meta.get( 'content' ).strip() reviewers_count_meta = soup.find( 'meta', {'itemprop' : 'ratingCount'} ) app_details['reviewers'] = reviewers_count_meta.get( 'content' ).strip() num_downloads_div = soup.find( 'div', {'itemprop' : 'numDownloads'} ) if num_downloads_div: app_details['downloads'] = num_downloads_div.get_text().strip() date_published_div = soup.find( 'div', {'itemprop' : 'datePublished'} ) app_details['date_published'] = date_published_div.get_text().strip() operating_systems_div = soup.find( 'div', {'itemprop' : 'operatingSystems'} ) app_details['operating_system'] = operating_systems_div.get_text().strip() content_rating_div = soup.find( 'div', {'itemprop' : 'contentRating'} ) app_details['content_rating'] = content_rating_div.get_text().strip() category_span = soup.find( 'span', {'itemprop' : 'genre'} ) app_details['category'] = category_span.get_text() #print(app_details) with open('result.csv', 'w') as f: # Just use 'w' mode in 3.x w = csv.DictWriter(f, app_details.keys()) w.writeheader() w.writerow(app_details) -

user3172987 over 10 yearsThanks :) I have one more question for csv result. I tried the code and opened 'result.csv' file. And I found there is just one row which is last loop result. Can you explain why it stores the last loop result??

-

Martijn Pieters over 10 years@user3172987: You are re-opening the

csvfile every loop iteration, which clears the file and starts a-new. Each iteration. Move opening the file out of the loop (including creating theDictWriter()object and callingw.writeheader()) and only callw.writerow()in the loop. -

Martijn Pieters over 10 years@user3172987: Done; give

DictWriter()a list of keys you want to write away first.

![[SOLVED] UnicodeEncodeError: 'charmap' codec can't encode character...](https://i.ytimg.com/vi/TumTf8-wY1k/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLDxAJJVfjJXkcss8mnp2RS0kfxoAQ)