Update statement using a WHERE clause that contains columns with null Values

Solution 1

Since null = null evaluates to false you need to check if two fields are both null in addition to equality check:

UPDATE table_one SET table_one.x = table_two.y

FROM table_two

WHERE

(table_one.invoice_number = table_two.invoice_number

OR (table_one.invoice_number is null AND table_two.invoice_number is null))

AND

(table_one.submitted_by = table_two.submitted_by

OR (table_one.submitted_by is null AND table_two.submitted_by is null))

AND

-- etc

You could also use the coalesce function which is more readable:

UPDATE table_one SET table_one.x = table_two.y

FROM table_two

WHERE

coalesce(table_one.invoice_number, '') = coalesce(table_two.invoice_number, '')

AND coalesce(table_one.submitted_by, '') = coalesce(table_two.submitted_by, '')

AND -- etc

But you need to be careful about the default values (last argument to coalesce).

It's data type should match the column type (so that you don't end up comparing dates with numbers for example) and the default should be such that it doesn't appear in the data

E.g coalesce(null, 1) = coalesce(1, 1) is a situation you'd want to avoid.

Update (regarding performance):

Seq Scan on table_two - this suggests that you don't have any indexes on table_two.

So if you update a row in table_one then to find a matching row in table_two the database basically has to scan through all the rows one by one until it finds a match.

The matching rows could be found much faster if the relevant columns were indexed.

On the flipside if table_one has any indexes then that slows down the update.

According to this performance guide:

Table constraints and indexes heavily delay every write. If possible, you should drop all the indexes, triggers and foreign keys while the update runs and recreate them at the end.

Another suggestion from the same guide that might be helpful is:

If you can segment your data using, for example, sequential IDs, you can update rows incrementally in batches.

So for example if table_one an id column you could add something like

and table_one.id between x and y

to the where condition and run the query several times changing the values of x and y so that all rows are covered.

The EXPLAIN ANALYZE option took also forever

You might want to be careful when using the ANALYZE option with EXPLAIN when dealing with statements with sideffects.

According to documentation:

Keep in mind that the statement is actually executed when the ANALYZE option is used. Although EXPLAIN will discard any output that a SELECT would return, other side effects of the statement will happen as usual.

Solution 2

Try below, similar to the above @binoternary. Just beat me to the answer.

update table_one

set column_x = (select column_y from table_two

where

(( table_two.invoice_number = table_one.invoice_number)OR (table_two.invoice_number IS NULL AND table_one.invoice_number IS NULL))

and ((table_two.submitted_by=table_one.submitted_by)OR (table_two.submitted_by IS NULL AND table_one.submitted_by IS NULL))

and ((table_two.passport_number=table_one.passport_number)OR (table_two.passport_number IS NULL AND table_one.passport_number IS NULL))

and ((table_two.driving_license_number=table_one.driving_license_number)OR (table_two.driving_license_number IS NULL AND table_one.driving_license_number IS NULL))

and ((table_two.national_id_number=table_one.national_id_number)OR (table_two.national_id_number IS NULL AND table_one.national_id_number IS NULL))

and ((table_two.tax_pin_identification_number=table_one.tax_pin_identification_number)OR (table_two.tax_pin_identification_number IS NULL AND table_one.tax_pin_identification_number IS NULL))

and ((table_two.vat_number=table_one.vat_number)OR (table_two.vat_number IS NULL AND table_one.vat_number IS NULL))

and ((table_two.ggcg_number=table_one.ggcg_number)OR (table_two.ggcg_number IS NULL AND table_one.ggcg_number IS NULL))

and ((table_two.national_association_number=table_one.national_association_number)OR (table_two.national_association_number IS NULL AND table_one.national_association_number IS NULL))

);

Solution 3

You can use a null check function like Oracle's NVL. For Postgres, you will have to use coalesce.

i.e. your query can look like :

UPDATE table_one SET table_one.x =(select table_two.y from table_one,table_two

WHERE

coalesce(table_one.invoice_number,table_two.invoice_number,1) = coalesce(table_two.invoice_number,table_one.invoice_number,1)

AND

coalesce(table_one.submitted_by,table_two.submitted_by,1) = coalesce(table_two.submitted_by,table_one.submitted_by,1))

where table_one.table_one_pk in (select table_one.table_one_pk from table_one,table_two

WHERE

coalesce(table_one.invoice_number,table_two.invoice_number,1) = coalesce(table_two.invoice_number,table_one.invoice_number,1)

AND

coalesce(table_one.submitted_by,table_two.submitted_by,1) = coalesce(table_two.submitted_by,table_one.submitted_by,1));

Solution 4

Your current query joins two tables using Nested Loop, which means that the server processes

9,661,262 * 299,998 = 2,898,359,277,476

rows. No wonder it takes forever.

To make the join efficient you need an index on all joined columns. The problem is NULL values.

If you use a function on the joined columns, generally the index can't be used.

If you use an expression like this in the JOIN:

coalesce(table_one.invoice_number, '') = coalesce(table_two.invoice_number, '')

an index can't be used.

So, we need an index and we need to do something with NULL values to make index usable.

We don't need to make any changes in table_one, because it has to be scanned in full in any case.

But, table_two definitely can be improved. Either change the table itself, or create a separate (temporary) table. It has only 300K rows, so it should not be a problem.

Make all columns that are used in the JOIN to be NOT NULL.

CREATE TABLE table_two (

id int4 NOT NULL,

invoice_number varchar(30) NOT NULL,

submitted_by varchar(20) NOT NULL,

passport_number varchar(30) NOT NULL,

driving_license_number varchar(30) NOT NULL,

national_id_number varchar(30) NOT NULL,

tax_pin_identification_number varchar(30) NOT NULL,

vat_number varchar(30) NOT NULL,

ggcg_number varchar(30) NOT NULL,

national_association_number varchar(30) NOT NULL,

column_y int,

CONSTRAINT table_two_pkey PRIMARY KEY (id)

);

Update the table and replace NULL values with '', or some other appropriate value.

Create an index on all columns that are used in JOIN plus column_y. column_y has to be included last in the index. I assume that your UPDATE is well-formed, so index should be unique.

CREATE UNIQUE INDEX IX ON table_two

(

invoice_number,

submitted_by,

passport_number,

driving_license_number,

national_id_number,

tax_pin_identification_number,

vat_number,

ggcg_number,

national_association_number,

column_y

);

The query will become

UPDATE table_one SET table_one.x = table_two.y

FROM table_two

WHERE

COALESCE(table_one.invoice_number, '') = table_two.invoice_number AND

COALESCE(table_one.submitted_by, '') = table_two.submitted_by AND

COALESCE(table_one.passport_number, '') = table_two.passport_number AND

COALESCE(table_one.driving_license_number, '') = table_two.driving_license_number AND

COALESCE(table_one.national_id_number, '') = table_two.national_id_number AND

COALESCE(table_one.tax_pin_identification_number, '') = table_two.tax_pin_identification_number AND

COALESCE(table_one.vat_number, '') = table_two.vat_number AND

COALESCE(table_one.ggcg_number, '') = table_two.ggcg_number AND

COALESCE(table_one.national_association_number, '') = table_two.national_association_number

Note, that COALESCE is used only on table_one columns.

It is also a good idea to do UPDATE in batches, rather than the whole table at once. For example, pick a range of ids to update in a batch.

UPDATE table_one SET table_one.x = table_two.y

FROM table_two

WHERE

table_one.id >= <some_starting_value> AND

table_one.id < <some_ending_value> AND

COALESCE(table_one.invoice_number, '') = table_two.invoice_number AND

COALESCE(table_one.submitted_by, '') = table_two.submitted_by AND

COALESCE(table_one.passport_number, '') = table_two.passport_number AND

COALESCE(table_one.driving_license_number, '') = table_two.driving_license_number AND

COALESCE(table_one.national_id_number, '') = table_two.national_id_number AND

COALESCE(table_one.tax_pin_identification_number, '') = table_two.tax_pin_identification_number AND

COALESCE(table_one.vat_number, '') = table_two.vat_number AND

COALESCE(table_one.ggcg_number, '') = table_two.ggcg_number AND

COALESCE(table_one.national_association_number, '') = table_two.national_association_number

Related videos on Youtube

Comments

-

lukik over 1 year

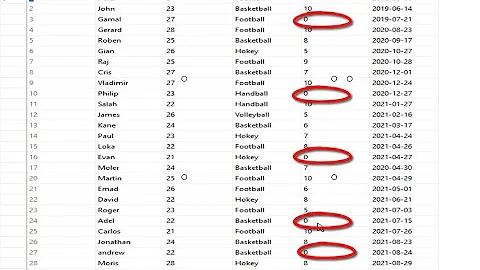

I am updating a column on one table using data from another table. The

WHEREclause is based on multiple columns and some of the columns are null. From my thinking, this nulls are what arethrowing offyour standardUPDATE TABLE SET X=Y WHERE A=Bstatement.See this SQL Fiddle of the two tables where am trying to update

table_onebased on data fromtable_two. My query currently looks like this:UPDATE table_one SET table_one.x = table_two.y FROM table_two WHERE table_one.invoice_number = table_two.invoice_number AND table_one.submitted_by = table_two.submitted_by AND table_one.passport_number = table_two.passport_number AND table_one.driving_license_number = table_two.driving_license_number AND table_one.national_id_number = table_two.national_id_number AND table_one.tax_pin_identification_number = table_two.tax_pin_identification_number AND table_one.vat_number = table_two.vat_number AND table_one.ggcg_number = table_two.ggcg_number AND table_one.national_association_number = table_two.national_association_numberThe query fails for some rows in that

table_one.xisn't getting updated when any of the columns in either table arenull. i.e. it only gets updated when all columns have some data.This question is related to my earlier one here on SO where I was getting distinct values from a large data set using

Distinct On. What I now I want is to populate the large data set with a value from the table which has unique fields.UPDATE

I used the first update statement provided by @binotenary. For small tables, it runs in a flash. Example is had one table with 20,000 records and the update was completed in like 20 seconds. But another table with 9 million plus records has been running for 20 hrs so far!. See below the output for

EXPLAINfunctionUpdate on table_one (cost=0.00..210634237338.87 rows=13615011125 width=1996) -> Nested Loop (cost=0.00..210634237338.87 rows=13615011125 width=1996) Join Filter: ((((my_update_statement_here)))) -> Seq Scan on table_one (cost=0.00..610872.62 rows=9661262 width=1986) -> Seq Scan on table_two (cost=0.00..6051.98 rows=299998 width=148)The

EXPLAIN ANALYZEoption took also forever so I canceled it.Any ideas on how to make this type of update faster? Even if it means using a different update statement or even using a custom function to loop through and do the update.

-

a_horse_with_no_name almost 8 yearsThe statement is invalid. You can't reference a second table without using a

a_horse_with_no_name almost 8 yearsThe statement is invalid. You can't reference a second table without using afromin theupdatestatement.

-

-

binoternary almost 8 years

coalesce(x,y,1) = coalesce(y,x,1)this condition is alwaystrueif eitherxoryisnull -

lukik almost 8 yearsGot a challenge with the code without coalesce. For a table with 20,000 rows, the update statement take about about 20 seconds. But for a table with 9 million rows, its now been 20hrs and the update statement hasn't yet finished. Any pointers? I've updated the question with the output of

EXPLAIN -

lukik almost 8 yearsI want to start a bounty on this question. It's the first time am doing so am not very sure what implications there are for having an accepted answer on a question with a Bounty. So I'll untick the accepted answer for now and raise the bounty then see how it pans out. Either way, see if we can make the query faster than it is using whatever means there are out there.

-

lukik almost 8 yearsYou're solution is very similar to another one I was referred to. Let me explain and u tell me if it could be an improvement on what you've suggested. The idea is to create a new column on both tables call it

id_merge. Then merge all "distinct" columns into that one column using coalesce so that you replace the nulls. After that, you index those two new columns and then run a standard update statement. See this fiddle. I ran it against 9 million rows and it completed in about 30 mins. I however have a table with 65 million rows! Can it be improved? Thanks -

Vladimir Baranov almost 8 yearsThe index on

Vladimir Baranov almost 8 yearsThe index ontable_twoshould includecolumn_y. It should be the last column in index. Index ontable_twoshould be unique. If you don't create an index ontable_one, its update may be faster. -

lukik almost 8 yearsSo you mean something like this?

CREATE INDEX table_two_idx_01 ON table_two (id_merge_y, column_y);? Can you explain why? I thought the select will be based on theid_merge_ycolumn? Also, why not indextable_one? My understanding is that sincetable_one.id_merge_xwill be compared totable_two.id_merge_y, then an index on this two columns would help speed things up? -

Vladimir Baranov almost 8 yearsIn SQL Server I'd expect it to make a difference. In Postgres - maybe not. Try both variants and compare execution plans. Server has to read each row in

Vladimir Baranov almost 8 yearsIn SQL Server I'd expect it to make a difference. In Postgres - maybe not. Try both variants and compare execution plans. Server has to read each row intable_oneany way, so having an index ontable_oneonly makes update slower. For each row intable_oneserver has to find a row intable_twoas fast as possible. Here index helps. Ifcolumn_yis part of the index, server can read only index; if not, it has to do extra lookup. Having index unique gives an extra useful hint to optimiser. -

maniek almost 8 years+1 the coalesce solution is the way to go, you will get a merge join plan with it. The "t1.c1 = t2.c1 or t1.c1 is null and t2.c1 is null" will result in nested loop joins. This is a thing you want to avoid.