What's the best way to perform a parallel copy on Unix?

Solution 1

As long as you limit the number of copy commands running at once, you could probably use a script like the one posted by Scrutinizer:

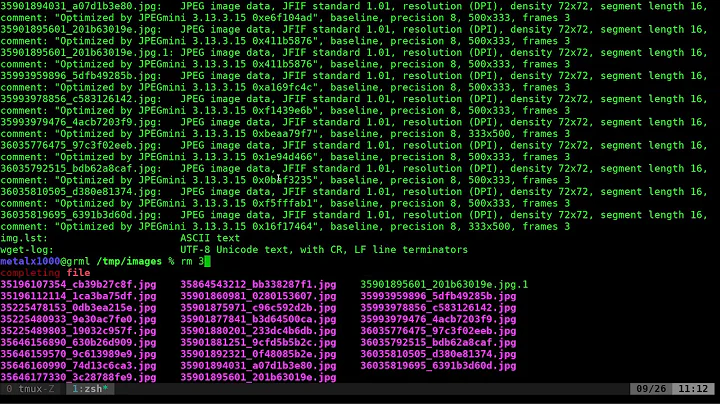

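SOURCEDIR="$1"

TARGETDIR="$2"

MAX_PARALLEL=4

# count directory entries (one per line), not words

nroffiles=$(ls -1 "$SOURCEDIR" | wc -l)

setsize=$(( nroffiles/MAX_PARALLEL + 1 ))

# each line read into workset holds up to $setsize file names

ls -1 "$SOURCEDIR"/* | xargs -n "$setsize" | {

  while read -r workset; do

    # workset is deliberately unquoted so it splits into one argument per file

    cp -p $workset "$TARGETDIR" &

  done

  # wait inside the group: in bash the loop runs in a subshell that owns the cp jobs

  wait

}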
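For file names with spaces or glob characters, a NUL-delimited variant of the same idea might look like the sketch below. It is not Scrutinizer's script. It assumes a `find` and `xargs` that support `-print0`/`-0`, `-maxdepth` and `-P` (the GNU and BSD versions do), and the temporary directories, sample file names and `BATCH` value are made up for the demo.

```shell
# Demo directories (hypothetical); replace with the real source and target paths.
SOURCEDIR=$(mktemp -d)
TARGETDIR=$(mktemp -d)
touch "$SOURCEDIR/plain.txt" "$SOURCEDIR/with space.txt" "$SOURCEDIR/star*.txt"

MAX_PARALLEL=4   # number of cp processes running at once
BATCH=16         # files handed to each cp invocation (assumed value)

# -print0 and -0 pass names NUL-delimited, so spaces and glob characters survive.
# sh -c receives the target directory as $0 and the batch of files as "$@".
find "$SOURCEDIR" -maxdepth 1 -type f -print0 |
    xargs -0 -n "$BATCH" -P "$MAX_PARALLEL" sh -c 'cp -p "$@" "$0"' "$TARGETDIR"

ls "$TARGETDIR"
```

Because `xargs` itself waits for its children, no explicit `wait` is needed here.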

Solution 2

If you have GNU Parallel (http://www.gnu.org/software/parallel/) installed, you can do this:

parallel -j10 cp {} destdir/ ::: *

You can install GNU Parallel simply by:

$ (wget -O - pi.dk/3 || lynx -source pi.dk/3 || curl pi.dk/3/ || \

fetch -o - http://pi.dk/3 ) > install.sh

$ sha1sum install.sh | grep 883c667e01eed62f975ad28b6d50e22a

12345678 883c667e 01eed62f 975ad28b 6d50e22a

$ md5sum install.sh | grep cc21b4c943fd03e93ae1ae49e28573c0

cc21b4c9 43fd03e9 3ae1ae49 e28573c0

$ sha512sum install.sh | grep da012ec113b49a54e705f86d51e784ebced224fdf

79945d9d 250b42a4 2067bb00 99da012e c113b49a 54e705f8 6d51e784 ebced224

fdff3f52 ca588d64 e75f6033 61bd543f d631f592 2f87ceb2 ab034149 6df84a35

$ bash install.sh

Explanation of commands, arguments, and options

- parallel --- Fairly obvious; a call to the parallel command

  - "build and execute shell command lines from standard input in parallel" (from the man page)

- -j10 ------- Run 10 jobs in parallel

  - "Number of jobslots on each machine. Run up to N jobs in parallel. 0 means as many as possible. Default is 100% which will run one job per CPU on each machine." (from the man page)

- cp --------- The command to run in parallel

- {} --------- Replace received values here, i.e. the source_file argument for the cp command

  - "This replacement string will be replaced by a full line read from the input source. The input source is normally stdin (standard input), but can also be given with -a, :::, or ::::. The replacement string {} can be changed with -I. If the command line contains no replacement strings then {} will be appended to the command line." (from the man page)

- destdir/ --- The destination directory

- ::: -------- Tell parallel to use the following arguments as input instead of stdin

  - "Use arguments from the command line as input source instead of stdin (standard input). Unlike other options for GNU parallel ::: is placed after the command and before the arguments." (from the man page)

- * ---------- All files in the current directory
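To see the pieces explained above working together, here is a small sketch that spells `-j10` as the long option `--jobs 10`. The scratch directories and file names are hypothetical, and the demo only runs if the `parallel` on your PATH is really GNU Parallel (moreutils ships an unrelated tool with the same name).

```shell
# Scratch directories (hypothetical) so the demo touches no real data.
src=$(mktemp -d)
destdir=$(mktemp -d)
touch "$src/a.txt" "$src/b.txt" "$src/c d.txt"

# Run only if the installed parallel identifies itself as GNU Parallel.
if parallel --version 2>/dev/null | grep -q 'GNU parallel'; then
    # --jobs 10 is the long form of -j10; each name after ::: replaces {}.
    ( cd "$src" && parallel --jobs 10 cp -p {} "$destdir"/ ::: * )
    ls "$destdir"
else
    echo "GNU Parallel not found; skipping the demo" >&2
fi
```

GNU Parallel quotes each value substituted for `{}`, so the file name containing a space is passed to `cp` as a single argument.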

Learn more

Your command line will love you for it.

See more examples: http://www.gnu.org/software/parallel/man.html

Watch the intro videos: https://www.youtube.com/playlist?list=PL284C9FF2488BC6D1

Walk through the tutorial: http://www.gnu.org/software/parallel/parallel_tutorial.html

Get the book 'GNU Parallel 2018' at http://www.lulu.com/shop/ole-tange/gnu-parallel-2018/paperback/product-23558902.html or download it at: https://doi.org/10.5281/zenodo.1146014 Read at least chapter 1+2. It should take you less than 20 minutes.

Print the cheat sheet: https://www.gnu.org/software/parallel/parallel_cheat.pdf

Sign up for the email list to get support: https://lists.gnu.org/mailman/listinfo/parallel

Solution 3

Honestly, the best tool is Google's gsutil. It handles parallel copies with directory recursion, which most of the other methods I've seen can't. Google's docs don't specifically mention local-filesystem-to-local-filesystem copies, but it works like a charm.

It's another binary to install, but one you may well already be running, given how widespread cloud services are nowadays.

Solution 4

One way would be to use rsync, which will only copy the changes: new files and the changed parts of other files.

http://linux.die.net/man/1/rsync

Running any form of parallel copy will probably flood your network, and the copy will either grind to a halt or hit bottlenecks at the source or destination disk.
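As a rough illustration of rsync only copying changes, the sketch below runs the same rsync twice on scratch directories. The directories and file contents are made up for the demo, and it is skipped if rsync is not installed.

```shell
# Scratch directories (hypothetical) standing in for the real source and target.
src=$(mktemp -d)
dst=$(mktemp -d)
echo "one" > "$src/a.txt"
echo "two" > "$src/b.txt"

if command -v rsync >/dev/null 2>&1; then
    # First run: everything is new, so both files are transferred.
    rsync -a --itemize-changes "$src"/ "$dst"/
    # Change one file; its size differs, so rsync's quick check notices it.
    echo "two, edited" > "$src/b.txt"
    # Second run: only the changed file should be listed as transferred.
    rsync -a --itemize-changes "$src"/ "$dst"/
else
    echo "rsync not found; skipping the demo" >&2
fi
```

The trailing slash on the source means "the contents of this directory", so files land directly in the target rather than in a subdirectory of it.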

Solution 5

Parallel rsync using find:

export SOURCE_DIR=/a/path/to/nowhere

export DEST_DIR=/another/path/to/nowhere

# sync folder structure first (the trailing slashes copy the contents of SOURCE_DIR, not the directory itself)

rsync -a -f'+ */' -f'- *' "$SOURCE_DIR"/ "$DEST_DIR"/

# cwd

cd "$SOURCE_DIR" || exit 1

# use find to help filter files etc. into list and pipe into gnu parallel to run 4 rsync jobs simultaneously

find . -type f | SHELL=/bin/sh parallel --linebuffer --jobs=4 'rsync -av {} "$DEST_DIR"/{//}/'

On a corporate LAN, a single rsync does about 800 Mbps; with 6-8 jobs I am able to get over 2.5 Gbps (at the expense of high load). Limited by the disks.


dsg

Updated on September 18, 2022

Comments

-

dsg over 1 year

I routinely have to copy the contents of a folder on a network file system to my local computer. There are many files (1000s) on the remote folder that are all relatively small, but due to network overhead a regular copy cp remote_folder/* ~/local_folder/ takes a very long time (10 mins). I believe it's because the files are being copied sequentially: each file waits until the previous one is finished before its copy begins.

What's the simplest way to increase the speed of this copy? (I assume it is to perform the copy in parallel.)

Zipping the files before copying will not necessarily speed things up because they may be all saved on different disks on different servers.

-

David Schwartz over 11 years

Zipping the files before copying will speed things up massively because there will not need to be any more "did you get that file", "yes, I did", "here's the next one", "okay", ... It's those "turnarounds" that slow you down.

-

Joel Coehoorn over 11 years

It's probably disk speed, rather than network speed, that is your limiting factor. If that is the case, then doing this per file in parallel will make the operation slower, not faster, because you will force the disk to constantly seek back and forth between files.

-

Rob over 11 years

While zipping might not be a good idea (running a compression algorithm over 1000s of files might take a little while), tar might be viable.
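For reference, the tar idea from the comment above is usually written as a pipe between two tar processes. This is only a sketch on scratch directories (the paths and file names are hypothetical). Whether it helps on a network mount depends on where the packing side runs, which is an assumption the comments do not settle.

```shell
# Scratch directories (hypothetical) standing in for remote_folder and local_folder.
src=$(mktemp -d)
dst=$(mktemp -d)
touch "$src/one.txt" "$src/two words.txt"

# Pack everything into one stream on the source side and unpack it on the
# target side; no compression is involved, so little CPU time is spent.
( cd "$src" && tar -cf - . ) | ( cd "$dst" && tar -xf - )

ls "$dst"
```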

-

Oceanus Teo about 11 years

@JoelCoehoorn Still, there are cases where this does not hold, e.g. multiple spindles + small files (or simply random reads). In this scenario, a "parallel cp" would help.

-

Ciro Santilli Путлер Капут 六四事 over 8 years

-

slhck over 12 years

Note of warning though: This script breaks with filenames containing spaces or globbing characters.

-

dsg over 12 years

@OldWolf -- Can you explain how this script works? For example, which part does the parallelization?

-

Adobe over 12 years

Didn't work for me. I'm not sure it is possible to speed up cp. You obviously can speed up calculation through multithreading, but I don't think the same holds for copying data on a hard drive.

-

Elijah Lynn about 4 years

This answer could be improved by explaining the command and using long options where possible, e.g. what is ::: for and what is {} doing. -j10 can be made --jobs 10, etc.

-

SgtPooki over 3 years

@ElijahLynn edit submitted and approved.

-

Tom Hale about 3 years

Better to find -print0 | for safety.